The architecture of artificial intelligence needs to be changed.

Using the von Neumann architecture for artificial intelligence applications is inefficient. What will replace it?

Using existing architectures to solve machine learning (ML) and artificial intelligence (AI) problems has become impractical. The power consumed by AI workloads has increased significantly, and the CPU and GPU increasingly look like the wrong tools for the job.

Participants at several symposia agreed that the best opportunities for significant change arise when there is no legacy that has to be carried along. Most systems have evolved gradually over time, and although that provides a safe way forward, it does not produce optimal solutions. When something new appears, there is a chance to take a fresh look and choose a better direction than conventional technologies offer. That was the topic of a recent conference, which examined whether the complementary metal-oxide-semiconductor (CMOS) structure is the best base technology on which to build AI applications.

An Chan, appointed by IBM as executive director of the Nanoelectronics Research Initiative (NRI), framed the discussion. “For many years we have been researching new, emerging technologies, including looking for an alternative to CMOS, especially because of its power consumption and scaling problems. After all these years, the consensus is that we have not found anything better as a basis for building logic circuits. Today, many researchers are concentrating on AI, and it does offer new ways of thinking and new designs, and these come with new technological products. Will new AI devices be able to replace CMOS?”

AI today

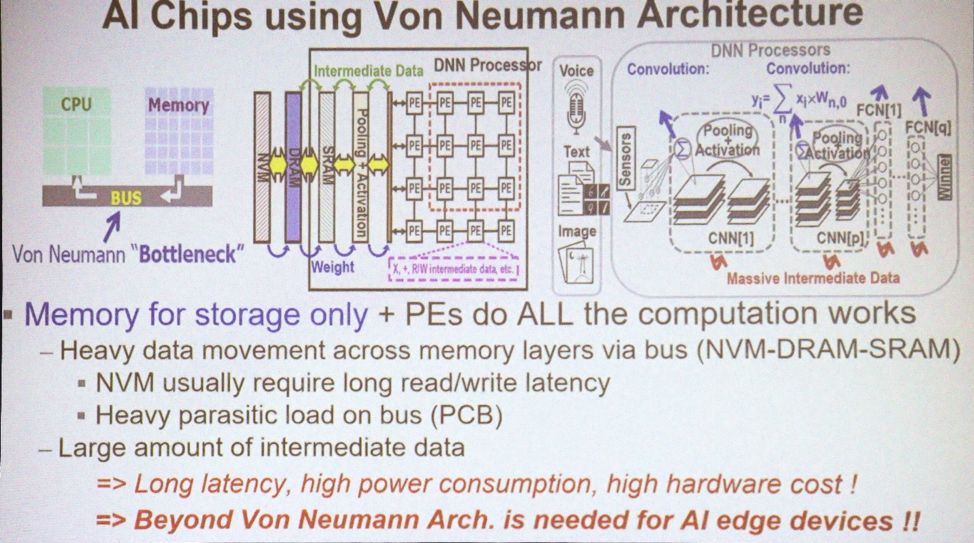

Most ML and AI applications use the von Neumann architecture. “It uses memory to store arrays of data, and the CPU performs all the calculations,” explains Marvin Chen, a professor of electrical engineering at Xinhua National University. “Large amounts of data move across the bus. Today, GPUs are also often used for deep learning, including convolutional [neural networks]. One of the main problems is the intermediate data that has to be generated in order to perform inference. Moving data, especially off-chip, costs energy and adds latency. This is the bottleneck of the technology.”

Architecture used for AI

What is needed today is to combine data processing and memory. “The concept of in-memory computing has been proposed by computer architecture experts for many years,” says Chen. “There are several SRAM and non-volatile memory designs with which people have tried to implement this concept. Ideally, if it works, you can save a huge amount of energy by eliminating the movement of data between the CPU and memory. But that is the ideal.”

But in-memory computing is not available today. “We still have AI 1.0, using the von Neumann architecture, because silicon devices that implement in-memory processing have not appeared yet,” complains Chen. “The only option is to use 3D TSVs to put high-bandwidth memory alongside the GPU to solve the bandwidth problem. But it still remains a bottleneck for energy and time.”

Is in-memory processing enough to solve the energy problem? “There are a hundred billion neurons and 10^15 synapses in the human brain,” said Sean Lee, assistant director at Taiwan Semiconductor Manufacturing Company. “Now look at IBM's TrueNorth.” TrueNorth is a multi-core processor developed by IBM in 2014. It has 4096 cores, each with 256 programmable artificial neurons. “Suppose we want to scale it up and reproduce the size of the brain. The difference is 5 orders of magnitude. But if we just directly increase the numbers and multiply TrueNorth, which consumes 65 mW, we get a machine that consumes 65 kW, against a human brain that consumes 25 watts. Consumption must be reduced by several orders of magnitude.”
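The gaps Lee describes can be checked with a few lines of arithmetic. The sketch below is only an illustration: it takes the figures from the quote as given (the 65 kW scaled-up power is used as quoted, not derived), and the constant and function names are made up for this example.

```python
import math

# Figures taken from the text above; names are illustrative only.
CORES = 4096
NEURONS_PER_CORE = 256
BRAIN_NEURONS = 100e9           # "a hundred billion neurons"
QUOTED_SCALED_POWER_W = 65e3    # 65 kW, as quoted (not derived here)
BRAIN_POWER_W = 25.0            # as quoted


def truenorth_neurons() -> int:
    """Total programmable neurons on one TrueNorth chip."""
    return CORES * NEURONS_PER_CORE


def orders_of_magnitude(ratio: float) -> float:
    """Express a ratio as a base-10 exponent."""
    return math.log10(ratio)


neuron_gap = orders_of_magnitude(BRAIN_NEURONS / truenorth_neurons())
power_gap = orders_of_magnitude(QUOTED_SCALED_POWER_W / BRAIN_POWER_W)

print(f"TrueNorth neurons: {truenorth_neurons():,}")
print(f"Neuron-count gap to the brain: about {neuron_gap:.1f} orders of magnitude")
print(f"Power gap (quoted 65 kW vs 25 W): about {power_gap:.1f} orders of magnitude")
```

With these inputs the neuron gap comes out just under five orders of magnitude, and the quoted power figures differ by a factor of 2600, a bit more than three orders of magnitude.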

Lee offers another way to look at the opportunity. “The most energy-efficient supercomputer today, the leader of the Green500, is in Japan and delivers 17 Gflops per watt, or 1 flop per 59 pJ.” The Green500 website says that the ZettaScaler-2.2 system installed at the Advanced Computing and Communications Center (RIKEN) in Japan measured 18.4 Gflops/W during a Linpack test run that reached 858 teraflops. “Compare this with the Landauer principle, according to which the minimum switching energy of a transistor at room temperature is about 2.75 zJ [10^-21 J]. Again, the difference is many orders of magnitude: 59 pJ is on the order of 10^-11 J, against a theoretical minimum on the order of 10^-21 J. We have a huge field for research.”
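Both numbers in this comparison can be reproduced from first principles. The following is a minimal sketch, assuming a room temperature of 300 K (the quoted 2.75 zJ corresponds to a slightly lower temperature, roughly 287 K); the function names are illustrative.

```python
import math

BOLTZMANN_J_PER_K = 1.380649e-23  # Boltzmann constant (exact SI value)


def energy_per_flop_joules(gflops_per_watt: float) -> float:
    """Energy per floating-point operation: 1 W / (flops per second)."""
    return 1.0 / (gflops_per_watt * 1e9)


def landauer_limit_joules(temperature_k: float) -> float:
    """Landauer bound k*T*ln(2) for erasing one bit at temperature T."""
    return BOLTZMANN_J_PER_K * temperature_k * math.log(2)


per_flop = energy_per_flop_joules(17.0)   # 17 Gflops/W, as quoted
limit = landauer_limit_joules(300.0)      # assumed room temperature
gap = math.log10(per_flop / limit)

print(f"Energy per flop: {per_flop * 1e12:.1f} pJ")
print(f"Landauer limit at 300 K: {limit * 1e21:.2f} zJ")
print(f"Gap: about {gap:.1f} orders of magnitude")
```

Under these assumptions the energy per flop is about 58.8 pJ, the Landauer limit is about 2.87 zJ, and the gap is roughly ten orders of magnitude, in line with the 10^-11 versus 10^-21 comparison in the quote. Note that a flop involves many bit operations, so this is a rough yardstick rather than a like-for-like comparison.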

Is it fair to compare such computers with the brain? “Looking at the recent successes of deep learning, we see that in most contests between humans and machines, the machines have been winning for the past seven years,” says Kaushik Roy, distinguished professor of electrical engineering and computer science at Purdue University. “In 1997 Deep Blue defeated Kasparov, in 2011 IBM Watson won at Jeopardy!, and in 2016 AlphaGo defeated Lee Sedol. These are great achievements. But at what cost? These machines consumed 200 to 300 kW. The human brain consumes about 20 watts. That is a huge gap. Where will the innovation come from?”

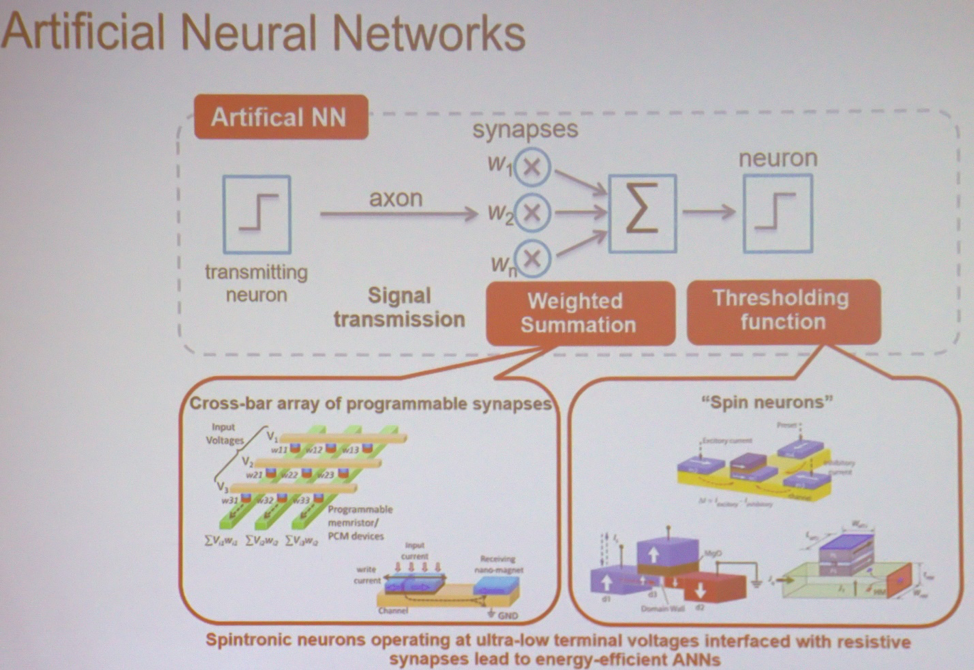

At the heart of most ML and AI applications are very simple calculations performed on a huge scale. “If you take the simplest neural network, it performs a weighted summation followed by a threshold operation,” Roy explains. “This can be done in crossbar arrays of various types. The device could be spintronic or a resistive memory. In that case, an input voltage and a conductance are associated with each crosspoint. At the output, you get the sum of the voltages multiplied by the conductances. That is a current. Then you can take similar devices that perform the threshold operation. The architecture can be pictured as a collection of these nodes, connected together to carry out computations.”
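The weighted summation Roy describes follows from Ohm's law and Kirchhoff's current law: each column current is the sum of the row voltages multiplied by the conductances at the crosspoints. The sketch below is an idealized software model of that behavior, not a description of any particular device; the function names, the conductance values and the threshold current are all made up for illustration.

```python
def crossbar_currents(voltages, conductances):
    """Column currents of an ideal crossbar: I_j = sum_i V_i * G[i][j]."""
    n_cols = len(conductances[0])
    return [
        sum(v * row[j] for v, row in zip(voltages, conductances))
        for j in range(n_cols)
    ]


def threshold(currents, i_th):
    """Fire (1) when a column current exceeds the threshold, else 0."""
    return [1 if i > i_th else 0 for i in currents]


# Hypothetical 3-input, 2-output layer.
voltages = [0.2, 0.0, 0.1]        # input voltages in volts
conductances = [                  # crosspoint conductances in siemens
    [1e-6, 5e-6],
    [2e-6, 1e-6],
    [4e-6, 1e-6],
]

currents = crossbar_currents(voltages, conductances)
outputs = threshold(currents, i_th=1e-6)
print(currents)
print(outputs)
```

In a physical array all the multiplications and the summation happen at once as currents add on the column wires, which is where the claimed time and energy savings come from; the loops here only mimic the result.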

The main components of the neural network

New types of memory

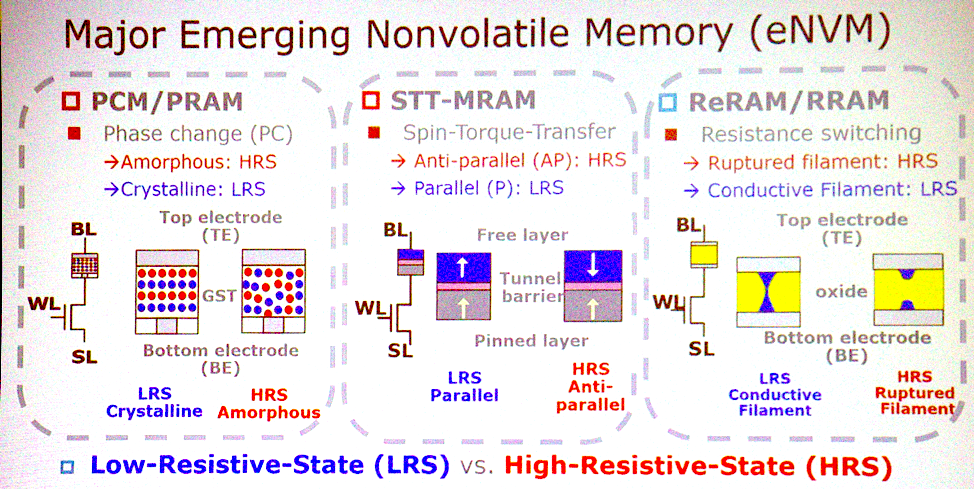

Most candidate architectures are tied to emerging types of non-volatile memory. “What are the most important characteristics?” asks Jeffrey Barr, a researcher at IBM Research. “I would bet on non-volatile analog resistive memory, such as phase-change memory, memristors, and so on. The idea is that these devices can perform all the multiplications for fully connected neural network layers in a single clock cycle. On a set of processors this can take a million clock cycles, whereas in an analog device it can be done by physics, right where the data is located. There are enough very interesting aspects in terms of time and energy for this idea to evolve into something more.”

New memory technologies

Chen agrees. “ReRAM, PCM and STT are strong contenders. These three types of memory are good candidates for implementing in-memory computing. They are capable of basic logic operations. Some types have reliability problems and cannot be used for training, but they can be used for inference.”

But it may turn out that switching to these memories is not necessary. “People talk about using SRAM for exactly the same purpose,” adds Lee. “They do analog computing with SRAM. The only drawback is that SRAM is too big, at 6 or 8 transistors per bit. So it is not certain that we will use these new technologies for analog computing.”

The transition to analog computing also implies that computational accuracy is no longer a top priority. “AI is about specialization, classification and prediction,” he says. “It makes decisions that can be coarse. In terms of accuracy, we can sacrifice something. We need to determine which calculations are error tolerant. Then some technique can be applied to reduce power consumption or speed up the calculations. Work on probabilistic CMOS has been under way since 2003. It involves lowering the voltage until a few errors appear, while keeping their number tolerable. Today, people are already using approximate computing techniques such as quantization. Instead of a 32-bit floating-point number, you have 8-bit integers. Analog computing is another option, as already mentioned.”
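The quantization mentioned in the quote can be illustrated with a small example. This is a minimal sketch of one common scheme, symmetric per-tensor quantization to 8-bit integers; the quote does not say which scheme is meant, and the function names and sample weights are made up for illustration.

```python
def quantize_int8(values):
    """Map floats to integers in [-127, 127] using one shared scale."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(v / scale))) for v in values]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float values from the integers."""
    return [q * scale for q in quantized]


weights = [0.5, -1.27, 0.003, 1.0]   # hypothetical 32-bit float weights
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))

print(q)
print(f"scale = {scale:.4f}, worst-case error = {max_error:.4f}")
```

Each value is stored in one byte instead of four, at the price of a rounding error of at most half the scale step. Small values such as 0.003 collapse to zero, which is the kind of inaccuracy an error-tolerant workload is expected to absorb.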

Getting out of the lab

Moving a technology from the lab into mainstream use can be challenging. “Sometimes you have to look for alternatives,” says Barr. “When two-dimensional flash memory hit a wall, three-dimensional flash no longer seemed so difficult. If we keep improving existing technologies, getting a doubling of performance here and a doubling there, analog in-memory computing will be sidelined. But if the next improvements are minor, analog memory will look more attractive. We, as researchers, must be ready for new opportunities.”

Economics often hinders development, especially in the memory business, but Barr says that will not happen in this case. “One of our advantages is that this will not be a memory product. It will not be something with incremental improvements. It is not a consumer product. It competes with the GPU. GPUs sell at a price 70 times higher than the cost of the DRAM placed on them, so this is clearly not a memory product. And the cost of the product will not be much different from that of memory. That sounds good, but when you make decisions worth billions of dollars, all the costs and the product roadmap need to be crystal clear. To overcome that barrier, we need to produce impressive prototypes.”

CMOS Replacement

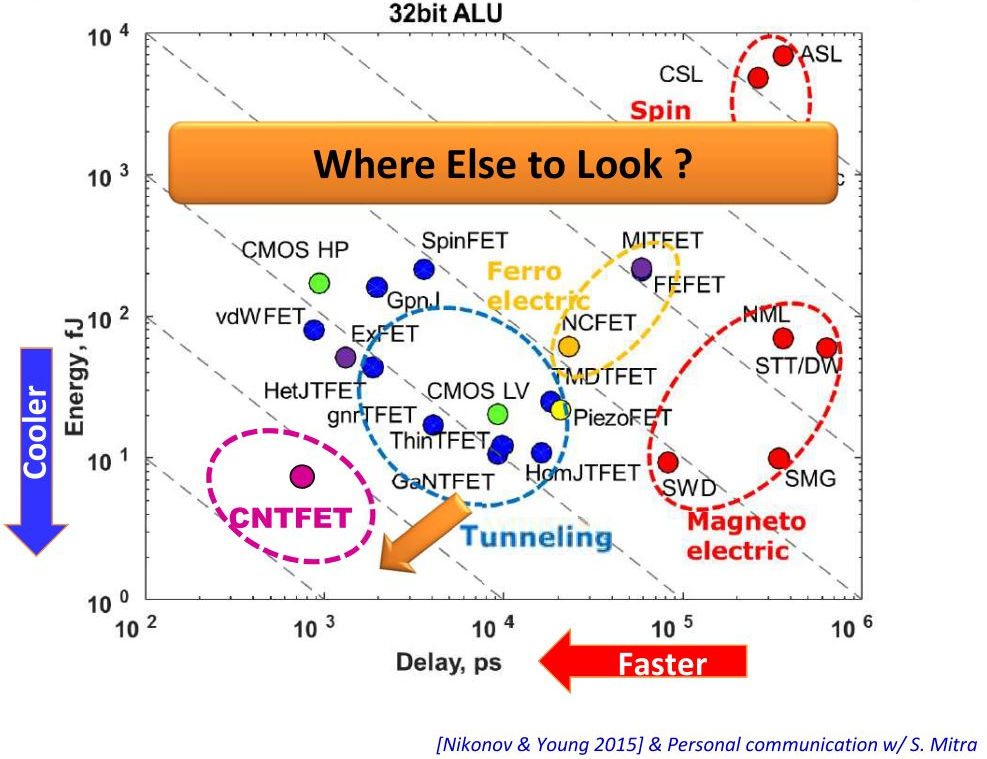

Processing data in memory can deliver impressive benefits, but more is needed to make the technology practical. Can any technology other than CMOS help with this? “If we consider moving from low-power CMOS to tunnel FETs, we are talking about reducing consumption by 1-2 orders of magnitude,” says Lee. “Another possibility is three-dimensional integrated circuits. They shorten the wiring by using TSVs. That reduces power consumption and latency. Look at data centers: they are all replacing metal wiring with optical connections.”

Vertically - power consumption, horizontally - delays in the operation of devices

Although switching to another technology may bring certain advantages, they may not be worth it. “It will be very difficult to replace CMOS, but some of the devices under discussion can complement CMOS technology so that it performs in-memory computation,” says Roy. “CMOS can support in-memory computation in analog form, possibly in an 8T cell. Is it possible to create an architecture with a clear advantage over CMOS? If everything is done right, CMOS will give me a thousandfold improvement in energy efficiency. But it takes time.”

It is clear that CMOS will not be replaced. “New technologies will not displace the old ones, and they will not be built on any substrate other than CMOS,” Barr concludes.

Source: https://habr.com/ru/post/420943/