From zero to “Actions on Google” hero: your code

In the first part, we covered the basic principles of designing and developing apps for Google Assistant. Now it's time to write our own assistant so that users can finally pick a movie for the evening. Developers shipa_o and raenardev and designer ComradeGuest continue the story.

Writing our code

Let's try to write something more complicated.

Suppose our agent recommends movies by genre.

We ask it to “Show horror”, and the agent parses the genre, searches the collection for a film of that genre, and displays it on the screen.

```javascript
const functions = require('firebase-functions');
const { WebhookClient, Card, Suggestion } = require('dialogflow-fulfillment');

// Hard-coded film collection
const json = {
  "filmsList": [
    {
      "id": "1",
      "title": " : ",
      "description": " ",
      "genres": ["", "", ""],
      "imageUrl": "http://t3.gstatic.com/images?q=tbn:ANd9GcQEA5a7K9k9ajHIu4Z5AqZr7Y8P7Fgvd4txmQpDrlQY2047coRk",
      "trailer": "https://www.youtube.com/watch?v=RNksw9VU2BQ"
    },
    {
      "id": "2",
      "title": " : 2 – ",
      "description": " ",
      "genres": ["", "", "", ""],
      "imageUrl": "http://t3.gstatic.com/images?q=tbn:ANd9GcTPPAiysdP0Sra8XcIhska4MOq86IaDS_MnEmm6H7vQCaSRwahQ",
      "trailer": "https://www.youtube.com/watch?v=vX_2QRHEl34"
    },
    {
      "id": "3",
      "title": "",
      "description": " ",
      "genres": ["", "", ""],
      "imageUrl": "https://www.kinopoisk.ru/images/film_big/386.jpg",
      "trailer": "https://www.youtube.com/watch?v=xIe98nyo3xI"
    }
  ]
};

exports.dialogflowFirebaseFulfillment = functions.https.onRequest((request, response) => {
  const agent = new WebhookClient({ request, response });
  const result = request.body.queryResult;
  const parameters = result.parameters;

  // Map intent names to handlers; bind() passes the agent and the intent parameters
  const intentMap = new Map();
  intentMap.set('search-by-genre', searchByGenre.bind(this, agent, parameters));
  agent.handleRequest(intentMap);
});

function searchByGenre(agent, parameters) {
  const filmsList = json.filmsList;

  // Keep only films whose genre list contains the requested genre
  const filteredFilms = filmsList.filter((film) =>
    film.genres.some((genre) => genre === parameters.genre)
  );

  // Take the first matching film, or apologize if there is none
  const firstFilm = filteredFilms[0];
  if (!firstFilm) {
    agent.add('Sorry, I could not find a film in that genre.');
    return;
  }

  // Plain text reply
  agent.add(firstFilm.title);

  // Card with the poster, the description and a link to the trailer
  agent.add(new Card({
    title: firstFilm.title,
    imageUrl: firstFilm.imageUrl,
    text: firstFilm.description,
    buttonText: ' ',
    buttonUrl: firstFilm.trailer
  }));

  // Follow-up question with suggestion chips
  agent.add([
    " ?",
    new Suggestion(" ?"),
    new Suggestion(""),
    new Suggestion("")
  ]);
}
```

Now the answer is much more informative.

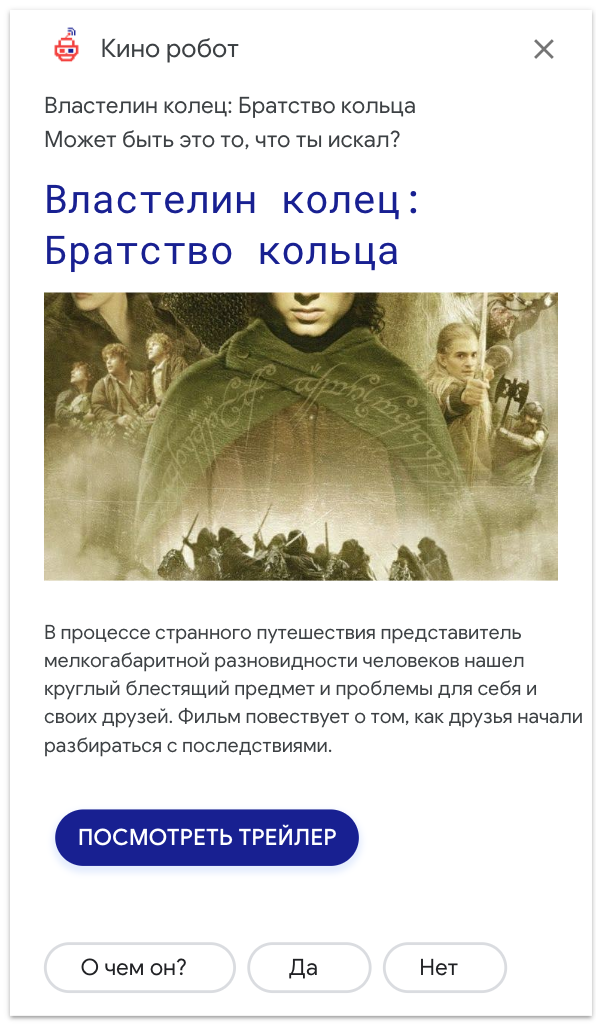

We display text, an information card, and suggestion chips.
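The genre filtering inside searchByGenre does not depend on Dialogflow, so it can be pulled out into a plain function and tried locally without deploying anything. A minimal sketch: `findFilmByGenre` and the sample films with English genre names are hypothetical, and the helper returns `null` when nothing matches so that the caller can choose a fallback reply:

```javascript
// Hypothetical helper: returns the first film whose genres include `genre`,
// or null when nothing matches.
function findFilmByGenre(filmsList, genre) {
  const film = filmsList.find(
    (f) => Array.isArray(f.genres) && f.genres.includes(genre)
  );
  return film || null;
}

// Sample data for illustration only.
const films = [
  { id: '1', title: 'Film A', genres: ['fantasy', 'adventure'] },
  { id: '2', title: 'Film B', genres: ['horror'] },
];

console.log(findFilmByGenre(films, 'horror').title); // Film B
console.log(findFilmByGenre(films, 'comedy'));       // null
```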

A nice feature of Dialogflow is that it adapts to different devices out of the box.

If the device has speakers, all the phrases we pass to the add method are voiced, and if it has no screen, the Card and Suggestion objects are simply not displayed.
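To make the idea concrete, here is a toy model of that graceful degradation. It is not how Dialogflow is implemented; the item types and the `hasScreen` flag are made up purely for illustration:

```javascript
// Toy model of surface-dependent output; NOT Dialogflow's implementation.
// Each item stands for something passed to agent.add(); `hasScreen` is a
// made-up capability flag used only for this illustration.
function renderForSurface(items, { hasScreen }) {
  // Text is always kept (it can be voiced); visual items need a screen.
  return items.filter((item) => item.type === 'text' || hasScreen);
}

const response = [
  { type: 'text', value: 'Here is a horror film for you' },
  { type: 'card', value: { title: 'Film B' } },
  { type: 'suggestion', value: 'Another one' },
];

console.log(renderForSurface(response, { hasScreen: true }).length);  // 3
console.log(renderForSurface(response, { hasScreen: false }).length); // 1
```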

Connecting a database

Let's make the task harder and fetch the data from a database.

The easiest way is to use the Firebase Realtime Database.

As an example, we will use the Admin Database API.

First, create a database and fill it with data.

You can do this in the same project that was created for Cloud Functions:

```javascript
// Import firebase-admin
const firebaseAdmin = require('firebase-admin');

// Initialize firebase-admin with the project's default credentials
firebaseAdmin.initializeApp({
  credential: firebaseAdmin.credential.applicationDefault(),
  databaseURL: 'https://<ID->.firebaseio.com'
});

// Read the film list from the database; returns a Promise
function getFilmsList() {
  return firebaseAdmin
    .database()
    .ref()
    .child('filmsList')
    .once('value')
    .then((snapshot) => {
      const filmsList = snapshot.val();
      console.log('filmsList: ' + JSON.stringify(filmsList));
      return filmsList;
    })
    .catch((error) => {
      console.log('getFilmsList error: ' + error);
      // Re-throw so callers see the failure instead of treating the error as data
      throw error;
    });
}
```

Database access is asynchronous, and the Firebase database API is built on Promises. The .once('value') method returns a Promise; we get our data in the then() block and return it, so the function as a whole returns a Promise that resolves to the film list.

It is important that the intent handler returns this Promise to handleRequest(); otherwise the agent leaves our callback without waiting for the data and never processes the result.
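The difference is easy to reproduce without Firebase. In the sketch below, `fakeHandleRequest` is a hypothetical stand-in that, like handleRequest(), waits for whatever the handler returns, and `getFilmsListAsync` simulates a database read with a timer:

```javascript
// Hypothetical stand-in for agent.handleRequest(): it waits for whatever the
// intent handler returns before "sending" the collected replies.
async function fakeHandleRequest(handler) {
  const replies = [];
  const agent = { add: (msg) => replies.push(msg) };
  await handler(agent);
  return replies; // what would be sent back to Dialogflow
}

// Simulated asynchronous database read.
function getFilmsListAsync() {
  return new Promise((resolve) =>
    setTimeout(() => resolve([{ title: 'Film A' }]), 10)
  );
}

// Correct: the Promise is returned, so the caller waits for agent.add().
function goodHandler(agent) {
  return getFilmsListAsync().then((films) => agent.add(films[0].title));
}

// Buggy: the Promise is not returned, so the caller moves on
// before the data arrives and the reply is empty.
function badHandler(agent) {
  getFilmsListAsync().then((films) => agent.add(films[0].title));
}

fakeHandleRequest(goodHandler).then((r) => console.log(r)); // [ 'Film A' ]
fakeHandleRequest(badHandler).then((r) => console.log(r));  // []
```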

```javascript
'use strict';

const functions = require('firebase-functions');
const firebaseAdmin = require('firebase-admin');
const { WebhookClient, Card, Suggestion } = require('dialogflow-fulfillment');

firebaseAdmin.initializeApp({
  credential: firebaseAdmin.credential.applicationDefault(),
  databaseURL: 'https://<ID->.firebaseio.com'
});

exports.dialogflowFirebaseFulfillment = functions.https.onRequest((request, response) => {
  const agent = new WebhookClient({ request, response });
  const result = request.body.queryResult;
  const parameters = result.parameters;

  const intentMap = new Map();
  intentMap.set('search-by-genre', searchByGenre.bind(this, agent, parameters));
  agent.handleRequest(intentMap);
});

function getFilmsList() {
  return firebaseAdmin
    .database()
    .ref()
    .child('filmsList')
    .once('value')
    .then((snapshot) => {
      const filmsList = snapshot.val();
      console.log('filmsList: ' + JSON.stringify(filmsList));
      return filmsList;
    })
    .catch((error) => {
      console.log('getFilmsList error: ' + error);
      throw error; // let the caller handle the failure
    });
}

function searchByGenre(agent, parameters) {
  // Returning the Promise makes handleRequest() wait for the database
  return getFilmsList()
    .then((filmsList) => {
      const filteredFilms = filmsList.filter((film) =>
        film.genres.some((genre) => genre === parameters.genre)
      );
      const firstFilm = filteredFilms[0];
      if (!firstFilm) {
        agent.add('Sorry, I could not find a film in that genre.');
        return;
      }
      agent.add(firstFilm.title);
      agent.add(new Card({
        title: firstFilm.title,
        imageUrl: firstFilm.imageUrl,
        text: firstFilm.description,
        buttonText: ' ',
        buttonUrl: firstFilm.trailer
      }));
      agent.add([
        " ?",
        new Suggestion(" ?"),
        new Suggestion(""),
        new Suggestion("")
      ]);
    })
    .catch((error) => {
      console.log('searchByGenre error: ' + error);
    });
}
```

Adding unpredictability

At the moment our skill returns films in the same order every time. Identical answers will first annoy the user, and then they will stop talking to our bot.

Let's fix this with the shuffle-array library.

Add the dependency to the dependencies section of package.json, next to the existing ones:

```json
"dependencies": {
  "shuffle-array": "^1.0.1"
}
```

Then import the library and shuffle the matching films before taking the first one:

```javascript
// Import the library
const shuffle = require('shuffle-array');

function searchByGenre(agent, parameters) {
  return getFilmsList()
    .then((filmsList) => {
      const filteredFilms = filmsList.filter((film) =>
        film.genres.some((genre) => genre === parameters.genre)
      );
      // Shuffle the matches in place so that the first one is random
      shuffle(filteredFilms);
      const firstFilm = filteredFilms[0];
      if (!firstFilm) {
        agent.add('Sorry, I could not find a film in that genre.');
        return;
      }
      agent.add(firstFilm.title);
      agent.add(new Card({
        title: firstFilm.title,
        imageUrl: firstFilm.imageUrl,
        text: firstFilm.description,
        buttonText: ' ',
        buttonUrl: firstFilm.trailer
      }));
      agent.add([
        " ?",
        new Suggestion(" ?"),
        new Suggestion(""),
        new Suggestion("")
      ]);
    })
    .catch((error) => {
      console.log('searchByGenre error: ' + error);
    });
}
```

Now the film is picked at random each time.
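If you would rather not add a dependency for this, the Fisher-Yates shuffle takes only a few lines. A sketch; `shuffled` is our own name and, unlike shuffle-array's default behaviour, it returns a shuffled copy instead of reordering the input in place:

```javascript
// Fisher-Yates shuffle: returns a shuffled copy and leaves the input intact.
function shuffled(items) {
  const copy = items.slice();
  for (let i = copy.length - 1; i > 0; i--) {
    const j = Math.floor(Math.random() * (i + 1)); // 0 <= j <= i
    [copy[i], copy[j]] = [copy[j], copy[i]];
  }
  return copy;
}

const ids = ['1', '2', '3', '4'];
console.log(shuffled(ids)); // order varies between runs
```

Every permutation is equally likely as long as `j` is drawn from the whole range `0..i`, which is why the loop uses `i + 1` rather than `copy.length`.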

In the same way, you can vary the phrases: create an array of phrases, shuffle it, and take the first one.
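Shuffling a whole array only to read its first element does more work than needed; choosing a random index gives the same uniform pick. A sketch with hypothetical English phrases:

```javascript
// Hypothetical reply variants; the real skill would use its own texts.
const phrases = [
  'Here is what I found',
  'How about this one?',
  'Try this film',
];

// Uniformly pick one phrase (equivalent to shuffling and taking the first).
function pickPhrase(list) {
  return list[Math.floor(Math.random() * list.length)];
}

console.log(pickPhrase(phrases));
```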

Working with context

We ask the agent:

Show fantasy

The agent shows us “The Lord of the Rings.”

Then we ask:

What is it about?

Nobody asks “What is The Lord of the Rings about?”: it sounds unnatural. So we need to remember which film was shown. This can be done with a context:

```javascript
// Inside searchByGenre, after showing the film (firstFilm),
// save its id in the 'current-film' context
agent.setContext({
  name: 'current-film',
  lifespan: 5,
  parameters: { id: firstFilm.id }
});

function genreSearchDescription(agent) {
  // Read the current-film context
  const context = agent.getContext('current-film');
  console.log('context current-film: ' + JSON.stringify(context));
  if (!context) {
    agent.add('Which film do you mean?');
    return;
  }

  // The id of the film that was shown
  const currentFilmId = context.parameters.id;

  // Load the list and look the film up by id
  return getFilmsList()
    .then((filmsList) => {
      const currentFilm = filmsList.find((film) => film.id === currentFilmId);
      agent.add(currentFilm.description);
      agent.add([
        ' ?',
        new Suggestion(''),
        new Suggestion(' ')
      ]);
    })
    .catch((error) => {
      console.log('getFilmsList error: ' + error);
    });
}
```

In the same way, we can remember which films have already been shown and filter them out of the list.
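One way to do that, sketched under our own assumptions: keep the ids of films already shown in a context parameter (we call it `shownIds`; it is not part of the original skill) and pass them to a helper that skips those films. The helper is plain JavaScript, so it can be tested without Dialogflow:

```javascript
// Hypothetical helper: picks a random film of `genre` that is not in
// `shownIds` and returns it together with the updated list of shown ids.
function pickUnseenFilm(filmsList, genre, shownIds = []) {
  const candidates = filmsList.filter(
    (film) => film.genres.includes(genre) && !shownIds.includes(film.id)
  );
  if (candidates.length === 0) {
    return { film: null, shownIds };
  }
  const film = candidates[Math.floor(Math.random() * candidates.length)];
  // New array, so the caller can store it back in the context
  return { film, shownIds: [...shownIds, film.id] };
}

// Sample data for illustration only.
const catalog = [
  { id: '1', title: 'Film A', genres: ['fantasy'] },
  { id: '2', title: 'Film B', genres: ['fantasy'] },
];

let state = pickUnseenFilm(catalog, 'fantasy');
state = pickUnseenFilm(catalog, 'fantasy', state.shownIds);
console.log(state.shownIds.length);                                   // 2
console.log(pickUnseenFilm(catalog, 'fantasy', state.shownIds).film); // null
```

In the handler, the returned `shownIds` would then be written back with `agent.setContext`, the same way the `current-film` context is set above.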

Telegram integration


Integrating with Telegram takes almost no effort, but there are a few quirks to keep in mind.

1) If you output a Card or Suggestion in fulfillment, they work in Telegram too.

There is one bug, though: quick replies need a title, otherwise Telegram displays "Choose an item".

We have not yet found a way to set this title from fulfillment.

2) If the intent uses Suggestion Chips for Google Assistant, the same functionality can be implemented for Telegram in two ways:

Quick replies

Custom payload

With a custom payload, you can build quick replies on the main keyboard:

```json
{
  "telegram": {
    "text": " :",
    "reply_markup": {
      "keyboard": [
        ["", "", "", "", ""]
      ],
      "one_time_keyboard": true,
      "resize_keyboard": true
    }
  }
}
```

and on the inline keyboard:

```json
{
  "telegram": {
    "text": " :",
    "reply_markup": {
      "inline_keyboard": [
        [{ "text": "", "callback_data": "" }],
        [{ "text": "", "callback_data": "" }],
        [{ "text": "", "callback_data": "" }],
        [{ "text": "", "callback_data": "" }],
        [{ "text": "", "callback_data": "" }]
      ]
    }
  }
}
```

The main keyboard sends a message that is saved in the chat history, while the inline keyboard does not.

Keep in mind that the main keyboard does not disappear on its own; to hide it, the Telegram Bot API provides a special ReplyKeyboardRemove markup. So you need to keep an eye on the keyboard to make sure the user always sees relevant suggestions.

3) If you need different logic for Telegram and Google Assistant, you can do it like this:

```javascript
const intentRequest = request.body.originalDetectIntentRequest;
if (intentRequest.source === 'google') {
  // Google Assistant: reply through the Actions on Google conversation object
  const conv = agent.conv();
  conv.ask(' ?');
  agent.add(conv);
} else {
  // Any other platform, e.g. Telegram: plain text reply
  agent.add(' ?');
}
```

4) Sending an audio file can be implemented as follows:

```json
{
  "telegram": {
    "text": "https://s0.vocaroo.com/media/download_temp/Vocaroo_s0bXjLT1pSXK.mp3"
  }
}
```

5) Dialogflow keeps contexts for 20 minutes. Take this into account when designing a Telegram bot: if the user steps away for more than 20 minutes, they cannot continue from where they left off.
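The custom payloads from point 2 are plain objects, so they can be generated from a list of options instead of being written by hand. A sketch; the helper names and the sample genres are hypothetical, while the `reply_markup` fields (`keyboard`, `one_time_keyboard`, `resize_keyboard`, `inline_keyboard`, and `remove_keyboard` for hiding the main keyboard) come from the Telegram Bot API:

```javascript
// Main (reply) keyboard: all buttons in one row; a tap sends the button text
// as a regular message.
function replyKeyboardPayload(text, options) {
  return {
    telegram: {
      text,
      reply_markup: {
        keyboard: [options],
        one_time_keyboard: true,
        resize_keyboard: true,
      },
    },
  };
}

// Inline keyboard: one button per row; a tap sends callback_data and is not
// stored as a message in the chat history.
function inlineKeyboardPayload(text, options) {
  return {
    telegram: {
      text,
      reply_markup: {
        inline_keyboard: options.map((option) => [
          { text: option, callback_data: option },
        ]),
      },
    },
  };
}

// Hides a main keyboard that is no longer relevant (ReplyKeyboardRemove).
function removeKeyboardPayload(text) {
  return { telegram: { text, reply_markup: { remove_keyboard: true } } };
}

const genres = ['Horror', 'Comedy', 'Fantasy'];
console.log(JSON.stringify(inlineKeyboardPayload('Pick a genre:', genres)));
```

How the object is delivered to Telegram from fulfillment is not covered here; the sketch only builds the JSON.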

Examples

We will publish the skill's source code as soon as it is released.

P.S. What happened at the hackathon

It was a busy two days.

First came training lectures, and in the afternoon we started building our projects.

The next day was spent actively refining the projects and preparing presentations.

The Google team helped us the whole time and answered the many questions that inevitably come up along the way. It was a great opportunity to learn a lot of new things and to give feedback while it was still fresh.

Thanks to all the participants, organizers from Google, as well as experts who lectured and helped us throughout the hackathon!

By the way, we took second place.

If you have questions, write to us:

shipa_o

raenardev

comradeguest

There is also a Telegram chat dedicated to voice interfaces; feel free to join:

https://t.me/conversational_interfaces_ru

Source: https://habr.com/ru/post/420111/
