The perfect Action for Google Assistant: 8 lessons from the Moscow hackathon

In late July, Google held a closed hackathon in its Moscow office (the one opposite the Kremlin). The topic was developing Actions for the voice assistant. We (Just AI) and about a dozen other teams attended and came away not only with red backpacks full of Google swag, but also with a lot of useful knowledge about building high-quality voice interfaces.

Over the couple of days of the hackathon, everyone tried to build a voice application for the Assistant, and some teams even published their work in the Google Actions directory. Using our application as an example, we will explain how to create a perfect Action and pass Google's moderation easily.

What is Actions on Google?

Google Assistant can do more than execute standard voice commands. You can create your own add-ons that extend the Assistant's functionality. Google calls these add-ons Actions; in Russian the term is rendered as “application”. Read more about Actions on Google here.


Why create your own Action

If you build a mobile application or run a website that provides useful services to your customers, Google Assistant is another great channel for reaching them. After all, the voice assistant is installed on more than 500 million devices, and not only smartphones but also smart speakers, cars, watches, and TVs. So if you complement your website or application with a skill for the voice assistant, you will most likely find new customers and users, because they already talk to the Assistant on all these devices. They will also be more willing to tell friends and acquaintances about your service.

How to build an Action the right way

But do not assume that an application for the voice assistant is the same thing as a website. It is a fundamentally different user experience (UX), with its own guidelines. The user talks to the Assistant, so your application must talk to the user in natural language.

At the hackathon we used our own conversational interface builder, Aimylogic, to implement our first application for Google Assistant. Using it as an example, we will share the most valuable lessons we learned along the way.

This is how our finished Action looks in the Aimylogic designer.

Lesson 1. An Action is all about voice

The Assistant is a voice interface. Users talk to it when saying something is more convenient than opening an application or a website.

You need to understand clearly why a given function of your service would be useful to the user through a voice interface.

Voice is for when something needs to be done quickly, sometimes without looking at the screen at all. Voice works when both the question and the answer are short and clear the first time. And if voice saves the user five clicks, they will definitely use it.

Our application, “Yoga for the Eyes”, has exactly such a function. It is a set of vision exercises, and the user cannot be distracted by the screen during a session. That is why we use a voice interface.

Lesson 2. An Action must be genuinely useful

The Assistant solves the user's problems; it does not just open a browser.

Do not build an Assistant app that does nothing useful for the user. An Action can be very simple and perform only one function of your service, but that function must be genuinely useful. Otherwise there is no point.

“Yoga for the Eyes” is useful because the user does not need to memorize the exercises or their order in the different sets. They simply invoke the Action, which reads the exercises one by one while the user performs them.

To do this, we chose several exercise sets and put the exercises in an ordinary Google spreadsheet, one set per sheet. Our application uses this spreadsheet as a database: it fetches the list of exercises from the required sheet with an HTTP request and then reads them to the user in a loop. As soon as the user finishes an exercise, they say “Go ahead”, and the Action reads the next one.
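The spreadsheet-as-database idea can be sketched in a few lines of Python. This is only an illustration under assumptions, not how Aimylogic does it internally: the column name `exercise`, the sample rows, and both function names are hypothetical, and the CSV text is embedded here instead of being fetched over HTTP so that the sketch runs offline.

```python
import csv
import io

# Hypothetical CSV export of one sheet. In a real Action this text would be
# the body of an HTTP response from wherever the spreadsheet is published.
SAMPLE_SHEET_CSV = """exercise
Close your eyes and relax for ten seconds.
Look up and down ten times.
Blink quickly for fifteen seconds.
"""


def load_exercises(csv_text):
    """Parse one sheet: a header row, then one exercise per row."""
    reader = csv.DictReader(io.StringIO(csv_text))
    return [row["exercise"].strip() for row in reader if row["exercise"].strip()]


def next_exercise(exercises, index):
    """Return (text, next_index); text is None once the set is finished."""
    if index >= len(exercises):
        return None, index
    return exercises[index], index + 1
```

Each time the user says “Go ahead”, the dialogue would call `next_exercise` with the index stored for that session and read the returned text aloud.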

Lesson 3. An Action must be clear and predictable

The Assistant always explains what it expects from the user, and the user always knows what the Assistant will do next.

An Action is a dialogue between the Assistant and the user. Whenever the Action waits for the next utterance, the user must understand what they can say and how the Assistant will react. Otherwise the Assistant will seem erratic and confusing, and the user will not want to use it.

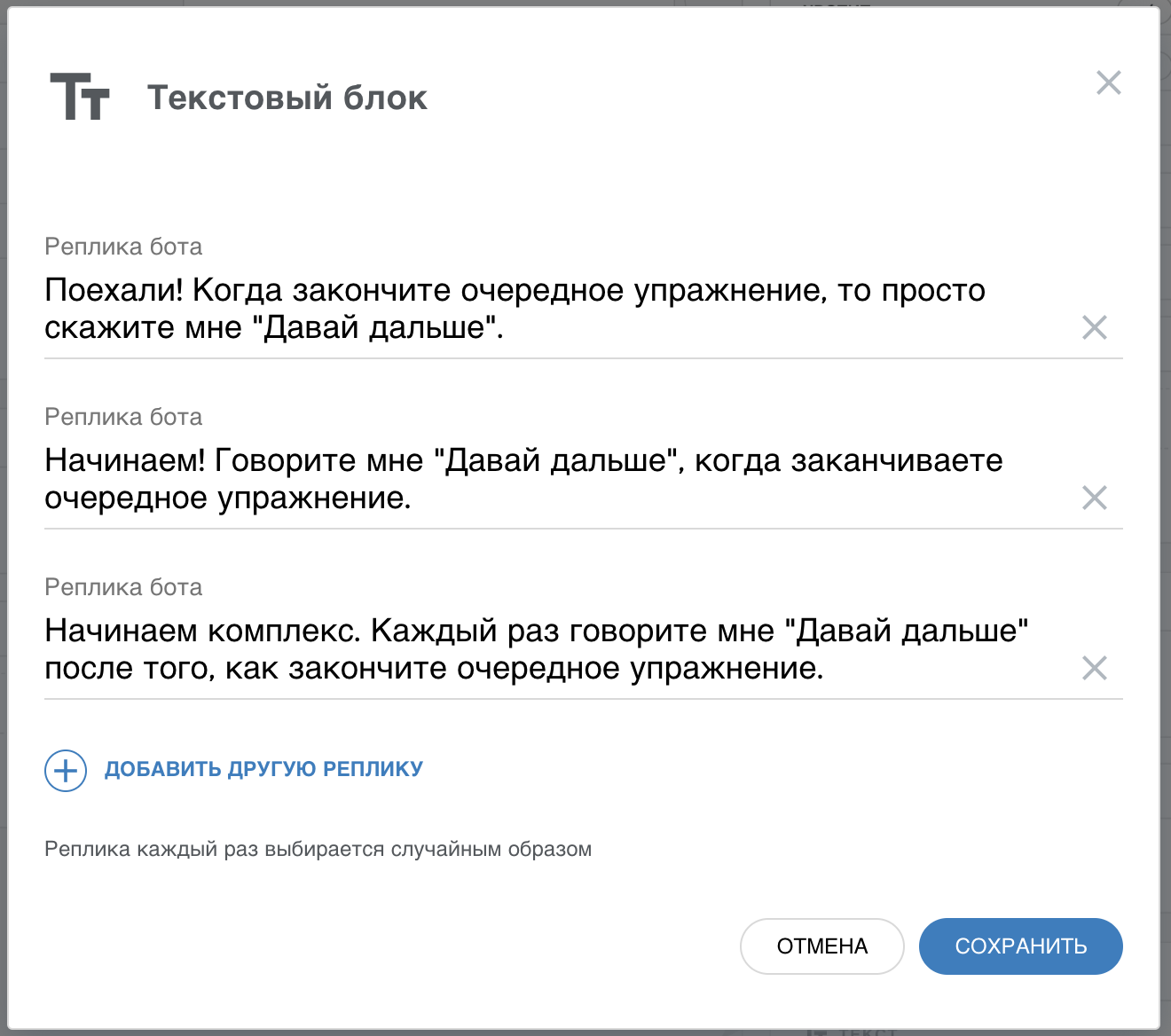

“Yoga for the Eyes” briefly but clearly explains that the user should say “Go ahead” each time they complete an exercise. The Assistant then reads the next exercise and waits again. The user understands what to do and what the Assistant expects. The dialogue is simple, but surprisingly effective.

In the Phrases block, we added synonyms for “Go ahead” so that the Assistant responds properly to other wordings. We also added buttons with hints so that the user can understand how the application works the first time. And we varied the answers so that the same phrase is not repeated every time the user starts a session.
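Outside a visual designer, the same two ideas (synonyms for one command and varied replies) might look like the sketch below. The synonym set, the greeting texts, and the function names are all made up for illustration; a real recognizer would be far more tolerant than this exact-match lookup.

```python
import random

# Hypothetical synonyms that should all count as the "Go ahead" command.
CONTINUE_PHRASES = {"go ahead", "next", "continue", "done", "ready"}

# Hypothetical greeting variants, so the Action does not sound identical
# on every launch.
GREETINGS = [
    "Let's begin. Say 'Go ahead' after each exercise.",
    "Ready to start? Tell me 'Go ahead' when you finish an exercise.",
]


def normalize(utterance):
    """Lowercase and drop punctuation so 'Go ahead!' matches 'go ahead'."""
    kept = (ch for ch in utterance.lower() if ch.isalnum() or ch.isspace())
    return "".join(kept).strip()


def is_continue(utterance):
    """True if the utterance is one of the known 'continue' wordings."""
    return normalize(utterance) in CONTINUE_PHRASES


def pick_greeting(rng=random):
    """Pick one greeting variant at random."""
    return rng.choice(GREETINGS)
```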

Lesson 4. An Action should speak briefly but naturally

Listening to a long text from a robot is difficult. And if the text sounds unnatural, it will be even worse.

An assistant is a robot that wants to be like a person. In practice, this means every utterance of the Assistant should be easy to understand without sounding too robotic.

Speech synthesis is a tricky thing. Make the text slightly too long, and the user will not listen to the end. But if the Assistant, like a robot, compresses the message into two words, the user will not understand it at all, or will need extra effort to work out what is required.

So work on your answers. On the one hand, keep them short; on the other, add conversational language.

When we picked eye exercise sets from the Internet, we saw that they were written for a website, not for a conversational interface. So we reworked each exercise to make it both shorter and easier to follow by ear. For example, the original site had this text:

“Close your eyes. Then rub your palms and apply them to your eyes. Stay in this position until your hands give out heat. Then, without opening your eyes, rub your palms again and apply them again to your eyes. Do the exercise three times.”

We changed it to this:

“Close your eyes. Rub your palms together firmly, bring them to your eyes and sit like that for a minute.”

And we split it into two utterances. When the user says “Go ahead”, the Assistant replies, “Now repeat this two more times.”

You cannot just copy text from a website and use it in a voice assistant. You need to rework the texts so that they are clear by ear.

Lesson 5. An Action must interact with the user

The Assistant is a personal helper. It should help the user get a result, not just wait for them to act.

On a website or in a mobile application, we build an interface out of buttons, lists, pictures, and so on. A voice assistant can do all of that too, but the main difference is that it speaks and lets the user say any phrase. This changes the approach to UI.

The user may not hear what the Assistant said, or may not understand it the first time. The Assistant should always be ready to repeat when asked, or to rephrase its answer if the user is confused. Put yourself in the Assistant's place: you are talking to another person, and it matters to you that they understand you, even if not the first time. You do not just show them a sheet of paper with menu items; you help them make a choice.

In “Yoga for the Eyes”, we made sure the Assistant can always repeat an exercise when the user asks. It does not just read the exercise text again; it conversationally suggests doing the exercise one more time. To do this, we added several variations of the phrase “Repeat” to the Phrases block and placed another text bubble before the repeated exercise text.

Lesson 6. An Action has no right to break

The Assistant must respond sensibly even to phrases it does not understand.

The Assistant has no “blue screen” and no error window with an OK button. The user, for their part, can say anything at all, not just what your Action was trained for. In that case the application must not “blame” the user for “saying the wrong thing”. It should respond to the unclear command in some way and explain once more what is expected.

Just saying “Oh, I didn't understand you” is not enough. This is the same as displaying your

“Yoga for the Eyes” responds to unclear phrases very simply: it asks the user whether they have finished the current exercise. If the answer is again unclear, it asks whether they want to end the session altogether. To do this, we used the “Any other phrase” branch of the Phrases block. As a result, if the user is not in the mood to continue, the Assistant casually offers to finish.
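The escalating fallback described here can be sketched as a small function that counts consecutive unclear phrases. The phrase set, the reply texts, and the name `respond` are assumptions made for this example; they only mirror the two-step behaviour described in the paragraph.

```python
# Hypothetical set of phrases the Action understands as "continue".
CONTINUE_PHRASES = {"go ahead", "next", "continue"}


def respond(utterance, misses):
    """Return (reply, misses), where misses counts consecutive unclear phrases.

    First unclear phrase: ask whether the current exercise is finished.
    Any further unclear phrase: gently offer to end the session.
    """
    if utterance.lower().strip() in CONTINUE_PHRASES:
        # A recognized command resets the counter.
        return "Great, here is the next exercise.", 0
    if misses == 0:
        return "Have you finished the current exercise?", 1
    return "Would you like to finish for today?", misses + 1
```

Keeping the counter in the session state means the Action never answers two unclear phrases in a row with the same “I didn't understand” line.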

Pay extra attention to unrecognized phrases. After all, handling them is the “most frequently used function” of your Action.

Lesson 7. An Action must recognize the user

The Assistant is a helper that knows its user and changes its behavior over time.

If the user has launched your Action for the very first time, the application should explain what it is for and how to use it. But if the user calls the application every day, there is no point in burdening them with the same help every time. The application's behavior should change, like a helper who gets to know the user better every day.

Aimylogic keeps track of when the user last accessed the application. “Yoga for the Eyes” uses this to greet the user differently at launch. And since the exercises should be done every day, our application reminds the user of this if they have not launched it for a long time. To do this, we use the Conditions block, in which we check how long ago the previous request arrived. Depending on the result, the Action follows different branches of the dialogue.
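In code, a condition of this kind could look like the following sketch. The three-day threshold, the greeting texts, and the function name are assumptions chosen for illustration; the article does not say which intervals the real Action uses.

```python
from datetime import datetime, timedelta

# Hypothetical threshold: after this long, remind the user about daily practice.
LONG_BREAK = timedelta(days=3)


def greeting(last_seen, now):
    """Choose a greeting based on when the user last talked to the Action.

    last_seen is None for a first-time user, otherwise a datetime.
    """
    if last_seen is None:
        return ("Hi! I will read eye exercises to you one by one. "
                "Say 'Go ahead' after each exercise.")
    if now - last_seen > LONG_BREAK:
        return "It has been a while! These exercises work best every day. Shall we start?"
    return "Welcome back. Let's start today's exercises."
```

For example, `greeting(None, datetime.now())` returns the full first-launch explanation, while a user seen yesterday gets the short variant.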

Lesson 8. An Action must end

You need to release the microphone when the application finishes its work.

If you do not, Google will reject your application when you publish it to the directory. So your application must have at least one conversation branch that leads to an exit, and at that point it must “close” the microphone.

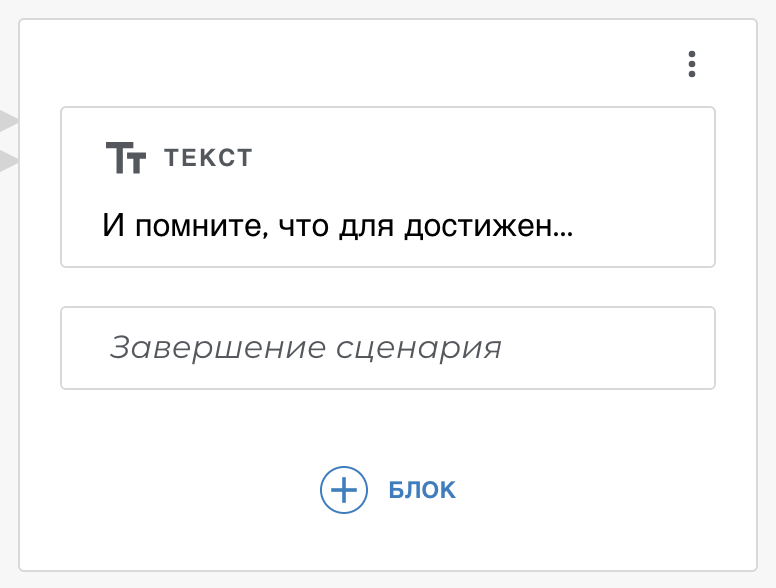

In “Yoga for the Eyes”, the user can always say “Enough” or “I'm tired” to end the session. Aimylogic has a Script Completion block; we used it in our dialogue to say goodbye to the user and end the Action.
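The idea of an explicit exit branch can be sketched as below. The response shape is a made-up dictionary, not the actual Actions on Google or Aimylogic format; the hypothetical `expect_user_response` flag simply stands for “keep the microphone open”.

```python
# Hypothetical phrases that end the session.
EXIT_PHRASES = {"enough", "i'm tired", "stop"}


def build_response(utterance):
    """Build a reply and say whether the Action should keep listening.

    expect_user_response=False marks the final turn: the Action says
    goodbye and the microphone is closed.
    """
    if utterance.lower().strip() in EXIT_PHRASES:
        return {
            "speech": "Well done today. See you tomorrow!",
            "expect_user_response": False,
        }
    return {
        "speech": "Say 'Go ahead' when you are ready for the next exercise.",
        "expect_user_response": True,
    }
```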

A few more tips from us

Do not try to cram every function of your service into the voice application. Dialogue is about simplicity, not about having many features. Your Action may perform only one function, but do it so conveniently that the user comes back every day.

Do not turn the Assistant into yet another IVR. The Assistant should not list the possible answers; that is unnatural. Getting stuck in a phone voice menu is painful, so do not recreate one in the Assistant. It can recognize speech, so work on your script until it feels natural. Aimylogic has all the tools for this, and you do not have to write any code at all.

Buttons are hints, not the main element of a voice UI. The Assistant is a voice interface, not a button interface, so use buttons only as hints. Your dialogue should work so that the user can get through it without buttons.

Write a short privacy policy and include the name of your application in it. Without this, your application will not pass Google's moderation. Take a look at our final version so that you do not repeat our mistakes :)

Finally

Google Assistant has only just started to understand Russian, and much of what is available in the West (a good-sounding voice, a smart speaker, and so on) is not here yet. But that is only a matter of time. You can already start mastering this new channel for your services, using the experience that Google and other developers share.

P.S. A little later we will publish step-by-step instructions in our tutorials on how to build “Yoga for the Eyes” in Aimylogic. Join our Telegram chat for developers so that you do not miss it.

Source: https://habr.com/ru/post/420083/
