Client-server interaction in a new mobile PvP shooter and game server architecture: problems and solutions

In previous articles of this series (all links are at the end of the article) on the development of a new fast-paced shooter, we examined the basic ECS-based architecture of the game logic and the specifics of the shooter's client side, in particular the implementation of the player prediction system for local responsiveness. This time we will focus on client-server interaction over poor-quality mobile networks and on ways to improve the game experience for the end user. We will also briefly describe the architecture of the game server.

During the development of a new synchronous PvP for mobile devices, we encountered typical problems for the genre:

')

We made the first prototype on UNet, even if it imposed restrictions on scalability, control over the network component and added dependence on the capricious mix of master clients. Then they switched to samopisny netcode over the Photon Server , but more on that later.

Consider the mechanisms for organizing the interaction between customers in synchronous PvP games. The most popular of them are:

It was decided to write an authoritarian server.

Network interaction with peer-to-peer (left) and client-server (right)

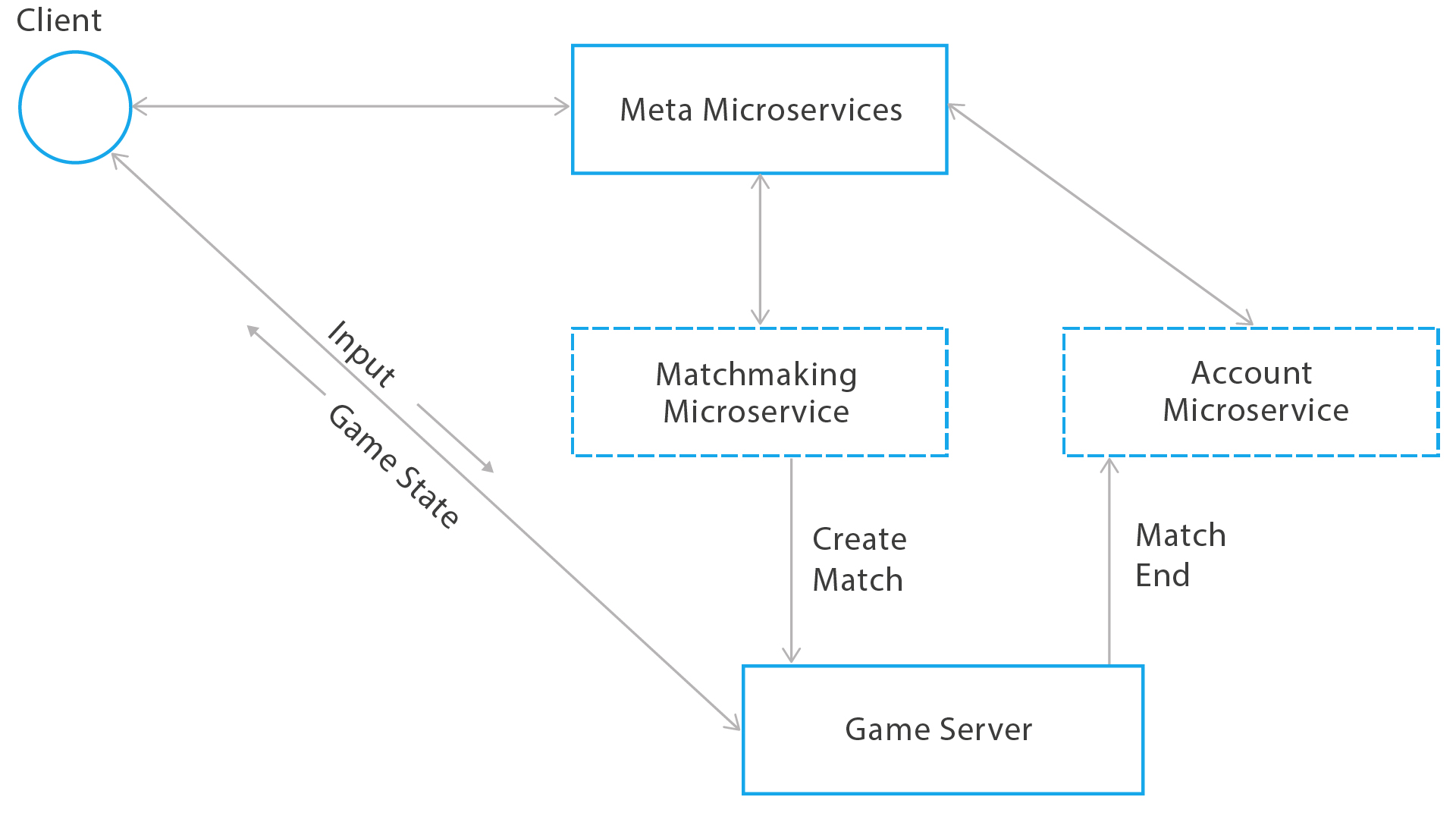

We use Photon Server - this allowed us to quickly deploy the necessary infrastructure for the project based on the scheme already worked out over the years (in War Robots we use it).

Photon Server for us is exclusively a transport solution, without high-level constructions that are strongly tied to a specific game engine. Which gives some advantage, as the data transfer library can be replaced at any time.

The game server is a multi-threaded application in the Photon container. For each match, a separate stream is created that encapsulates the entire logic of the work and prevents the influence of one match on another. All server connections are controlled by Photon, and the data that comes from clients is added to a queue, which is then disassembled by ECS.

The general scheme of streams of matches in the container Photon Server

Each match consists of several stages:

Sequence of actions on the server within one frame

From the client’s side, the process of connecting to a match is as follows:

When a player directly affects other users (does damage, applies effects, etc.) - the server searches the state history to compare the game world actually seen by the client at a particular simulation tick with what was happening on the server to others gaming entities.

For example, you shoot at your client. For you, this happens instantly, but the client has already “run away” for some time in comparison with the surrounding world, which he displays. Therefore, due to the local prediction of the player's behavior, the server needs to understand where and in what condition the opponents were at the moment of the shot (perhaps they were already dead or, conversely, invulnerable). The server checks all the factors and makes its verdict on the damage done.

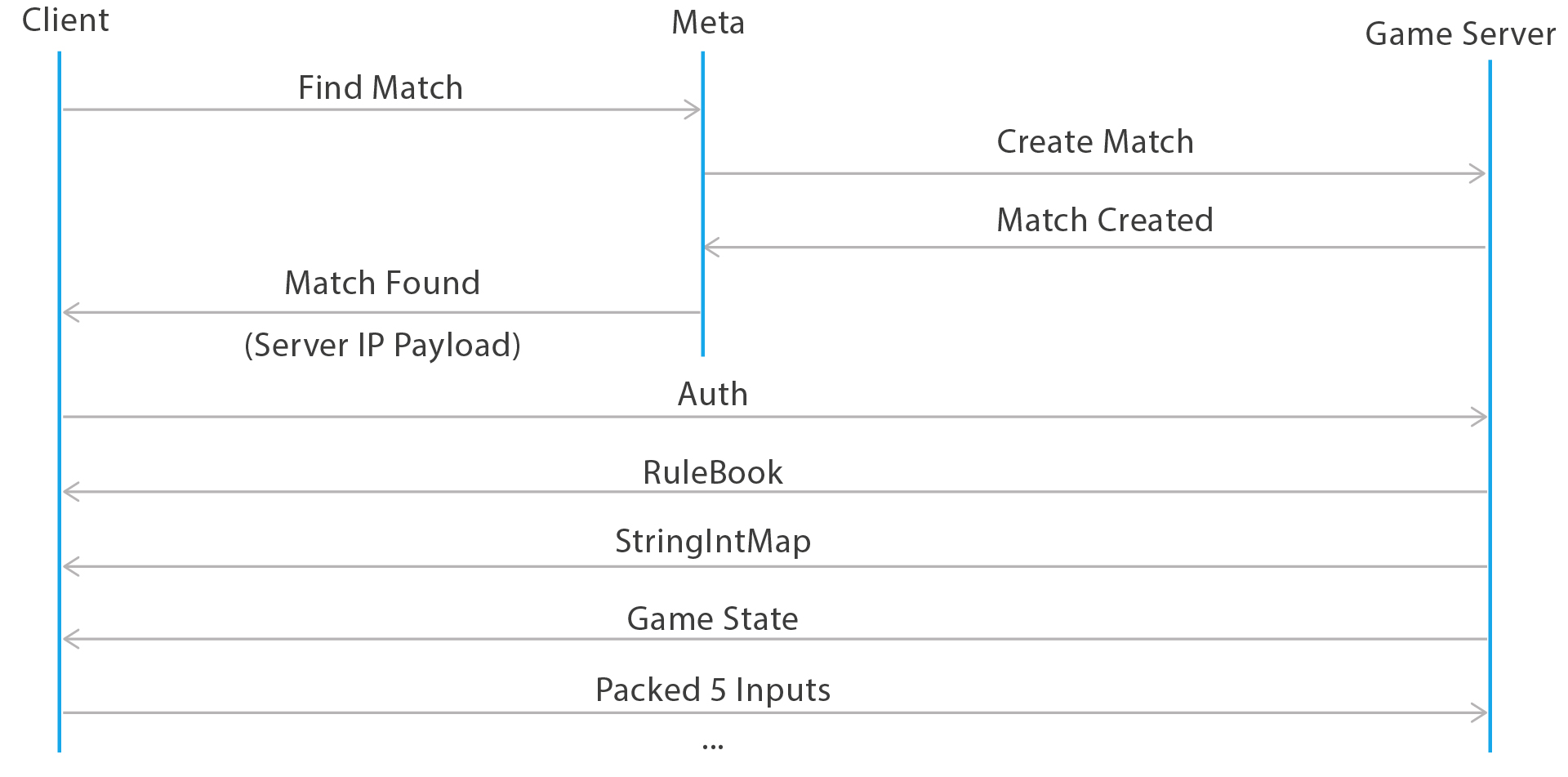

Scheme of the request to create a match, connect to the game server and authorization

We have self-written binary serialization of data, and we use UDP for data transfer.

UDP is the most obvious option for quickly sending messages between the client and the server, where it is usually more important to display the data as soon as possible than to display it in principle. Lost packages make adjustments, but problems are solved for each case individually, but because Since data is constantly coming from the client to the server and back, then we can introduce the concept of a connection between the client and the server.

To create an optimal and convenient code based on the declarative description of the structure of our ECS, we use code generation. When creating components for them, serialization and deserialization rules are also generated. The serialization is based on a custom binary packer, which allows you to package data in the most economical way. The set of bytes obtained during its operation is not the most optimal, but allows you to create a stream from which you can read some packet data without the need for its complete deserialization.

The data transfer limit of 1500 bytes (also known as MTU) is, in fact, the maximum packet size that can be transmitted over Ethernet. This property can be configured on every network hop and is often even below 1500 bytes. What happens if you send a packet of more than 1500 bytes? Package fragmentation begins. Those. Each packet will be forcibly divided into several fragments, which will be separately sent from one interface to another. They can be sent by completely different routes and the time to receive such packets may increase significantly before the network layer issues a glued packet to your application.

In the case of Photon, the library forcibly begins to send such packets in reliable UDP mode. Those. Photon will wait for each packet fragment, and also send the missing fragments if they are lost during the transfer. But such work of the network part is unacceptable in games where minimum network delay is necessary. Therefore, it is recommended to reduce the size of forwarded packets to a minimum and not exceed the recommended 1500 bytes (in our game, the size of one complete world state does not exceed 1000 bytes; the size of a packet with delta compression is 200 bytes).

Each packet from the server has a short header that contains several bytes describing the type of packet. The client first unpacks this set of bytes and determines which package we are dealing with. We strongly rely on authorization for this property of our deserialization mechanism: in order not to exceed the recommended packet size of 1500 bytes, we break the RuleBook and StringIntMap package into several steps; and in order to understand exactly what we got from the server - the rules of the game or the state itself - we use the package header.

When developing a new project features the size of the package is steadily increasing. When we encountered this problem, it was decided to write our own delta compression system, as well as contextual clipping of unnecessary data to the client.

The contextual data cutting is written manually on the basis of what data the client needs to correctly display the world and correct the local prediction of its own data. Then a delta compression is applied to the remaining data.

Our game every tick produces a new state of the world that needs to be packaged and handed over to customers. Usually delta compression is to first send a full state with all the necessary data to the client, and then send only the changes to this data. This can be represented as:

deltaGameState = newGameState - prevGameState

But for each client, different data is sent and the loss of just one packet can lead to the need to send the full state of the world.

Forwarding the full state of the world is a fairly expensive task for the network. Therefore, we modified the approach and send the difference between the current processed state of the world and the one that was received by the client. For this, the client in his batch with the input also sends the tick number, which is a unique identifier of the game state, which he has already received. Now the server knows on the basis of which state it is necessary to build a delta compression. The client usually does not have time to send the server the number of the tick that he has before the server prepares the next frame with the data. Therefore, on the client there is a history of the server states of the world, to which the deltaGameState patch generated by the server is applied.

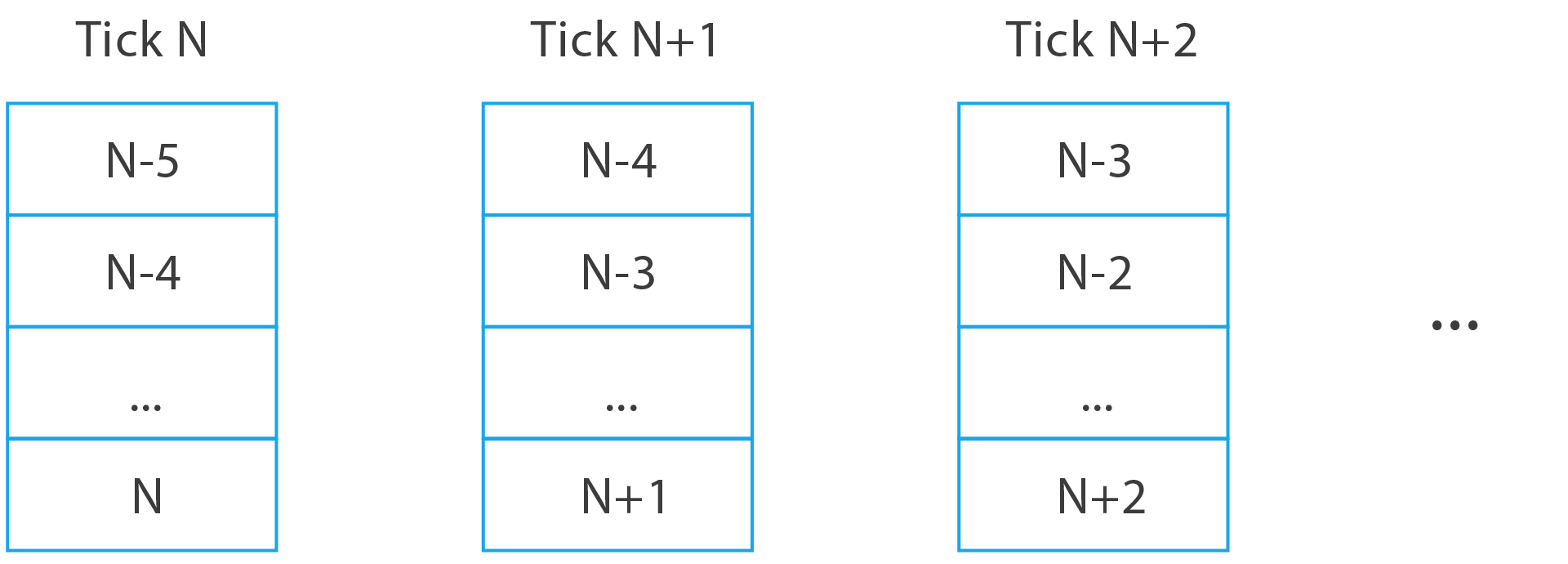

Illustration of the frequency of client-server interaction in the project

Let us dwell on what the client sends. In classic shooters, this package is called ClientCmd and contains information about the player’s keystrokes and the time the team was created. Inside the packet with the input, we send much more data:

There are some interesting points. First, the client informs the server in which tick he sees all the objects of the game world around him that he is unable to predict (WorldTick). It may seem that the client is able to “stop” time for the world, and he himself can run and shoot everyone because of a local prediction. This is not true. We trust only a limited set of values from the client and do not allow him to shoot back in time for more than 1 second. Also, the WorldTick field is used as an acknowledgment packet, on the basis of which delta compression is built.

In the package you can find floating-point numbers. Typically, these values are often used to take readings from a player's joystick, but are not very well transmitted over the network, as they have a lot of bounce and are usually too accurate. We quantize such numbers and package using a binary packer so that they do not exceed an integer value that can fit into several bits depending on its size. Thus, the packing of the input from the joystick aiming is broken:

Another interesting feature when sending input is that some commands can be sent several times. Very often we are asked what to do if a person pressed an ultimatum, and the package with its input was lost? We just send this input several times. This is similar to the work of guaranteed delivery, but more flexible and faster. Since the size of the input packet is very small, we can pack several adjacent player inputs into the resulting packet. At the moment, the window size, which determines their number is equal to five.

Input packets generated by the client in each tick and sent to the server

Transmission of this kind of data is the fastest and most reliable for solving our problems without using reliable UDP. We assume that the probability of losing so many packets in a row is very low and is an indicator of a serious degradation of the quality of the network as a whole. If this happens, the server simply copies the last input received from the player and applies it, hoping that it remains unchanged.

If the client understands that he has not received packets over the network for a very long time, then the process of reconnection to the server is started. For its part, the server keeps track of the input queue from the player.

There are many other systems on the game server that are responsible for detecting, debugging and editing “profitable” matches, updating the configuration by game designers without restarting, logging and tracking servers. We also want to write more about this, but separately.

First of all, when developing a network game on mobile platforms, you should pay attention to the correctness of your client's work with high pings (about 200 ms), slightly more frequent data loss, as well as with the size of the data sent. And you need to clearly fit into the packet limit of 1500 bytes to avoid fragmentation and traffic delays.

Useful links:

Previous articles on the project:

While developing a new synchronous PvP game for mobile devices, we ran into problems typical of the genre:


- The connection quality of mobile clients leaves much to be desired: a relatively high average ping of around 200-250 ms and ping that fluctuates over time as devices switch between access points (although, contrary to popular belief, packet loss in 3G+ mobile networks is fairly low, about 1%).

- Existing technical solutions are monstrous frameworks that lock developers into rigid constraints.

We built the first prototype on UNet, even though it limited scalability and control over the networking layer and added a dependence on capricious master clients. We then switched to our own netcode on top of Photon Server, but more on that later.

Let us look at how interaction between clients is organized in synchronous PvP games. The most popular approaches are:

- P2P, or peer-to-peer. All match logic is hosted on one of the clients, so it costs us practically nothing in traffic. However, the room it leaves for cheaters, the high demands on the client hosting the match, and NAT restrictions ruled this approach out for a mobile game.

- Client-server. A dedicated server, by contrast, gives full control over everything that happens in a match (farewell, cheaters), and its performance lets us compute some things specific to our project. In addition, many large hosting providers have their own network infrastructure that minimizes latency for the end user.

We decided to write an authoritative server.

Network interaction with peer-to-peer (left) and client-server (right)

Data transfer between client and server

We use Photon Server: it let us quickly deploy the infrastructure the project needed, based on a setup we have refined over the years (we use it in War Robots).

For us, Photon Server is purely a transport layer, without high-level constructs tightly bound to a particular game engine. This is an advantage, since the data transfer library can be replaced at any time.

The game server is a multithreaded application running in the Photon container. Each match gets its own thread, which encapsulates all of the match logic and keeps one match from affecting another. Photon manages all server connections, and the data arriving from clients is put into a queue that the ECS then processes.

General scheme of match threads in the Photon Server container
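The threading model above can be illustrated with a minimal sketch, assuming one dedicated thread per match and a thread-safe queue between the transport and the simulation. `MatchRoom`, `ClientMessage` and their methods are hypothetical names, not Photon's API; in the real server, Photon's connection callbacks would be the ones calling `Enqueue`.

```csharp
using System;
using System.Collections.Concurrent;
using System.Collections.Generic;
using System.Threading;

// Hypothetical message: raw bytes received from one client.
public sealed class ClientMessage
{
    public readonly int PlayerId;
    public readonly byte[] Payload;

    public ClientMessage(int playerId, byte[] payload)
    {
        PlayerId = playerId;
        Payload = payload;
    }
}

// One match, one thread: transport threads only enqueue, the match thread only dequeues.
public sealed class MatchRoom
{
    private readonly ConcurrentQueue<ClientMessage> _incoming = new ConcurrentQueue<ClientMessage>();
    private readonly List<ClientMessage> _processed = new List<ClientMessage>();

    public int TicksRun { get; private set; }

    // Safe to read only after the match thread has been joined.
    public int ProcessedCount => _processed.Count;

    // Called from transport threads when a datagram for this match arrives.
    public void Enqueue(ClientMessage message) => _incoming.Enqueue(message);

    // One simulation step: drain everything received so far, then run the systems.
    private void Tick()
    {
        while (_incoming.TryDequeue(out ClientMessage message))
            _processed.Add(message); // stand-in for unpacking input into ECS components
        TicksRun++;
    }

    // Runs a fixed number of ticks on a dedicated background thread at roughly tickRate Hz.
    public Thread Run(int ticks, int tickRate)
    {
        var thread = new Thread(() =>
        {
            TimeSpan interval = TimeSpan.FromSeconds(1.0 / tickRate);
            for (int i = 0; i < ticks; i++)
            {
                Tick();
                Thread.Sleep(interval);
            }
        });
        thread.IsBackground = true;
        thread.Start();
        return thread;
    }
}
```

The point of this layout is that only the match thread ever touches match state, so the simulation code needs no locks; the queue is the single synchronization point.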

Each match consists of several stages:

- The game client joins a queue in the matchmaking service. As soon as the service gathers the required number of players who meet certain conditions, it notifies the game server via gRPC and passes along all the data needed to create the match.

The general scheme for creating a match

- Match initialization begins on the game server. All match parameters are processed and prepared, including map data and all client data received from the match creation service. Processing and preparing the data means that we parse everything needed and write it into a special subset of entities that we call the RuleBook. It stores the static data of the match (which does not change during the match) and is sent to every client once, when it connects and authorizes on the game server, or again on reconnection after a lost connection. The static data of a match includes the map configuration (the map represented as ECS components linked to the physics engine) and client data (nicknames, the set of weapons each player has, which does not change during the battle, etc.).

- The match starts. The ECS systems that make up the game on the server begin running. All systems tick at 30 frames per second.

- Each frame begins with reading and unpacking player inputs, or with copying the previous input if a player did not send one within a certain interval.

- Then, in the same frame, the ECS systems process the input: they update the player's state, the part of the world the player affects with their input, and the state of other players.

- At the end of the frame, the resulting world state for the player is packed and sent over the network.

- At the end of the match, the results are sent to the clients and, via gRPC, to the microservice that processes battle rewards, as well as to match analytics.

- Once the match thread's resources have been cleaned up, the thread closes.

Sequence of actions on the server within one frame

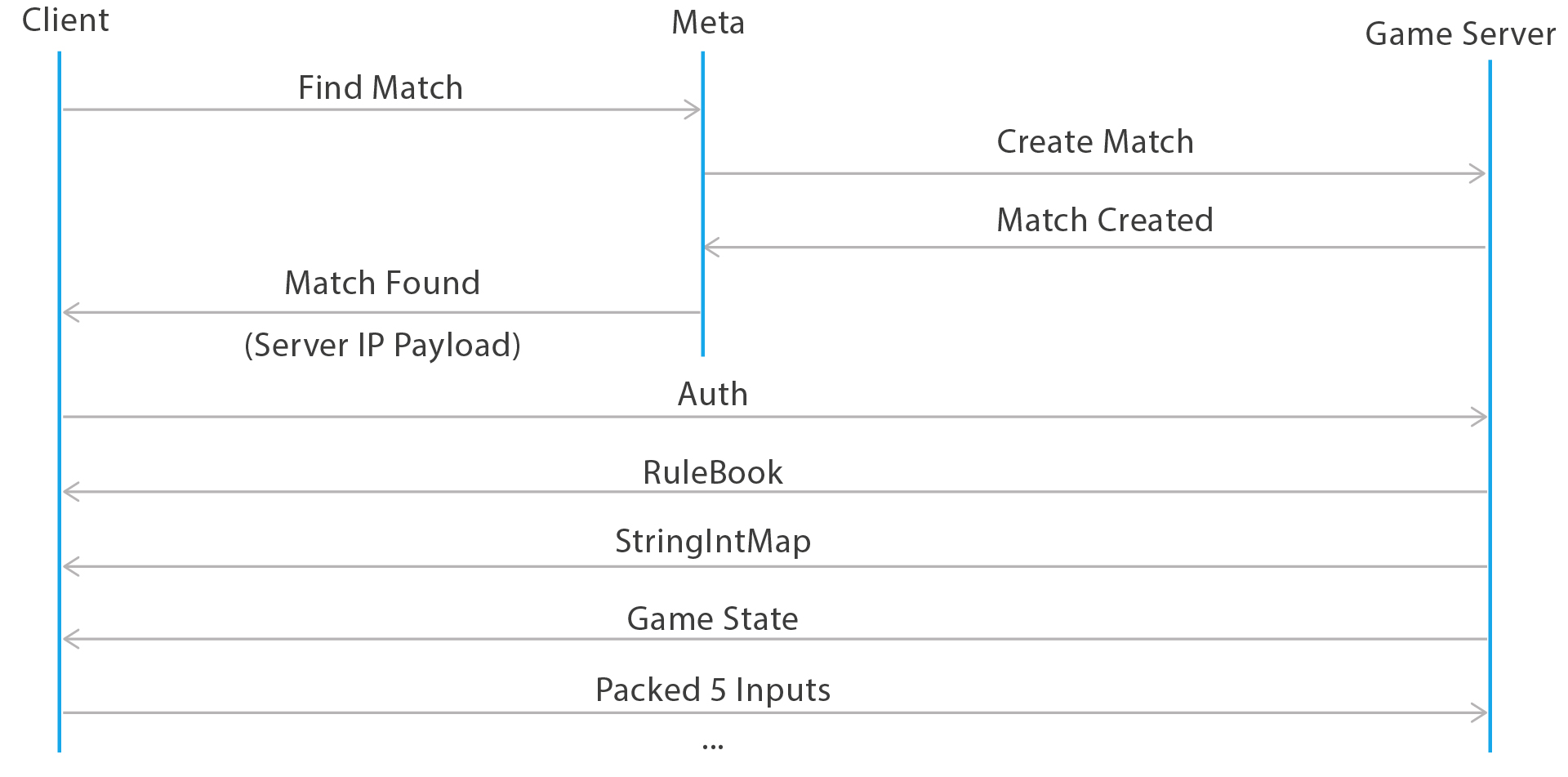
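The per-frame order listed above (inputs, simulation, packing and sending) can be driven by a fixed-timestep loop, so that the systems advance in exact 1/30 s steps even when the hosting thread wakes up irregularly. This is a common technique, sketched here as an assumption: the article does not say how the server schedules its ticks, and `ISystem`, `LoggingSystem` and `FixedStepLoop` are hypothetical.

```csharp
using System.Collections.Generic;

// Hypothetical ECS system contract: each system runs once per tick, in a fixed order.
public interface ISystem
{
    void Execute(int tick);
}

// Records the order in which stages ran; stands in for real input/simulation/packing systems.
public sealed class LoggingSystem : ISystem
{
    private readonly string _name;
    private readonly List<string> _log;

    public LoggingSystem(string name, List<string> log)
    {
        _name = name;
        _log = log;
    }

    public void Execute(int tick) => _log.Add(_name + ":" + tick);
}

// Fixed-timestep driver: real elapsed time is accumulated and consumed in 1/30 s steps.
public sealed class FixedStepLoop
{
    public const int TickRate = 30;
    private const double StepSeconds = 1.0 / TickRate;

    private readonly IReadOnlyList<ISystem> _systems;
    private double _accumulator;

    public int Tick { get; private set; }

    public FixedStepLoop(IReadOnlyList<ISystem> systems) { _systems = systems; }

    // Called with the real time elapsed since the previous call; returns the ticks executed.
    public int Advance(double elapsedSeconds)
    {
        _accumulator += elapsedSeconds;
        int executed = 0;
        while (_accumulator >= StepSeconds)
        {
            foreach (ISystem system in _systems)
                system.Execute(Tick); // e.g. read inputs, then simulate, then pack and send
            _accumulator -= StepSeconds;
            Tick++;
            executed++;
        }
        return executed;
    }
}
```

Leftover time stays in the accumulator for the next call, so short and long waits average out to 30 ticks per second.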

From the client’s side, the process of connecting to a match is as follows:

- First, the client sends a request to join the queue to the match creation service over WebSocket, serialized with protobuf.

- When the match is created, this service tells the client the game server address and sends an additional payload the client needs before the match. The client is now ready to start authorization on the game server.

- The client creates a UDP socket and starts sending a connection request with some identification data to the game server. The server is already expecting this client. Once the client is connected, the server sends it everything needed to start the game and render the world for the first time: the RuleBook (the static data of the match) and the StringIntMap (the strings used in gameplay, which are identified by integers during the match). This saves traffic, because sending strings every frame puts a significant load on the network. For example, all player names, class names, weapon identifiers, accounts and the like are recorded in the StringIntMap, where they are encoded as simple integers.
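A minimal sketch of the idea behind the StringIntMap, assuming ids are assigned in insertion order on the server and the client rebuilds the same table from the list it receives during authorization. The class below is hypothetical and only borrows the article's name for the concept.

```csharp
using System.Collections.Generic;

// Hypothetical string table: each gameplay string gets a small integer once,
// after which only the integer travels in per-tick packets.
public sealed class StringIntMap
{
    private readonly Dictionary<string, int> _ids = new Dictionary<string, int>();
    private readonly List<string> _strings = new List<string>();

    // Server side: returns the existing id or assigns the next free one.
    public int GetOrAdd(string value)
    {
        if (_ids.TryGetValue(value, out int id))
            return id;
        id = _strings.Count;
        _ids.Add(value, id);
        _strings.Add(value);
        return id;
    }

    // Client side: resolves an id received in a state packet back to its string.
    public string Resolve(int id) => _strings[id];

    // What the server sends during authorization (possibly split across several packets).
    public IReadOnlyList<string> Entries => _strings;

    // Client side: rebuilds the table; ids match because the entries arrive in id order.
    public static StringIntMap FromEntries(IEnumerable<string> entries)
    {
        var map = new StringIntMap();
        foreach (string entry in entries)
            map.GetOrAdd(entry);
        return map;
    }
}
```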

When a player directly affects other users (deals damage, applies effects, etc.), the server searches the state history to compare the game world the client actually saw at a particular simulation tick with what was happening on the server to the other game entities.

For example, you fire a shot on your client. For you it happens instantly, but because of local prediction your client has “run ahead” of the surrounding world it displays by some amount of time. The server therefore needs to determine where the opponents were, and in what state, at the moment of the shot (perhaps they were already dead or, conversely, invulnerable). The server checks all the factors and makes its verdict on the damage dealt.
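The state history lookup can be sketched as a ring buffer of per-tick snapshots, assuming a capacity a little over one second of ticks at 30 Hz. `WorldSnapshot`, `StateHistory` and `LagCompensation` are hypothetical; a real snapshot would hold positions, health, invulnerability and whatever other components the hit check needs.

```csharp
// Hypothetical snapshot of the other entities at one server tick.
public sealed class WorldSnapshot
{
    public readonly uint Tick;
    public readonly float[] PositionsX;
    public readonly bool[] Alive;

    public WorldSnapshot(uint tick, float[] positionsX, bool[] alive)
    {
        Tick = tick;
        PositionsX = positionsX;
        Alive = alive;
    }
}

// Ring buffer of recent server states; slot = tick modulo capacity.
public sealed class StateHistory
{
    private readonly WorldSnapshot[] _buffer;

    // e.g. 32 slots cover a little more than one second at 30 ticks per second
    public StateHistory(int capacity) { _buffer = new WorldSnapshot[capacity]; }

    public void Record(WorldSnapshot snapshot) =>
        _buffer[snapshot.Tick % (uint)_buffer.Length] = snapshot;

    // Returns the snapshot for `tick`, or null if it was never recorded or has been overwritten.
    public WorldSnapshot Find(uint tick)
    {
        WorldSnapshot candidate = _buffer[tick % (uint)_buffer.Length];
        return candidate != null && candidate.Tick == tick ? candidate : null;
    }
}

public static class LagCompensation
{
    // Judges a shot against the target's state at the tick the shooter actually saw.
    public static bool TargetWasAlive(StateHistory history, uint seenTick, int target)
    {
        WorldSnapshot seen = history.Find(seenTick);
        return seen != null && seen.Alive[target];
    }
}
```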

Scheme of the request to create a match, connect to the game server and authorization

Serialization and deserialization, packing and unpacking of the first byte of a match

We use our own binary data serialization and send data over UDP.

UDP is the most obvious choice for fast messaging between client and server, where it is usually more important to display data as soon as possible than to display it at all. Lost packets do require adjustments, but those are handled case by case. And since data flows constantly from the client to the server and back, we can introduce the concept of a connection between the client and the server.
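On top of connectionless UDP, a "connection" is just state the server keeps per remote endpoint. In this project Photon manages connections, so the following is only a generic, hypothetical illustration of the concept: a table keyed by `IPEndPoint` that records when each endpoint was last heard from and expires the ones that went silent. The timeout value and the injected millisecond clock are assumptions.

```csharp
using System.Collections.Generic;
using System.Net;

// A minimal virtual-connection table over UDP.
public sealed class ConnectionTable
{
    private readonly Dictionary<IPEndPoint, long> _lastSeenMs = new Dictionary<IPEndPoint, long>();
    private readonly long _timeoutMs;

    public ConnectionTable(long timeoutMs) { _timeoutMs = timeoutMs; }

    public int Count => _lastSeenMs.Count;

    // Called for every datagram; returns true if this endpoint was not known before.
    public bool OnDatagram(IPEndPoint from, long nowMs)
    {
        bool isNew = !_lastSeenMs.ContainsKey(from);
        _lastSeenMs[from] = nowMs;
        return isNew;
    }

    // Removes and returns endpoints that have been silent for longer than the timeout.
    public List<IPEndPoint> ExpireIdle(long nowMs)
    {
        var expired = new List<IPEndPoint>();
        foreach (KeyValuePair<IPEndPoint, long> pair in _lastSeenMs)
            if (nowMs - pair.Value > _timeoutMs)
                expired.Add(pair.Key);
        foreach (IPEndPoint endpoint in expired)
            _lastSeenMs.Remove(endpoint);
        return expired;
    }
}
```

`IPEndPoint` compares by address and port, so a freshly constructed endpoint for the same sender finds the existing entry.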

To produce efficient, convenient code from the declarative description of our ECS structure, we use code generation. Serialization and deserialization rules are generated along with the components. Serialization is based on a custom binary packer that packs data economically. The resulting byte sequence is not the most compact possible, but it forms a stream from which some packet data can be read without fully deserializing it.
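The project's packer is not shown in the article. As a hypothetical sketch of a bit-level packer, the class below writes each value with exactly the requested number of bits, using the `PackByte(value, bits)` and `PackUInt32(value, bits)` call shapes that appear in the aim-packing snippet later on; `BitUnpacker` and `CalcFloatRangeBits` are likewise illustrative guesses, not the authors' code.

```csharp
using System;
using System.Collections.Generic;

// Writes values least significant bit first, using exactly `bits` bits each.
public sealed class BitPacker
{
    private readonly List<byte> _bytes = new List<byte>();

    public int BitCount { get; private set; }

    public void PackUInt32(uint value, int bits)
    {
        for (int i = 0; i < bits; i++)
        {
            if (BitCount % 8 == 0) _bytes.Add(0); // start a new byte
            if (((value >> i) & 1u) != 0)
                _bytes[_bytes.Count - 1] |= (byte)(1 << (BitCount % 8));
            BitCount++;
        }
    }

    public void PackByte(byte value, int bits) => PackUInt32(value, bits);

    public byte[] ToArray() => _bytes.ToArray();
}

// Reads values back in the same order; a reader may stop after the fields it needs.
public sealed class BitUnpacker
{
    private readonly byte[] _data;
    private int _bitPos;

    public BitUnpacker(byte[] data) { _data = data; }

    public uint UnpackUInt32(int bits)
    {
        uint value = 0;
        for (int i = 0; i < bits; i++, _bitPos++)
            if ((_data[_bitPos / 8] & (1 << (_bitPos % 8))) != 0)
                value |= 1u << i;
        return value;
    }
}

public static class FloatRange
{
    // Bits needed to store every step index in [0, (max - min) / step].
    public static int CalcFloatRangeBits(float min, float max, float step)
    {
        uint maxIndex = (uint)Math.Ceiling((max - min) / step);
        int bits = 1;
        while (bits < 32 && (maxIndex >> bits) != 0) bits++;
        return bits;
    }
}
```

Because fields are consumed in order, a client can decode just the leading ones (for example a packet header) and stop, which is the partial-read property described above.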

The 1500-byte data transfer limit (the MTU, maximum transmission unit) is the maximum packet size that can be transmitted over Ethernet. This value can be configured on every network hop and is often even lower than 1500 bytes. What happens if you send a packet larger than that? Fragmentation begins: the packet is forcibly split into several fragments that are sent separately from one interface to the next. They can travel by completely different routes, and the time before the network layer hands the reassembled packet to your application may increase significantly; if a single fragment is lost, the whole packet is lost.

In the case of Photon, the library forcibly switches such packets to reliable UDP mode. That is, Photon waits for every fragment and resends any that are lost in transit. But this behavior of the network layer is unacceptable in games that need minimal network latency. Therefore, it is recommended to keep packets as small as possible and not exceed the recommended 1500 bytes (in our game, one complete world state does not exceed 1000 bytes, and a delta-compressed packet is 200 bytes).
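The 1500-byte limit applies to the whole IP packet, so the space left for application data is smaller. A quick arithmetic sketch, assuming IPv4 without options (20-byte header) or IPv6 without extension headers (40 bytes), plus the 8-byte UDP header; any header added by the transport library comes on top and is not counted here. `UdpBudget` is a hypothetical helper.

```csharp
// Payload budget for one UDP datagram that must not be fragmented.
public static class UdpBudget
{
    public const int EthernetMtu = 1500;
    public const int Ipv4HeaderBytes = 20; // minimum header, no IP options
    public const int Ipv6HeaderBytes = 40; // fixed header, no extension headers
    public const int UdpHeaderBytes = 8;

    public static int MaxPayload(int pathMtu, bool ipv6) =>
        pathMtu - (ipv6 ? Ipv6HeaderBytes : Ipv4HeaderBytes) - UdpHeaderBytes;

    public static bool FitsWithoutFragmentation(int payloadBytes, int pathMtu, bool ipv6) =>
        payloadBytes <= MaxPayload(pathMtu, ipv6);
}
```

With the standard Ethernet MTU this leaves 1472 bytes over IPv4 and 1452 over IPv6, so a full world state of at most 1000 bytes fits with room to spare; on a path with a smaller MTU the budget shrinks accordingly.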

Each packet from the server has a short header of a few bytes describing the packet type. The client unpacks these bytes first to determine which kind of packet it is dealing with. We rely heavily on this property of our deserialization mechanism during authorization: to stay within the recommended 1500-byte packet size, we split the RuleBook and the StringIntMap into several packets, and we use the packet header to tell exactly what we received from the server, the game rules or the world state itself.
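Splitting a large payload such as the RuleBook into several packets, each tagged with a header, might look like the sketch below. The 3-byte header layout (type, chunk index, chunk count), the `PacketType` values and the `Chunking` helper are assumptions; the article only says that a short header identifies the packet type.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical packet kinds distinguished by the first header byte.
public enum PacketType : byte { RuleBook = 1, StringIntMap = 2, WorldState = 3 }

public static class Chunking
{
    public const int HeaderSize = 3; // type, chunk index, chunk count

    // Splits a payload into packets no larger than maxPacketSize, each with its own header.
    public static List<byte[]> Split(PacketType type, byte[] payload, int maxPacketSize)
    {
        int chunkSize = maxPacketSize - HeaderSize;
        if (chunkSize <= 0) throw new ArgumentException("maxPacketSize must exceed the header size");
        int count = Math.Max(1, (payload.Length + chunkSize - 1) / chunkSize);
        if (count > byte.MaxValue) throw new ArgumentException("too many chunks for a 1-byte count");

        var packets = new List<byte[]>(count);
        for (int i = 0; i < count; i++)
        {
            int offset = i * chunkSize;
            int length = Math.Min(chunkSize, payload.Length - offset);
            var packet = new byte[HeaderSize + length];
            packet[0] = (byte)type;
            packet[1] = (byte)i;
            packet[2] = (byte)count;
            Array.Copy(payload, offset, packet, HeaderSize, length);
            packets.Add(packet);
        }
        return packets;
    }

    // The client looks at the header alone to decide how to handle the body.
    public static PacketType PeekType(byte[] packet) => (PacketType)packet[0];

    // Reassembles chunks received in any order; returns null until all of them are present.
    public static byte[] TryReassemble(IReadOnlyList<byte[]> packets)
    {
        if (packets.Count == 0 || packets.Count != packets[0][2]) return null;
        var ordered = new byte[packets.Count][];
        foreach (byte[] p in packets) ordered[p[1]] = p;
        var result = new List<byte>();
        foreach (byte[] p in ordered)
        {
            if (p == null) return null; // a duplicate took the place of a missing chunk
            for (int i = HeaderSize; i < p.Length; i++) result.Add(p[i]);
        }
        return result.ToArray();
    }
}
```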

As new features are added to the project, packet size keeps growing. When we ran into this problem, we decided to write our own delta compression system, along with context-sensitive trimming of data the client does not need.

Context sensitive network traffic optimization. Delta compression

Context-sensitive trimming is written by hand, based on what data the client needs to display the world correctly and to correct the local prediction of its own data. Delta compression is then applied to the remaining data.
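Since the trimming rules are hand-written per project, the filter below is purely illustrative: the distance radius and the owner-only `Ammo` field are hypothetical examples of "data this client does not need", not rules from the article, and `EntityState` and `ContextFilter` are invented names.

```csharp
using System.Collections.Generic;

// Hypothetical entity data; real components would come from the generated ECS code.
public sealed class EntityState
{
    public int Id;
    public float X, Y;
    public int Ammo; // example of a field only the owner needs, to correct its own prediction
}

public static class ContextFilter
{
    // Builds one client's view: its own entity in full, other entities only within `radius`,
    // with owner-only fields cleared.
    public static List<EntityState> ForClient(IReadOnlyList<EntityState> world, int ownId, float radius)
    {
        EntityState own = null;
        foreach (EntityState e in world)
            if (e.Id == ownId) own = e;

        var view = new List<EntityState>();
        foreach (EntityState e in world)
        {
            if (e.Id == ownId)
            {
                view.Add(e);
                continue;
            }
            if (own != null)
            {
                float dx = e.X - own.X, dy = e.Y - own.Y;
                if (dx * dx + dy * dy > radius * radius) continue; // too far away to matter
            }
            view.Add(new EntityState { Id = e.Id, X = e.X, Y = e.Y }); // Ammo stays 0
        }
        return view;
    }
}
```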

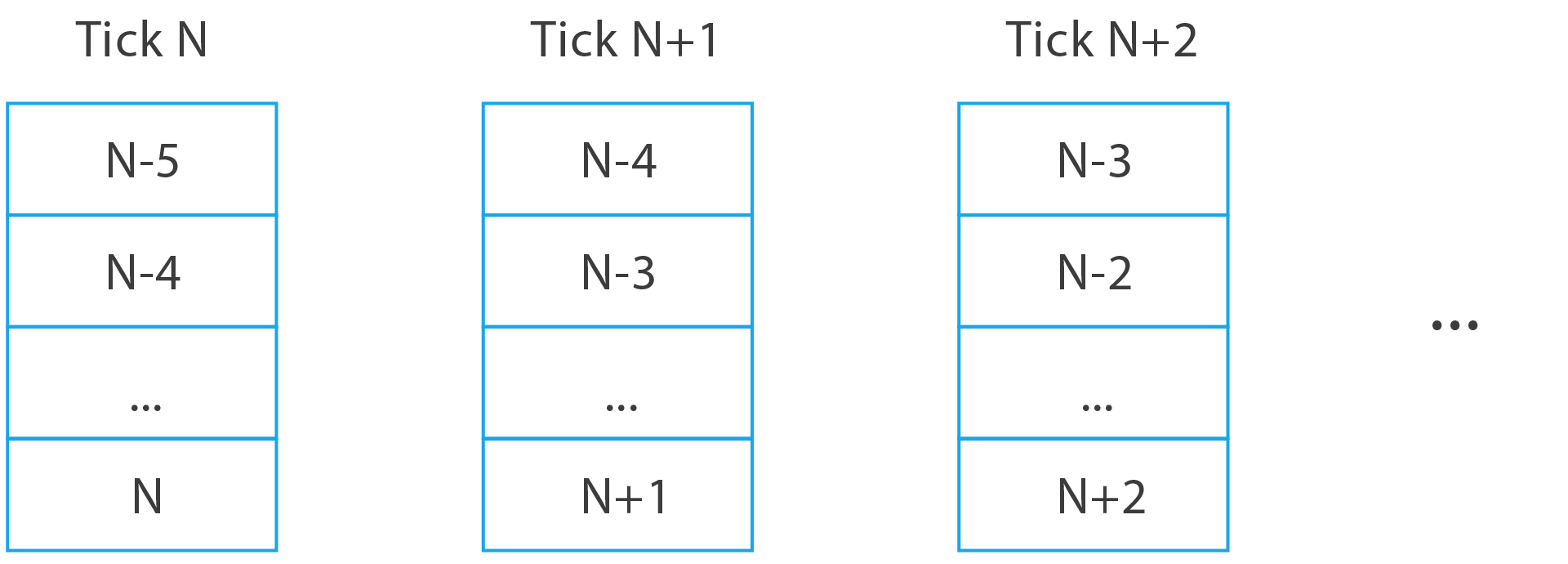

Every tick, our game produces a new world state that has to be packed and sent to clients. Classic delta compression first sends the client a full state with all the necessary data and then sends only the changes to that data. This can be written as:

deltaGameState = newGameState - prevGameState

But each client is sent different data, and losing just one packet can force the server to send the full world state again, since every subsequent delta would be built on a state the client never received.

Sending the full world state is fairly expensive for the network. So we modified the approach: we send the difference between the current processed world state and the state the client has actually received. To make this possible, the client includes in its input packet the number of the tick (a unique identifier of a game state) that it has already received. The server then knows which state to build the delta against. The client usually does not manage to send the server its latest tick number before the server prepares the next frame of data, so the client keeps a history of server world states, to which it applies the deltaGameState patch generated by the server.
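The acknowledged-baseline scheme can be sketched with a world state reduced to an array of quantized integer fields. Assumptions: the server keeps the states it sent to each client keyed by tick, the client keeps the states it received, and a delta lists only the changed fields. `DeltaPacket` and `Delta` are hypothetical names.

```csharp
using System;
using System.Collections.Generic;

// A delta against one acknowledged state; BaseTick == null means "full state".
public sealed class DeltaPacket
{
    public uint Tick;
    public uint? BaseTick;
    public List<(int Index, int Value)> Changes = new List<(int Index, int Value)>();
}

public static class Delta
{
    // Server: diff the current state against the state this client acknowledged (its WorldTick).
    public static DeltaPacket Build(uint tick, int[] current, uint ackedTick,
                                    IReadOnlyDictionary<uint, int[]> sentHistory)
    {
        var packet = new DeltaPacket { Tick = tick };
        int[] baseline = null;
        if (sentHistory.TryGetValue(ackedTick, out int[] acked))
        {
            baseline = acked;
            packet.BaseTick = ackedTick;
        }
        for (int i = 0; i < current.Length; i++)
            if (baseline == null || baseline[i] != current[i])
                packet.Changes.Add((i, current[i]));
        return packet;
    }

    // Client: apply the patch to the matching state from its own history of received states.
    public static int[] Apply(DeltaPacket packet, IReadOnlyDictionary<uint, int[]> receivedHistory,
                              int fieldCount)
    {
        int[] result;
        if (packet.BaseTick is uint baseTick)
        {
            if (!receivedHistory.TryGetValue(baseTick, out int[] baseline))
                throw new InvalidOperationException("baseline state is no longer in the client history");
            result = (int[])baseline.Clone();
        }
        else
        {
            result = new int[fieldCount];
        }
        foreach (var (index, value) in packet.Changes)
            result[index] = value;
        return result;
    }
}
```

If the acknowledged tick has already dropped out of the server's history, `Build` degrades to a full state, which is the expensive case this scheme tries to make rare.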

Illustration of the frequency of client-server interaction in the project

Let us take a closer look at what the client sends. In classic shooters this packet is called ClientCmd and contains the player's keystrokes and the time the command was created. Our input packet carries much more data:

```csharp
public sealed class InputSample
{
    // Server world tick at which the client sees the objects it cannot predict;
    // also acknowledges the state used as the delta-compression baseline
    public uint WorldTick;
    // Tick of the client's own predicted player simulation
    public uint PlayerSimulationTick;
    // Movement intensity, such as idle (MovementMagnitude is a project type not shown here)
    public MovementMagnitude MovementMagnitude;
    // Movement direction angle
    public float MovementAngle;
    // Aim joystick deflection (AimMagnitude is a project type not shown here)
    public AimMagnitude AimMagnitude;
    // Aim direction angle
    public float AimAngle;
    // Target of the shot
    public uint ShotTarget;
    // Aim joystick deflection in [0, 1], quantized to 0.001 steps when packed
    public float AimMagnitudeCompressed;
}
```

There are a few interesting points here. First, the client tells the server at which tick it sees all the objects of the game world around it that it cannot predict (WorldTick). It may seem that the client could then “stop” time for the world while it runs around and shoots everyone thanks to local prediction. That is not the case: we trust only a limited set of values from the client and do not let it shoot more than 1 second back in time. The WorldTick field also serves as the acknowledgment on which delta compression is built.
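The one-second rewind limit can be enforced by clamping the `WorldTick` a client reports. A minimal sketch, assuming the server ticks at 30 Hz (so one second is 30 ticks) and that clamping, rather than rejecting the shot, is the chosen policy; `WorldTickValidator` is hypothetical.

```csharp
// Limits how far back in time a client's claimed view of the world may reach.
public static class WorldTickValidator
{
    public const uint TickRate = 30;             // server tick rate stated in the article
    public const uint MaxRewindTicks = TickRate; // one second of ticks

    // Clamps the client-reported WorldTick into [serverTick - MaxRewindTicks, serverTick].
    public static uint Clamp(uint claimedWorldTick, uint serverTick)
    {
        uint oldestAllowed = serverTick > MaxRewindTicks ? serverTick - MaxRewindTicks : 0;
        if (claimedWorldTick > serverTick) return serverTick;       // a client cannot see the future
        if (claimedWorldTick < oldestAllowed) return oldestAllowed; // no more than 1 s back
        return claimedWorldTick;
    }
}
```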

The packet contains floating-point numbers. These values typically come from the player's joystick readings, but they transmit poorly over the network: they jitter a lot and are usually more precise than needed. We quantize such numbers and pack them with the binary packer so that each becomes an integer that fits into a few bits, depending on its range. For example, this is how the aiming joystick input is packed:

```csharp
if (Math.Abs(s.AimMagnitudeCompressed) < float.Epsilon)
{
    // Joystick at rest: a one-bit "no value" flag is enough
    packer.PackByte(0, 1);
}
else
{
    // One-bit "has value" flag, then the value quantized over [min, max]
    packer.PackByte(1, 1);
    float min = 0;
    float max = 1;
    float step = 0.001f; // 1000 steps across the range
    // The cast truncates toward zero, so the error is at most about one step;
    // CalcFloatRangeBits (not shown) returns the number of bits needed for any step index
    packer.PackUInt32((uint)((s.AimMagnitudeCompressed - min) / step), CalcFloatRangeBits(min, max, step));
}
```

Another interesting detail of sending input is that some commands can be sent several times. We are often asked what happens if a player presses their ultimate and the packet with that input is lost. We simply send the input several times. This is similar to guaranteed delivery, but more flexible and faster. Since an input packet is very small, we can pack several adjacent player inputs into one outgoing packet. At the moment, the window size that determines how many inputs are included is five.
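A sketch of the client-side redundancy window, assuming each outgoing packet simply carries the last five inputs, oldest first. `TickInput` is a hypothetical stand-in for a packed `InputSample`, and `InputWindow` is an invented name.

```csharp
using System.Collections.Generic;

// Hypothetical compact input for one client tick.
public readonly struct TickInput
{
    public readonly uint Tick;
    public readonly byte Buttons;

    public TickInput(uint tick, byte buttons)
    {
        Tick = tick;
        Buttons = buttons;
    }
}

// Every outgoing packet carries the newest input plus the previous ones,
// so one lost datagram does not lose a press such as an ultimate.
public sealed class InputWindow
{
    public const int Size = 5; // window size quoted in the article
    private readonly Queue<TickInput> _recent = new Queue<TickInput>();

    // Adds this tick's input and returns what should be serialized into the packet, oldest first.
    public TickInput[] Push(TickInput input)
    {
        _recent.Enqueue(input);
        if (_recent.Count > Size) _recent.Dequeue();
        return _recent.ToArray();
    }
}
```

Each input therefore travels in up to five consecutive packets, and all five would have to be lost for the server to miss it.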

Input packets generated by the client in each tick and sent to the server

For our purposes, sending data this way is the fastest and most reliable option that does not require reliable UDP. We assume that losing that many packets in a row is very unlikely and indicates serious degradation of the network as a whole. If it does happen, the server simply copies the last input received from the player and applies it, on the assumption that it has not changed.
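On the server, the redundant copies have to be merged back into exactly one input per tick, with the repeat-last-input fallback when nothing arrived. A minimal sketch, assuming client input ticks are already mapped onto server ticks and an input is reduced to a button byte; `ServerInputBuffer` is hypothetical.

```csharp
using System.Collections.Generic;

// Merges redundant inputs from every packet and yields exactly one input per server tick.
public sealed class ServerInputBuffer
{
    private readonly SortedDictionary<uint, byte> _pending = new SortedDictionary<uint, byte>();
    private byte _lastApplied;
    private uint _nextTick;

    public ServerInputBuffer(uint firstTick) { _nextTick = firstTick; }

    // Called per received packet; duplicates and already-simulated ticks are ignored.
    public void OnPacket(IEnumerable<(uint Tick, byte Buttons)> inputs)
    {
        foreach (var (tick, buttons) in inputs)
            if (tick >= _nextTick && !_pending.ContainsKey(tick))
                _pending.Add(tick, buttons);
    }

    // Called once per server tick: the real input if it arrived, otherwise a copy of the last one.
    public (byte Buttons, bool Predicted) NextInput()
    {
        bool predicted = !_pending.TryGetValue(_nextTick, out byte buttons);
        if (predicted) buttons = _lastApplied;
        else _pending.Remove(_nextTick);
        _lastApplied = buttons;
        _nextTick++;
        return (buttons, predicted);
    }
}
```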

If the client notices that it has not received any packets for a very long time, it starts reconnecting to the server. For its part, the server keeps track of the player's input queue.

Instead of a conclusion, and links

The game server has many other systems responsible for detecting, debugging and fixing “profitable” matches, letting game designers update the configuration without a restart, logging, and server monitoring. We would like to write about them too, but separately.

When developing a networked game for mobile platforms, first of all make sure your client behaves correctly with high ping (around 200 ms) and somewhat more frequent data loss, and keep an eye on the size of the data you send. Packets must stay strictly within the 1500-byte limit to avoid fragmentation and traffic delays.

Useful links:

- https://gafferongames.com/post/udp_vs_tcp/ - a great article on choosing between TCP and UDP for online games.

- https://api.unrealengine.com/udk/Three/NetworkingOverview.html - a description of the server model in Unreal Engine.

- http://ieeexplore.ieee.org/document/5360721 - a study of the network quality of mobile connections.

- http://ithare.com/mmog-rtt-input-lag-and-how-to-mitigate-them/ - networking in fast-paced games.

Previous articles on the project:

Source: https://habr.com/ru/post/420019/
