From 0.01 TFlops HPL to ASC'18 Application Innovation

Hi, Habr! We continue a series of articles about the participation of the team from St. Petersburg State University (we call ourselves EnterTildeDot) at the world's largest student supercomputer competitions.

In this article, we will look at the path to ASC'18 using the example of one team member, focusing on the calling card of the competition and modern supercomputers in general - Linpack. Well, let's look at the secret of achieving a record and anti-record performance of a computing system.

Short excursion on supercomputer competitions

General information about what kind of competitions such can be found in our past articles , including the long post about the contest this year. However, to complete the picture, some information about the competition as a whole, we still give here.

The Asian Supercomputer Challenge is one of the three main high-performance computing team competitions, annually attracting more and more student teams from around the world to participate. ASC, like other similar competitions, presupposes a qualifying and final round with the following provisions:

- Main activity: HPC problem solving;

- Team: 5 students + coach;

- Selection stage: an absentee description of the proposal with a description of the solution of the tasks presented, on the basis of which a list of 20 finalists is determined.

- The final stage: an in-person competition for 20 teams with a duration of about 5 days of competition, including the complete assembly and configuration of the computing cluster, problem solving, and presentation. The cluster is assembled on the basis of restrictions on the power of 3 kW, either from the iron provided by the organizers or from its own. The cluster does not have access to the Internet. The tasks overlap with the tasks of the qualifying stage, however, there is also an unknown task - the Mystery Application.

Well, now in order with retreats to the educational program. Unlike other team members who have already reached the ASC'17 final, I joined the competition only this year. I joined the team in September, assignments for the qualifying stage are sent only in January, so I had enough time to learn the basic concepts of the competition, as well as to study the only known task in advance - HPL & HPCG. A task is met in one form or another almost every year, however, it is not always known in advance on what equipment it is necessary to carry out the task (sometimes the organizers provide remote access to their own resources).

HPL

HPL (High Performance Computing Linpack Benchmark) is a test of the performance of a computing system, based on the results of which a modern list of the world's best supercomputers is formed. The essence of the test lies in solving dense systems of linear algebraic equations. The appearance of this benchmark has introduced a metric that allows you to rank supercomputers, at the same time having turned out to be a “disservice” to HPC community. If you look at the list of the best supercomputers, then you can understand that Lynpack's secret was unraveled pretty quickly - take as many graphics accelerators as you can and you will be in the top. Of course, there are exceptions, but mainly the top places are occupied by supercomputers with graphic accelerators. What is the "disservice"? The fact is that besides measuring performance, Linpack is not used anywhere else and has nothing to do with real computing tasks. As a result, the supercomputer race went in the direction of obtaining the greatest efficiency of Lynpak, and not real workloads, like setting up common USE tasks instead of mastering the school curriculum.

HPL developers have also created another package - HPCG, on the basis of which the supercomputer rating is also formed. It is considered that this benchmark is closer to real problems than HPL, and, in some way, a significant discrepancy between the positions of the supercomputer in these two lists reflects the real state of affairs. However, the latest ratings (June 2018) were a pleasant exception, and, finally, the first positions of the lists coincided.

And now about the real HPL

We return to more practical moments of the story and competition. Linpack is open-source, available for download on the official website , however, hardly in the world top there really is a supercomputer, the performance of which was measured by this version of the benchmark. Accelerator manufacturers produce their own versions of HPL, optimized for specific devices, which allows to obtain a significant performance gain. Of course, custom versions of HPL must meet certain criteria and must successfully pass special tests.

Each vendor has its own version of HPL for each accelerator, however, unlike the original benchmark, there is no mention of any open-source. Nvidia releases HPL versions that are optimized for each of the cards, and the code is already being delivered not as source files, but as binary files. In addition, there are only two ways to access them:

- You have a supercomputer with Nvidia cards that can enter the top - Nvidia will find you on its own. Alas, you probably will not get the binaries, exactly as there will be no opportunity to participate in the optimization of HPL parameters. One way or another, you will get an adequate performance value obtained on an optimized benchmark.

- You are a member of one of three student supercomputer competitions. But we will return to this part.

So what is the essence of the task, especially if smart uncles from large companies have already optimized the benchmark for your equipment?

In the case of the qualifying stage of the competition - describe the possible actions to increase system performance. In this case, there is no need to chase absolute performance numbers, since some teams can have access to a large and class cluster of 226 nodes with modern accelerators, while others only have university computer class number 226, which we call a cluster.

In the case of the final stage, it already makes sense to compare the absolute performance values. Not to say that everyone here is on an equal footing, but at least there is a limit on the maximum system capacity allowed.

The result of the benchmark implementation mainly depends on two components: the cluster configuration and the parameter settings of the benchmark itself. It would also be worth noting the influence of the choice of compilers and libraries for matrix and vector calculations, but everything is pretty boring here, everyone uses the compiler from Intel + MKL. And in the case of binaries, there is no choice at all, since they have already been compiled. The result of HPL is a numerical value that indicates how many floating point operations per second this computing system performs. The basic unit of measurement is FLOPS (FLoating-point Operations Per Second) with corresponding prefixes. In the case of the final stage of the competition, almost always we are talking about Tera-scale systems.

Results optimization

Setting the benchmark parameters consists in a meaningful selection of input data of the task computed by Linpack (HPL.dat file). In this case, the dimensionality of this task has the greatest influence - the size of the matrix, the size of the blocks into which the matrix is divided, the ratio in which the blocks are distributed, etc ... A total of parameters is several dozen, the possible values are thousands. Bruteforce is not the best choice, especially if the test is performed on relatively small systems from a couple of minutes to a couple of hours, depending on the configuration (for the GPU, the test is performed much faster).

I had enough time to study, as already described in other sources, patterns that contribute to optimizing the results of the benchmark, and to identify new ones. I began to run tests a huge number of times, made a lot of Google tablets, tried to get access to systems with a previously untested configuration in order to run the benchmark on them. As a result, even before the start of the qualifying stage, a number of systems, both CPU and GPU, were tested, including even completely inappropriate for this Nvidia Quadro P5000. By the time the qualifying stage began, we had access to several nodes with the P100 and P6000, which greatly helped us in the preparation. The configuration of this system was in many respects similar to the one we planned to assemble during the final stage of the competition, and also, we finally got access to low-level settings, including changing the frequency.

As for the configuration, the presence and number of accelerators have the greatest impact. In the case of testing a system with a GPU, the most optimal option is when the main computational part of the task is delegated to the GPU component. The CPU component will also be loaded with auxiliary tasks, but it will not contribute to the system performance. But at the same time, the peak performance of the CPU must be taken into account in the peak performance of the system as a whole, which may look extremely disadvantageous from the point of view of the ratio of maximum performance to peak (theoretical). When running HPL on a GPU, a system with 2 GPU accelerators and two processors will at least not yield to a system with 2 GPUs and 20 CPUs.

Having described the proposals for the possible optimization of the HPL results, I ended up with my part of the proposal for the qualifying stage, and after passing to the final of the competition, a new stage of the competition began - the search for sponsors. On the one hand, we needed a sponsor who would bear the cost of flying the team to China, on the other hand, a sponsor who would kindly agree to provide the team with graphics accelerators. In the end, we were lucky with the first one, the university allocated some of the money, and Devexperts helped us to fully cover the tickets. With sponsors, from whom we planned to borrow the cards, we were less fortunate, and here we are flying to the final again with the basic cluster configuration without a single chance for competitiveness in HPL. Do not worry, squeeze the maximum of what they give, we thought.

Final ASC'18

And here we are in China, in the tiny by Chinese standards town - Nanchang, in the final. Two days we collect a cluster, and then - puzzles.

This year, all the teams were given 4 Nvidia V100 cards, this did not give us advantages over other teams, but it did not allow us to run HPL on the CPU. Nodes are initially given to everyone at 10, but the extra ones (remember the 3 kW limit) must be returned before the stage of the main competitive tasks is reached. There is a trick here - by reducing the frequency of the CPU and GPU, their performance decreases, but you can choose values for the frequency that we get more performance per unit of energy consumed. By reducing the frequency, we are able to add even more accelerators, which ultimately will affect the performance for the better. Alas, this trick would be useful to us much more if we came to the competition with a suitcase of accelerators, like other participants. However, we were able to afford to keep the maximum amount of CPU. Since not all competition tasks require a GPU, there was a suspicion that in some way this might play into our hands.

So, the most common cluster configuration in the final of the competition is minimum nodes, maximum cards.

Final linpack and some records

The tasks at the competition were tied to specific competition days, and HPL was the first of them, of course, after the cluster was assembled. The HPL results submission deadline is lunch on the third day of the competition, in addition, access to the remaining tasks of this day of competition opens immediately after the delivery of Lynpack. Nevertheless, Linpak begin to drive in the first days. Firstly, to make sure that the cluster is correctly assembled, and secondly, the Lynpack setup is not fast, and since no additional input data is required, then why not.

We assembled our cluster pretty quickly and started including Linpack. For our configuration, we got quite adequate values - about 20 TFlops, and everything would be fine, but after the output of the result was a string with an error. Earlier, I received similar errors only when I intentionally indicated incorrect sizes of blocks into which the task matrix is divided. Here we were waiting for a very unpleasant surprise. I told earlier that we were given 4 V100 cards, well, so ... HPL binaries we did not receive for them and no one could help us with this. A few months have passed, but for me it is still a mystery what happened at that final with our Linpack. We changed the versions of compilers and other libraries in the hope of getting rid of the error, repeatedly checked whether we installed the accelerators correctly (as we did it for the first time), but we did not succeed in correcting the error.

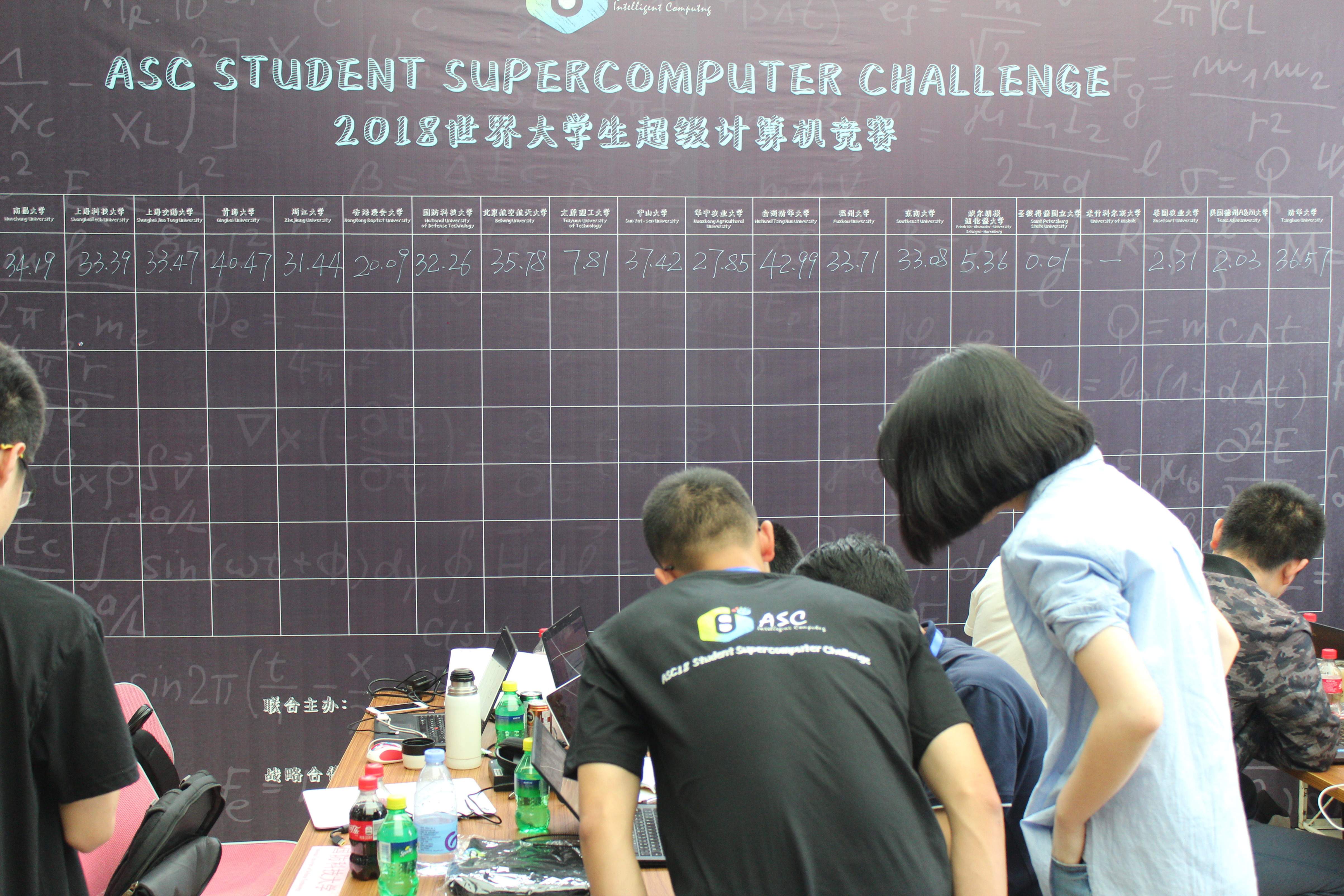

On the night before Lynpak’s delivery, we once again carefully studied the criteria for evaluating tasks, and so, for Lynpak, the formula consisted of two components — some value depending on the result of the team that won Lingpack and the coefficient for successful completion of the task. So it turned out that this coefficient is so large that it is completely unprofitable to pass an adequate Lingpack value, but with an incomprehensible error in comparison with the delivery of any value, but without error. After carefully considering everything, taking into account the fact that a lot of time was spent on finding a solution to the error and that obtaining datasets from the following tasks completely depends on the time Lincack takes, we decided to tactically merge this task. Thus, an absolute “record” was set in the history of supercomputer competitions among the correct values. Our Linpack broke out with a value of 0.01 TFlops. Of course, by optimizing the benchmark for the available CPUs, we would get a slightly higher performance value, however, this would not have affected the scores much, and time would have been significantly more. Remember that on a CPU, Linpack runs much longer. National Tsing Hua University showed the best result - 43 TFlops. After a day or two, Jack Dongarra (the creator of Lynpak), who is a member of the organizing committee of the competition, casually asked us, saying how is Lynpak there? Apparently, at that time he had not yet seen the board with the results: his WHAAAT reaction was worth every hour we spent on HPL.

Mystery application

Having passed the benchmarks, according to a plan prepared in advance, I joined the part of the team that was supposed to do the Mystery Application. Nobody knew in advance what the task would be, so they were preparing for the worst - they installed everything that could be useful from a flash drive to a cluster in advance. As a rule, the main difficulty of the tasks from this section is to collect them. This time it turned out to be a little different. The application was collected almost the first time, without any problems. The problems started when, on most of the presented datasets, we received an error at the address, despite the fact that it was a Fortran application. Judging by the results board, not only this task caused problems for us.

Secret weapon: CPU

Well, the last task in which I participated was scheduled for the next competition day. Unlike the Mystery Application, we have previously seen the package with which we had to work - it was cfl3d. When we learned that this is a NASA product, for some reason everyone was delighted, thinking that there really would be fine, and everything would be fine with the assembly and with optimization. When we tested the package at home, there were no problems with the assembly, but the examples of use were very interesting. Most of the examples had dependencies on installing additional tools, and it happened that in an attempt to google one of these tools - tool XX, we found the article of the year 1995, where it was said that tool XX was now outdated and use YY. A product site from the same times - the documentation often sent the user to the pages of the site, but only the site on frames and beyond the main page will not work. The relevance of the examples left much to be desired.

If it is very simple, then the essence of the task was to tricky split multi-level grid while maintaining the specified level of accuracy. Of course, the main metric here was time. Somehow it happened that on this day we were already very relaxed and just did what we should have been. The task was for the CPU, and this is exactly what we had a lot. The input task files had a very specific look and, often, a large size - up to hundreds of lines. A script was written by a member of our team that automated the process of forming the input file, which accelerated the process, probably hundreds of times. In the end, all datasets were successfully completed and optimized; there was even time to try to rebuild the package with some interesting options, but we did not get much acceleration. We performed this task better than others, having received a special prize from Application Innovation, as well as 11th place in the team event (out of 20 in the final, out of 300+ among all the contestants).

The table with the configurations of computing systems, as well as the main photo taken from the site http://www.hpcwire.com/ .

')

Source: https://habr.com/ru/post/419847/

All Articles