Machine Learning in Offensive Security

Contrary to popular belief, machine learning is not a 21st-century invention. What the past twenty years have brought is hardware powerful enough to make neural networks and other machine learning models practical for everyday applied tasks. The software implementations of the algorithms and models have matured as well.

The temptation to make the cars themselves take care of our safety and protect the people (rather lazy, but smart), became too great. According to CB Insights, almost 90 startups (2 of them with an estimate of more than a billion US dollars) are trying to automate at least some of the routine and repetitive tasks. With varied success.

The main problem of Artificial Intelligence is now safe - too much hyip and frank marketing bullshit. The phrase "Artificial Intelligence" attracts investors. People come to the industry who are willing to call AI the simplest correlation of events. Buyers of solutions for their own money do not get what they hoped for (even if these expectations were initially too high).

')

As can be seen from the map CB Insights, allocate a dozen areas in which the MO is applied. But machine learning has not yet become the “cybersecurity pill” of cybersecurity due to several serious limitations.

The first limitation is the narrow applicability of the functionality of each specific model. A neural network can do one thing well. If it recognizes images well, then the same network will not be able to recognize audio. It is the same with IB, if the model is trained to classify events from network sensors and detect computer attacks on network equipment, then it will not be able to work with mobile devices, for example. If the customer is an AI fan, then he will buy, buy and buy.

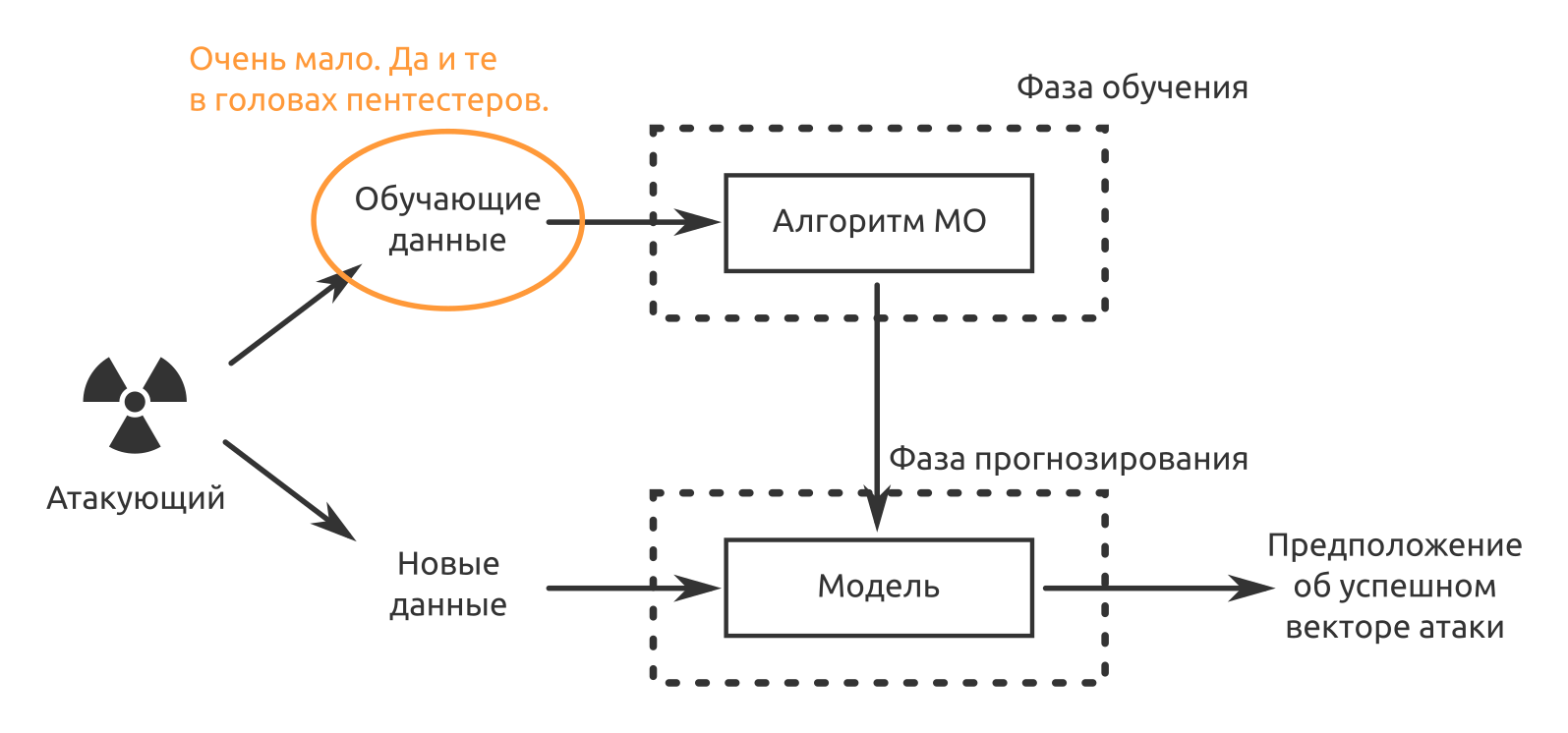

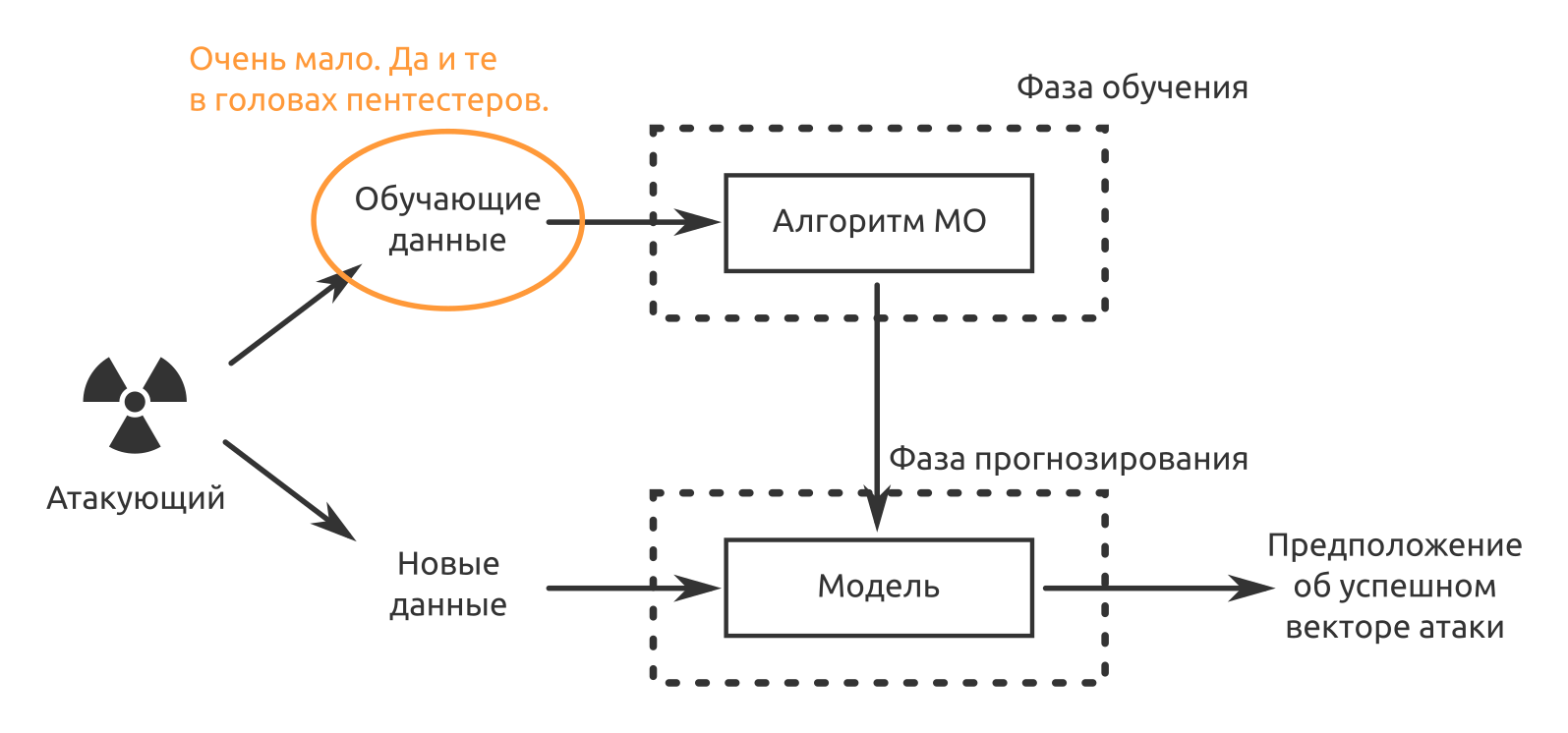

The second limitation is the lack of training data. Solutions are pre-trained, but not on your data. If the situation “Who considers false-positive in the first two weeks of operation” can still be taken, then in the future “bezopasnik” awaits a slight bewilderment, because the decision was bought so that the car took over the routine, and not vice versa.

The third and, probably, the most important thing so far is that MO products cannot be made responsible for their decisions. Even the developer of “unique means of protection with artificial intelligence” can answer such claims, “Well, what did you want? A neural network is a black box! Why she decided this way, no one but her knows. ” Therefore, now information security incidents are confirmed by people. Cars help, but people are still responsible.

There is a problem with data protection. They will be decided sooner or later. But how are you doing with the attack? Can the MoD and AI become a “silver bullet” of cyber attacks?

Probably, it is now most profitable to use MOs where:

The MoD is already doing an excellent job with these tasks. But besides this, some tasks can be accelerated. For example, my colleagues have already written about attack automation using python and metasploit .

Or check the awareness of employees in matters of information security. As our penetration testing practice shows, sotsinzheneriya works - in almost all projects where such an attack was carried out, we achieved success.

Suppose that we have already restored using traditional methods (company website, social networks, job sites, publications, etc.):

Next we need to get the data to train the neural network, which will imitate the voice of a particular person. In our case - someone from the management of the tested company. This article says that just one minute of voice is enough to reliably pretend.

We are looking for recordings of speeches at conferences, we go to them and write them down, we try to talk to the person we need. If we manage to imitate a voice, then we can create a stressful situation for a specific victim of the attack ourselves.

Who will not answer? Who will not see the attachment? Look at everything. And you can load anything into this letter. At the same time, it is not necessary to know either the director’s phone or the seller’s personal phone number; you do not need to fake an e-mail address on the corporate one, from which the malicious letter comes.

By the way, the preparation of an attack (data collection and analysis) can also be partially automated. We are just now looking for a developer to a team that solves such a task and creates a software package that facilitates the life of an analyst in the field of competitive intelligence and economic security of business.

Suppose we can listen to the encrypted traffic of the organization under attack. But we would like to know what exactly is in this traffic. The idea came to me at this research by Cisco employees of “ Detecting malicious code in encrypted TLS traffic (without decryption) ”. After all, if we can determine the presence of malicious objects based on data from NetFlow, TLS and DNS service data, what prevents us from using the same data to identify communications between employees of the attacked organization and between employees and corporate IT services?

Attacking a crypt in the forehead is more expensive. Therefore, using data about the addresses and ports of the source and the recipient, the number of transmitted packets and their size, time parameters, we try to determine the encrypted traffic.

Next, by defining crypto-gateways or end nodes in the case of p2p communications, we begin to reach them, forcing users to switch to less secure communication methods that are easier to attack.

The beauty of the method lies in two advantages:

Disadvantage - MitM still need to get.

Probably the most famous attempt to automate the search, exploitation and correction of vulnerabilities - DARPA Cyber Grand Challenge. In 2016, seven fully automatic systems, designed by different teams, came together in the final CTF-like battle. Of course, the goal of the development was declared exceptionally good - to protect the infrastructure, iot, applications in real time and with minimal participation of people. But the results can be viewed from a different angle.

The first direction in which the MO is developing in this matter is the automation of fuzzing. The same CGC members used american fuzzy lop extensively. Depending on the setting, fuzzers generate more or less output during operation. Where there is a lot of structured and weakly structured data, MO models are perfectly looking for patterns. If the attempt to “drop” the application worked with some input data, there is a possibility that this approach will work somewhere else.

It is the same with static code analysis and dynamic analysis of executable files when the application source code is not available. Neural networks can search for not just pieces of code with vulnerabilities, but code that looks like vulnerable. Fortunately, there are a lot of code with confirmed (and fixed) vulnerabilities. The researcher will need to verify this suspicion. With each new bug found, such an NS will become “smarter”. With this approach, you can get away from using only pre-written signatures.

In dynamic analysis, if the neural network is able to “understand” the connection between the input data (including user data), the order of execution, system calls, memory allocation and confirmed vulnerabilities, then it will eventually be able to look for new ones.

Now with purely automatic operation there is a problem -

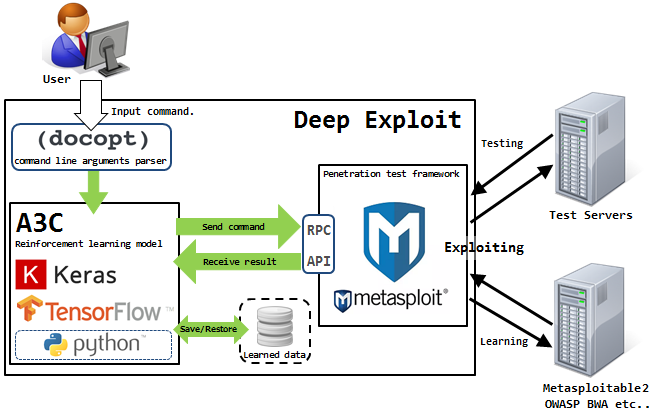

Isao Takaesu and other contributors who are developing Deep Exploit - “Fully automatic penetration test tool using Machine Learning” are trying to solve it. Details about him are written here and here .

This solution can operate in two modes - data collection mode and brute force mode.

In the first mode, DE identifies all open ports on the attacked node and launches exploits that have already worked for this combination.

In the second mode, the attacker indicates the product name and port number, and DE “hits the squares” using all available combinations of exploit, payload and target.

Deep Exploit can independently learn operating methods using reinforcement training (thanks to the feedback that DE receives from the system under attack).

Probably not yet.

Machines have problems with building logical chains of exploitation of identified vulnerabilities. But it is precisely this that often directly affects the achievement of the goal of penetration testing. A machine may find a vulnerability, it may even create an exploit on its own, but it cannot assess the extent to which this vulnerability affects a particular information system, information resources or business processes of the organization as a whole.

The work of automated systems generates a lot of noise on the attacked system, which is easily noticed by means of protection. Machines work clumsily. To reduce this noise and get an idea about the system, you can use social engineering, and with this, the machines also do not really.

And cars have no ingenuity and "chuiki." We recently had a project where the most cost-effective way to conduct testing would be to use a radio-controlled model. I have no idea how a non-person could figure this out.

And what ideas for automation could you suggest?

The temptation to let machines take care of our security and protect people (who are rather lazy, but smart) became too great. According to CB Insights, almost 90 startups (two of them valued at more than a billion US dollars) are trying to automate at least some routine, repetitive tasks, with varying success.

The main problem with artificial intelligence in security right now is too much hype and outright marketing bullshit. The phrase "Artificial Intelligence" attracts investors. People come into the industry who are ready to call the simplest correlation of events AI. Buyers who pay their own money for these solutions do not get what they hoped for (even if those expectations were too high to begin with).

As the CB Insights map shows, there are about a dozen areas in which ML is applied. But machine learning has not yet become the "magic pill" of cybersecurity because of several serious limitations.

The first limitation is the narrow applicability of the functionality of each specific model. A neural network can do one thing well. If it recognizes images well, then the same network will not be able to recognize audio. It is the same with IB, if the model is trained to classify events from network sensors and detect computer attacks on network equipment, then it will not be able to work with mobile devices, for example. If the customer is an AI fan, then he will buy, buy and buy.

The second limitation is the lack of training data. Solutions come pre-trained, but not on your data. The question "who is going to sort out the false positives during the first two weeks of operation?" can still be tolerated, but later the security specialist is in for some bewilderment, because the solution was bought so that the machine would take over the routine, not the other way around.

The third and probably the most important limitation so far is that ML products cannot be held responsible for their decisions. Even the developer of a "unique AI-powered protection tool" can answer such complaints with: "Well, what did you expect? A neural network is a black box! Why it decided this way, nobody but the network itself knows." That is why information security incidents are still confirmed by people. Machines help, but people remain responsible.

There are problems on the defensive side. They will be solved sooner or later. But how are things on the attack side? Can ML and AI become a "silver bullet" for cyberattacks?

Ways to use machine learning to improve the chances of a successful pentest or security assessment

It is probably most worthwhile to use ML today where:

- you need to create something similar to what the neural network has already encountered;

- you need to identify patterns that are not obvious to a human.

ML already does an excellent job with these tasks. Beyond that, some tasks can simply be sped up. For example, my colleagues have already written about automating attacks with Python and Metasploit.

Trying to deceive

Or we can test employees' information security awareness. As our penetration testing practice shows, social engineering works: in almost every project where we carried out such an attack, we succeeded.

Suppose we have already reconstructed the following using traditional sources (company website, social networks, job sites, publications, etc.):

- organizational structure;

- list of key employees;

- email address patterns or real addresses;

- the names of a salesperson, a manager and a secretary, obtained by calling and posing as a potential client.

Next we need data to train a neural network that will imitate the voice of a particular person, in our case someone from the management of the company under test. According to one article, just one minute of recorded voice is enough for a convincing imitation.

We look for recordings of conference talks, attend the talks and record them ourselves, and try to talk to the person we need. If we manage to imitate the voice, we can create a stressful situation for a specific victim ourselves.

- Hello?

- Seller Preseilovich, hello. This is Director Nachalnikovich. Your mobile isn't answering for some reason. You are about to get an email from Vector-Fake LLC, please take a look. It's urgent!

- Yes, but...

- That's it, I can't talk any longer. I'm in a meeting. Talk later. Answer them!

Who would not reply? Who would not open the attachment? Everyone opens it. And that email can carry anything. Note that you need to know neither the director's phone number nor the salesperson's personal number, and you do not need to spoof a corporate sender address for the malicious email.

By the way, attack preparation (data collection and analysis) can also be partially automated. We are currently looking for a developer to join the team working on exactly this: a software package that makes life easier for analysts in competitive intelligence and business economic security.

Attacking implementations of cryptosystems

Suppose we can listen to the encrypted traffic of the organization under attack, but we would like to know what exactly is in that traffic. The idea came to me from research by Cisco employees, "Detecting malicious code in encrypted TLS traffic (without decryption)". After all, if the presence of malicious objects can be determined from NetFlow data and TLS and DNS service data, what prevents us from using the same data to identify communications between employees of the attacked organization, and between employees and corporate IT services?

Attacking the cryptography head-on is more expensive. So, using the source and destination addresses and ports, the number and size of transmitted packets, and timing parameters, we try to identify what the encrypted traffic is.

Next, having identified the crypto gateways or, in the case of p2p communications, the end nodes, we start interfering with them, forcing users to switch to less secure communication methods that are easier to attack.

The beauty of the method lies in two advantages:

- The model can be trained at home, on virtual machines. Free and even open-source products for building secure communications are plentiful. "Machine, this is such-and-such a protocol; it has such-and-such packet sizes and such-and-such entropy. Got it? Memorized it?" Repeat as many times as possible on different kinds of open data.

- There is no need to push all the traffic through the model; metadata alone is enough.

The disadvantage: you still need to get a MitM position.
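
The metadata-only idea can be illustrated with a minimal sketch. It assumes each flow is reduced to three features (mean packet size, packet count, mean inter-arrival time) and uses a nearest-centroid classifier as a simple stand-in for whatever model would actually be trained. The labels and all numbers are invented for illustration and are not taken from the Cisco research.

```python
import math
from collections import defaultdict

# Hypothetical labelled flows: (mean packet size in bytes,
# packets per flow, mean inter-arrival time in ms). All values are made up.
TRAINING_FLOWS = [
    ("vpn", (1350.0, 900.0, 4.0)),
    ("vpn", (1400.0, 1100.0, 3.0)),
    ("messenger", (220.0, 40.0, 850.0)),
    ("messenger", (180.0, 55.0, 900.0)),
    ("voip", (160.0, 3000.0, 20.0)),
    ("voip", (172.0, 2800.0, 20.0)),
]


def fit_scaler(samples):
    """Return per-feature (mean, std) so that features become comparable."""
    stats = []
    for column in zip(*samples):
        mean = sum(column) / len(column)
        variance = sum((x - mean) ** 2 for x in column) / len(column)
        stats.append((mean, math.sqrt(variance) or 1.0))
    return stats


def scale(sample, stats):
    """Standardise one feature vector with the fitted statistics."""
    return tuple((x - mean) / std for x, (mean, std) in zip(sample, stats))


def fit_centroids(flows, stats):
    """Average the scaled feature vectors of each label."""
    grouped = defaultdict(list)
    for label, features in flows:
        grouped[label].append(scale(features, stats))
    return {
        label: tuple(sum(column) / len(column) for column in zip(*vectors))
        for label, vectors in grouped.items()
    }


def classify(features, centroids, stats):
    """Return the label whose centroid is closest in Euclidean distance."""
    point = scale(features, stats)
    return min(centroids, key=lambda label: math.dist(point, centroids[label]))


stats = fit_scaler([features for _, features in TRAINING_FLOWS])
centroids = fit_centroids(TRAINING_FLOWS, stats)
print(classify((1380.0, 1000.0, 5.0), centroids, stats))
print(classify((200.0, 50.0, 800.0), centroids, stats))
```

No payload bytes are inspected at any point; only per-flow statistics enter the model, which is the property the second advantage relies on.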

Looking for software bugs and vulnerabilities

Probably the best-known attempt to automate finding, exploiting and patching vulnerabilities is the DARPA Cyber Grand Challenge. In 2016, seven fully automated systems built by different teams met in a final CTF-style battle. The stated goal of the development was, of course, entirely benign: protecting infrastructure, IoT and applications in real time with minimal human involvement. But the results can be viewed from a different angle.

The first direction in which ML is developing here is fuzzing automation. The same CGC participants made extensive use of american fuzzy lop. Depending on their configuration, fuzzers produce more or less output as they run. Wherever there is a lot of structured and loosely structured data, ML models are good at finding patterns. If an attempt to crash an application worked with certain input data, there is a chance that the same approach will work somewhere else.
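
To make "fuzzer output as training data" concrete, here is a minimal sketch of a mutation fuzzer that records every input together with a crash label. It is not american fuzzy lop and has no coverage guidance. The target `parse_record` is a hypothetical toy parser with a deliberately planted bug, and the resulting log is only the kind of labelled data a model could later be trained on.

```python
import random


def parse_record(data: bytes) -> int:
    """Hypothetical toy target: a length-prefixed record parser with a bug."""
    if len(data) < 2 or data[0] != 0x7F:
        return 0  # rejected early, nothing interesting happens
    length = data[1]
    if length > len(data) - 2:
        # Planted bug: the declared length is trusted and overruns the payload.
        raise IndexError("declared length exceeds payload")
    return len(data[2:2 + length])


def mutate(seed: bytes, rng: random.Random) -> bytes:
    """Overwrite, insert, or delete one random byte of the seed."""
    data = bytearray(seed)
    choice = rng.randrange(3)
    pos = rng.randrange(len(data)) if data else 0
    if choice == 0 and data:
        data[pos] = rng.randrange(256)
    elif choice == 1:
        data.insert(pos, rng.randrange(256))
    elif data:
        del data[pos]
    return bytes(data)


def fuzz(seed: bytes, rounds: int, rng_seed: int = 0):
    """Run the target on mutated inputs and log (input, crashed) pairs."""
    rng = random.Random(rng_seed)
    log = []
    for _ in range(rounds):
        candidate = mutate(seed, rng)
        try:
            parse_record(candidate)
            crashed = False
        except Exception:
            crashed = True
        log.append((candidate, crashed))
    return log


log = fuzz(b"\x7f\x03abc", rounds=2000)
crashing = [data for data, crashed in log if crashed]
print(f"{len(crashing)} of {len(log)} inputs crashed the toy parser")
```

The `(input, crashed)` pairs are the structured output the paragraph refers to: a model trained on many such logs could, in principle, learn which mutations tend to break which kinds of parsers.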

The same goes for static code analysis, and for dynamic analysis of executables when the application's source code is not available. Neural networks can look not only for pieces of code with known vulnerabilities, but also for code that resembles vulnerable code. Fortunately, there is plenty of code with confirmed (and fixed) vulnerabilities to learn from. A researcher will still need to verify each such suspicion. With every new bug found, such a neural network becomes "smarter". This approach makes it possible to move beyond relying solely on pre-written signatures.

In dynamic analysis, if a neural network can "understand" the relationship between input data (including user data), execution order, system calls, memory allocation and confirmed vulnerabilities, it will eventually be able to look for new ones.
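
The difference between "matches a signature" and "looks like vulnerable code" can be shown with a deliberately simple stand-in for a neural network: token n-gram similarity. In this sketch, identifiers and numbers are normalised away so that renamed variables still match. The `KEEP` set, the snippets and the scoring function are all assumptions made for illustration.

```python
import re

TOKEN = re.compile(r"[A-Za-z_]\w*|\d+|\S")

# Names that carry meaning and must survive normalisation (an assumed list).
KEEP = {"strcpy", "memcpy", "gets", "sprintf", "char", "int", "if", "return"}

# Hypothetical corpus of snippets with confirmed vulnerabilities.
KNOWN_VULNERABLE = [
    "char buf[16]; strcpy(buf, input);",
    "char line[64]; gets(line);",
]


def normalise(code):
    """Replace identifiers with ID and numbers with NUM, keep everything else."""
    tokens = []
    for token in TOKEN.findall(code):
        if token[0].isdigit():
            tokens.append("NUM")
        elif (token[0].isalpha() or token[0] == "_") and token not in KEEP:
            tokens.append("ID")
        else:
            tokens.append(token)
    return tokens


def shingles(code, n=3):
    """Return the set of n-token windows of the normalised code."""
    tokens = normalise(code)
    return {tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)}


def jaccard(a, b):
    """Jaccard similarity of two sets; 0.0 when both are empty."""
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)


def score(candidate):
    """Highest similarity between the candidate and any known-vulnerable snippet."""
    candidate_shingles = shingles(candidate)
    return max(jaccard(candidate_shingles, shingles(v)) for v in KNOWN_VULNERABLE)


suspicious = "char name[32]; strcpy(name, user_supplied);"
harmless = "int total = count + 1; return total;"
print(score(suspicious), score(harmless))
```

A signature for the literal string `strcpy(buf, input)` would miss the first candidate; the normalised comparison does not. A high score is only a suspicion that a researcher still has to verify.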

Automate operation

Now with purely automatic operation there is a problem -

Isao Takaesu and other contributors who are developing Deep Exploit - “Fully automatic penetration test tool using Machine Learning” are trying to solve it. Details about him are written here and here .

The tool can operate in two modes: data collection mode and brute force mode.

In the first mode, DE identifies all open ports on the attacked host and launches the exploits that have previously worked for that combination.

In the second mode, the attacker specifies a product name and port number, and DE carpet-bombs the target, trying every available combination of exploit, payload and target.

Deep Exploit can learn exploitation methods on its own using reinforcement learning (thanks to the feedback DE receives from the system under attack).
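
The learn-from-feedback loop can be sketched with a much simpler technique than whatever Deep Exploit actually implements: an epsilon-greedy multi-armed bandit. Everything in the sketch is simulated. The action names and success rates are hypothetical, and nothing is sent to any real system.

```python
import random

# Hypothetical (exploit, payload) pairs with the success probability
# of a purely simulated target. None of these names refer to real modules.
SIMULATED_SUCCESS_RATE = {
    ("exploit_a", "payload_x"): 0.05,
    ("exploit_a", "payload_y"): 0.10,
    ("exploit_b", "payload_x"): 0.60,
    ("exploit_b", "payload_y"): 0.20,
}


def attempt(action, rng):
    """Simulated feedback from the target: 1.0 on success, 0.0 on failure."""
    return 1.0 if rng.random() < SIMULATED_SUCCESS_RATE[action] else 0.0


def learn(episodes=5000, epsilon=0.1, rng_seed=0):
    """Estimate the value of each action with an epsilon-greedy policy."""
    rng = random.Random(rng_seed)
    actions = list(SIMULATED_SUCCESS_RATE)
    value = {action: 0.0 for action in actions}
    count = {action: 0 for action in actions}
    for _ in range(episodes):
        if rng.random() < epsilon:
            action = rng.choice(actions)          # explore
        else:
            action = max(actions, key=value.get)  # exploit the best estimate
        reward = attempt(action, rng)
        count[action] += 1
        # Incremental mean: move the estimate towards the observed reward.
        value[action] += (reward - value[action]) / count[action]
    return value


values = learn()
best_action = max(values, key=values.get)
print(best_action, round(values[best_action], 2))
```

The brute force mode corresponds to trying every key of the table once; the learning loop instead concentrates attempts on the combinations that the feedback has shown to work.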

Can AI replace a pentest team today?

Probably not yet.

Machines have trouble building logical chains for exploiting the vulnerabilities they identify. Yet it is precisely this that often determines whether a penetration test achieves its goal. A machine may find a vulnerability, and it may even create an exploit on its own, but it cannot assess how much that vulnerability affects a particular information system, the information resources, or the business processes of the organization as a whole.

Automated systems generate a lot of noise on the attacked system, which security tools easily notice. Machines work clumsily. Social engineering can reduce this noise and give you an idea of the system, but machines are not much good at that either.

And machines have no ingenuity or gut instinct. We recently had a project where the most cost-effective way to do the testing would have been to use a radio-controlled model. I have no idea how a non-human could have come up with that.

And what ideas for automation would you suggest?

Source: https://habr.com/ru/post/419617/