How to run Istio using Kubernetes in production. Part 1

What is Istio? It is a so-called service mesh, a technology that adds a layer of abstraction over the network. We intercept all or part of the traffic in the cluster and perform a certain set of operations on it. Which ones? For example, we can do smart routing, implement the circuit breaker pattern, or organize a canary deployment by switching part of the traffic to a new version of a service. We can also restrict external interactions and monitor all requests from the cluster to the external network. It is possible to set policy rules that control requests between different microservices. Finally, we can get a complete map of network interactions and collect metrics in a unified way, completely transparently to applications.
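To make the canary deployment idea concrete, here is a minimal sketch using the Istio 1.0 networking API (`networking.istio.io/v1alpha3`). The service name `reviews` and the `version: v1` / `version: v2` pod labels are assumptions for the example. The DestinationRule defines the two versions as subsets, and the VirtualService sends 90% of the traffic to the current version and 10% to the new one.

```yaml
# Hypothetical service "reviews" whose pods carry a "version" label.
apiVersion: networking.istio.io/v1alpha3
kind: DestinationRule
metadata:
  name: reviews
spec:
  host: reviews
  subsets:
  # Each subset selects the pods of one version by label.
  - name: v1
    labels:
      version: v1
  - name: v2
    labels:
      version: v2
---
apiVersion: networking.istio.io/v1alpha3
kind: VirtualService
metadata:
  name: reviews
spec:
  hosts:
  - reviews
  http:
  - route:
    # 90% of requests stay on the current version...
    - destination:
        host: reviews
        subset: v1
      weight: 90
    # ...and 10% go to the canary.
    - destination:
        host: reviews
        subset: v2
      weight: 10
```

To shift more traffic to v2, change the weights and re-apply the VirtualService. Pilot then pushes the new routes to the sidecars, and the application pods are not restarted.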

You can read about how it works in the official documentation. Istio is a really powerful tool that solves many tasks and problems. In this article I would like to answer the basic questions that usually come up when you start working with Istio. This should help you get up to speed faster.

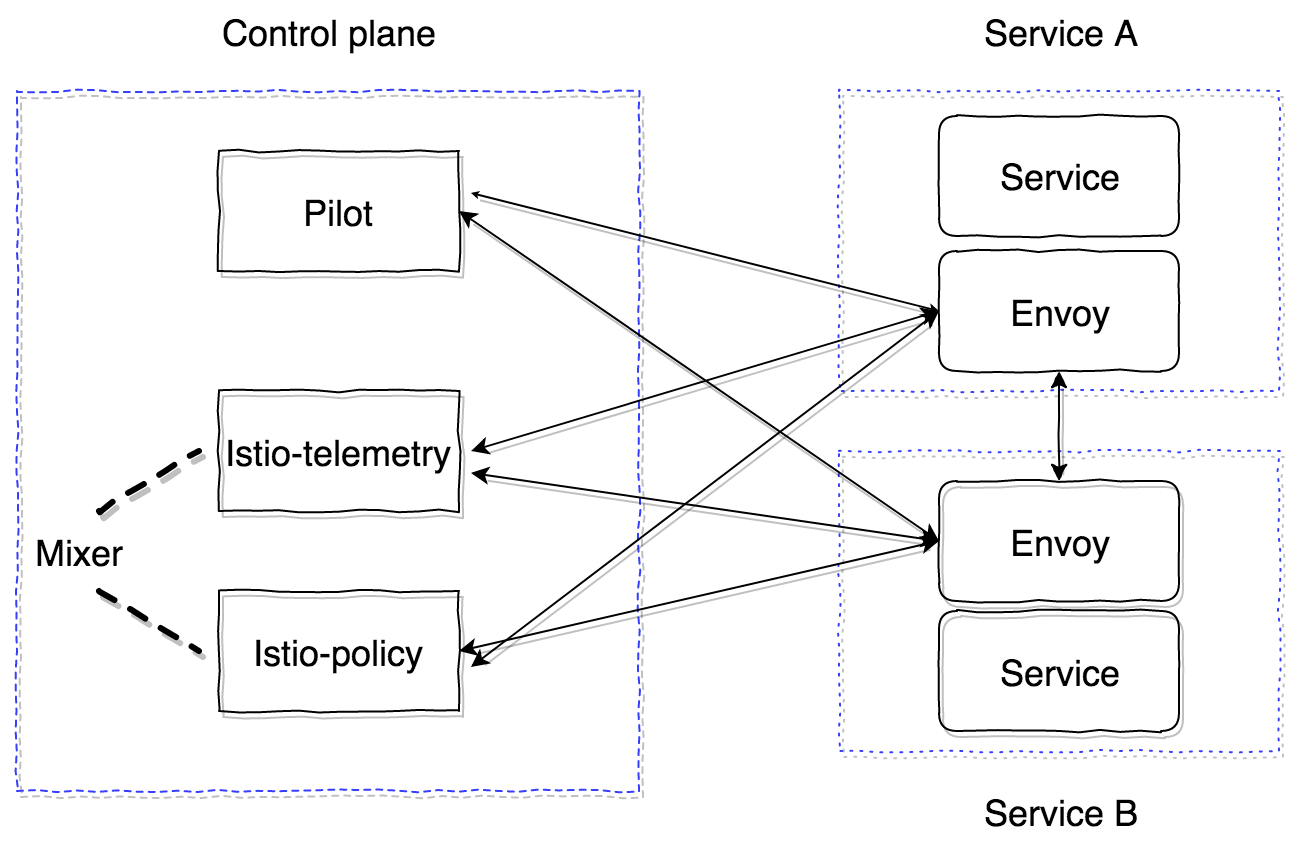

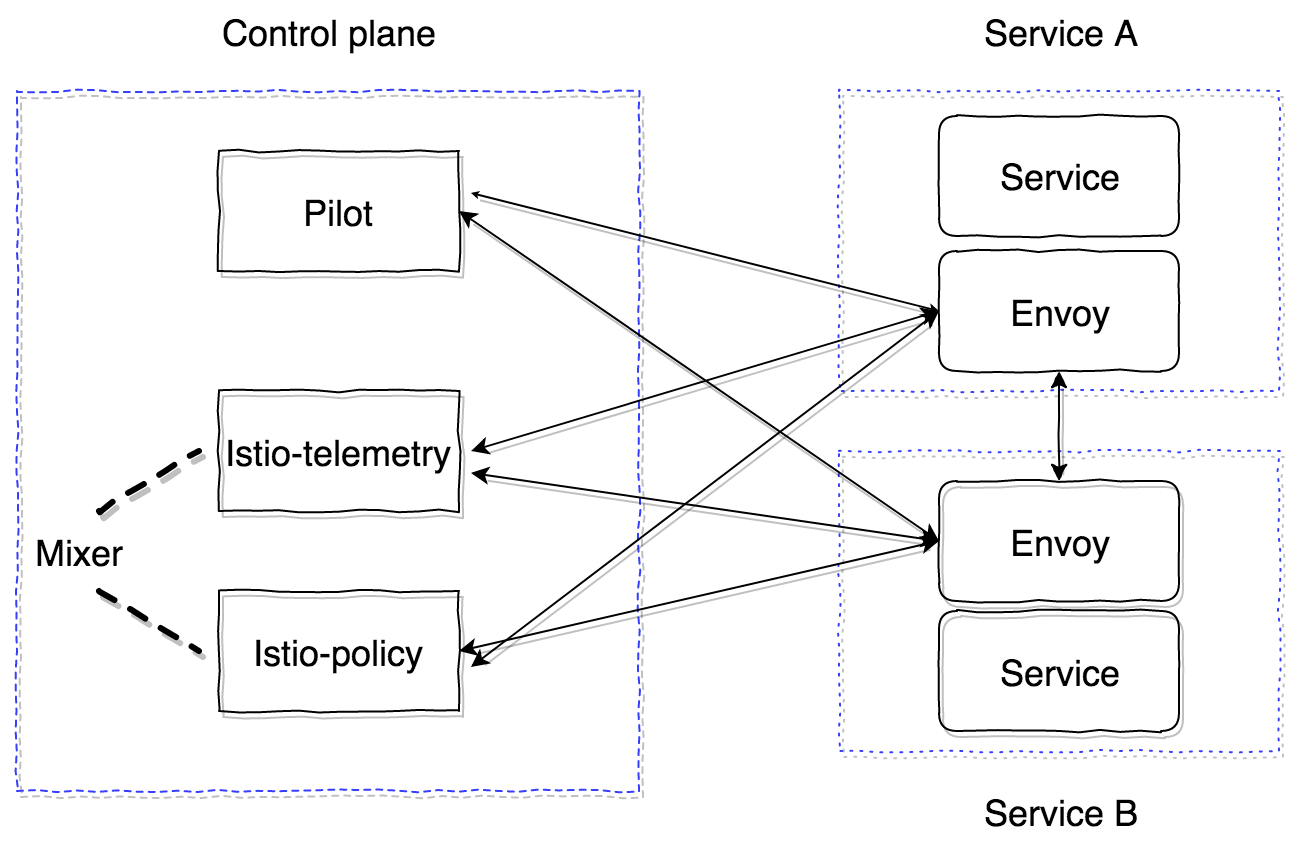

Principle of operation

Istio consists of two main parts: the control plane and the data plane. The control plane contains the core components that keep everything else working correctly. In the current version (1.0), the control plane has three main components: Pilot, Mixer, and Citadel. We will not consider Citadel here; it generates the certificates used for mutual TLS between services. Let's take a closer look at the design and purpose of Pilot and Mixer.


Pilot is the main control component. It distributes all the information about the cluster: services, their endpoints, and routing rules (for example, rules for canary deployments or circuit breakers).
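A circuit breaker rule of the kind Pilot distributes might look like the following sketch. The service name `payments` and all thresholds are illustrative assumptions. The `connectionPool` section limits the load Envoy sends to the service. The `outlierDetection` section temporarily ejects endpoints that keep returning errors.

```yaml
apiVersion: networking.istio.io/v1alpha3
kind: DestinationRule
metadata:
  name: payments
spec:
  host: payments                     # hypothetical service name
  trafficPolicy:
    connectionPool:
      tcp:
        maxConnections: 100          # max TCP connections to the service
      http:
        http1MaxPendingRequests: 10  # requests queued while waiting for a connection
        maxRequestsPerConnection: 1  # setting this to 1 disables keep-alive
    outlierDetection:
      consecutiveErrors: 5           # eject an endpoint after 5 consecutive errors
      interval: 10s                  # how often endpoints are analyzed
      baseEjectionTime: 30s          # minimum ejection duration
      maxEjectionPercent: 50         # never eject more than half of the endpoints
```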

Mixer is an optional control plane component that collects metrics, logs, and any other information about network interactions. It also enforces policy rules and rate limits.

The data plane is implemented with sidecar proxy containers. By default, the powerful Envoy proxy is used. It can be replaced with another implementation, for example nginx (nginmesh).

To make Istio completely transparent to applications, there is an automatic sidecar injection system. The latest implementation works with Kubernetes 1.9+ (mutating admission webhook). For Kubernetes 1.7 and 1.8, Initializers can be used instead.
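With webhook-based injection, sidecars are typically enabled per namespace with a label, and individual pods can opt out with an annotation. A sketch follows; the namespace `my-app`, the pod name, and the image are hypothetical. Injection happens when a pod is created, so pods that already exist only get a sidecar after they are recreated.

```yaml
# Pods created in this namespace get the sidecar injected automatically.
apiVersion: v1
kind: Namespace
metadata:
  name: my-app
  labels:
    istio-injection: enabled
---
# A pod in that namespace that explicitly opts out of injection.
apiVersion: v1
kind: Pod
metadata:
  name: no-sidecar
  namespace: my-app
  annotations:
    sidecar.istio.io/inject: "false"
spec:
  containers:
  - name: app
    image: nginx:1.15
```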

Sidecar containers connect to Pilot over gRPC, which lets changes in the cluster be pushed to the proxies efficiently. Envoy has supported gRPC-based configuration since version 1.6, and Istio has used it since version 0.8. On the sidecar side, this is handled by pilot-agent, a Go wrapper around Envoy that configures its launch parameters.

Pilot and Mixer are completely stateless; all their state is kept in memory. Their configuration is provided as Kubernetes Custom Resources, which are stored in etcd.

The istio-agent gets the Pilot address and opens a gRPC stream to it.

As I said, Istio implements all of this functionality completely transparently to applications. Let's see how. The algorithm is as follows:

- A new version of a service is deployed.

- Depending on the sidecar injection approach, the istio-init container and the istio-agent container (envoy) are either added when the configuration is applied, or they are already inserted manually into the Pod spec of the Kubernetes entity.

- The istio-init container runs a script that applies iptables rules in the pod. There are two ways to route traffic into the istio-agent container: iptables redirect rules or TPROXY. At the time of writing, redirect rules are the default. In istio-init you can configure exactly which traffic is intercepted and routed to istio-agent. For example, to intercept all incoming and all outgoing traffic, set both the `-i` and `-b` parameters to `*`. You can also list specific ports to intercept. To exclude a particular subnet from interception, pass it with the `-x` flag.

- After the init containers have finished, the main containers start, including pilot-agent (envoy). It connects over gRPC to the already deployed Pilot and receives information about all existing services and routing policies in the cluster. Based on this data, it configures clusters and populates them directly with the endpoints of our applications in the Kubernetes cluster. An important point: envoy dynamically configures listeners (IP and port pairs) and starts listening on them. Therefore, when requests enter the pod and the iptables redirect rules send them to the sidecar, envoy can already handle these connections and knows where to proxy the traffic next. At this stage, information is also sent to Mixer, which we will look at later, and tracing spans are sent.
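As an example of these flags, the following istio-init arguments keep intercepting all inbound and outbound traffic but let requests to one subnet bypass envoy. The CIDR `10.10.0.0/16` is a placeholder. The `-x` exclusion only takes effect when `-i` is `*`.

```yaml
initContainers:
- name: istio-init
  image: istio/proxy_init:1.0.0
  args:
  - -p
  - "15001"          # port envoy listens on for redirected traffic
  - -u
  - "1337"           # UID of the proxy; its own traffic is not redirected
  - -m
  - REDIRECT         # inbound interception mode (REDIRECT or TPROXY)
  - -i
  - '*'              # intercept all outbound IP ranges...
  - -x
  - "10.10.0.0/16"   # ...except this placeholder subnet
  - -b
  - '*'              # intercept all inbound ports
  securityContext:
    capabilities:
      add:
      - NET_ADMIN    # required to modify iptables rules in the pod
```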

As a result, we get a whole network of envoy proxies that we can configure from a single point (Pilot). All inbound and outbound requests go through envoy. Note that only TCP traffic is intercepted. This means that the Kubernetes service IP is resolved through kube-dns over UDP without any changes. After the name is resolved, envoy intercepts and processes the outgoing request and decides which endpoint to send it to. It may also decide not to send it at all, for example because of access policies or because the circuit breaker has tripped.

Now that Pilot is clear, we need to understand how Mixer works and why it is needed. The official documentation on it is here.

In its current form, Mixer consists of two components: istio-telemetry and istio-policy (before version 0.8, this was a single istio-mixer component). Both are Mixer instances, each responsible for its own task. istio-telemetry receives Report requests over gRPC from the sidecar containers, describing who called whom and with what parameters. istio-policy receives Check requests to verify that policy rules are satisfied. The policy check is not performed for every request. Instead, its result is cached on the client (in the sidecar) for a certain time. Reports are sent in batches. We will see later how to configure this and which parameters need to be sent.
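Here is a sketch of a deny rule that makes the Check flow more concrete. It uses the Istio 1.0 Mixer configuration model (`config.istio.io/v1alpha2`) and is modeled on the denial example in the Istio documentation. It assumes istio-policy is deployed and `mixerCheckServer` is set in the mesh config. The `app` labels `ratings` and `reviews` are illustrative. For every Check request that matches the rule, istio-policy returns a denial, and the sidecar rejects the call.

```yaml
# Handler: answers a matching Check with PERMISSION_DENIED (gRPC code 7).
apiVersion: config.istio.io/v1alpha2
kind: denier
metadata:
  name: denyhandler
  namespace: istio-system
spec:
  status:
    code: 7
    message: Not allowed
---
# Instance: the denier needs no request attributes.
apiVersion: config.istio.io/v1alpha2
kind: checknothing
metadata:
  name: denyrequest
  namespace: istio-system
spec: {}
---
# Rule: applies the handler to calls from "reviews" to "ratings".
apiVersion: config.istio.io/v1alpha2
kind: rule
metadata:
  name: deny-reviews-to-ratings
  namespace: istio-system
spec:
  match: destination.labels["app"] == "ratings" && source.labels["app"] == "reviews"
  actions:
  - handler: denyhandler.denier
    instances:
    - denyrequest.checknothing
```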

Mixer is meant to be a highly available component that ensures uninterrupted collection and processing of telemetry data. The result is a multi-level buffer. Data is first buffered on the sidecar side, then on the Mixer side, and is then sent to so-called Mixer backends. As a result, if any component of the system fails, the buffer grows, and it is flushed once the system recovers. Mixer backends are the endpoints that telemetry data is sent to: statsd, newrelic, and so on. You can write your own backend; it is quite simple, and we will see how to do it.

To summarize, the istio-telemetry workflow looks like this:

- Service 1 sends a request to service 2.

- When the request leaves service 1, it is intercepted by that service's sidecar.

- The envoy sidecar tracks the request to service 2 and prepares the necessary information.

- It then sends this information to istio-telemetry in a Report request.

- Istio-telemetry determines whether this Report should be sent to a backend, and if so, to which backends and with what data.

- Istio-telemetry sends Report data to the backend if necessary.
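In Mixer configuration, the decision "whether a Report goes to a backend, and with what data" is expressed as instances, handlers, and rules. The following sketch is modeled on the metrics-collection example from the Istio 1.0 documentation and uses Prometheus as the backend. The names `demorequestcount`, `demohandler`, and `demo_request_count` are illustrative. The instance maps request attributes to metric dimensions, the handler describes the backend metric, and the rule decides which Reports reach the handler.

```yaml
# Instance: builds a metric value from the attributes of each Report.
apiVersion: config.istio.io/v1alpha2
kind: metric
metadata:
  name: demorequestcount
  namespace: istio-system
spec:
  value: "1"
  dimensions:
    source: source.workload.name | "unknown"
    destination: destination.workload.name | "unknown"
    response_code: response.code | 200
  monitored_resource_type: '"UNSPECIFIED"'
---
# Handler: the Prometheus adapter exposes the values as a counter.
apiVersion: config.istio.io/v1alpha2
kind: prometheus
metadata:
  name: demohandler
  namespace: istio-system
spec:
  metrics:
  - name: demo_request_count
    instance_name: demorequestcount.metric.istio-system
    kind: COUNTER
    label_names:
    - source
    - destination
    - response_code
---
# Rule: only Reports for HTTP traffic are forwarded to the handler.
apiVersion: config.istio.io/v1alpha2
kind: rule
metadata:
  name: demo-requestcount-prometheus
  namespace: istio-system
spec:
  match: context.protocol == "http"
  actions:
  - handler: demohandler.prometheus
    instances:
    - demorequestcount.metric
```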

Now let's see how to deploy an Istio system that consists only of the core components (Pilot and the envoy sidecar).

First, let's look at the basic mesh configuration that Pilot reads:

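```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: istio
  namespace: istio-system
  labels:
    app: istio
    service: istio
data:
  mesh: |-
    # Whether tracing is enabled (in Pilot and the envoy sidecars)
    enableTracing: false
    # Mixer endpoints for the sidecars' Check and Report requests
    # (commented out: Mixer is not deployed in this minimal setup)
    #mixerCheckServer: istio-policy.istio-system:15004
    #mixerReportServer: istio-telemetry.istio-system:15004
    # How often envoy polls Pilot for route updates
    rdsRefreshDelay: 5s
    # Default settings for the envoy sidecars
    defaultConfig:
      # Same as rdsRefreshDelay, but for service discovery
      discoveryRefreshDelay: 5s
      # Config directory and binary paths (inside the envoy container)
      configPath: "/etc/istio/proxy"
      binaryPath: "/usr/local/bin/envoy"
      # Service cluster name of the sidecar (shown in logs, metrics, tracing spans)
      serviceCluster: istio-proxy
      # How long envoy drains connections and waits for the parent
      # process to shut down during a hot restart
      drainDuration: 45s
      parentShutdownDuration: 1m0s
      # Interception mode: iptables REDIRECT by default; TPROXY is also possible
      #interceptionMode: REDIRECT
      # Port of the sidecar (envoy) admin interface
      proxyAdminPort: 15000
      # Address that traces are sent to in Zipkin format
      zipkinAddress: tracing-collector.tracing:9411
      # statsd address for envoy metrics (optional)
      # statsdUdpAddress: aggregator:8126
      # Mutual TLS to the control plane is disabled
      controlPlaneAuthPolicy: NONE
      # Address of istio-pilot, which serves service discovery to the sidecars
      discoveryAddress: istio-pilot.istio-system:15007
```

All the main control plane components will be placed in the istio-system namespace in Kubernetes.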

At a minimum, we only need to deploy Pilot. For this, we use this configuration.

We then configure sidecar injection manually.

Init container:

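```yaml
initContainers:
- name: istio-init
  args:
  - -p
  - "15001"    # port envoy listens on for redirected traffic
  - -u
  - "1337"     # UID of the proxy; its own traffic is not redirected
  - -m
  - REDIRECT   # inbound interception mode (REDIRECT or TPROXY)
  - -i
  - '*'        # outbound IP ranges to intercept ("*" = all)
  - -b
  - '*'        # inbound ports to intercept ("*" = all)
  - -d
  - ""         # inbound ports to exclude (none)
  image: istio/proxy_init:1.0.0
  imagePullPolicy: IfNotPresent
  resources:
    limits:
      memory: 128Mi
  securityContext:
    capabilities:
      add:
      - NET_ADMIN   # required to modify iptables rules in the pod
```

And the sidecar: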

```yaml
- name: istio-proxy
  # "command" (not "args") overrides the image entrypoint (pilot-agent),
  # so bash runs the script below
  command:
  - "bash"
  - "-c"
  - |
    exec /usr/local/bin/pilot-agent proxy sidecar \
      --configPath /etc/istio/proxy \
      --binaryPath /usr/local/bin/envoy \
      --serviceCluster service-name \
      --drainDuration 45s \
      --parentShutdownDuration 1m0s \
      --discoveryAddress istio-pilot.istio-system:15007 \
      --discoveryRefreshDelay 1s \
      --connectTimeout 10s \
      --proxyAdminPort "15000" \
      --controlPlaneAuthPolicy NONE
  env:
  # Pod metadata passed to pilot-agent through the Downward API
  - name: POD_NAME
    valueFrom:
      fieldRef:
        fieldPath: metadata.name
  - name: POD_NAMESPACE
    valueFrom:
      fieldRef:
        fieldPath: metadata.namespace
  - name: INSTANCE_IP
    valueFrom:
      fieldRef:
        fieldPath: status.podIP
  - name: ISTIO_META_POD_NAME
    valueFrom:
      fieldRef:
        fieldPath: metadata.name
  - name: ISTIO_META_INTERCEPTION_MODE
    value: REDIRECT
  image: istio/proxyv2:1.0.0
  imagePullPolicy: IfNotPresent
  resources:
    requests:
      cpu: 100m
      memory: 128Mi
    limits:
      memory: 2048Mi
  securityContext:
    privileged: false
    readOnlyRootFilesystem: true
    # Must match the -u value given to istio-init so envoy's own traffic is not redirected
    runAsUser: 1337
  volumeMounts:
  - mountPath: /etc/istio/proxy
    name: istio-envoy
```

For everything to start successfully, you also need a ServiceAccount, ClusterRole, ClusterRoleBinding, and the CRDs for Pilot; their descriptions can be found here.
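The istio-proxy container mounts a volume named `istio-envoy`, which also has to be declared at the Pod level. Because the container's root filesystem is read-only, pilot-agent needs this writable directory for the envoy configuration it generates under `/etc/istio/proxy`. Here is a minimal sketch, assuming an in-memory `emptyDir` like the one Istio's own injection template uses:

```yaml
# Pod spec fragment: the volume referenced by the istio-proxy volumeMounts.
volumes:
- name: istio-envoy
  emptyDir:
    medium: Memory   # tmpfs-backed, discarded when the pod goes away
```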

As a result, the service into which we inject the envoy sidecar should start successfully, receive all discovery data from Pilot, and process requests.

It is important to understand that all control plane components are stateless applications and can be scaled horizontally without problems. All data is stored in etcd as Kubernetes custom resources.
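Because Pilot keeps no state of its own, a standard HorizontalPodAutoscaler can scale it. This sketch assumes Pilot runs as a Deployment named `istio-pilot` in `istio-system`, with CPU requests set and a metrics source available in the cluster, since CPU-based autoscaling needs both. The replica counts and the threshold are illustrative.

```yaml
apiVersion: autoscaling/v1
kind: HorizontalPodAutoscaler
metadata:
  name: istio-pilot
  namespace: istio-system
spec:
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: istio-pilot       # assumed Deployment name
  minReplicas: 2            # keep at least two replicas for availability
  maxReplicas: 5
  targetCPUUtilizationPercentage: 80
```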

Istio can also (so far experimentally) run outside the cluster, and it can watch and share service discovery across several Kubernetes clusters. Read more about it here.

In a multicluster installation, the following limitations should be taken into account:

- Pod CIDRs and Service CIDRs must be unique across all clusters and must not overlap.

- All Pod CIDRs must be reachable from every Pod CIDR across clusters.

- All Kubernetes API servers must be accessible to each other.

This is the initial information that will help you get started with Istio. However, there are still many pitfalls. Examples include the specifics of routing external traffic (out of the cluster), approaches to debugging sidecars, profiling, setting up Mixer and writing a custom Mixer backend, and setting up tracing and how it works with envoy.

We will cover all of this in the following publications. Ask your questions, and I will try to address them.

Source: https://habr.com/ru/post/419319/
