A quick note on running Vue.js in a Kubernetes cluster

This is a short note on how to package a Vue.js application into a Docker image and then run it as a container in Kubernetes.

What it does

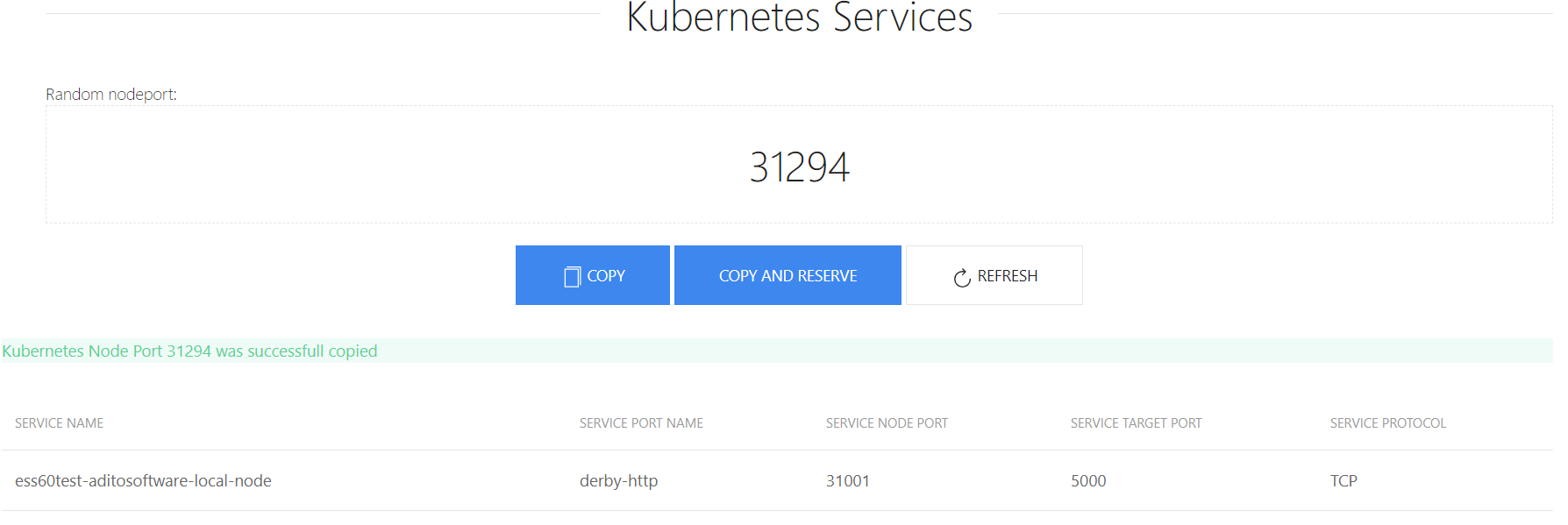

I wrote a small program that finds a free NodePort number. It doesn't do anything particularly useful, but it saves you from hunting for an unused port by hand, and it is an interesting example of how this can be done.

Getting started

The project consists of two parts: a frontend and a server. The frontend asks the server for a NodePort, and the server finds a free one via the Kubernetes API.
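One way the server side could pick a free port is sketched below. It assumes the server fetches the ServiceList JSON from /api/v1/services, collects every `nodePort` already allocated, and returns the lowest unused port in the configured range. The helper names and the lowest-first strategy are assumptions for illustration, not necessarily what k8s-nodeport-gen itself does.

```javascript
// Hypothetical helper: collect every nodePort already allocated in a
// Kubernetes ServiceList (the JSON returned by GET /api/v1/services).
function usedNodePorts(serviceList) {
  const used = new Set();
  for (const svc of serviceList.items || []) {
    const ports = (svc.spec && svc.spec.ports) || [];
    for (const port of ports) {
      // ClusterIP services have no nodePort, so skip those entries.
      if (port.nodePort) used.add(port.nodePort);
    }
  }
  return used;
}

// Hypothetical helper: return the lowest port in [start, end] that no
// service uses yet, or null if the whole range is taken.
function findFreeNodePort(serviceList, start, end) {
  const used = usedNodePorts(serviceList);
  for (let p = start; p <= end; p++) {
    if (!used.has(p)) return p;
  }
  return null;
}

// Hand-written ServiceList for illustration (not real cluster data).
const sample = {
  items: [
    { spec: { ports: [{ port: 80, nodePort: 30000 }] } },
    { spec: { ports: [{ port: 443, nodePort: 30001 }, { port: 8080 }] } },
  ],
};
console.log(findFreeNodePort(sample, 30000, 32000)); // 30002
```

Picking the lowest free port keeps the result deterministic; a random pick from the free set would be an equally valid design if several clients might ask at the same time.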

For all of this to work in Docker, some settings have to be moved out of the application code into environment variables: the Kubernetes API address, port, token, and so on.

It looks like this:

k8s-nodeport-gen/server/index.js:

```javascript
var k8sInfo = {
  url: process.env.K8SURL,
  port: process.env.K8SPORT,
  timeout: process.env.K8STIMEOUT || '30',
  respath: process.env.RESSPATH || '/api/v1/services',
  token: process.env.K8STOKEN,
  nodePortStart: process.env.K8SPORTSTART || '30000',
  nodePortEnd: process.env.K8SPORTEND || '32000'
}

app.listen(process.env.PORT || 8081)
```

Let's say that everything has been tested and the application works.
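Environment variables always arrive as strings, so values such as the port range need converting to numbers before they are compared. A minimal sketch of building the same settings object from an environment map follows; the `loadK8sConfig` name and the choice of which variables are mandatory are assumptions for illustration.

```javascript
// Hypothetical helper: builds the same settings as k8sInfo from an
// environment map (normally process.env), converting numeric strings.
function loadK8sConfig(env) {
  // Assumption: these three have no sensible default, so fail early.
  const required = ["K8SURL", "K8SPORT", "K8STOKEN"];
  const missing = required.filter((name) => !env[name]);
  if (missing.length > 0) {
    throw new Error("Missing environment variables: " + missing.join(", "));
  }
  return {
    url: env.K8SURL,
    port: Number(env.K8SPORT),
    timeout: Number(env.K8STIMEOUT || "30"),
    respath: env.RESSPATH || "/api/v1/services",
    token: env.K8STOKEN,
    nodePortStart: Number(env.K8SPORTSTART || "30000"),
    nodePortEnd: Number(env.K8SPORTEND || "32000"),
  };
}

// Usage with a hand-written environment (the token is a placeholder).
const cfg = loadK8sConfig({
  K8SURL: "ceku.server.local",
  K8SPORT: "6443",
  K8STOKEN: "placeholder-token",
});
console.log(cfg.port, cfg.nodePortStart, cfg.nodePortEnd); // 6443 30000 32000
```

In the server this would be called once at startup as `loadK8sConfig(process.env)`, so a missing setting shows up immediately instead of on the first API request.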

Creating a Docker image

Anyone who has worked with Vue.js knows that a project contains a lot of files; I can't say what all of them are for, but you do have to deal with them. Fortunately, vue-cli makes packaging quite simple. Let's build everything:

```
npm run build
```

After that, the "dist" folder and the "index.html" file appear in "k8s-nodeport-gen/client", and they are all we need at runtime. The frontend needs some HTTP server to serve it, but here there is also a backend that has to run anyway, so in my case the Node.js Express server doubles as the HTTP server.

The built files will live in the k8s-nodeport-gen/public folder. To serve them, add the following to server/index.js:

```javascript
app.use(express.static(__dirname + '/public'))
```

Now that the files are sorted out, we can write a Dockerfile. From the frontend we only need the files built into the "dist" folder, so we will use the relatively new multi-stage build feature.

```dockerfile
FROM node:10-alpine AS base
COPY client /portgen/client
COPY server /portgen/server
WORKDIR /portgen
RUN cd client && npm i && npm run build

FROM node:10-alpine
WORKDIR /portgen
COPY server/index.js /portgen/index.js
COPY server/package.json /portgen/package.json
COPY --from=base /portgen/client/dist ./public
RUN npm i
CMD ["/usr/local/bin/node", "/portgen/index.js"]
```

In other words, the first stage runs "npm run build", and the second stage copies the files from "dist" into "public". The result is a 95 MB image.

Now we have a Docker image, which I have already pushed to hub.docker.com.

Launch

Now we want to run this image in Kubernetes. We also need a token that is allowed to see, through the Kubernetes API, which ports are already in use.

To get one, you need to create a service account, a role (an existing one will do), and a role binding.

The cluster already has a ClusterRole called "view":

```
ceku@ceku1> kubectl describe clusterrole view
Name:         view
Labels:       kubernetes.io/bootstrapping=rbac-defaults
Annotations:  rbac.authorization.kubernetes.io/autoupdate=true
PolicyRule:
  Resources                                Non-Resource URLs  Resource Names  Verbs
  ---------                                -----------------  --------------  -----
  bindings                                 []                 []              [get list watch]
  configmaps                               []                 []              [get list watch]
  endpoints                                []                 []              [get list watch]
  events                                   []                 []              [get list watch]
  limitranges                              []                 []              [get list watch]
  namespaces                               []                 []              [get list watch]
  namespaces/status                        []                 []              [get list watch]
  persistentvolumeclaims                   []                 []              [get list watch]
  pods                                     []                 []              [get list watch]
  pods/log                                 []                 []              [get list watch]
  pods/status                              []                 []              [get list watch]
  replicationcontrollers                   []                 []              [get list watch]
  replicationcontrollers/scale             []                 []              [get list watch]
  replicationcontrollers/status            []                 []              [get list watch]
  resourcequotas                           []                 []              [get list watch]
  resourcequotas/status                    []                 []              [get list watch]
  serviceaccounts                          []                 []              [get list watch]
  services                                 []                 []              [get list watch]
  daemonsets.apps                          []                 []              [get list watch]
  deployments.apps                         []                 []              [get list watch]
  deployments.apps/scale                   []                 []              [get list watch]
  replicasets.apps                         []                 []              [get list watch]
  replicasets.apps/scale                   []                 []              [get list watch]
  statefulsets.apps                        []                 []              [get list watch]
  horizontalpodautoscalers.autoscaling     []                 []              [get list watch]
  cronjobs.batch                           []                 []              [get list watch]
  jobs.batch                               []                 []              [get list watch]
  daemonsets.extensions                    []                 []              [get list watch]
  deployments.extensions                   []                 []              [get list watch]
  deployments.extensions/scale             []                 []              [get list watch]
  ingresses.extensions                     []                 []              [get list watch]
  networkpolicies.extensions               []                 []              [get list watch]
  replicasets.extensions                   []                 []              [get list watch]
  replicasets.extensions/scale             []                 []              [get list watch]
  replicationcontrollers.extensions/scale  []                 []              [get list watch]
  networkpolicies.networking.k8s.io        []                 []              [get list watch]
  poddisruptionbudgets.policy              []                 []              [get list watch]
```

Now let's create the service account and the role binding.
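Whichever role you choose, the row that matters for this server is `services`: listing Services through GET /api/v1/services needs the `list` verb, and because that path covers all namespaces, the permission has to come through a ClusterRoleBinding rather than a namespaced RoleBinding. A simplified sketch of the check the API server performs; `roleAllows` and the rule layout are hypothetical, and real PolicyRules also carry API groups, which this ignores.

```javascript
// Simplified copies of a few rows from `kubectl describe clusterrole view`.
// Real PolicyRules also list apiGroups; this sketch ignores them.
const viewRules = [
  { resources: ["services"], verbs: ["get", "list", "watch"] },
  { resources: ["pods", "pods/log"], verbs: ["get", "list", "watch"] },
];

// Hypothetical helper: does any rule grant `verb` on `resource`?
function roleAllows(rules, resource, verb) {
  return rules.some(
    (rule) => rule.resources.includes(resource) && rule.verbs.includes(verb)
  );
}

// Listing Services (GET /api/v1/services) maps to the "list" verb.
console.log(roleAllows(viewRules, "services", "list")); // true
console.log(roleAllows(viewRules, "services", "create")); // false
```

Since "view" is read-only, the token cannot change anything in the cluster, which is exactly what a port-lookup tool should be limited to.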

account_portng.yml:

```yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: portng-service-get
  namespace: internal
  labels:
    k8s-app: portng-service-get
    kubernetes.io/cluster-service: "true"
```

rolebindng_portng.yml:

```yaml
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  namespace: internal  # ignored: ClusterRoleBindings are cluster-scoped
  name: view
  labels:
    k8s-app: portng-service-get
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
subjects:
- kind: ServiceAccount
  name: portng-service-get
  namespace: internal  # must match the ServiceAccount's namespace
  apiGroup: ""
roleRef:
  kind: ClusterRole
  name: view
  apiGroup: rbac.authorization.k8s.io
```

Now we have an account, and it has a token. The name of the token's secret is listed in the account:

```
ceku@ceku1 /a/r/aditointernprod.aditosoftware.local> kubectl get serviceaccount portng-service-get -n internal -o yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  creationTimestamp: 2018-08-02T07:31:54Z
  labels:
    k8s-app: portng-service-get
    kubernetes.io/cluster-service: "true"
  name: portng-service-get
  namespace: internal
  resourceVersion: "7270593"
  selfLink: /api/v1/namespaces/internal/serviceaccounts/portng-service-get
  uid: 2153dfa0-9626-11e8-aaa3-ac1f6b664c1c
secrets:
- name: portng-service-get-token-vr5bj
```

Now all that is left is to write the deployment, service, and ingress for the page. Let's start:

deploy_portng.yml

```yaml
apiVersion: apps/v1beta1 # for versions before 1.6.0 use extensions/v1beta1
kind: Deployment
metadata:
  namespace: internal
  name: portng.server.local
spec:
  replicas: 1
  template:
    metadata:
      labels:
        app: portng.server.local
    spec:
      serviceAccountName: portng-service-get
      containers:
      - name: portgen
        image: de1m/k8s-nodeport-gen
        env:
        - name: K8SURL
          value: ceku.server.local
        - name: K8SPORT
          value: '6443'
        - name: K8STIMEOUT
          value: '30'
        - name: RESSPATH
          value: '/api/v1/services'
        - name: K8SPORTSTART
          value: '30000'
        - name: K8SPORTEND
          value: '32000'
        - name: PORT
          value: '8080'
        args:
        - /bin/sh
        - -c
        - export K8STOKEN=$(cat /var/run/secrets/kubernetes.io/serviceaccount/token) && node /portgen/index.js
```

Two things deserve attention here: "serviceAccountName: portng-service-get", and the Kubernetes token, or more precisely, how I pass it in. Kubernetes mounts the service account's token into the pod at /var/run/secrets/kubernetes.io/serviceaccount/token, and the shell wrapper exports it as K8STOKEN before starting node.
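As an alternative to the shell wrapper, the server could read the mounted token file itself and attach it as a bearer token. A minimal sketch, assuming the standard mount path used in the args above; `readServiceAccountToken` and `buildServicesRequest` are hypothetical helpers, the returned object is shaped as options for Node's `https.request()`, and treating K8STIMEOUT as seconds is an assumption.

```javascript
const fs = require("fs");

// Default location where Kubernetes mounts the service account token.
const TOKEN_PATH = "/var/run/secrets/kubernetes.io/serviceaccount/token";

// Hypothetical helper: read the token, stripping any trailing newline.
function readServiceAccountToken(path = TOKEN_PATH) {
  return fs.readFileSync(path, "utf8").trim();
}

// Hypothetical helper: options for https.request() that list all Services
// using the k8sInfo settings and the token as a bearer credential.
function buildServicesRequest(k8sInfo, token) {
  return {
    hostname: k8sInfo.url,
    port: Number(k8sInfo.port),
    path: k8sInfo.respath,
    method: "GET",
    // Assumption: K8STIMEOUT is in seconds; https.request expects milliseconds.
    timeout: Number(k8sInfo.timeout) * 1000,
    headers: {
      Authorization: "Bearer " + token,
      Accept: "application/json",
    },
  };
}
```

Inside the pod this would be used as `https.request(buildServicesRequest(k8sInfo, readServiceAccountToken()), callback)`. A real client should also trust the cluster CA, which Kubernetes mounts next to the token as `ca.crt`.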

Now we will write the service:

svc_portng.yml

```yaml
apiVersion: v1
kind: Service
metadata:
  name: portng-server-local
  namespace: internal
spec:
  ports:
  - name: http
    port: 8080
    targetPort: 8080
  selector:
    app: portng.server.local
```

And the ingress (this requires an ingress controller to be installed in the cluster):

ingress_portng.yaml:

```yaml
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: portng.aditosoftware.local
  namespace: internal
  annotations:
    kubernetes.io/ingress.class: "internal"
spec:
  rules:
  - host: portng.server.local
    http:
      paths:
      - path: /
        backend:
          serviceName: portng-server-local
          servicePort: 8080
```

That's it: all that remains is to upload the files to the server and start everything.
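Before applying the files, it is worth checking that the pieces point at each other: the Ingress backend names the Service (portng-server-local, port 8080), and the Service finds the pods through its selector, which must match the labels in the Deployment's pod template. For a Service, that match is equality on every selector key. A small sketch of both rules; `selectorMatches` and `backendMatches` are hypothetical helpers, not part of any Kubernetes client library.

```javascript
// Hypothetical helper: a Service selector matches a pod when every
// key/value pair in the selector appears in the pod's labels.
function selectorMatches(selector, podLabels) {
  const entries = Object.entries(selector);
  // A Service without a selector does not pick up pods automatically.
  if (entries.length === 0) return false;
  return entries.every(([key, value]) => podLabels[key] === value);
}

// Hypothetical helper: does an Ingress backend point at this Service/port?
function backendMatches(backend, service) {
  return (
    backend.serviceName === service.name &&
    service.ports.includes(backend.servicePort)
  );
}

// Values copied from svc_portng.yml, deploy_portng.yml and ingress_portng.yaml.
const service = {
  name: "portng-server-local",
  ports: [8080],
  selector: { app: "portng.server.local" },
};
const podTemplateLabels = { app: "portng.server.local" };
const ingressBackend = { serviceName: "portng-server-local", servicePort: 8080 };

console.log(selectorMatches(service.selector, podTemplateLabels)); // true
console.log(backendMatches(ingressBackend, service)); // true
```

Note that the Deployment is named with dots (portng.server.local) while the Service uses dashes (portng-server-local); only the label values have to agree, not the object names.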

The application can also run as a plain Docker container, or even without Docker at all, but you will still have to go through the Kubernetes service account setup.

Resources:

Docker image on hub.docker.com

Git repository on github.com

As you can see, there is nothing special in this article, but I think some readers will find it interesting.


Source: https://habr.com/ru/post/419033/
