Textures for a 64k intro: how we do it today

This article is the second part of our H - Immersion series. The first part can be read here: Immersion Immersion.

When an entire animation has to fit in 64 KB, using ready-made images is hard. Storing them the traditional way is not efficient enough, even with compression such as JPEG. The alternative is procedural generation: writing code that describes how to create the images while the program runs. Our implementation of this idea is a texture generator, a fundamental part of our toolchain. In this post we explain how we designed it and how we used it in H - Immersion.

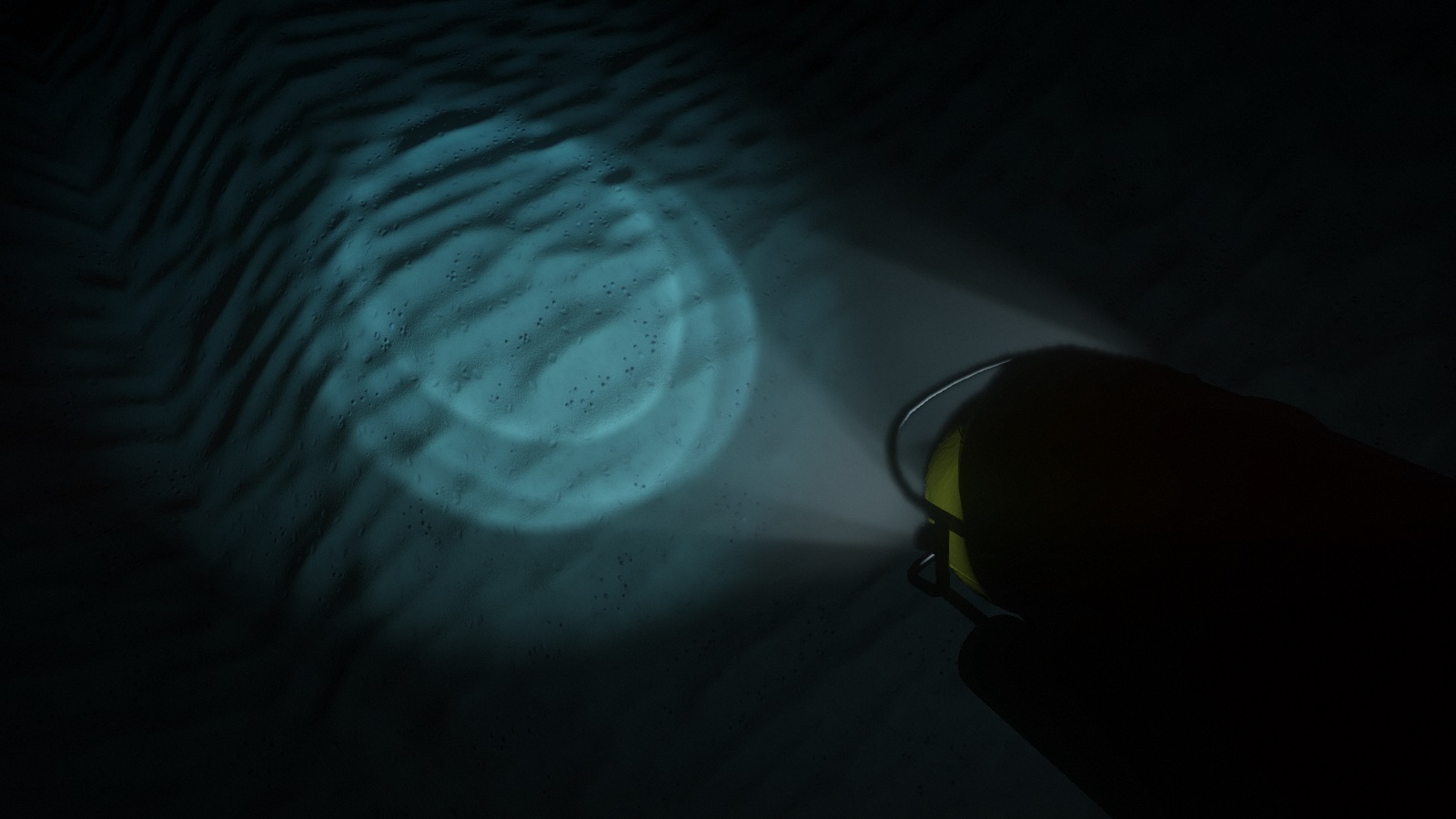

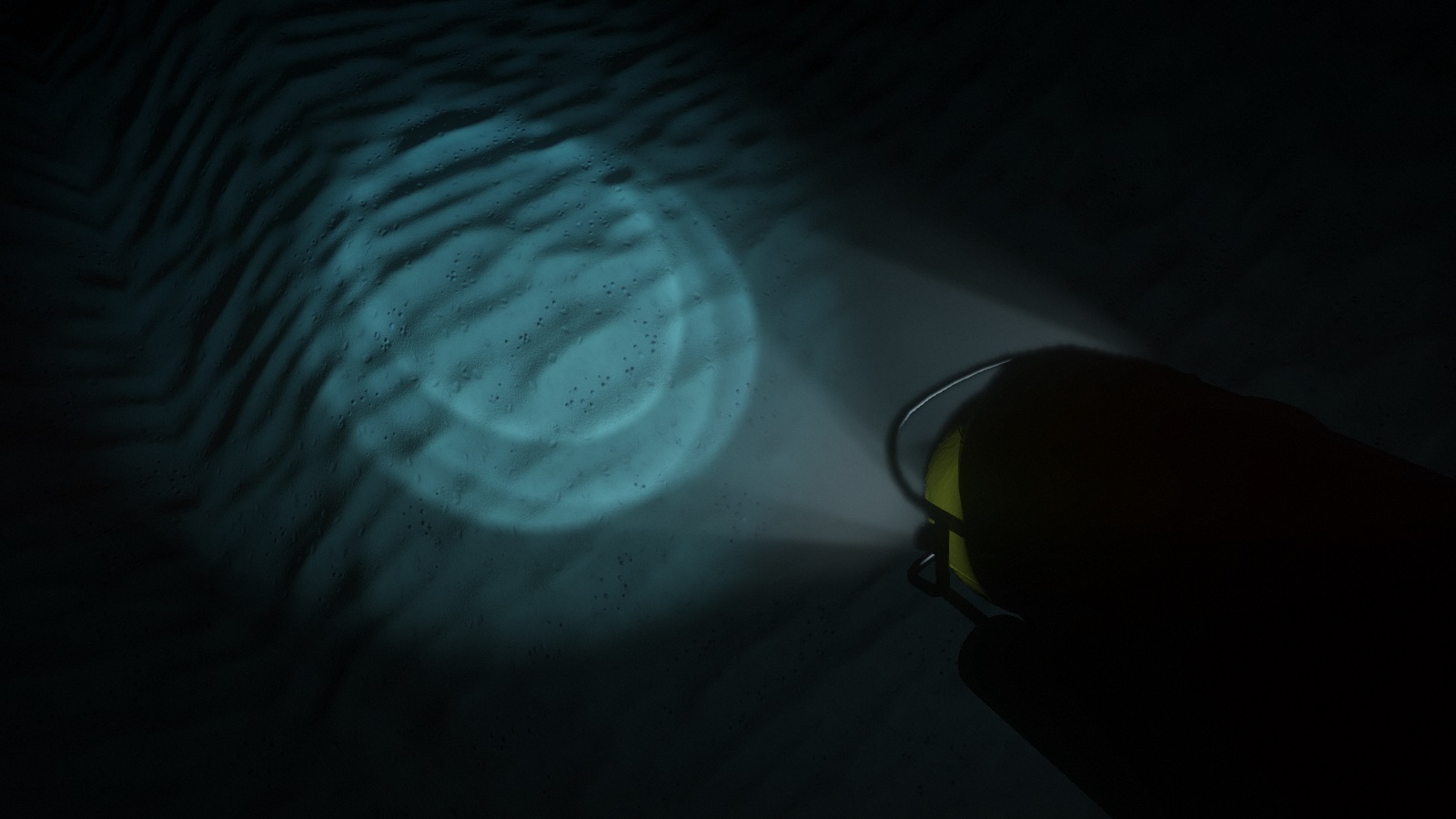

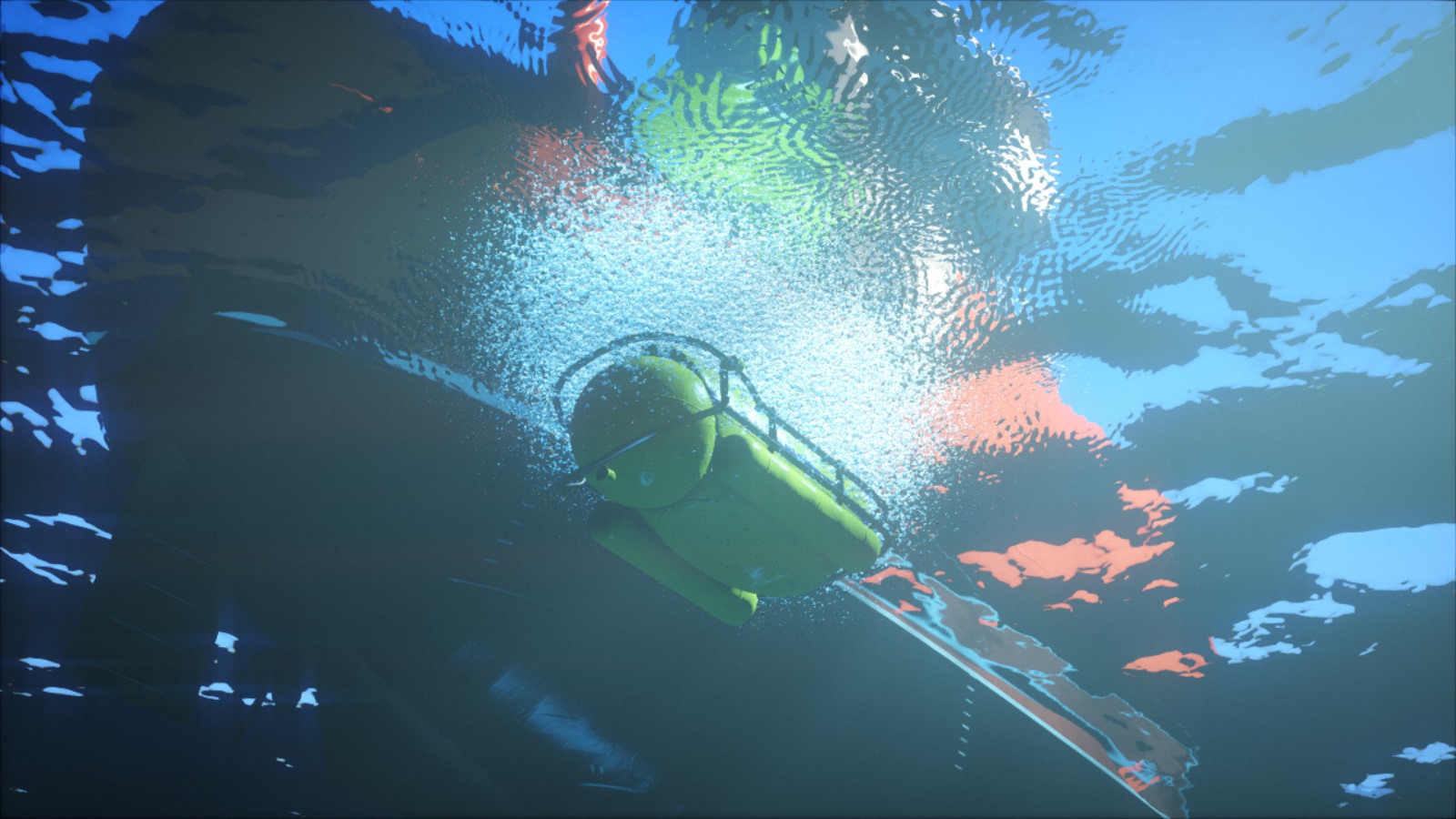

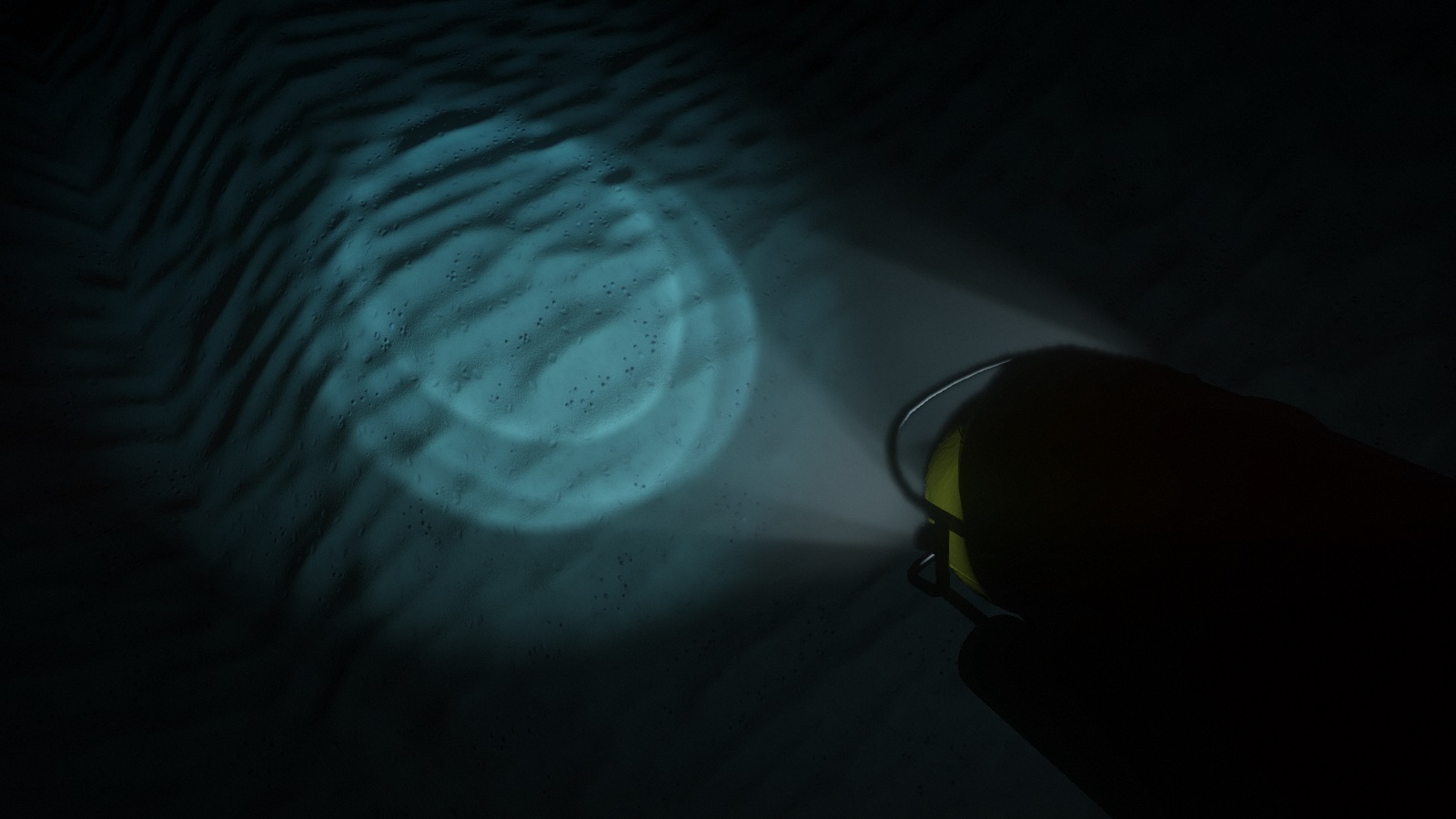

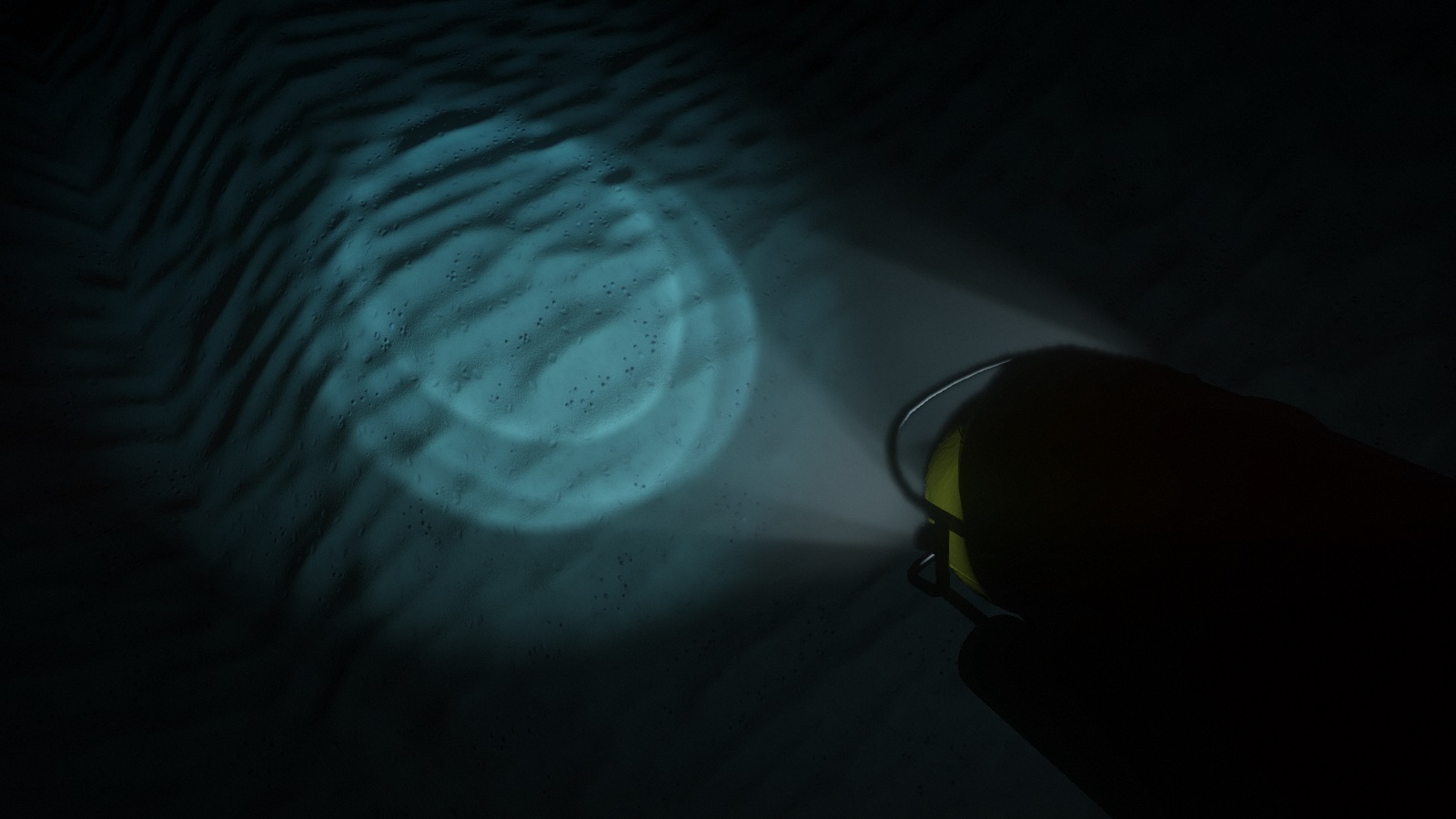

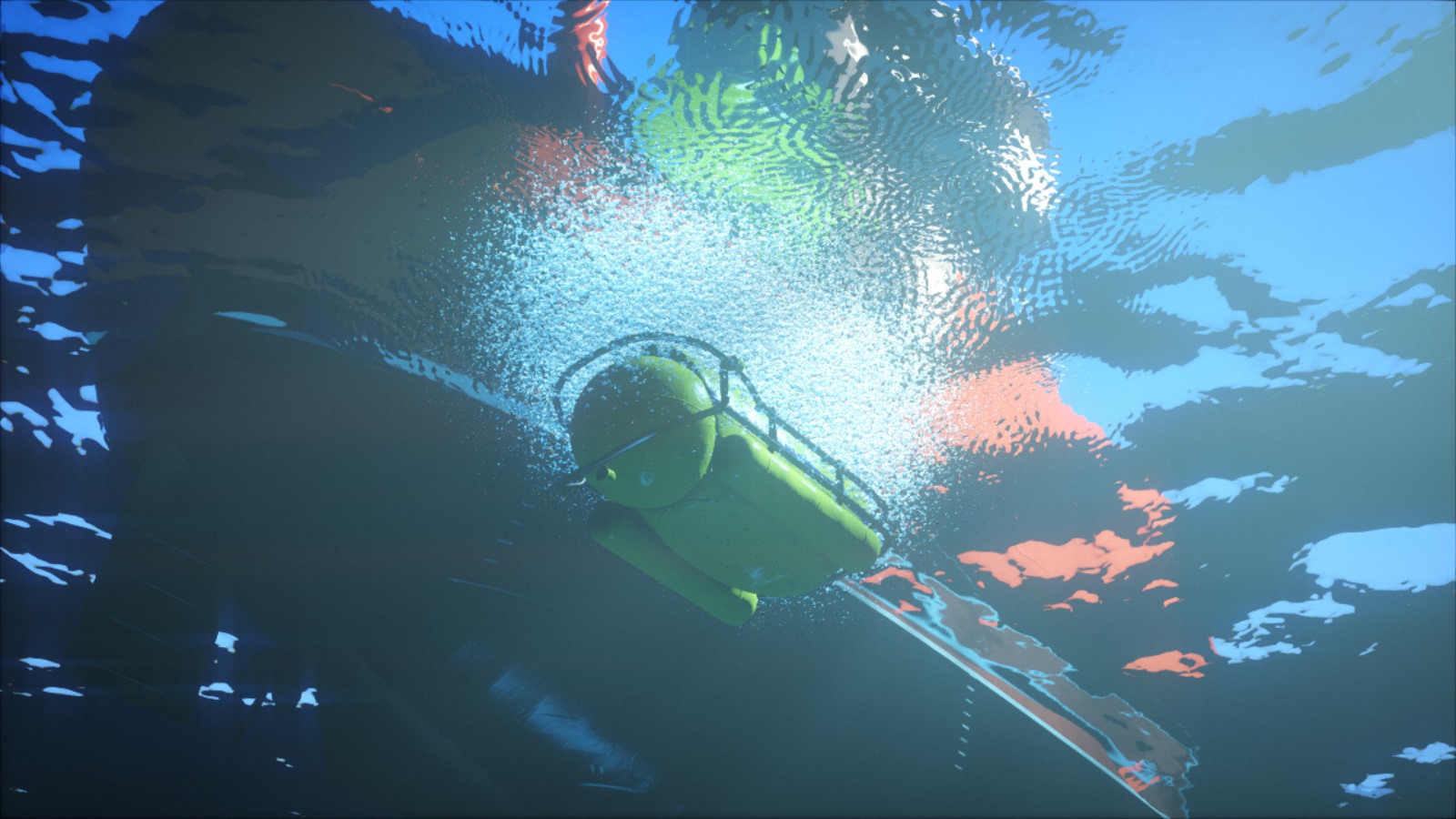

Submarine floodlights illuminate the details of the seabed.

Texture generation was one of the very first elements of our code base: procedural textures were already used in our first B - Incubation intro. The code consisted of a set of functions that fill, filter, transform and combine textures, as well as one large loop that bypasses all textures. These functions were written in pure C ++, but later the C API interaction was added so that they could be computed by the PicoC C interpreter . At that time, we used PicoC to reduce the time taken for each iteration: this was the way we managed to change and reload textures during program execution. Switching to subset C was a small sacrifice compared to the fact that now we could change the code and see the result immediately, without bothering to close, recompile, and reload the entire demo.

')

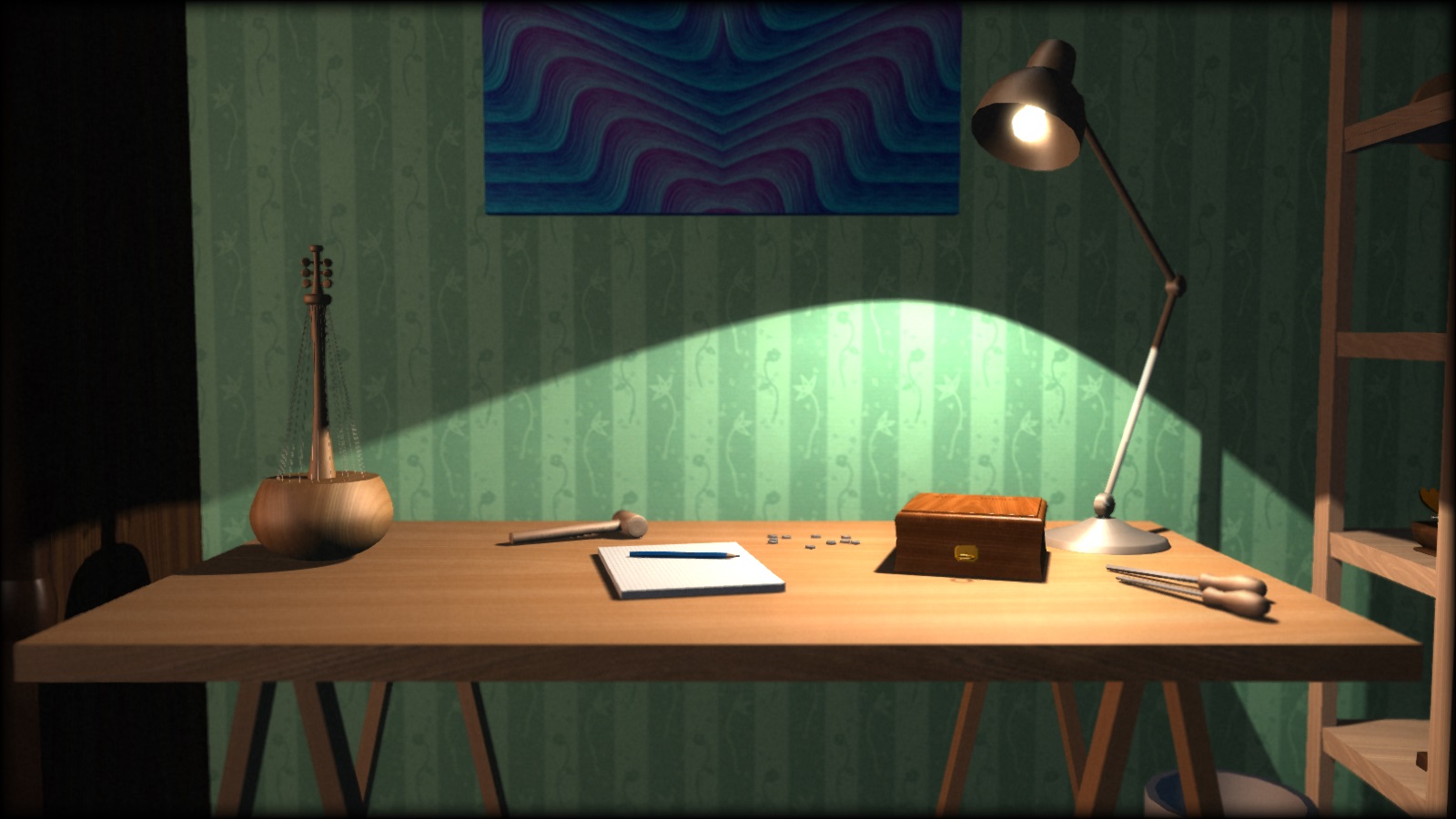

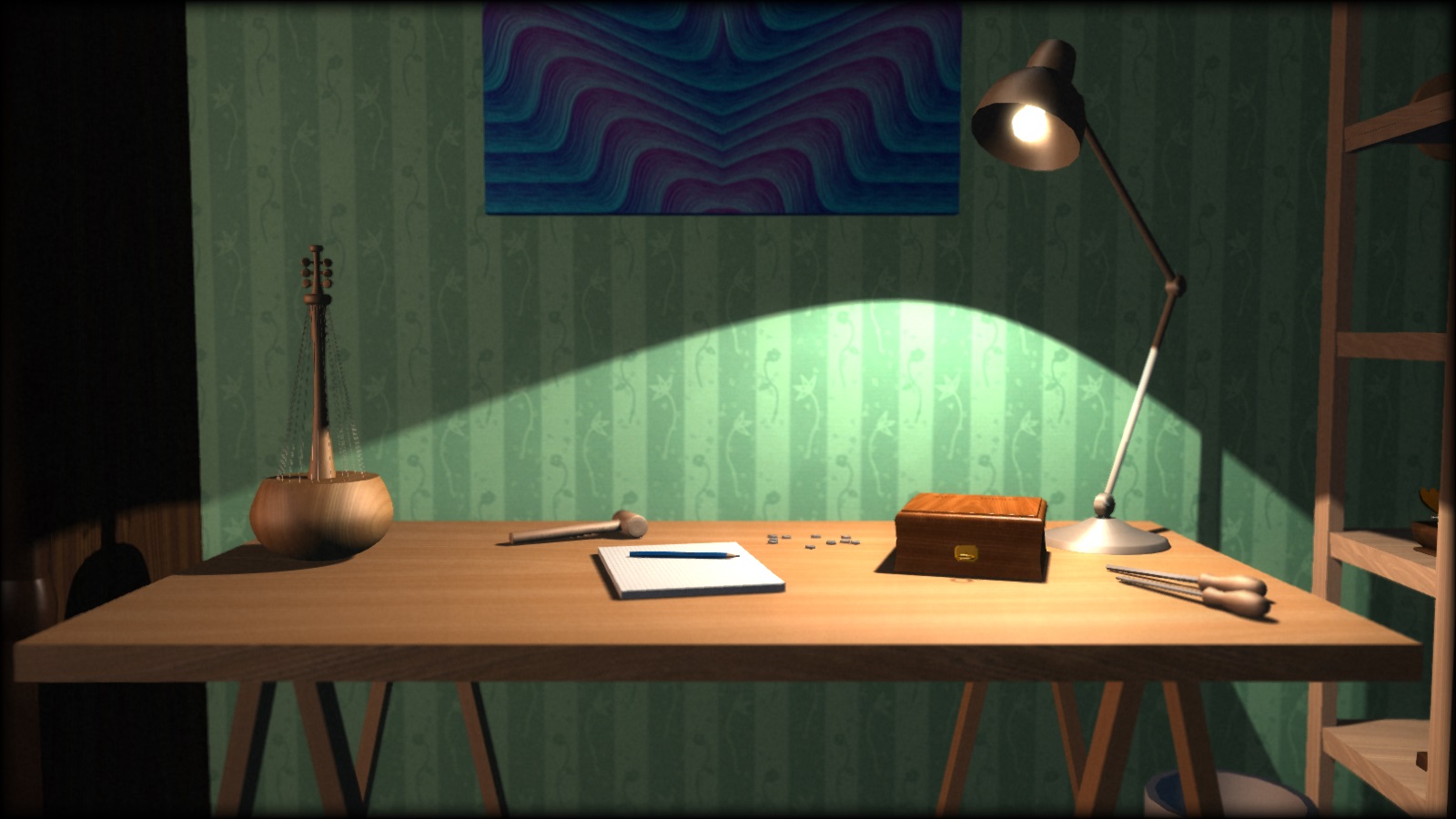

With the help of a simple pattern, a little noise and deformations, we can get a stylized wood texture.

In this scene from the F - Felix's workshop various wood textures were used.

For a while we explored the possibilities of this generator, and as a result we put it on a web server with a small PHP script and a simple web interface. We could write the texture code in the text field, and the script passed it to the generator, which then dumped the result as a PNG file for display on the page. Very soon, we started sketching right at work during the lunch break and sharing our small masterpieces with other members of the group. Such interaction strongly motivated us to the creative process.

Web gallery of our old texture generator. All textures can be edited in the browser.

For a long time, the texture generator almost did not change; we thought he was good, and our efficiency ceased to increase. But once we discovered that there are a lot of artists on Internet forums that demonstrate their fully procedurally generated textures, as well as arranging challenges on various topics. Procedural content was once a "trick" of the demo scene, but Allegorithmic , ShaderToy, and similar tools made it available to the general public. We did not pay attention to this, and they began to put us on the shoulder blades with ease. Unacceptable!

Fabric Couch . Fully procedural fabric texture created by Substance Designer. Posted by: Imanol Delgado. www.artstation.com/imanoldelgado

Forest Floor . Fully procedural forest soil texture created by Substance Designer. Posted by: Daniel Thiger. www.artstation.com/dete

We have long had to revise their tools. Fortunately, many years of work with the same texture generator has allowed us to realize its shortcomings. In addition, our nascent mesh generator also told us what the procedural content pipeline should look like.

The most important architectural error was the implementation of generating as a set of operations with texture objects. From a high-level perspective, this may be the right approach, but in terms of implementation, functions such as texture.DoSomething () or Combine (textureA, textureB) have serious drawbacks.

First, the OOP style requires declaring these functions as part of the API, no matter how simple they are. This is a serious problem because it doesn’t scale well and, more importantly, creates unnecessary friction in the creative process. We did not want to change the API every time we need to try something new. This complicates experimentation and limits creative freedom.

Secondly, from the point of view of performance, this approach requires processing texture data in cycles as many times as there are operations. This would not be particularly important if these operations were expensive in terms of the cost of accessing large chunks of memory, but this is usually not the case. With the exception of a very small fraction of operations, for example, Perlin noise generation or filling , they are basically very simple and require only a few instructions to a texture point. That is, we circumvented texture data to perform trivial operations, which is extremely inefficient from the point of view of caching.

The new structure solves these problems through the reorganization of logic. Most of the functions in practice independently perform the same operation for each texture element. Therefore, instead of writing the texture.DoSomething () function, bypassing all the elements, we can write texture.ApplyFunction (f) , where f (element) works only for a single texture element. Then f (element) can be written according to a specific texture.

This seems like a minor change. However, such a structure simplifies the API, makes the generation code more flexible and expressive, more cache-friendly, and allows parallel processing to be easy. Many of the readers have already understood that this is essentially a shader. However, the implementation in fact remains the C ++ code executed in the processor. We still retain the ability to perform operations outside the cycle, but use this option only when necessary, for example, performing a convolution.

It takes time to generate textures, and an obvious candidate to reduce this time is parallel code execution. At the very least, you can learn to generate multiple textures at the same time. This is what we did for the F - Felix's workshop , and this greatly reduced the load time.

However, this does not save time where it is needed most. Still, it takes a long time to generate one texture. This also applies to the change when we continue to reload the texture again and again before each modification. Instead, it is better to parallelize the internal code for generating textures. Since now the code essentially consists of one large function applied in a loop to each texel, parallelization becomes simple and efficient. Costs for experiments, customization and sketches are reduced, which directly affects the creative process.

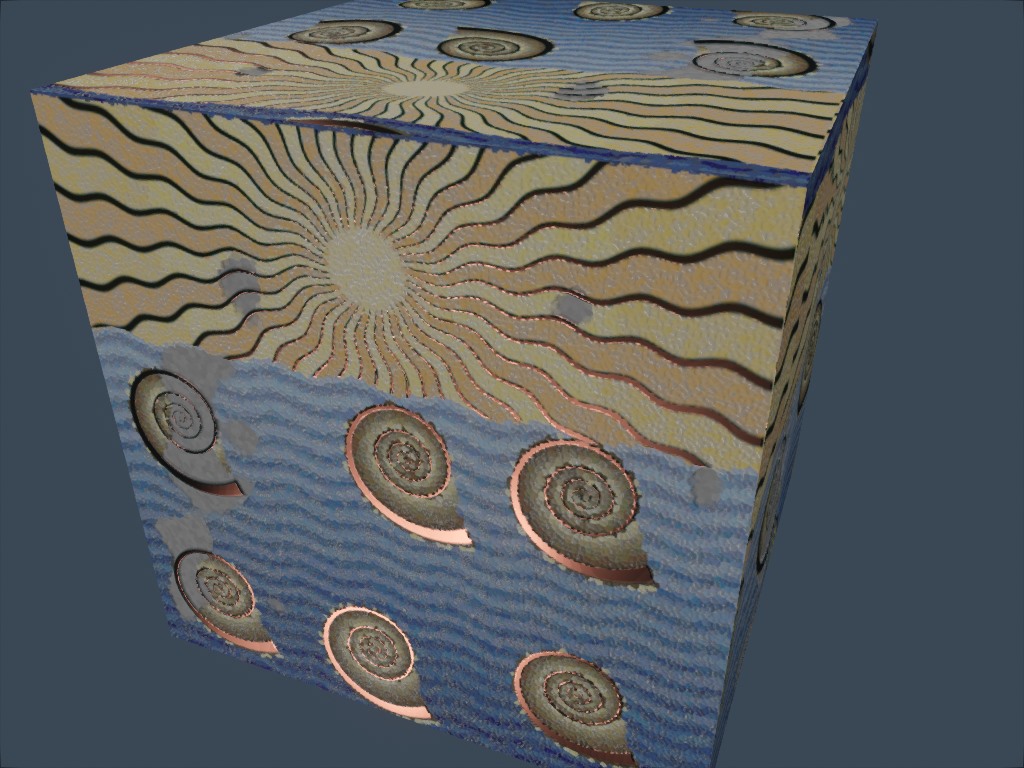

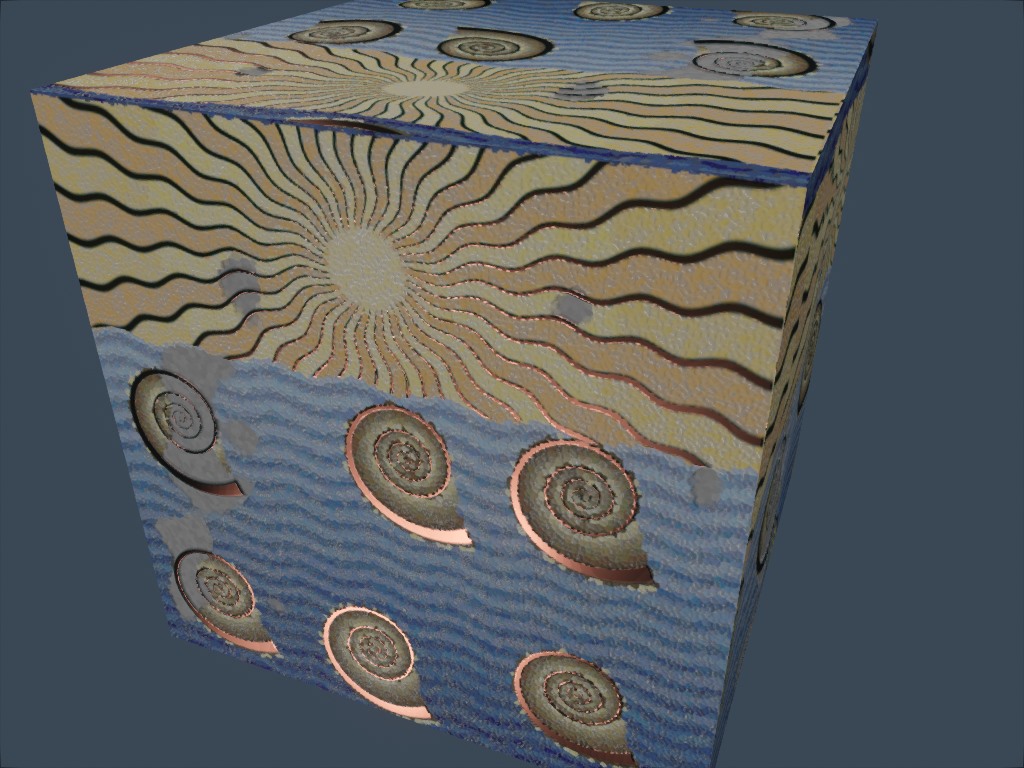

Illustration of the idea explored and rejected by us for H - Immersion : mosaic decoration with orichalka veneer. Here it is shown in our online editing tool.

If this is still not obvious, then I will say that texture generation is fully performed in the CPU. Perhaps one of you is reading these lines now and wondering “but why ?!”. It seems that the obvious step is to generate textures in the video processor. For a start, it will increase the generation rate by an order of magnitude. So why don't we use it?

The main reason is that the goal of our small redesign was to stay on the CPU. Going to a GPU would mean a lot more work. We would have to solve additional problems for which we still do not have enough experience. Working with the CPU, we have a clear understanding of what we want, and we know how to correct previous errors.

However, the good news is that thanks to the new structure, experiments with GPUs now seem rather trivial. Testing combinations of both types of processors will be an interesting experiment for the future.

Another limitation of the old design was that the texture was viewed only as an RGB image. If we needed to generate more information, say diffuse texture and normal texture for the same surface, then nothing prevented us from doing this, but the API didn’t help much. This has become particularly important in the context of physically accurate shading (Physically Based Shading, PBR).

In a traditional conveyor without PBR, color textures are usually used, in which a lot of information is baked. Such textures often represent the final appearance of the surface: they already have a certain volume, the cracks are darkened, and there may even be reflections on them. If several textures are used at the same time, then large-scale and small-scale details are usually combined to add normal or reflectivity maps.

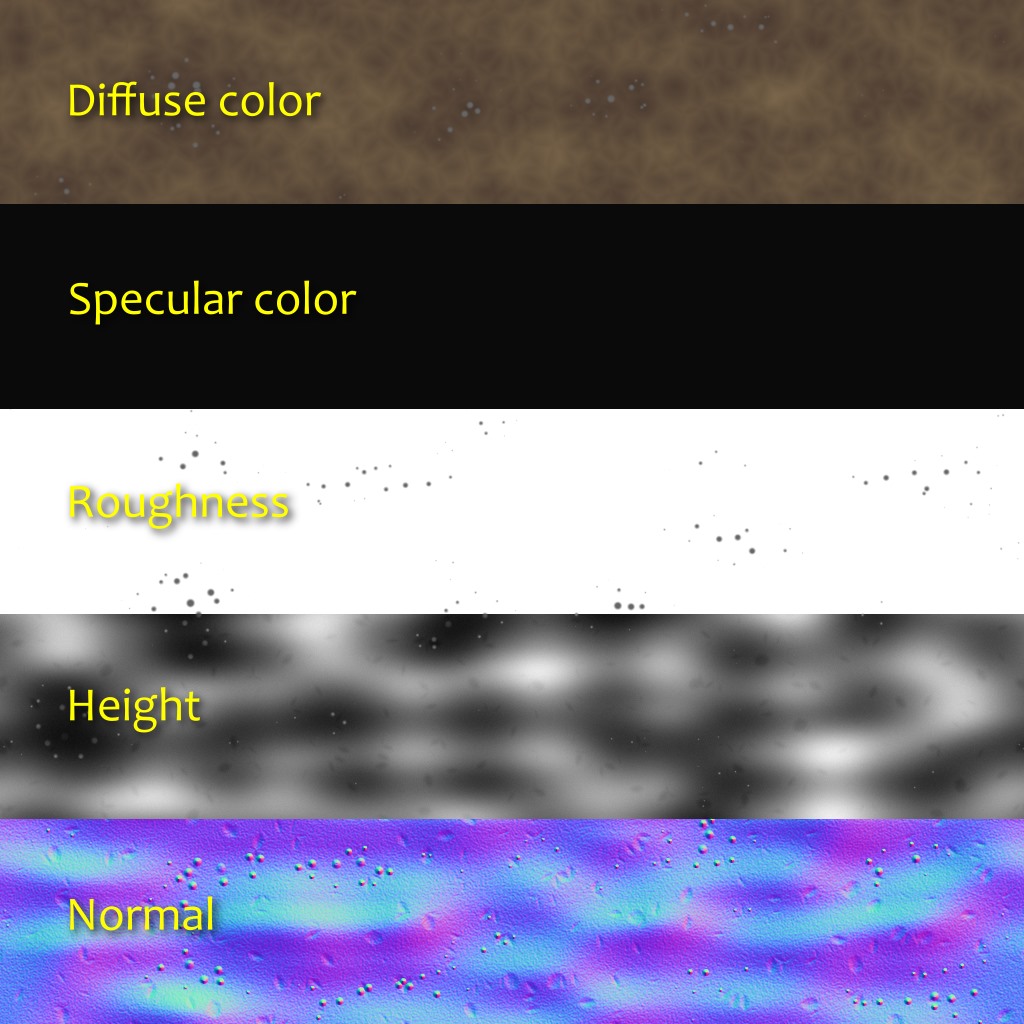

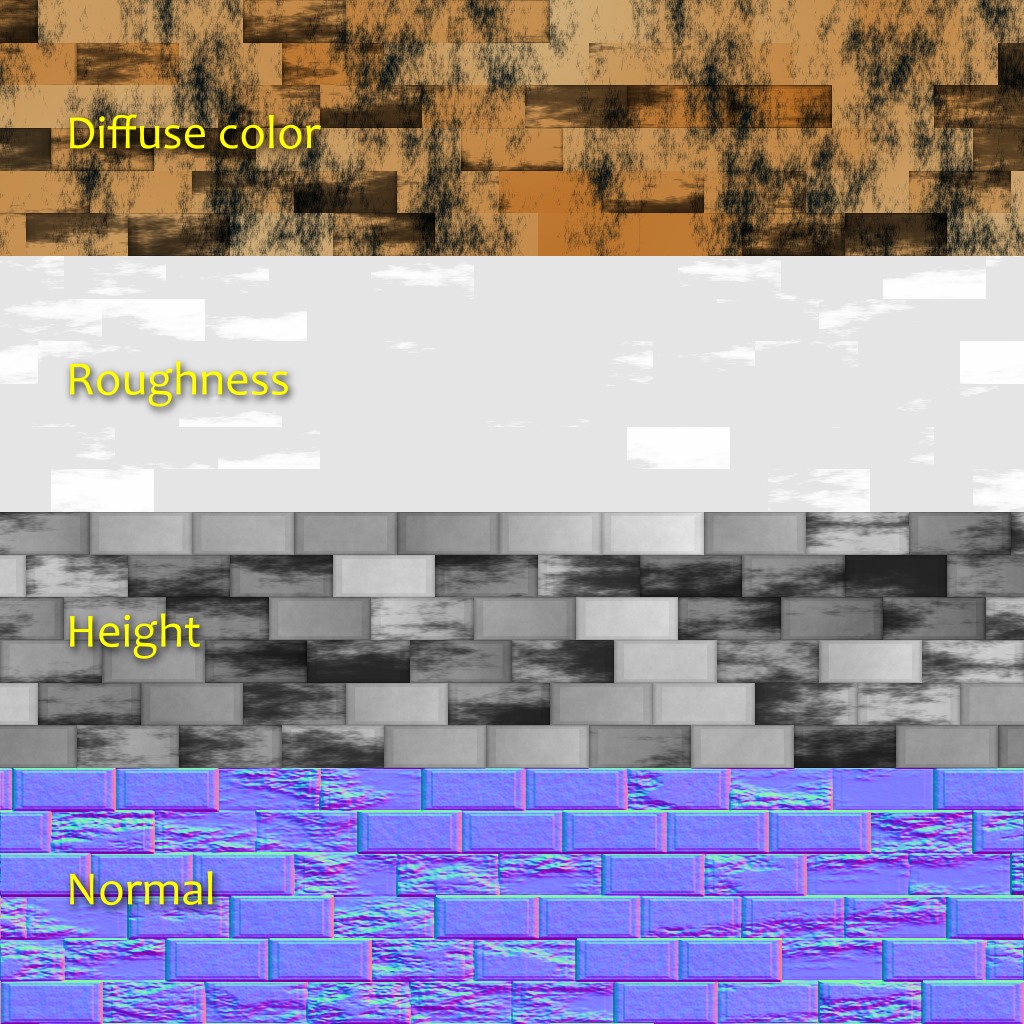

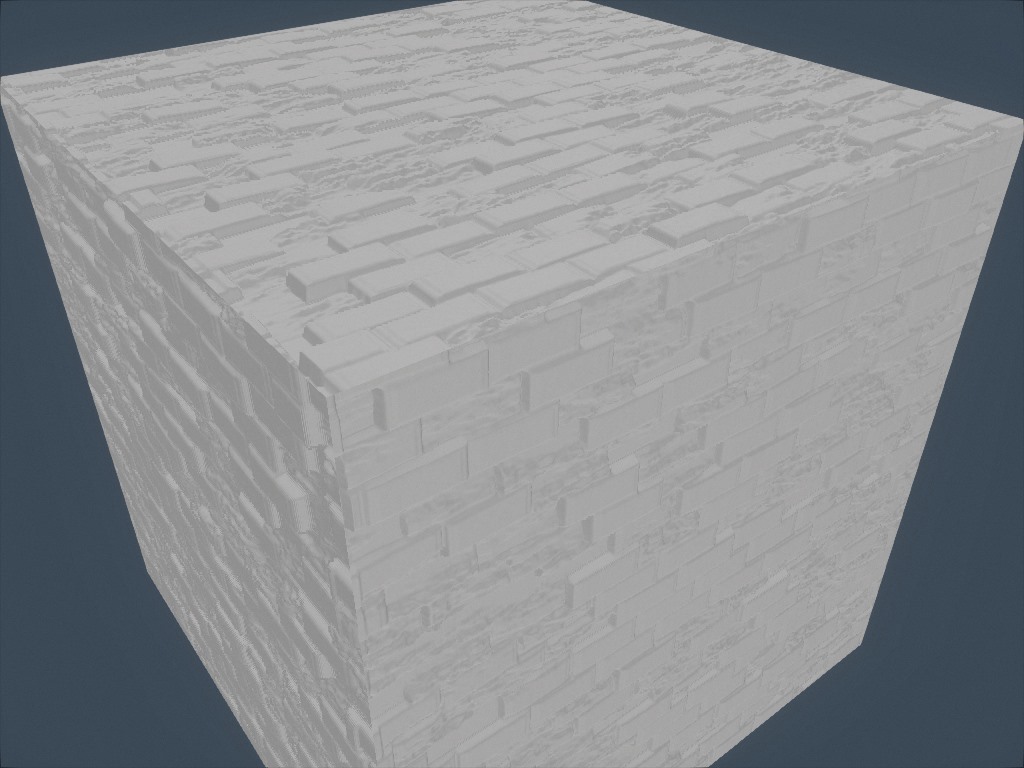

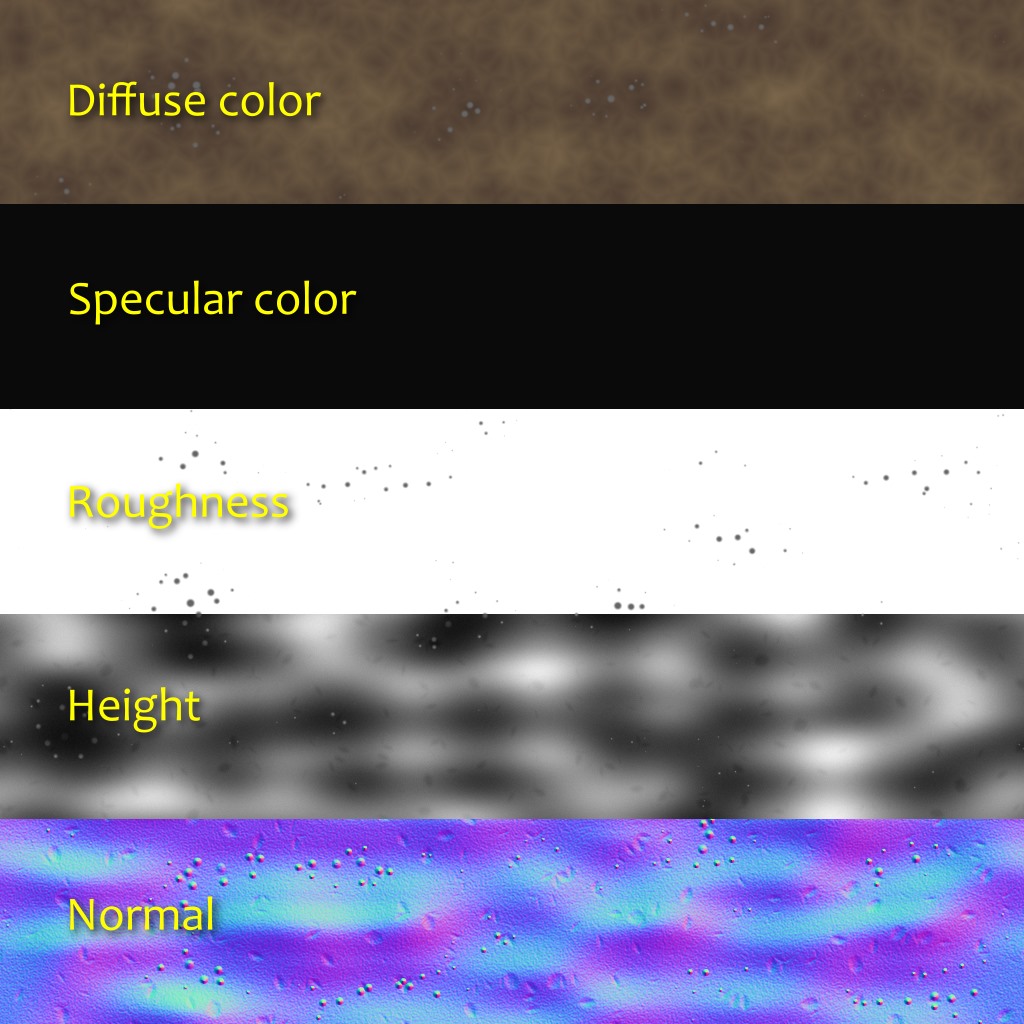

In a PBR surface conveyor, usually sets of several textures are used that represent physical values, rather than the desired artistic result. The diffuse color texture, which is closest to what is often called the "color" of the surface, is usually flat and uninteresting. The color specular is determined by the refractive index of the surface. Most of the details and variability are taken from the textures of normals and roughness (roughness) (which someone may consider to be the same, but with two different scales). The perceived reflectivity of the surface becomes a consequence of its roughness level. At this stage it will be more logical to think in terms of not textures, but materials.

The new structure allows us to declare arbitrary pixel formats for textures. By making it part of the API, we allow it to deal with all the boilerplate code. After declaring the pixel format, we can focus on the creative code, without wasting any extra effort on processing this data. At runtime, it will generate several textures and transparently transfer them to the GPU.

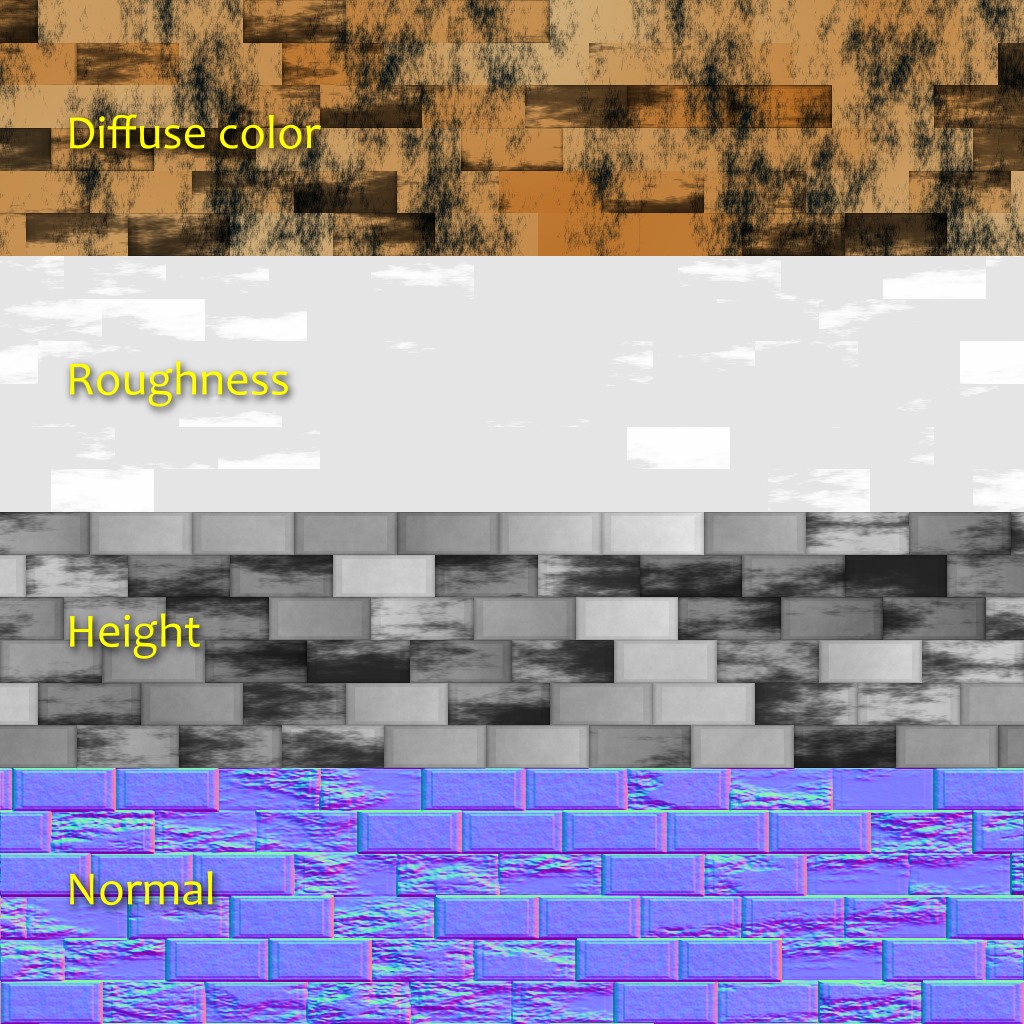

In some PBR pipelines, diffuse and specular colors are not transmitted directly. Instead, they use the parameters "base color" and "metalness", which has its advantages and disadvantages. In H - Immersion, we use the diffuse + specular model, and the material usually consists of five layers:

When using information about the emission of light was added directly to the shader. We did not find it necessary to have ambient occlusion, because in most scenes there is no ambient lighting at all. However, I would not be surprised that we will have additional layers or other types of information, for example, anisotropy or opacity.

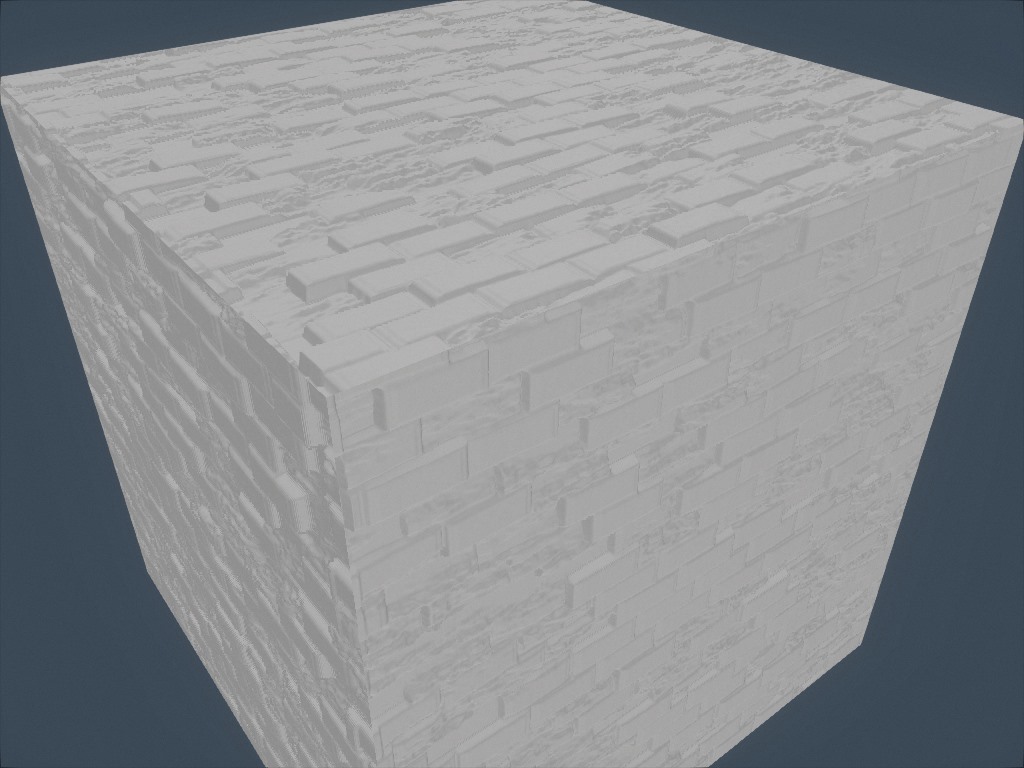

The images above show a recent experiment with generating local ambient occlusion based on height. For each direction we travel a given distance and keep the greatest slope (height difference divided by the distance). Then we calculate the occlusion from the average slope.

As you can see, the new structure has become a major improvement over the old one. In addition, she encourages creative expressiveness. However, she still has limitations that we want to eliminate in the future.

For example, although there were no problems with this intro, we noticed that memory allocation could be an obstacle. When generating textures, one array of float values is used. With large textures with multiple layers, you can quickly come to a problem with memory allocation. There are various ways to solve it, but they all have their drawbacks. For example, we can generate textures indiscriminately, while scalability will be better, however, the implementation of some operations, such as convolutions, becomes less obvious.

In addition, in this article, despite the use of the word "materials", we talked only about textures, but not about shaders. However, the use of materials should lead to shaders. This contradiction reflects the limitations of the existing structure: texture generation and shading are two separate parts separated by a bridge. We tried to make this bridge as easy as possible to cross, but actually we want these parts to become one. For example, if the material has both static and dynamic parameters, then we want to describe them in one place. This is a complex topic and we do not yet know whether there will be a good solution, but let's not get ahead of ourselves.

An experiment to create a fabric texture similar to the one shown above by Imadol Delgado.

Early version

Texture generation was one of the very first parts of our code base: our very first intro, B - Incubation, already used procedural textures. The code consisted of a set of functions to fill, filter, transform and combine textures, plus one big loop that went through all the textures. These functions were written in plain C++, but a C API was later added so that they could be called from the PicoC C interpreter. We used PicoC to shorten iteration time: it was how we could modify and reload textures while the program was running. Restricting ourselves to a subset of C was a small price to pay for being able to change the code and see the result immediately, without having to close, recompile and restart the whole demo.


With a simple pattern, a little noise and some distortion, we can get a stylized wood texture.

Various wood textures were used in this scene from F - Felix's workshop.
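The wood caption can be illustrated with a minimal per-texel sketch. It assumes the "simple pattern" is a set of concentric rings, the noise is a basic hash-based value noise, and the distortion is a noise offset applied to the lookup position. `Hash2D`, `ValueNoise`, `WoodTexel` and all constants are hypothetical. This is not the code of our generator.

```cpp
#include <cassert>
#include <cmath>
#include <cstdint>

// Hypothetical integer hash mapping a lattice point to a value in [0, 1).
inline float Hash2D(int x, int y) {
    std::uint32_t h = static_cast<std::uint32_t>(x) * 374761393u +
                      static_cast<std::uint32_t>(y) * 668265263u;
    h = (h ^ (h >> 13)) * 1274126177u;
    h ^= h >> 16;
    return static_cast<float>(h & 0xFFFFFFu) / 16777216.0f;
}

// Value noise: hashed lattice values blended with a smoothstep fade.
inline float ValueNoise(float x, float y) {
    const float x0 = std::floor(x), y0 = std::floor(y);
    const int xi = static_cast<int>(x0), yi = static_cast<int>(y0);
    const float fx = x - x0, fy = y - y0;
    const float u = fx * fx * (3.0f - 2.0f * fx);
    const float v = fy * fy * (3.0f - 2.0f * fy);
    const float a = Hash2D(xi, yi), b = Hash2D(xi + 1, yi);
    const float c = Hash2D(xi, yi + 1), d = Hash2D(xi + 1, yi + 1);
    const float top = a + (b - a) * u;
    const float bottom = c + (d - c) * u;
    return top + (bottom - top) * v;
}

struct Rgb { float r, g, b; };

// Stylized wood at normalized coordinates (u, v):
// pattern = concentric rings around the texture centre,
// distortion = lookup position offset by low-frequency noise,
// noise = a little stretched high-frequency noise imitating the grain.
inline Rgb WoodTexel(float u, float v) {
    const float ringsPerUnit = 12.0f;  // hypothetical tuning constants
    const float warp = 0.15f;
    const float x = u - 0.5f + warp * (ValueNoise(u * 3.0f, v * 3.0f) - 0.5f);
    const float y = v - 0.5f + warp * (ValueNoise(u * 3.0f + 17.0f, v * 3.0f) - 0.5f);
    float ring = std::sqrt(x * x + y * y) * ringsPerUnit;
    ring -= std::floor(ring);  // fract(): repeating 0..1 ramp
    const float grain = 0.1f * ValueNoise(u * 64.0f, v * 4.0f);
    const float t = std::fmin(1.0f, ring * ring + grain);  // 0 = light, 1 = dark
    const Rgb light = {0.80f, 0.60f, 0.35f}, dark = {0.45f, 0.28f, 0.12f};
    return {light.r + (dark.r - light.r) * t,
            light.g + (dark.g - light.g) * t,
            light.b + (dark.b - light.b) * t};
}
```

Each texel depends only on its own (u, v), so this is plain per-texel code with no loop over the texture.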

After exploring what this generator could do, we eventually put it on a web server behind a small PHP script and a simple web interface. We could type texture code into a text field. The script passed it to the generator, which dumped the result as a PNG file to be displayed on the page. Very soon we were sketching at work during lunch breaks and sharing our little masterpieces with the other members of the group. This kind of interaction was a strong creative motivator.

Web gallery of our old texture generator. All textures can be edited in the browser.

Full redesign

For a long time the texture generator barely changed. We thought it was good enough, and our productivity stopped improving. Then one day we discovered that Internet forums were full of artists showing off fully procedural textures and organizing challenges on various themes. Procedural content used to be a demoscene trick, but Allegorithmic, ShaderToy and similar tools had made it accessible to the general public. While we were not paying attention, they started beating us effortlessly. Unacceptable!

Fabric Couch. Fully procedural fabric texture created in Substance Designer. Author: Imanol Delgado. www.artstation.com/imanoldelgado

Forest Floor. Fully procedural forest floor texture created in Substance Designer. Author: Daniel Thiger. www.artstation.com/dete

It was long past time to revisit our tools. Fortunately, years of working with the same texture generator had made its shortcomings clear to us. Our nascent mesh generator also gave us hints about what a procedural content pipeline should look like.

The most important architectural mistake was to implement generation as a set of operations on texture objects. From a high-level point of view this may seem like the right approach. In terms of implementation, however, functions such as `texture.DoSomething()` or `Combine(textureA, textureB)` have serious drawbacks.

First, this OOP style requires every such function to be declared as part of the API, however simple it is. This is a real problem: it does not scale well and, more importantly, it adds needless friction to the creative process. We did not want to change the API every time we wanted to try something new. Having to do so makes experimentation harder and limits creative freedom.

Second, in terms of performance, this approach loops over the texture data as many times as there are operations. That would not matter much if each operation were expensive compared with the cost of reading and writing large chunks of memory, but this is usually not the case. Apart from a small fraction of operations, such as Perlin noise generation or filling, most are very simple and take only a few instructions per texel. In other words, we traversed the whole texture just to perform trivial operations, which is extremely cache-inefficient.
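A hypothetical illustration of this cost: the three trivial operations below (scale, bias, clamp) are made up. They show the difference between one loop per operation and a single fused loop. Both functions produce identical results. The first streams a large texture through the cache three times; the second streams it only once.

```cpp
#include <cassert>
#include <vector>

// Old style: every trivial operation is its own pass over the whole array,
// so a texture larger than the cache is streamed from memory three times.
void ThreePasses(std::vector<float>& tex) {
    for (float& t : tex) t = t * 2.0f;                 // pass 1: scale
    for (float& t : tex) t = t + 0.25f;                // pass 2: bias
    for (float& t : tex) t = t > 1.0f ? 1.0f : t;      // pass 3: clamp
}

// Fused style: the same three operations applied to one texel at a time,
// so the array is read and written only once.
void OnePass(std::vector<float>& tex) {
    for (float& t : tex) {
        const float v = t * 2.0f + 0.25f;
        t = v > 1.0f ? 1.0f : v;
    }
}
```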

The new design solves these problems by reorganizing the logic. In practice, most functions apply the same operation to each texel independently. So instead of writing a `texture.DoSomething()` function that loops over all the texels, we can write `texture.ApplyFunction(f)`, where `f(element)` only deals with a single texel. `f(element)` can then be written specifically for the texture at hand.

This may look like a minor change. However, this structure simplifies the API, makes the generation code more flexible, more expressive and more cache-friendly, and makes parallel processing easy. Many readers will have recognized that this is essentially a shader. The implementation, however, remains C++ code running on the CPU. We can still perform operations outside the per-texel loop, but we only do so when necessary, for example for a convolution.
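Convolutions do not fit the one-texel-in, one-texel-out model, because each output texel reads its neighbours. They therefore stay as separate passes over the whole texture. Below is a minimal sketch of such a pass. It assumes a single-channel float texture that tiles, hence the wrap-around addressing. `BoxBlur3x3` is a hypothetical name.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// 3x3 box blur of a width*height float image with wrap-around addressing,
// so the result still tiles. It reads from the unmodified source and writes
// to a new buffer: blurring in place would feed already-blurred texels
// into their neighbours.
std::vector<float> BoxBlur3x3(const std::vector<float>& src, int width, int height) {
    assert(width > 0 && height > 0);
    assert(static_cast<std::size_t>(width) * height == src.size());
    std::vector<float> dst(src.size());
    for (int y = 0; y < height; ++y) {
        for (int x = 0; x < width; ++x) {
            float sum = 0.0f;
            for (int dy = -1; dy <= 1; ++dy) {
                for (int dx = -1; dx <= 1; ++dx) {
                    const int sx = (x + dx + width) % width;    // wrap horizontally
                    const int sy = (y + dy + height) % height;  // wrap vertically
                    sum += src[static_cast<std::size_t>(sy) * width + sx];
                }
            }
            dst[static_cast<std::size_t>(y) * width + x] = sum / 9.0f;
        }
    }
    return dst;
}
```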

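Before:

```cpp
// Logic lives at the texture level: every operation is a method.
// Each new operation has to be added to the API.
// Each method loops over the whole texture on its own.
class ProceduralTexture {
public:
  void DoSomething(parameters) {
    for (int i = 0; i < size; ++i) {
      // Apply the operation to texel i.
      (*this)[i] = …
    }
  }
  void PerlinNoise(parameters) { … }
  void Voronoi(parameters) { … }
  void Filter(parameters) { … }
  void GenerateNormalMap() { … }
};

void GenerateSomeTexture(ProceduralTexture& t) {
  t.PerlinNoise(someParameter);   // one full pass over the texels
  t.Filter(someOtherParameter);   // another full pass
  … // etc.
  t.GenerateNormalMap();
}
```

After:

```cpp
// Logic lives at the texel level: the texture only knows how to apply a function.
// The API stays minimal.
// The generation code is ordinary code working on one texel.
// The data is traversed only once.
class ProceduralTexture {
public:
  void ApplyFunction(functionPointer f) {
    for (int i = 0; i < size; ++i) {
      // Compute texel i.
      (*this)[i] = f((*this)[i]);
    }
  }
};

// Operations that need neighbouring texels stay outside the per-texel loop.
void GenerateNormalMap(ProceduralTexture& t) { … }

void SomeTextureGenerationPass(void* out, PixelInfo in) {
  auto result = PerlinNoise(in);
  result = Filter(result);
  … // etc.
  *out = result;
}

void GenerateSomeTexture(ProceduralTexture& t) {
  t.ApplyFunction(SomeTextureGenerationPass);
  GenerateNormalMap(t);
}
```

Parallelization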

Generating textures takes time, and the obvious way to reduce it is to run code in parallel. At the very least, several textures can be generated at the same time. This is what we did for F - Felix's workshop, and it greatly reduced the loading time.
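Texture-level parallelism can be as simple as running each texture's generator as an independent task. Here is a sketch using `std::async`. It assumes every generator only touches its own data. `Image`, `Generator` and `GenerateAll` are hypothetical names.

```cpp
#include <cassert>
#include <functional>
#include <future>
#include <vector>

using Image = std::vector<float>;
using Generator = std::function<Image()>;

// Launch every generator on its own asynchronous task, then collect the
// results in the original order. Total time is roughly that of the slowest
// texture rather than the sum of all of them.
std::vector<Image> GenerateAll(const std::vector<Generator>& generators) {
    std::vector<std::future<Image>> pending;
    pending.reserve(generators.size());
    for (const Generator& g : generators)
        pending.push_back(std::async(std::launch::async, g));
    std::vector<Image> results;
    results.reserve(pending.size());
    for (auto& f : pending) results.push_back(f.get());  // waits for each task
    return results;
}
```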

However, this does not save time where it matters most: generating a single texture still takes a long time. That is exactly what we wait for when we edit a texture and reload it again and again after each change. It is better to parallelize the texture generation code itself. Since that code now essentially consists of one big function applied in a loop to every texel, parallelization is simple and efficient. Experimenting, tweaking and sketching become cheaper, which directly benefits the creative process.
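Texel-level parallelism follows directly from the per-texel structure. Every texel is computed independently, so the rows can be split into bands processed by different threads without any locking. This sketch assumes a row-major float buffer and a thread-safe per-texel function `f(x, y)`. `ParallelApply` is a hypothetical name, and splitting into contiguous row bands is only one reasonable choice.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <functional>
#include <thread>
#include <vector>

// Fill a width*height buffer by evaluating f(x, y) for every texel. Rows are
// split into contiguous bands, one band per thread. Each thread writes a
// disjoint range of the buffer, so join() is the only synchronization needed.
// f is called concurrently and must therefore be thread-safe.
void ParallelApply(std::vector<float>& data, int width, int height,
                   const std::function<float(int, int)>& f,
                   unsigned threadCount = std::thread::hardware_concurrency()) {
    assert(static_cast<std::size_t>(width) * height == data.size());
    if (threadCount == 0) threadCount = 1;  // hardware_concurrency() may return 0
    const int n = static_cast<int>(threadCount);
    const int rowsPerThread = (height + n - 1) / n;
    std::vector<std::thread> workers;
    for (int begin = 0; begin < height; begin += rowsPerThread) {
        const int end = std::min(height, begin + rowsPerThread);
        workers.emplace_back([&data, &f, width, begin, end] {
            for (int y = begin; y < end; ++y)
                for (int x = 0; x < width; ++x)
                    data[static_cast<std::size_t>(y) * width + x] = f(x, y);
        });
    }
    for (std::thread& w : workers) w.join();
}
```

Contiguous bands keep each thread's writes in its own region of memory. Threads can only share a cache line at band boundaries.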

An idea we explored and rejected for H - Immersion: mosaic decoration with orichalcum veneer. It is shown here in our online editing tool.

GPU generation

In case it was not obvious yet: texture generation runs entirely on the CPU. Some of you may be reading this and wondering "but why?!". Generating textures on the GPU seems like the obvious step. For a start, it would make generation an order of magnitude faster. So why don't we do it?

The main reason is that this redesign was deliberately scoped to stay on the CPU. Moving to the GPU would mean a lot more work: we would have to solve additional problems that we do not yet have enough experience with. On the CPU, we have a clear understanding of what we want, and we know how to fix our past mistakes.

The good news is that, thanks to the new structure, experimenting with the GPU now looks fairly straightforward. Trying combinations of both kinds of processors will be an interesting experiment for the future.

Texture generation and physically based shading

Another limitation of the old design was that a texture was treated as nothing more than an RGB image. Suppose we needed to generate more information, say a diffuse texture and a normal map for the same surface. Nothing prevented us from doing so, but the API did not help much either. This became particularly important with physically based shading (PBR).

In a traditional, non-PBR pipeline, surfaces typically use color textures with a lot of information baked in. Such textures often represent the final look of the surface: they already convey some volume, cracks are darkened, and there may even be reflections painted in. When several textures are used at once, it is usually to combine large-scale and small-scale details, or to add normal or reflectivity maps.

In a PBR pipeline, a surface is usually described by a set of textures representing physical quantities rather than the intended artistic result. The diffuse color texture is the closest to what is commonly called the "color" of a surface, and it tends to be flat and uninteresting. The specular color is determined by the surface's refractive index. Most of the detail and variation comes from the normal and roughness maps, which some would argue are the same thing at two different scales. The perceived reflectivity of the surface becomes a consequence of its roughness. At this point, it makes more sense to think in terms of materials rather than textures.
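For a dielectric (non-metal) in air, the Fresnel equations give the specular reflectance at normal incidence as F0 = ((n - 1) / (n + 1))^2, where n is the refractive index. Below is a small sketch. `F0FromIor` is a hypothetical helper, and it does not handle metals, whose complex refractive index gives a tinted F0.

```cpp
#include <cassert>
#include <cmath>

// Reflectance at normal incidence for light going from air (n = 1) into a
// dielectric with a real refractive index n: F0 = ((n - 1) / (n + 1))^2.
// Metals need a complex index (and give a coloured F0); not handled here.
inline float F0FromIor(float n) {
    assert(n > 0.0f);
    const float r = (n - 1.0f) / (n + 1.0f);
    return r * r;
}
```

For example, n = 1.5 (typical glass) gives F0 = 0.04, and n = 1.33 (water) gives about 0.02. This is why most non-metals have a weak, uncolored specular reflection.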

The new design lets us declare arbitrary pixel formats for textures. Because this is part of the API, the API takes care of all the boilerplate code. Once the pixel format is declared, we can focus on the creative code without spending extra effort on handling the data. At runtime, it generates several textures and transparently uploads them to the GPU.

Some PBR pipelines do not provide the diffuse and specular colors directly. Instead, they use "base color" and "metalness" parameters, which has its own advantages and drawbacks. In H - Immersion we use the diffuse + specular model, and a material usually consists of five layers:

- Diffuse color (RGB; 0: Vantablack; 1: fresh snow).

- Specular color (RGB; the fraction of light reflected at normal incidence, i.e. at 90° to the surface, also known as F0 or R0).

- Roughness (A; 0: perfectly smooth; 1: rubber-like).

- Normal (XYZ; unit vector).

- Height (A; used for parallax occlusion mapping).
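Declaring such a pixel format for the five layers above might look like the sketch below. The generation code fills one struct per texel. A generic helper then de-interleaves the result into one packed buffer per layer, ready to be uploaded as separate GPU textures. `MaterialTexel`, `LayerBuffers` and `SplitLayers` are hypothetical names, and the actual GPU upload is left out.

```cpp
#include <array>
#include <cassert>
#include <vector>

// One texel of a hypothetical material, following the five layers above.
struct MaterialTexel {
    std::array<float, 3> diffuse;   // RGB, 0 = Vantablack, 1 = fresh snow
    std::array<float, 3> specular;  // RGB, F0: reflectance at normal incidence
    float roughness;                // 0 = perfectly smooth, 1 = rubber-like
    std::array<float, 3> normal;    // unit vector
    float height;                   // for parallax occlusion mapping
};

// One buffer per layer; within a layer, channels are interleaved (RGBRGB...).
struct LayerBuffers {
    std::vector<float> diffuse, specular, roughness, normal, height;
};

// De-interleave the array of texels into per-layer buffers.
LayerBuffers SplitLayers(const std::vector<MaterialTexel>& texels) {
    LayerBuffers out;
    for (const MaterialTexel& t : texels) {
        out.diffuse.insert(out.diffuse.end(), t.diffuse.begin(), t.diffuse.end());
        out.specular.insert(out.specular.end(), t.specular.begin(), t.specular.end());
        out.roughness.push_back(t.roughness);
        out.normal.insert(out.normal.end(), t.normal.begin(), t.normal.end());
        out.height.push_back(t.height);
    }
    return out;
}
```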

When needed, light emission was added directly in the shader. We did not find ambient occlusion necessary, because most scenes have no ambient lighting at all. That said, I would not be surprised if we add more layers or other kinds of information in the future, such as anisotropy or opacity.

The images above show a recent experiment in generating local ambient occlusion from height. For each direction, we march over a given distance and keep the greatest slope encountered (height difference divided by distance). The occlusion is then computed from the average slope.
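Below is a sketch of this height-based occlusion, under several assumptions:

- The height map tiles, and its values are in texel units, so that height difference divided by distance is a meaningful slope.
- Sampling uses the nearest texel.
- By default there are 8 directions and a distance of 8 texels.

The text does not say how occlusion is derived from the average slope. Here it is assumed to be the sine of the corresponding horizon angle, slope / sqrt(1 + slope^2). `LocalOcclusion` is a hypothetical name.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// For every texel: in each of `directions` directions, march up to `radius`
// texels and keep the largest slope (height difference / distance). Average
// these slopes over the directions, then map the average to an occlusion value
// in [0, 1) with slope / sqrt(1 + slope^2) (an assumed mapping).
std::vector<float> LocalOcclusion(const std::vector<float>& heights, int width,
                                  int height, int directions = 8, int radius = 8) {
    assert(static_cast<std::size_t>(width) * height == heights.size());
    assert(directions > 0 && radius > 0);
    const float kTwoPi = 6.28318530718f;
    auto at = [&](int x, int y) {
        x = ((x % width) + width) % width;  // wrap: the texture tiles
        y = ((y % height) + height) % height;
        return heights[static_cast<std::size_t>(y) * width + x];
    };
    std::vector<float> occlusion(heights.size());
    for (int y = 0; y < height; ++y) {
        for (int x = 0; x < width; ++x) {
            const float h0 = at(x, y);
            float slopeSum = 0.0f;
            for (int d = 0; d < directions; ++d) {
                const float angle = kTwoPi * d / directions;
                const float dx = std::cos(angle), dy = std::sin(angle);
                float maxSlope = 0.0f;  // a lower horizon does not occlude
                for (int s = 1; s <= radius; ++s) {
                    const int sx = x + static_cast<int>(std::lround(dx * s));
                    const int sy = y + static_cast<int>(std::lround(dy * s));
                    maxSlope = std::fmax(maxSlope, (at(sx, sy) - h0) / s);
                }
                slopeSum += maxSlope;
            }
            const float avgSlope = slopeSum / directions;
            occlusion[static_cast<std::size_t>(y) * width + x] =
                avgSlope / std::sqrt(1.0f + avgSlope * avgSlope);
        }
    }
    return occlusion;
}
```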

Limitations and future work

As you can see, the new design is a major improvement over the old one, and it encourages creative expression. However, it still has limitations that we want to address in the future.

For example, although it was not a problem for this intro, we noticed that memory allocation could become an obstacle. Texture generation uses a single array of floats, so large textures with many layers can quickly run into memory allocation problems. There are various ways to solve this, but they all have drawbacks. For example, we could generate textures piece by piece, which would scale better. However, some operations, such as convolutions, would become less straightforward to implement.
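Some rough orders of magnitude, using 2048×2048 purely as an example resolution. The five layers listed earlier, stored as 32-bit floats, make 3 + 3 + 1 + 3 + 1 = 11 channels. A single material then needs 2048 × 2048 × 11 × 4 bytes = 176 MiB. Generating piece by piece (assumed here to mean square tiles) bounds that figure. However, a convolution of radius r then needs an extra border of r texels around each tile. The helper names below are hypothetical.

```cpp
#include <cassert>
#include <cstdint>

// Bytes needed to hold a whole material as 32-bit floats in one array.
constexpr std::uint64_t MaterialBytes(std::uint64_t width, std::uint64_t height,
                                      std::uint64_t channels) {
    return width * height * channels * sizeof(float);
}

// Input texels a tile needs when a convolution of radius r is applied:
// the tile itself plus a border ("halo") of r texels on every side,
// which must be generated too or fetched from neighbouring tiles.
constexpr std::uint64_t TileInputTexels(std::uint64_t tileSize, std::uint64_t radius) {
    return (tileSize + 2 * radius) * (tileSize + 2 * radius);
}

// diffuse (3) + specular (3) + roughness (1) + normal (3) + height (1)
constexpr std::uint64_t kMaterialChannels = 3 + 3 + 1 + 3 + 1;
static_assert(MaterialBytes(2048, 2048, kMaterialChannels) == 184549376u, "176 MiB");
```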

Also, despite using the word "materials", this article has only talked about textures, not shaders. Yet materials naturally lead to shaders. This tension reflects a limitation of the current design: texture generation and shading are two separate worlds connected by a bridge. We tried to make this bridge as easy to cross as possible, but what we really want is for the two to become one. For example, if a material has both static and dynamic parameters, we want to describe them in one place. This is a complex topic and we do not know yet whether there is a good solution, but let's not get ahead of ourselves.

An experiment in creating a fabric texture similar to the one by Imanol Delgado shown above.

Source: https://habr.com/ru/post/419007/
