MIT course "Computer Systems Security". Lecture 8: "Model of network security", part 1

Massachusetts Institute of Technology. Lecture course # 6.858. "Security of computer systems". Nikolai Zeldovich, James Mykens. year 2014

Computer Systems Security is a course on the development and implementation of secure computer systems. Lectures cover threat models, attacks that compromise security, and security methods based on the latest scientific work. Topics include operating system (OS) security, capabilities, information flow control, language security, network protocols, hardware protection and security in web applications.

Lecture 1: "Introduction: threat models" Part 1 / Part 2 / Part 3

Lecture 2: "Control of hacker attacks" Part 1 / Part 2 / Part 3

Lecture 3: "Buffer overflow: exploits and protection" Part 1 / Part 2 / Part 3

Lecture 4: "Separation of privileges" Part 1 / Part 2 / Part 3

Lecture 5: "Where Security Errors Come From" Part 1 / Part 2

Lecture 6: "Opportunities" Part 1 / Part 2 / Part 3

Lecture 7: "Sandbox Native Client" Part 1 / Part 2 / Part 3

Lecture 8: "Model of network security" Part 1 / Part 2 / Part 3

Let's begin the next part of our fascinating journey into the world of computer security. Today we talk about web security. In fact, Internet security is one of my favorite topics of conversation, because it introduces you to the true horrors of this world.

')

Of course, it is easy to be a student and to think that everything will be great if you just graduate. However, today's and the next lecture will tell you that, in fact, it is not so, and you are waiting for continuous horrors.

So what is the internet? In the old days, the network was much simpler than today. Clients, that is, browsers, could not do anything with the display of fixed or active content. In essence, they could only receive static images and static texts.

But the server part was a bit more interesting, even if the client side had static content. The server could communicate with databases, it could “talk” with other machines on the server side. Thus, for a very long time, the concept of web security was, in principle, related to what the server is doing. In our lectures, we will, in fact, use the same approach.

We considered such a thing as a buffer overflow attack. Since clients can trick the server, forcing him to do what he does not want to do. You also looked at the OKWS server and how you can isolate privileges there.

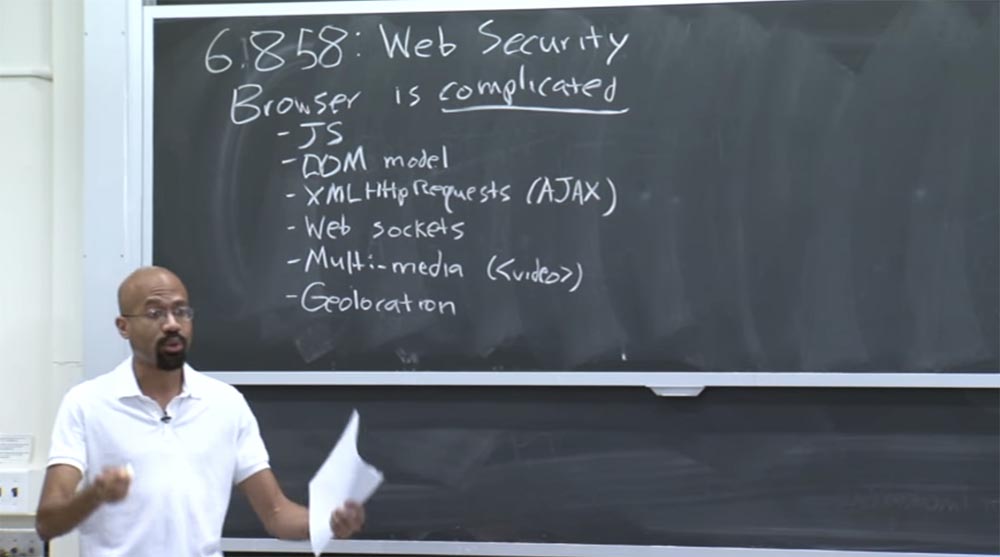

So far, we have considered security through experience, which was actually obtained by using the security resources themselves. But now browsers are very interesting objects from a security point, with which everything is very difficult.

Consider all kinds of crazy, dynamic things that a browser can do. For example, you probably heard about javascript. JavaScript now allows pages to execute code from the client. There is a DOM model, which we will talk about in more detail today. The DOM, in essence, allows JavaScript code to dynamically change the appearance of a page, for example, to style fonts and the like.

We have XML HTTP requests. This is basically a way for JavaScript to asynchronously receive content from servers. You can also hear about XML HTTP requests called AJAX - asynchronous sampling of JavaScript.

There are things like web sockets. This is the newly introduced API, programming interface. Web sockets allow full duplex communication between clients and servers, that is, communication in both directions.

We also have all kinds of multimedia support, for example, a tag:

<video> Allows a web page to play videos without using a Flash application. He can just play this video initially.

We also have geolocation. Now the web page can physically determine where you are. For example, if you use a webpage on a smartphone, the browser can actually access your device’s GPS module. If you access a webpage through a browser on your desktop, it can view your Wi-Fi connection and connect to Google’s Wi-Fi geolocation service to find out exactly where you are. It sounds crazy, is not it? But now web pages can do such things. We also mentioned such a thing as the Native Client, which allows browsers to run native code.

There are many other features in the browser that I did not mention here. But suffice it to say that a modern browser is an incredibly complex thing.

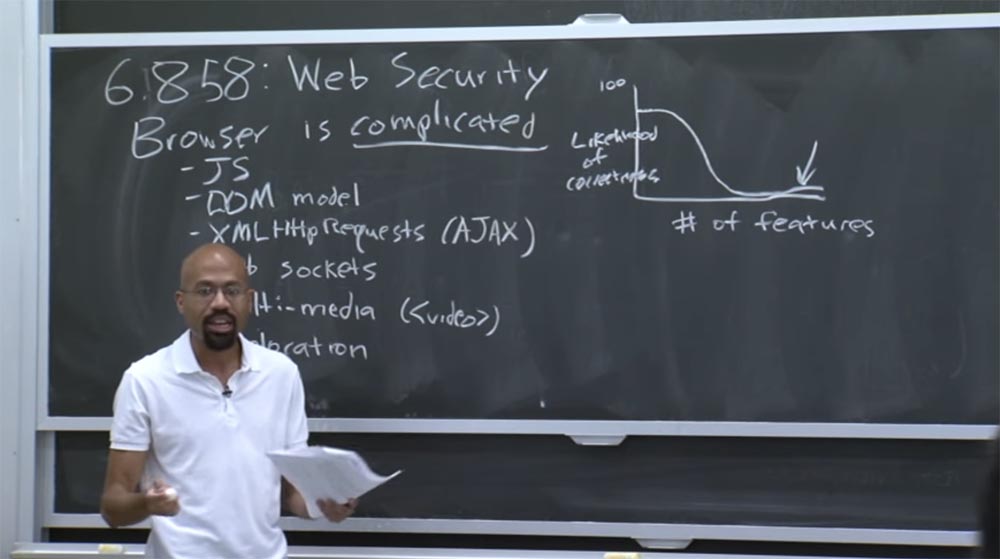

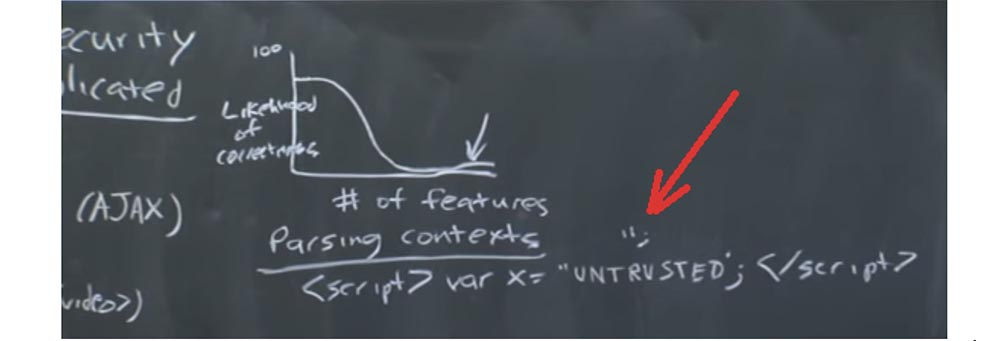

So what does this mean in terms of security? In general, this means that we are in great difficulty. Because there is truly a huge field of activity for security threats. Roughly speaking, when you think about security, you might think about a graph that looks something like this: the vertical axis is the probability that functions are performed correctly, and the horizontal axis is the number of functions available. The vertical axis is limited to the number 100, which we cannot achieve even with the simplest code.

In fact, this curve looks something like this, and web browsers are located right here, at the end of the graph below the arrow. Dependence is simple - the more processes in the system, the less likely they are to be correctly executed. So today we will discuss all kinds of foolish security mistakes that occur constantly. And as soon as the old ones are corrected, new errors immediately appear, because people continue to add new functions to the browser, often without thinking about what security implications they may cause.

Therefore, if you think about what a web application is these days, you can say that this is both a client and a server. A modern web application encompasses several programming languages, several computers, and many programs for hardware.

For example, you can use Firefox on a Windows computer, then this browser is going to talk to a machine in the cloud that runs Linux, and runs to the Apache server. Maybe it works on an ARM chip that is incompatible with the x86 platform, or vice versa. In short, there are problems of composition of different components. All these software levels and all these hardware levels can affect security.

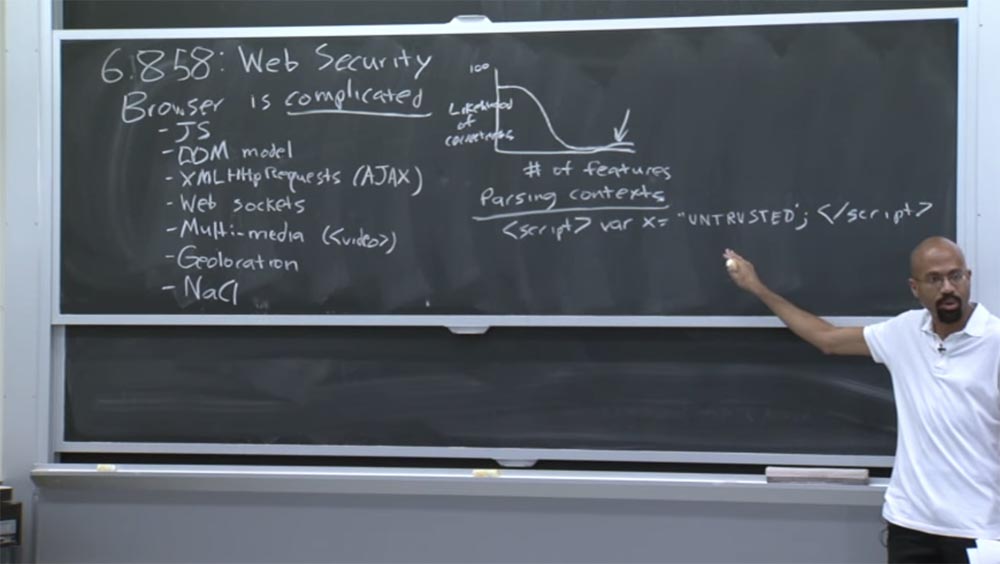

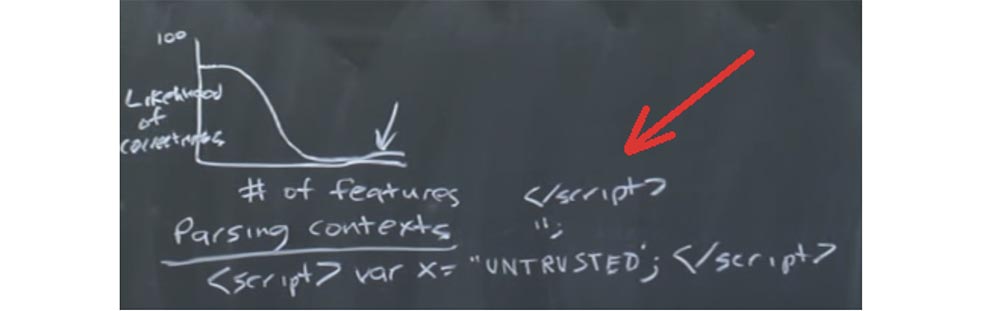

Therefore, all this is difficult, since we have no idea how to cover the whole composition of “software” and “hardware” entirely. For example, one of the common problems with the Internet is context parsing.

Suppose that the page had something like this:

<script> var x = 'UNTRUSTED'; </script>

You declare a script tag, inside it there is a variable that receives a value from the unreliable side — the user or another machine. Then we close the script tag, and this part can be trusted. That is, we have a string, at the edges of which are things that can be trusted, and in the middle - untrusted untrusted code. Why, then, can we have problems if we place a thing from the unverified side in the middle of the script?

Audience: you may have an incorrect closing quote somewhere inside this code that will break the script string.

Professor: quite right! The problem is different context, which can break this unreliable code into parts. For example, if the closing quote is located in the middle of the untrusted code, we close the definition of this JavaScript string.

So, after we add the context of the JavaScript string, we initiate the execution of this context. In this case, the attacker can simply put the closing script tag here, exit the JavaScript context and enter the HTML context, for example, to find new HTML nodes or something like that.

Therefore, you should consider such compositional problems everywhere on the Internet, as there are many different languages used here: HTML, CSS, JavaScript, possibly MySQL on the server side, and so on and so forth. Thus, I gave a classic example of why you should do what is called “content standardization”. Whenever you get unreliable input from someone, you need to analyze them very carefully to make sure that they cannot be used as an attack vector.

Another reason for the complexity of Internet security is that web specifications are incredibly long, tedious, boring and often inconsistent. When I mean web specifications, I mean things like JPEG definition, CSS definition, HTML definition. These documents are the same size as the EU Constitution and are just as difficult to understand. Ultimately, when browser vendors see all these specifications, they simply have to say to the developers: “OK, thanks for that,” and then read them and laugh at all this with their friends.

So these specifications are rather vague and do not always accurately reflect what real browsers do. If you want to understand this horror, you can visit the site https://www.quirksmode.org/ , but if you want to be happy, you better not go there. It actually documented all the terrible inconsistencies that browsers do when the user presses a key. On this site you can check out what happens.

In any case, in this lecture we will focus on the client side of the web application. In particular, we will look at how to isolate content coming from different web providers, which must somehow coexist on the same machine and in the same browser. There is a fundamental difference between what you usually think about the application on your desktop and what you think about the web application.

In abstract terms, most desktop applications that you use can be perceived as a product of a single developer, for example, Microsoft. Or perhaps you are using TurboTax software from Mr. and Mrs. TurboTax, and so on and so forth. But when you look at web applications, what visually looks to you as one, actually consists of a heap of applications of different content from a heap of different developers.

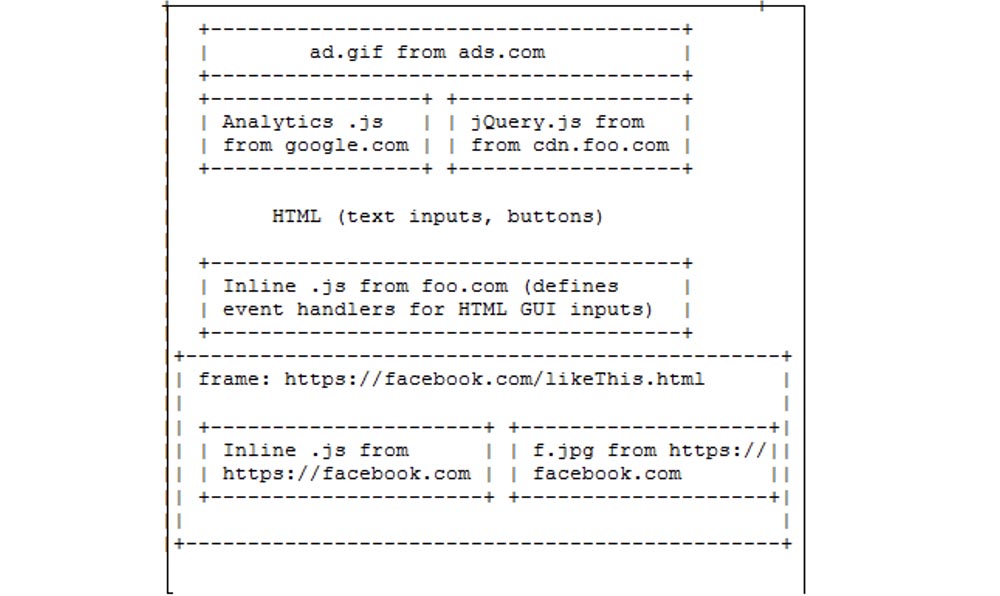

For example, you go to the CNN page, it seems to you that everything is located on one tab. But each of those visual things that you see can actually come from someone else. Let's look at a very simple example.

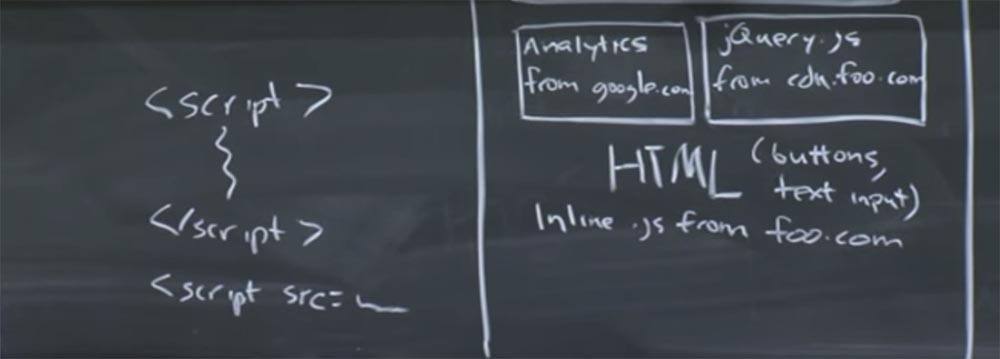

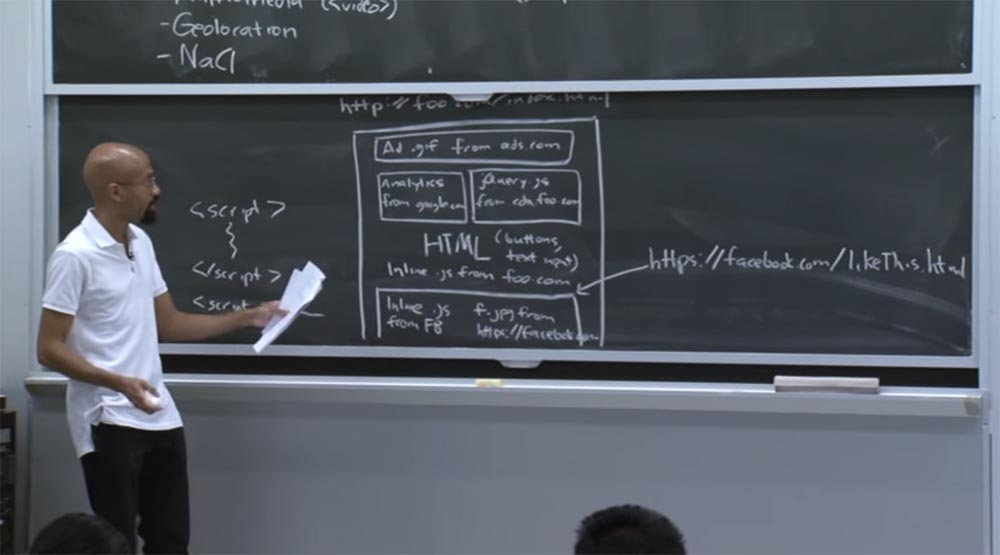

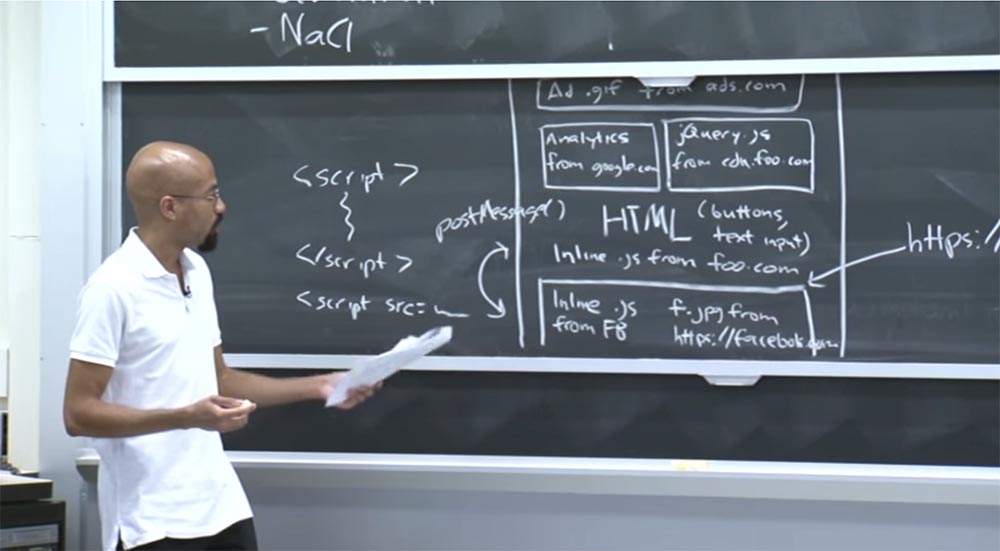

Suppose we went to the Internet at http://foo.com/index.html . What does the page in question consist of?

There may be ads at the top, which may have been downloaded from ads.com. On the left, there can be an analytics unit, for example, from google.com. These libraries are very popular for tracking the number of people who have downloaded this page, for observing which links people click on, which parts of the page they are interested in interacting with, and so on.

On the right, you can have another JavaScript library, say, jQuery, which comes from cdn.foo.com. This is some content provided for foo.com.

jQuery is a very popular library for GUI manipulation, so jQuery is available on many sites, although they get it from different places. Further on this page you can see some HTML text data, user buttons, text entry fields, and so on, and so on. So this is just plain HTML on the page.

Then you can see what they call inline JavaScript code from foo.com. For example, we have an opening tag on top, and the JavaScript code is embedded in the middle between them. In our case, there is what is called inline JavaScript - this is the top of the picture.

At the bottom of the line, I will draw what we call JavaScript, because there the content is equal to something that resides on a remote server. This is what is called the external definition of javascript content. The script and the embedded code are different from each other, and on our page there is exactly embedded JavaScript from foo.com.

And one more thing that can be here is a frame. The frame can be represented as a separate JavaScript universe. This is a bit equivalent to a UNIX process. Maybe this frame comes from facebook.com/likethis.html and inside it we have built-in javascript from facebook.

Next we may have some f.jpeg images that also come from facebook.com . So, all this looks like a single tab, although it consists of different content that can potentially be based on completely different principles. Therefore, you can ask a whole bunch of interesting questions about the application that looks that way.

For example, can this google.com analytic code have access to the JavaScript content that is in the jQuery code? In the first approximation, perhaps this seems like a bad idea, because these two pieces of code came from different places. But again, it may be that this is actually a good thing, because, apparently, foo.com has placed both these libraries here so that they can work with each other. So who knows?

Another question you might have is whether analytics code can actually interact with text placed in the bottom HTML block. For example, can analytics code affect event handlers?

JavaScript is a managed single-threaded model, so every frame has an event loop that is constantly processed — key processes take place here, network event timers work, and the like. And if this JavaScript code notices that there are some other handlers that are trying to manage these events, then it will get rid of them.

So who should be able to define event handlers for this HTML? First, google.com should be able to do this. It may also be foo.com, or it may not.

Another question is, what connects this Facebook frame with the general, big foo.com frame? The Facebook frame is HTTPS, that is, secure, foo.com is HTTP, that is, an insecure connection. So how can these two things interact?

To answer these questions, browsers use a security model called the same-origin policy, or a policy of the same origin. This is a kind of vague goal, because a lot of things that relate to web security are rather vague, because no one knows exactly what they are doing. But the basic idea is that two websites should not be able to interfere with each other’s work if they don’t want it. Thus, it was easier to determine what such intervention meant when the Internet itself was simpler. But as we continue to add new APIs, it’s harder and harder for us to understand what the purpose of the non-intervention policy means. For example, it’s obviously bad if two websites that do not trust each other can display their data on a common display. This seems like a bad thing, and obviously a good thing is when two websites that want to collaborate are able to share data in some safe way.

You may have heard of mixed sites, this is exactly what I said. Therefore, on the Internet, you will meet with such things when someone takes data from a Google map and places the location of trucks with products on them. Thus, you have this amazing “mashed potatoes”, which allows you to eat cheaply and at the same time avoid salmonellosis. But how exactly are compositions of this type created?

There are other difficult things. For example, if the JavaScript code comes from origin X inside the origin Y page, then what should be the content of this code? Thus, the strategy that policies of the same origin use can be roughly described as follows.

Each resource is assigned its source of origin, and JavaScript code can access only resources that have such a source. This is the top-level strategy used by politicians of the same origin.

But the devil is in the details, so there are a bunch of exceptions that we will look at in a second. But before we continue, let's define what is origin.

Basically, origin is a network protocol scheme plus a host name plus a port. For example, we might have something like http: // foo.com/index.html.

So, our network protocol scheme is HTTP, the host name is foo.com, and port 80. In this case, the port is implicit. Port is the port on the server side that the user uses to connect to the server. So if you see a URL with an HTTP scheme, where there is no explicitly specified port, then port 80 is used.

If we consider something like https: // foo.com/index.html, then these two addresses have the same host name, but in fact they have different schemes - the https protocol versus http. In addition, there is implicitly port 443, which is the default port for the secure HTTPS protocol. So these two URLs have different origins.

As a final example, consider the site http: // bar.com:8181/…

An ellipsis after a slash indicates that these things are irrelevant to a policy of the same origin, at least in relation to this very simple example.

We see that we have an HTTP scheme, the host name is bar.com, and here we have an explicitly specified port. In this case, this is a non-standard port 8181. In fact, this is the source of origin. Roughly speaking, you can think of the origin as a UID in Unix, where the frame is treated as a process.

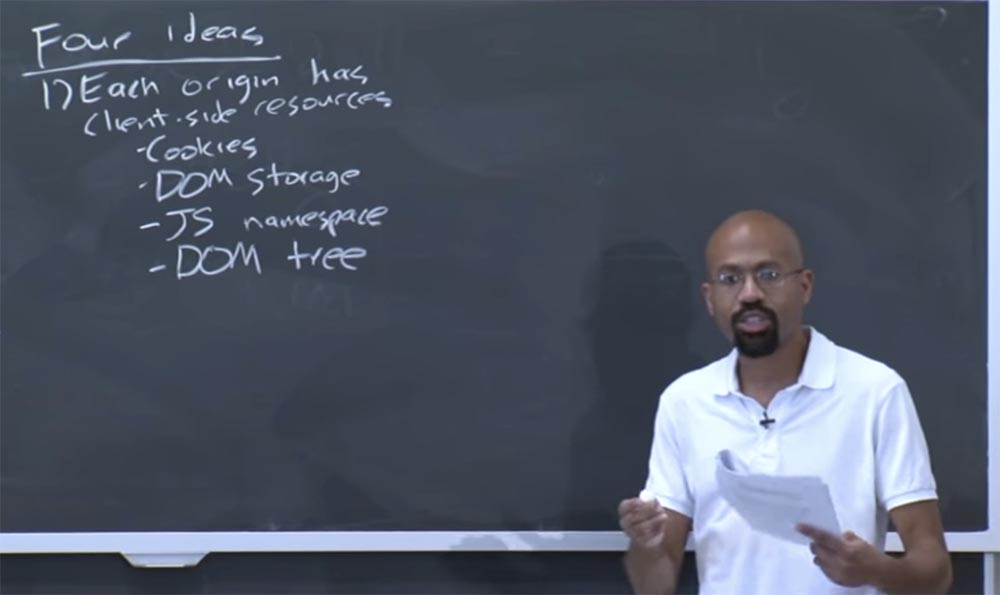

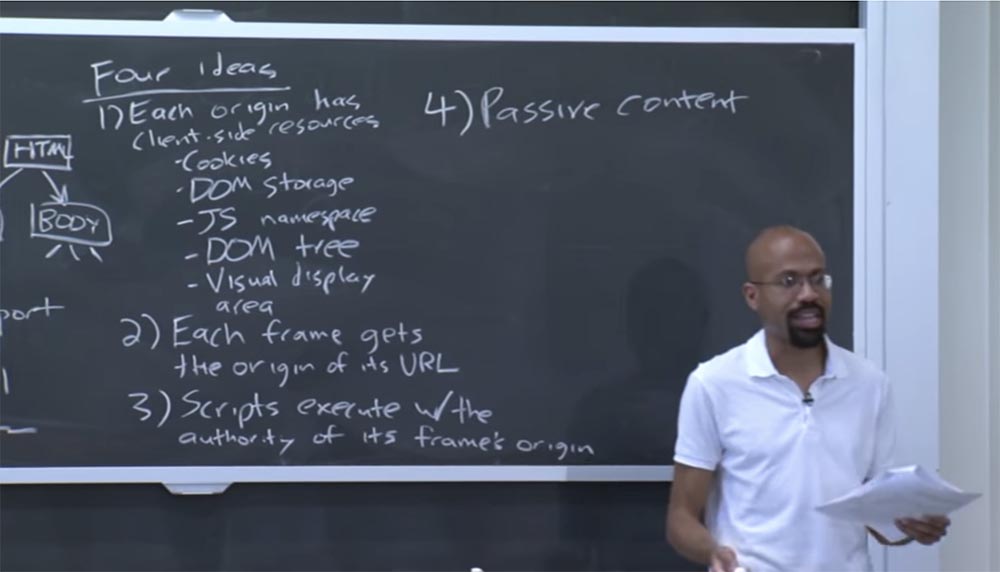

Thus, there are four main ideas underlying the implementation of a policy of the same origin for the browser.

First idea: each source of origin has a client part of the resource. This client part is a cookie. Cookies can be considered as a very simple way to implement a state in a non-persistent protocol like HTTP.

In principle, a cookie is a tiny file that is associated with each original source. Later we will talk a little about this specificity.

But the basic idea is that when the browser sends a request to a specific site, it includes any cookies that the client has for this site. And these cookies can be used for things like remembering a password.

For example, if you are going to an e-commerce site, these cookies may contain a reference to the goods in the user's basket, and so on.

Thus, cookies are one thing with which every source of origin can be associated. In addition, you perceive the repository of object models of documents DOM as another source of these resources. This is a fairly new interface, but it is already of key importance as an interface for structuring HTML and XML documents.

Thus, the DOM repository allows the source to say: “Let me associate a given key, which is a string, with this given value, which is also a string.”

Another thing related to origin is the javascript namespace. This namespace determines which functions and interfaces are available for the origin.

Some of these interfaces are built-in, for example, string prototypes and the like. The application can then actually fill the JavaScript namespace with other content.

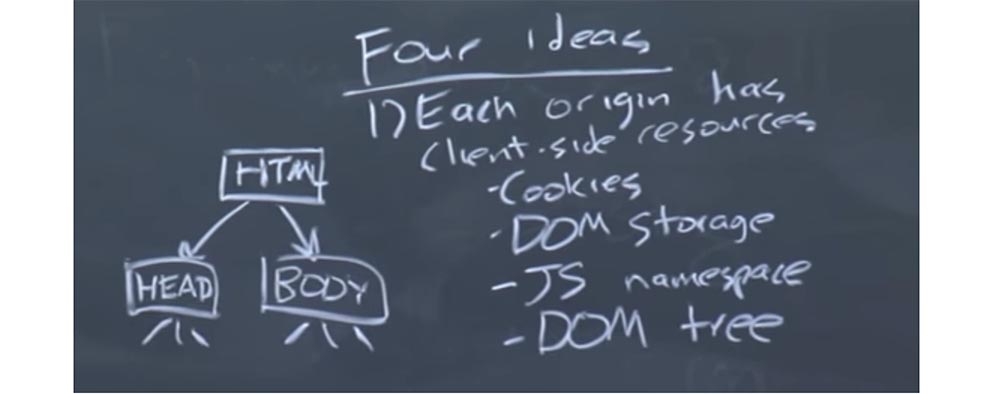

There is also such a thing as a DOM tree. As you know, DOM means Document Object Model. And the Dom tree is, in fact, a reflection of HTML on the page using JavaScript.

So you can imagine that at the top of the DOM tree there is an HTML node, below is the node for the head tag of the message head and the node for the body tag of the message body and so on.

So many dynamic web pages are modified by JavaScript code, which can access the data of this structure in JavaScript, which reflects the HTML content.

Thus, you can imagine that the animation on the browser page is due to changing some of the tree nodes in order to implement different organizations of different tabs. This is what the DOM tree is. There is also a visual display area, which, as we will see later, interacts very strangely with the same origin policy, and so on and so forth.

Thus, at a high level, each source has access to a certain set of client resources of the types we have listed.

The second idea is that each frame receives the source of its URL. As I mentioned earlier, the frame is about the same as the Unix process. This is a kind of namespace that brings together a bunch of other different resources.

The third idea is that the scripts, or JavaScript code, are executed with permissions corresponding to the authority of the source of the frame.

This means that when foo.com imports the javascript file from bar.com, the javascript file will be able to operate with foo.com privileges. Roughly speaking, this is similar to what happens in the Unix world when you need to run a binary file belonging to someone else’s home directory. This is something that must be done according to your privileges.

The fourth idea is passive content. CSS , , .

, . . , , Google Analytics jQuery foo.com. , cookie, , .

Facebook foo.com, , , . . , . , Post Message. .

Post Message , Facebook , , , foo.com. , foo.com , Facebook , , , .

, JavaScript , Facebook, XML HTTP foo.com, , . - , Facebook.com origin, foo.com, HTML-.

, , , ads.com. , , , . , .

, – , !

The fact is that there are security considerations. This is the subtlety that is hidden in the 4th idea.

28:00 min

Continued:

MIT course "Computer Systems Security". Lecture 8: "Model of network security", part 2

Full version of the course is available here .

Thank you for staying with us. Do you like our articles? Want to see more interesting materials? Support us by placing an order or recommending to friends, 30% discount for Habr users on a unique analogue of the entry-level servers that we invented for you: The whole truth about VPS (KVM) E5-2650 v4 (6 Cores) 10GB DDR4 240GB SSD 1Gbps from $ 20 or how to share the server? (Options are available with RAID1 and RAID10, up to 24 cores and up to 40GB DDR4).

VPS (KVM) E5-2650 v4 (6 Cores) 10GB DDR4 240GB SSD 1Gbps until December for free if you pay for a period of six months, you can order here .

Dell R730xd 2 times cheaper? Only we have 2 x Intel Dodeca-Core Xeon E5-2650v4 128GB DDR4 6x480GB SSD 1Gbps 100 TV from $ 249 in the Netherlands and the USA! Read about How to build an infrastructure building. class c using servers Dell R730xd E5-2650 v4 worth 9000 euros for a penny?

Source: https://habr.com/ru/post/418229/

All Articles