MIT course "Computer Systems Security". Lecture 6: "Opportunities", part 3

Massachusetts Institute of Technology. Lecture course # 6.858. "Security of computer systems". Nikolai Zeldovich, James Mykens. year 2014

Computer Systems Security is a course on the development and implementation of secure computer systems. Lectures cover threat models, attacks that compromise security, and security methods based on the latest scientific work. Topics include operating system (OS) security, capabilities, information flow control, language security, network protocols, hardware protection and security in web applications.

Lecture 1: "Introduction: threat models" Part 1 / Part 2 / Part 3

Lecture 2: "Control of hacker attacks" Part 1 / Part 2 / Part 3

Lecture 3: "Buffer overflow: exploits and protection" Part 1 / Part 2 / Part 3

Lecture 4: "Separation of privileges" Part 1 / Part 2 / Part 3

Lecture 5: "Where Security Errors Come From" Part 1 / Part 2

Lecture 6: "Opportunities" Part 1 / Part 2 / Part 3

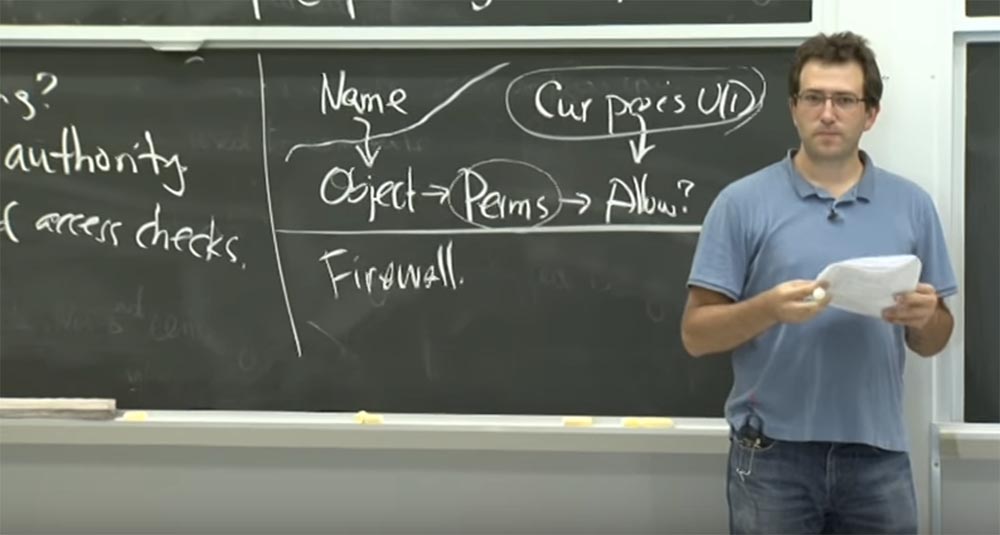

Audience: if this UID provides read-only access to this file, and you also have a file descriptor, then if you lose the UID , can you still get permission to read this file?

')

Professor: yes, since it will appear in directories. Because as soon as you add the ability to the file, that’s it. It is open for you with special privileges and so on. But the problem is that they have this hybrid design.

That is, they say - you can really add features to directories and you can open a new file, as you work in parallel. It may be that you add the ability to a directory, for example / etc , but you do not have mandatory access to all files in the / etc directory. But as soon as you enter the features mode, you can try to open these files, knowing that you have access to the / etc directory. After all, it is already open, so why don't you give me a file called “password” located in this directory?

And then the kernel needs to decide whether to allow a file in this directory to be opened in read or write mode, or what you have. So I think that this is the only place where you still need this external privilege, because they tried to create a compatible design, where they used not too natural semantics to describe the work of directories. This seems to be the only place where the principles of Unix file system configuration remained.

Audience: are there other places like this?

Professor: good question. I think I’ll have to get their previous source code to figure out what is going on, but most other situations do not really require a UID check. Because, for example, it does not apply to networks. These are probably the usual file system operations - if you have a shared memory segment, you just open it, that's all.

Audience: could you explain again what the user ID value is, if you have the Capability capability?

Professor: this is important when you have the opportunity for a directory. The question is, what does this opportunity represent to you? Consider one interpretation, for example, the state of capabilities of a pure system, not Capsicum . If in such a system you have the opportunity for a directory, then you have unconditional access to all files in that directory.

On Unix, this is usually not the case. You can open the directory as / etc , but it contains many system files that can store sensitive information, for example, the server's private key. And the fact that you can open and browse this directory does not mean that you cannot open files in this directory. That is, having access to the directory, you have access to the files.

In Capsicum , if you open the / etc directory and then enter the features mode, the following happens. You say: “I do not know what kind of directory it is. I just add a file descriptor to it. ” There is a file called "key". Why don't you open this key file? Just because at that moment, you probably don’t want this feature-based process to open the file, because you don’t need it. Although this allows you to bypass the Unix file permissions.

Therefore, I think that the authors of this article carefully approached the development of a system that would not violate the existing security mechanisms.

Audience: so you say that in some cases you can use a combination of these two security factors? That is, although a user can change a file in a directory, then which files he gets access to depends on his user ID?

Professor: yes, exactly. In practice, in Capsicum , before you enter the opportunity mode, you have to guess which files you may need later. You may need shared libraries, text files, templates, network connections, and so on. Therefore, you open all this in advance. And it is not always necessary to know which file you need. So these guys made it so that you can simply open the file descriptor of the directory and view the files you need later. However, it may be that these files do not have the same permissions that you have. It is precisely for this reason that a combination of two security factors is used - a capability check and a user uid . So this is part of the core mechanism.

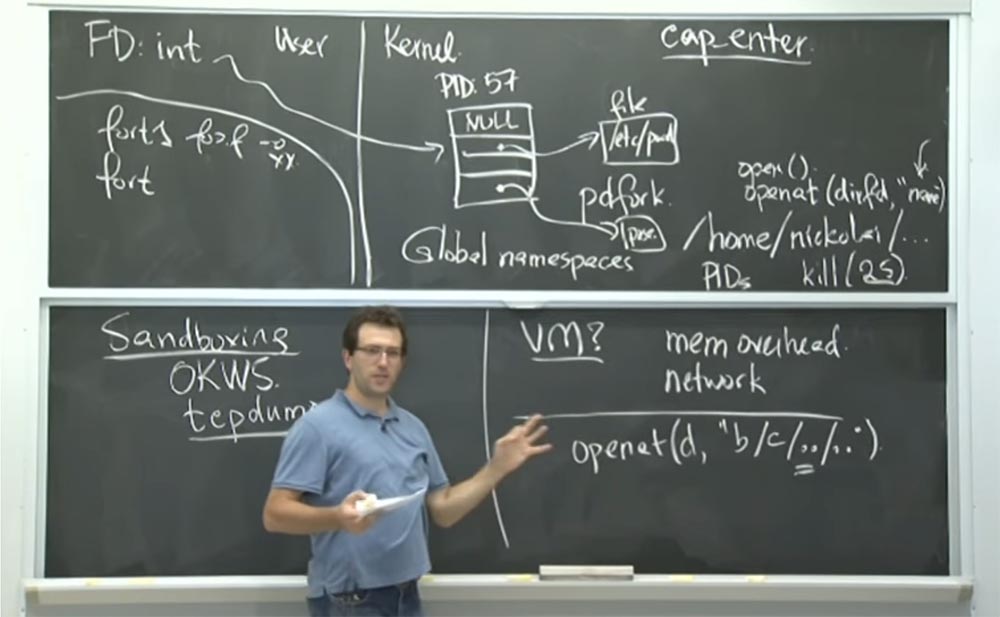

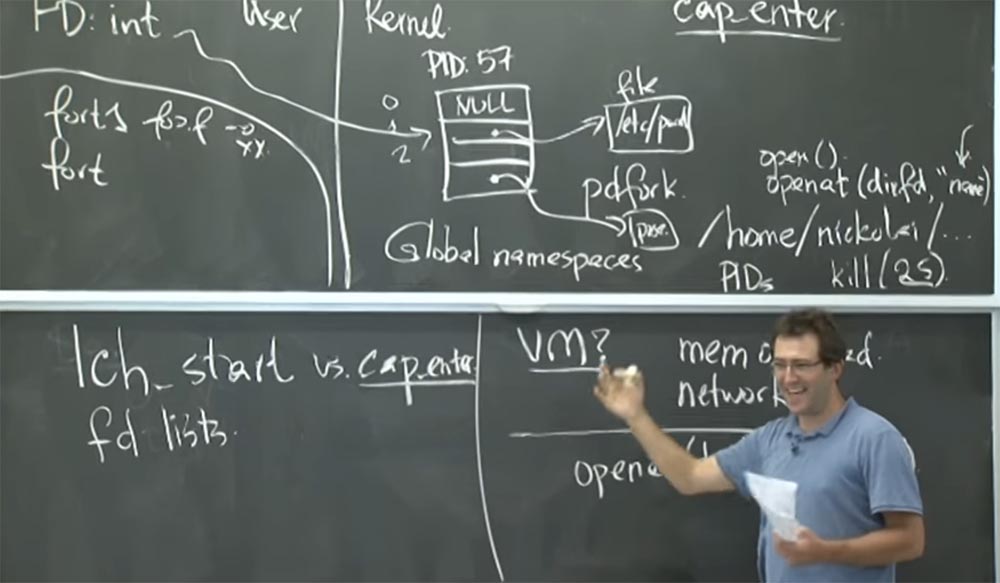

Why do they need a library like libcapsicum ? I think there are two main things that they support in this library.

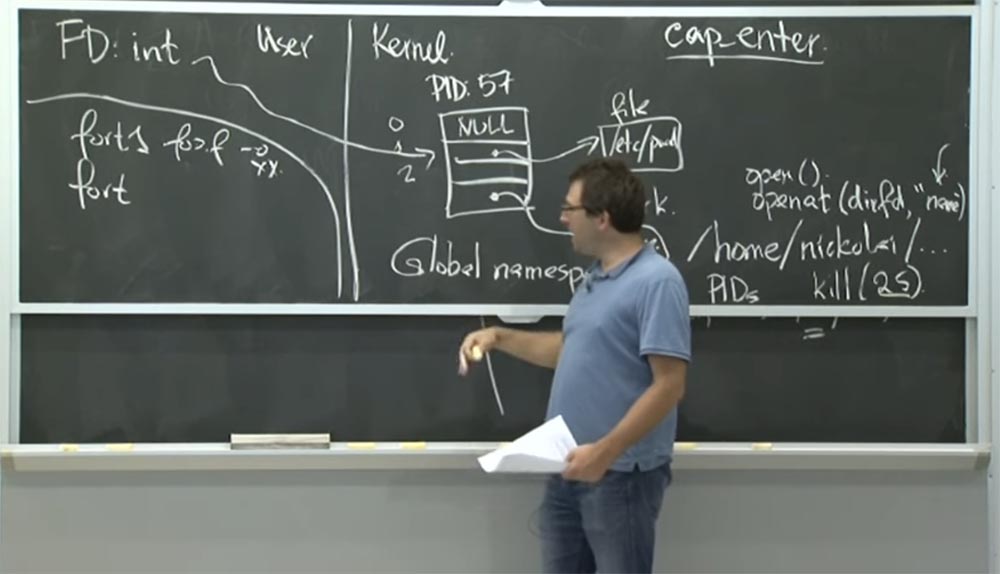

One of them is that they implement a function called lch_start , which you should use instead of the cap_enter function. Another function that the libcapsicum library provides is the concept of fd lists, which serves to transfer file descriptors by numbers. The purpose of this list fd lists is easy to explain. This is basically a summary of how Unix manages and transfers file descriptors between processes. In the traditional Unix and Linux that you use today, when you start a process, some file descriptors are passed to it. You simply open some file descriptors with integer values from this table and start the child process you need. Or you run a specific binary file, and it inherits all these open slots in the fd table. There is no other good way to name these things except using numbers as names.

Consider an example in which slot 0 will be used for input data, slot 1 for output data, slot 2 for printing error messages. This is how it works in Unix . And it works fine if you just transfer these three files or three data streams to the process.

But in Capsicum it happens that you “pass” a lot more file descriptors on your way. So, when you pass a file descriptor for some files, you go through a file descriptor for a network connection, a descriptor for a shared library that you have, and so on. Managing all these numbers is quite tiring. Therefore, in fact, libcapsicum provides the ability to abstract from the name of these past file descriptors between processes using a hierarchical name that is used instead of these incomprehensible integers.

This is one of the simple things they provide in their library. So I can pass the file descriptor to the process and give it a name, but it does not matter what number it is designated. This method is much easier.

They still have another, more intricate launching mechanism for the sandbox. This is the lch , the API host for running the sandbox, which is used instead of simply entering Capability mode. Why did they need more than just entering the mode of opportunity? What usually bothers you when creating a sandbox?

Audience: lch probably erases all inherited items in order to ensure a “clean” start of the system.

Professor: yes. I think they are worried about trying to accommodate everything the sandbox has access to. The fact is that if you just call cap_enter , technically, at the level of the kernel mechanism, it will work. Right? It just prevents you from discovering some new features.

But the problem is that there may be many existing things to which the process already has access.

Therefore, I think that the simplest example is the presence of open file descriptors there, which you have forgotten, and they will simply be inherited by this process.

For example, they looked at tcpdump . First, they changed tcpdump by simply calling cap_enter before they even got together to parse all the incoming network connections. In a sense, this works well because you cannot get more Capability capabilities. But then, looking at the open file descriptor, they realized that you have full access to the user terminal, because you have an open file descriptor for it. So you can intercept all the keystrokes that the user makes, and the like. So this is probably not a good plan for tcpdump . Because you do not want someone to intercept your keyboard activity.

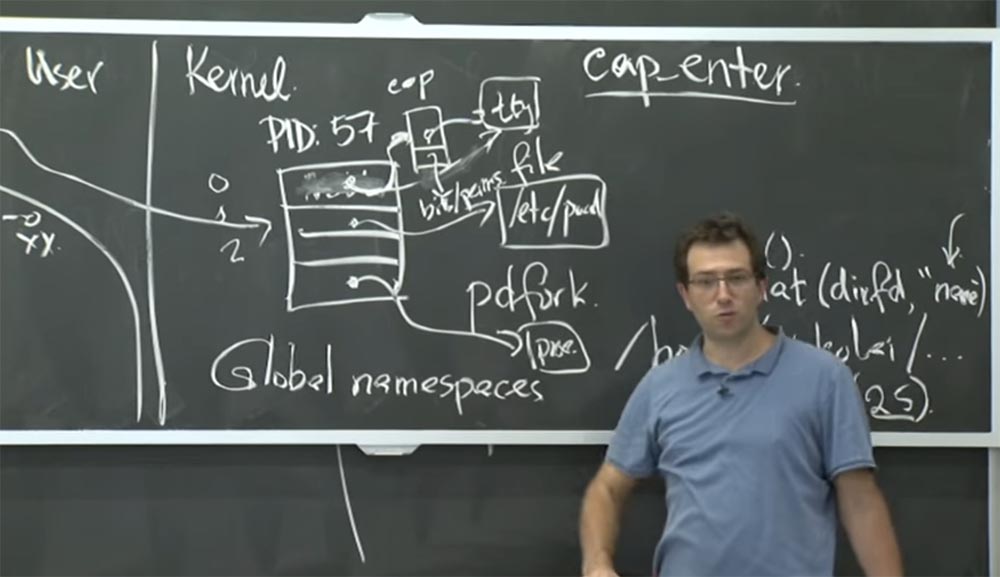

Therefore, in the case of tcpdump, they manually changed the file descriptors and added some bits of capabilities to them to limit the types of operations you can perform. If you remember, in Capsicum, the ability also has these additional bits, indicating the class of operations that can be performed for a given file descriptor. Thus, they basically accept that the file descriptor is 0. It points to the tty user terminal . Originally, it was just a direct pointer to the tty structure in the kernel. In order to limit the type of operations that can be performed for this descriptor, they have introduced some intermediate beta structure of possibilities in the middle. Thus, the file descriptor points to this capability structure, and it already points to the real file you are trying to access. And this capability structure contains some restrictive bits or permissions for a file descriptor object that can be implemented.

Thus, with standard data entry in tcpdump, you cannot do anything with it. You can just see that it exists, and that’s it. For the output file descriptor, they provided the opportunity when you can write something to it, but you cannot change the record, that is, you cannot undo the changes you have made.

So what else could you worry about running a sandbox ? I think about the state of the file descriptor. Anything else matters? I think in Unix this is file descriptors and memory.

Another thing is that these guys are worried that there may be confidential information in your address space that was previously allocated. And the process that you are going to isolate in the sandbox can read all the available memory. So if there is some password that you checked before when the user logs in and you have not yet cleared the memory, then this process will be able to read it and do something “interesting”.

They solved this problem in this way: in lch_start, you should run a “fresh” program. You take the program and pack all the arguments into it, all the file descriptors you want to provide to it. Then you start a new process or start the operation of reinitializing the entire virtual memory space. And then there is no doubt that this process will not receive any additional privileges to affect the collection of confidential data. This is exactly what you passed to lch_start functions, in terms of binary names, arguments, and program features.

Audience: what happens if the process you are running contains a binary setuid = 0 ?

Prof: I think these guys don't use setuid “binaries” in features mode to avoid some strange interactions that may appear. They implement this rule: you can have a setuid program that gets its privileges from a binary setuid file, and then it can call cap_enter or lch_start . But as soon as you enter the mode of opportunity, you cannot regain additional preferences.

In principle, this could work, but it would be very strange. Because, if you remember, the only thing where a UID matters when you're in opportunity mode is opening files inside a directory. Therefore, it is unclear whether this is really a great plan for obtaining additional privileges or whether there are flaws in it.

Audience: Earlier, we talked about why the library does not actually support a strict separation between these two security factors. But we don't have to use lch_start, are we ?

Professor: that's right. Suppose you have such a thing as tcpdump , or gzip - this is another thing that they work with. You assume that the application is probably not compromised, and there is some basic function of the application, and you care about how it behaves in the sandbox. In the case of tcpdump, this is the parsing of packets coming from the network; in the case of gzip, it is unpacking the files. And until a certain moment you assume that the process is doing everything correctly, and there will be no vulnerabilities. Therefore, you trust him to launch lch_start and consider that he will correctly create an image, correctly set up all the possibilities, and then restrict himself from any further system calls outside the capabilities mode.

And then you run dangerous things. But by the time the installation happened correctly, and you have no way to get out of this sandbox. So, I think you need to see how you can actually use the feature mode for sandbox applications.

Therefore, we talked a little about tcpdump . How do you isolate this process? Another interesting example is gzip , which compresses and unpacks files. So why are they worried about the sandbox? I think they’re worried that the decompression code could potentially be “buggy”, or there might be errors in memory management, buffer management during decompression, and so on.

Another interesting question is - why changes to gzip seem much more complicated than to tcpdump ? I think this is related to how the application is structured inside, isn't it? Therefore, if you have an application that simply squeezed one file or unpacked one file, then normally just launch it, without changing, in features mode. You simply give it a new standard to unpack something, and the standard provides unpacked data at the output, and this will work fine.

The problem, as is almost always the case with such sandbox methods, is that the application actually has a much more complex process logic. For example, gzip can compress several files at the same time, and so on. And in this case, you have some kind of lead process at the head of the application, which actually has additional rights to open several files, package them, and so on. And the basic logic often has to be another supporting process. In the case of gzip, the application was not structured in such a way that compression and decompression would act as separate processes. Therefore, they had to change the implementation of the gzip kernel and some of the structure of the application itself, so that besides just transferring the data to the unpacking function, they were sent via an RPC call or actually recorded into some kind of file descriptor. This was to prevent the emergence of third-party problems and perform unpacking with virtually no privileges. The only thing gzip could do was return the unpacked or compressed data to the process that called it.

Another thing that concerns homework is how do you actually use Capsicum in OKWS ? What do you think of it? Will this be helpful? Would the guys from OKWS be happy to upgrade to FreeBSD , because it's so much easier to use? How would you use Capsicum in FreeBSD ?

Audience: one could get rid of some strict restrictions.

Professor: yes, it is, we could completely replace them with the presence of a file descriptor directory and capabilities. You wouldn’t need to configure intricate chroot . Instead of using chroot with many little things, you could just set permissions carefully. You could just open exactly the files you need. So this seems like a big plus.

Next, in OKWS , you have an OK startup service that should start all parent processes. As soon as they die, the signal returns to okld to restart the “dead” process. And this thing would have to run root , because it must isolate the processes in the sandbox. But there are a number of things in OKWS that can be improved with Capsicum .

For example, you could give okld much less privileges. Because, for example, to get port 80 requires root-rights. But after that, you can safely put everything else in the sandbox, because root-rights are no longer needed. So this is pretty cool. Perhaps you can even delegate to the process the right to respond to requests from someone else, for example, the process monitoring system, which just has this process descriptor or process descriptor for the child process, and every time such a process crashes, it starts a new one. Therefore, I think that the ability to create a sandbox without root-rights is very useful.

Audience: you can give each process a file descriptor that only allows you to add entries to the log.

Professor: yes, and that's pretty cool. As we said last time, oklogd can “mess up” with the log file. And who knows what the kernel will allow it to do when it has a file descriptor for the log file itself. However, we can more accurately determine the capabilities of the file descriptor by giving it a log file and indicating that it can only write, but not search for anything. Thus, we get the append-only function if you are the only “writer” for this file. It is very convenient. You can give the process permissions to write to a file, but not read it, which is very difficult to do only with Unix permissions.

Capsicum ?

: , , , . , , , .

: , . Capsicum , , . .

: , , okld . , .

: , . . , , Capability , . , , , , , .

, OKWS . . , , , . .

, , «». , . , , , , . .

: «» , , ?

: , FreeBSD . , - , . FreeBSD Casper , , Capability . , «».

- , , , - , , Casper .

, «» . . , , , Casper - .

, , - . , Unix FD . , . , , .

, FreeBSD , DNS . DNS -, «». , tcpdump . tcpdump , IP-. , , DNS -.

, , DNS- DNS-, . Casper , DNS-.

, , , Capsicum? ? ? , , . Capsicum , ?

tcpdump GZIP , , . ?

: , , , . , Capability .

: , . Capsicum , , . , , . , , , , Capsicum , .

, «». , TCP — helper , , , , . , , , .

: , .

: , . . , . lth_start , . , , , - .

, , Capsicum . , , , . Unix - , .

, . , . , , . , .

, Chrome . , , , Unix, -, Unix .

, ?

: .

: . . , . , , , , .

Unix , . , . , . . , .

. Linux , setcall , , . , Capsicum , , Capsicum , . Linux setcall , . , , Linux .

, — , , , , . , - , «//», . Capsicum where you can run the process for a specific file, and not for the entire home directory.

Full version of the course is available here .

Thank you for staying with us. Do you like our articles? Want to see more interesting materials? Support us by placing an order or recommending to friends, 30% discount for Habr users on a unique analogue of the entry-level servers that we invented for you: The whole truth about VPS (KVM) E5-2650 v4 (6 Cores) 10GB DDR4 240GB SSD 1Gbps from $ 20 or how to share the server? (Options are available with RAID1 and RAID10, up to 24 cores and up to 40GB DDR4).

3 months for free if you pay for new Dell R630 for half a year - 2 x Intel Deca-Core Xeon E5-2630 v4 / 128GB DDR4 / 4x1TB HDD or 2x240GB SSD / 1Gbps 10 TB - from $ 99.33 a month , only until the end of August, order can be here .

Dell R730xd 2 times cheaper? Only we have 2 x Intel Dodeca-Core Xeon E5-2650v4 128GB DDR4 6x480GB SSD 1Gbps 100 TV from $ 249 in the Netherlands and the USA! Read about How to build an infrastructure building. class c using servers Dell R730xd E5-2650 v4 worth 9000 euros for a penny?

Source: https://habr.com/ru/post/418221/

All Articles