MIT course "Computer Systems Security". Lecture 6: "Opportunities", part 2

Massachusetts Institute of Technology. Lecture course # 6.858. "Security of computer systems". Nikolai Zeldovich, James Mykens. year 2014

Computer Systems Security is a course on the development and implementation of secure computer systems. Lectures cover threat models, attacks that compromise security, and security methods based on the latest scientific work. Topics include operating system (OS) security, capabilities, information flow control, language security, network protocols, hardware protection and security in web applications.

Lecture 1: "Introduction: threat models" Part 1 / Part 2 / Part 3

Lecture 2: "Control of hacker attacks" Part 1 / Part 2 / Part 3

Lecture 3: "Buffer overflow: exploits and protection" Part 1 / Part 2 / Part 3

Lecture 4: "Separation of privileges" Part 1 / Part 2 / Part 3

Lecture 5: "Where Security Errors Come From" Part 1 / Part 2

Lecture 6: "Opportunities" Part 1 / Part 2 / Part 3

Audience: can we conclude that there is one process for each opportunity?

')

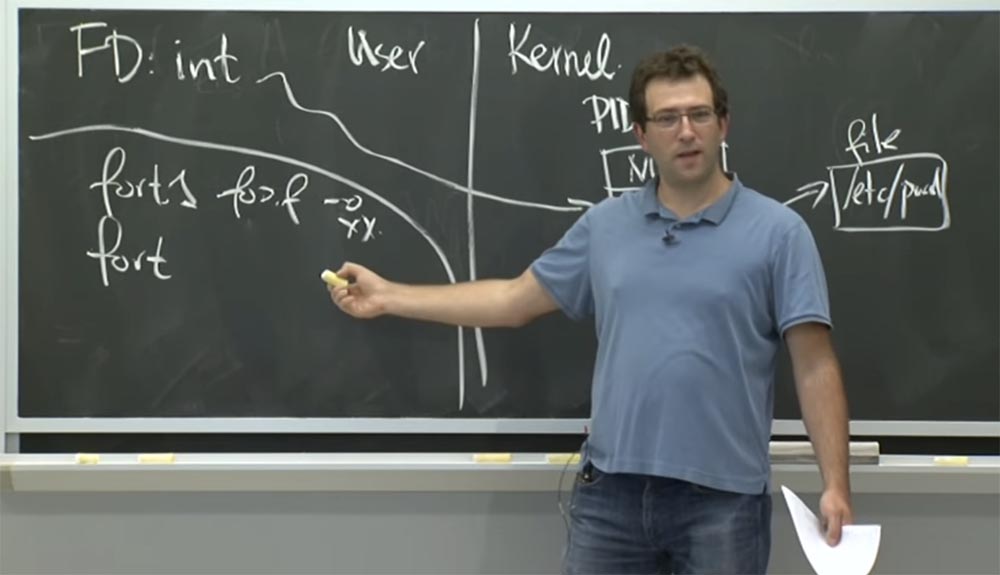

Professor: I doubt it. You can have as many processes as you want, for one possibility there may be several processes. Simply put, you don't necessarily need a separate process for each opportunity. Because here there is a process fort1 , which can open many files and can transfer many possibilities to the privileged component fort .

The reason that you think you need a separate process for every opportunity we are dealing with concerns this strange interaction between opportunities and external privileges.

Because fort1 has an external privilege. And what we are doing is basically transforming this external privilege into Capability in this process of fort1 . So if you have several different types of external privileges or several different privileges that you want to use with caution, then you will probably want a separate process with this privilege. And whenever you want to use a specific set of privileges, you will ask for the appropriate process to do the separation, and if you succeed, you will ask the process to give you back the Capability .

In fact, there was such a design of the operating system, which is completely based on capabilities and had no external privileges. And it's cool, but not very practical for use in a real system. It turns out that in reality you want not so much external privileges as an opportunity to call an object and tell someone about this object without transferring rights to this object without fail.

I may not know what privileges you may have with respect to a common document, but I want to inform you that we have this common document. If you can read it, read it. If you write it to him, great, write. But I do not want to transfer any rights to it. I just want to tell you: “Hey, here’s this thing, go, try it!” So this is an inconvenience in the world of opportunity, because it really forces you never to talk about objects without transferring rights to this object.

Therefore, it is important to know about this and use this feature in some parts of the system, but do not expect that the system security solution is exhausted by this.

Audience: Suppose that a process has the capabilities given to it by some other process, but it turns out that in relation to an object, it already has great capabilities. Can the process compare them to make sure they relate to the same object? Or will he use great opportunities?

Professor: the fact is that the process does not use the possibilities in an implicit way, so this is a very useful feature of the possibilities. You must absolutely definitely indicate which of the possibilities you are using. So think about it in terms of the file descriptor. Suppose I give you an open file descriptor for a particular file, and it is read only. Then someone else gives you another opportunity for other files, which may include this file. And the new feature allows you to read and write to files.

In this case, if you try to write to the first file, you will undoubtedly succeed, because an additional file descriptor will be opened for it, allowing not only reading, but also writing. So this is a kind of cool thing when you don’t need extra external privileges. You simply have all these possibilities, because people actually built such libraries and, in principle, they manage your capabilities for you. They kind of collect them. And when they try to perform an operation, they look for opportunities and find those that make it work.

This brings you back to the external control of the ambient authority that you tried to avoid. The positive feature of opportunities lies in the fact that this software design, which simplifies your life. This is a rarity in security solutions. This property makes it easier for you to write code that indicates exactly the privileges you want to use from a security point of view. And this is pretty easy to write code.

However, Capability is able to solve other problems. So, privilege management problems often appear when you need to run some kind of unreliable code. Because you really want to control what privileges you give, because otherwise there is a risk of misuse of any privileges you provide. And this is a slightly different point of view from which the authors of the article on Capsicum approach the possibilities. They, of course, are aware of the problem of external powers, but this is a slightly different problem that you can or cannot solve. But basically, they are concerned that they have a really large privileged application, and they worry that there will be errors in different parts of the source code of this application. Therefore, they would like to reduce the privileges of the various components of this application.

In this sense, the story is very similar to OKWS . So, you have a large application, you break it into components and limit the privileges for each component. This undoubtedly makes sense in OKWS . Are there any other situations where you might be concerned with the separation of privileges? I think that in their article they describe examples that I should try to perform, for example, tcpdump and other applications that analyze network data. Why are they so worried about applications that analyze network inputs? What happens in tcpdump ? What is the reason for their paranoia?

Audience: An attacker can control what is sent and what is called for execution, for example, packets.

Professor: yes, they really worry about these kinds of attacks and whether the attacker can really control the input data? Because it is quite problematic if you write C code that must process data structures. Obviously, you will perform many manipulations with pointers by copying bytes into arrays that allocate memory. At the same time it is easy to make a mistake with memory management, which will lead to rather disastrous consequences.

So this is the reason why they decided to parse the work of their network protocol and other things in the sandbox.

Another real-world example in which privilege sharing is needed is your browser. You will probably want to isolate your Flash plugin, or your Java extension, or something else. Because they represent a wide field for attacks, which are used quite aggressively.

So this seems like a reasonable plan. For example, if you are writing some piece of software, you want to check the behavior of its components in the sandbox. More generally, this refers to what you have downloaded from the Internet and are about to launch with less privileges. Is this the isolation style offered by Capsicum ? I could download some random screensaver or any game from the Internet. And I want to run them on my computer, but first make sure that they do not spoil everything that I have. Would you use Capsicum for this?

Audience: you can write a program for the sandbox in which you will use Capsicum .

Professor: right. And how would you use it? Well, you would simply enter sandbox mode with the cap_enter command , and then launch the program. Do you expect it to work? I think there will be a problem here. It will be connected with the fact that if the program does not expect that it will be isolated by Capsicum , then it may try to open the shared library, but it cannot do this, because it cannot open something like / lib / ... , because such is not allowed in Capability mode.

Therefore, these sandboxes methods should be used for those things for which the developer has provided that they can be performed in this mode. There are probably other sandbox methods that can be used for unmodified code, but then the requirements may change a little. Therefore, the creators of Capsicum are not very worried about backward compatibility. If we have to open files differently - we open them differently. But if you want to leave the existing code, you need something more, for example, a full-fledged virtual machine, so that you can run the code in it. Here the question arises - should we use virtual machines for the Capsicum sandbox?

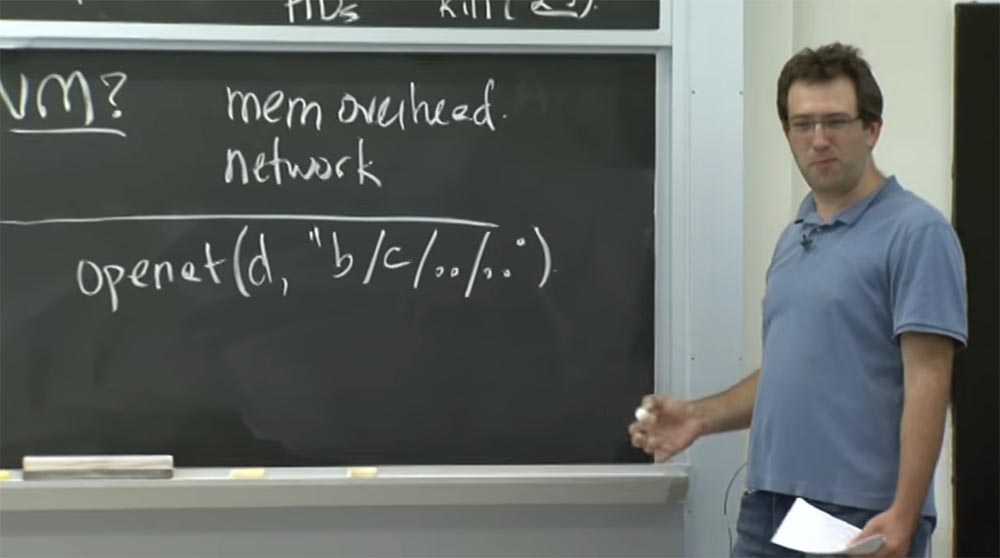

Audience: this may cause memory overruns.

Professor: yes it is. But what if we don’t care about memory? So, perhaps, virtual machines are very good, besides, they do not use a lot of memory. So why else should we not use a VM in Capsicum ?

Audience: difficult to control network activity.

Professor: Right! It is difficult to control what is happening on the network, because either you do not give the virtual machine access to the network, or you are connected to the network through NAT mode, or you use Preview or VMWare . But then your sandbox can access the entire Internet. Therefore, you will have to manage the network in more detail, perhaps setting firewall rules for a virtual machine and so on. This is not too good.

But what if you don't care about the network? Suppose you just have some video What to do if you are processing some simple video or analyzing tcpdump . In this case, you just start the virtual machine, it starts to parse your tcpdump packets and throws you back after the presentation that tcpdump wants to write to the user, because there is no real network I / O. So, is there any other reason?

Audience: because initialization costs are still high.

Professor: Yes, it can be the initial costs of running a virtual machine that reduce performance. So it's true.

Audience: well, you may still want to have rights to the database and the like.

Professor: yes. But more generally, this means that you have real data that you work with and is really difficult to share. Thus, virtual machines are indeed a much larger separation mechanism, due to which you cannot easily share things. So it’s good for situations where you have a completely isolated program that you want to run, and you don’t want to share any files, directories, processes and just let them work separately.

So this is great. This is probably, in some way, stronger isolation than the one that Capsicum provides, because there are fewer opportunities for things to go wrong. However, this isolation is not applicable in many situations where you want to use Capsicum . Because in Capsicum you can share files with great precision using the capabilities of the sandbox.

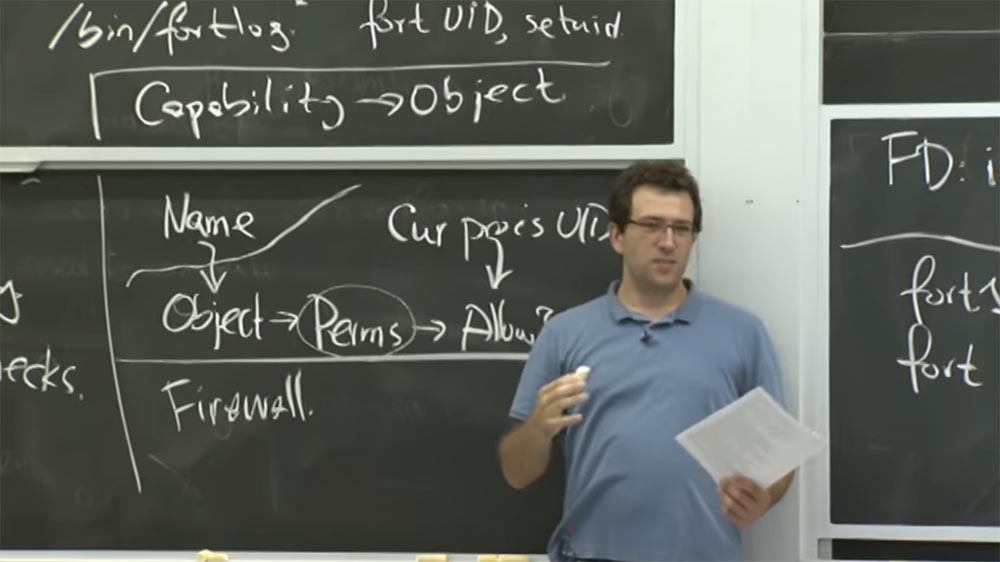

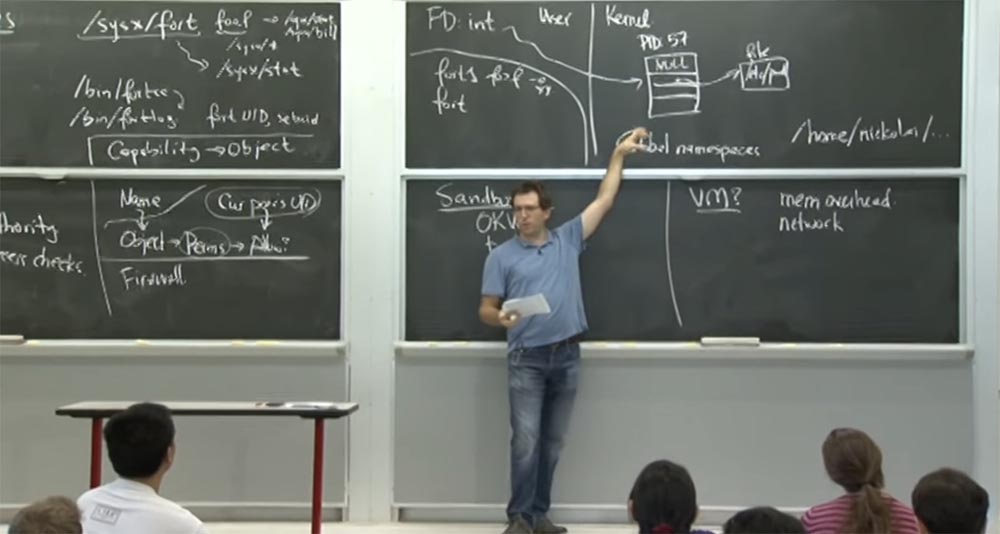

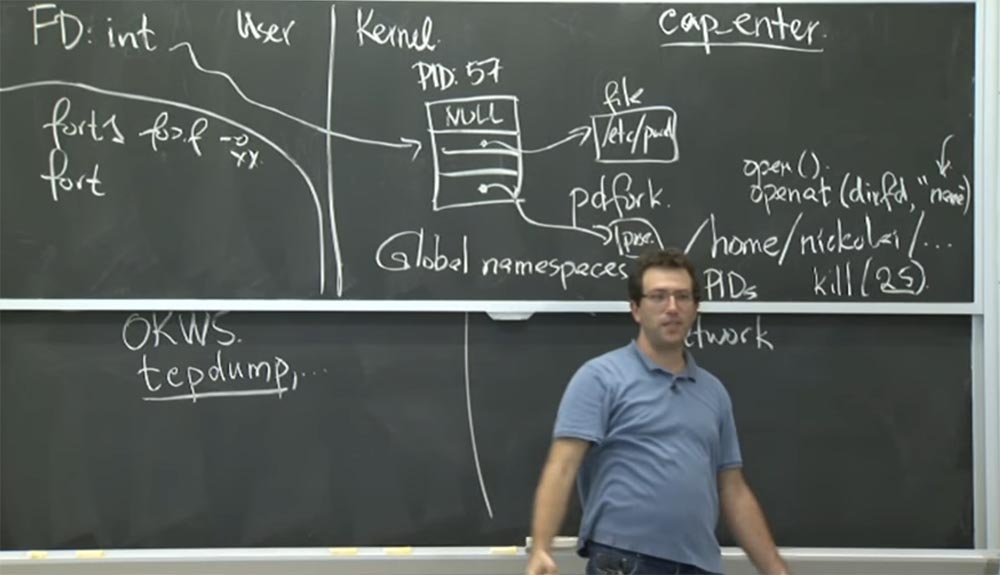

So let's take tcpdump and see why it is difficult to isolate it using the Unix mechanism. If you remember, in Capsicum, the way tcpdump works is that it opens some special sockets and then runs the parsing logic on network packets, after which it is printed on the user terminals. So, what is needed for a Unix- based tcpdump sandbox? Are your privileges limited? The problem with Unix is that the only way to really change privileges is to change the input data in the decision function, which decides whether or not you can access an object. And the only thing you can really change is the privilege of the process. This will mean that the process will be able to send the UID to someone else.

Or you can change the permissions for various objects that are on your system. In fact, you can use both of these solutions.

If you want to isolate tcpdump in a sandbox, you may have to select an additional user ID and switch to it during operation. But this is not a perfect plan, since you are not going to run multiple instances of tcpdump under the same user ID . Therefore, if I compromise one instance of tcpdump , it will not mean that I want to allow an attacker to use this factor to control other instances of tcpdump running on my machine. So potentially this is a bad decision - use the uid in this case.

Another problem is that in Unix you must have root-permissions in order to change the user ID, its privileges, process or something else or switch them to something else. This is also bad.

And another problem is that, no matter what your ID is , there may be open access files. So there may be a whole group of readable or writable files on your system, for example, a password file. After all, no matter what ID you have, the process will still be able to read this password. So this is also not very nice.

Thus, to organize a sandbox in Unix , you probably have to perform both actions - change the UID and carefully review the permissions for all objects to make sure that you do not have uninsulated open files that are sensitive to being overwritten or available for reading by a hacker. I think that with this you get another mechanism that you can use. If you submit it to the end, you may see difficulty in sharing files or sharing directories.

Now let's see how Capsicum is trying to solve this problem. Here, as soon as we enter the sandbox mode, everything will be available only through features. Therefore, if you do not have the Capability capability, you simply cannot access any objects.

These guys in the article make a huge bet on the global namespace. So what is this global namespace, and why are they so worried about it?

With them, the file system itself is a kind of vivid example of a global namespace. You can write a slash and list behind it any file you want. For example, go to someone in the home directory, for example, / home / nickolai / ... Why is it bad? Why are they against the global Capsicum namespace? What do you think?

Audience: if you have the wrong permissions, then you can get into trouble if you use credentials.

Professor: yes. The problem is that this is still Unix . Thus, there are still regular permissions on the file. Therefore, it is possible, if you really want to isolate some process in the sandbox, you cannot read or write anything in the system at all. But if you manage to find in the home directory of some stupid user file with the ability to write, it will be quite unpleasant for the client "sandbox".

More generally, their idea was to accurately list all the objects that the process has. Because you can simply list all the features in the file descriptor table or in any other place where opportunities are stored for you. And this is the only thing that can be touched by the process.

But if you have access to the global namespace, then this is potentially impracticable. Because even if you have a limited set of features, you could still start the line with a slash and write some new file, and you will never know the set of operations or objects to which this process can access.

That is why they are so worried about the global namespace, because it contradicts their goal of precisely controlling everything that the sandbox process should have access to. Thus, they attempted to eliminate global namespaces through a variety of kernel changes in FreeBSD . In their case, the kernel had to make sure that all operations go through some possibilities, namely, through a file descriptor.

Let's check if we really need kernel changes. What if we just do this in the library? After all, we are implementing Capsicum , which already has a library. And all we do is change all these functions, such as “open, read, write”, to use only the capabilities of Capability . Then all operations will go through some possibilities, search for them in the file table, and so on. Will it work?

Audience: you can always make a syscall system call.

Professor: yes. The problem is that there was a set of system calls that the kernel accepts, and even if you implement a good library, this does not prevent the possibility that a bad or compromised process will make the system call directly. Therefore, you must somehow strengthen the core.

In the compiler, the threat model is not in the compromised compiler process and not in arbitrary code, but in the inattention of the programmer. So if the developer of the program does not make mistakes and does the right thing, then the library will probably be quite enough.

On the other hand, if we are talking about a process that can execute arbitrary code and try to circumvent our mechanisms in any possible way, then we must have clear enforced boundaries. But since the library does not provide any strong coercion, the core can go on about the process.

What do they actually do in terms of changes in the core? The first is the system call, which they call cap_enter . And what happens when you run cap_enter ? What happens if you embed it in your process?

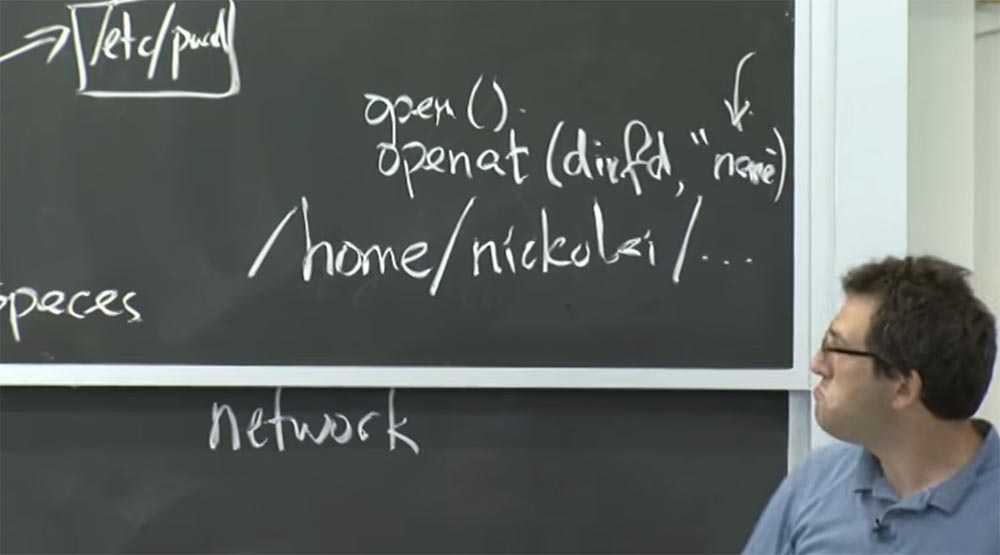

, , . , , , . cap_enter , open () , openat .

Unix- , , open , openat , , , — : openat (dirfd,“name) . openat «name» , .

, Capability open , , , . . - ? -, ? , – . , ?

: , .

: . , , . , , . , . , , . -, .

, , , , , . , , .. , , , . ?

. ? , Unix PID . , kill (25) PID = 25 . , . Capsicum ? ?

: .

: . -, . , Unix , . , PID , , fork , pdfork , « ». , - .

. , . , - : « «» , , , , ». . , , - .

, , , . , . , , .

. , «-» . , openat , «-». , «-», .

? , «-» ?

: , , . , Capability .

: , .

: , , - …

: .

: - .

: , . «-» ? , openat - b/c/../.. ?

, , ? - , . , , , openat (d, “b/c/../..) «c» , - .

. , , , . «-», . , , . , , . , UID - ?

: , .

: , . , , «» UID ? : cap_enter UID . , . ? ?

: , UID , , , , , - .

: , . «» UID . , , UID. It turns out that I have hundreds of running processes in my computer, but I have no idea what it is. Therefore, the destruction of a UID is not a good plan for management purposes.

54:14 min

Continued:

MIT course "Computer Systems Security". Lecture 6: "Opportunities", part 3

Full version of the course is available here .

Thank you for staying with us. Do you like our articles? Want to see more interesting materials? Support us by placing an order or recommending to friends, 30% discount for Habr users on a unique analogue of the entry-level servers that we invented for you: The whole truth about VPS (KVM) E5-2650 v4 (6 Cores) 10GB DDR4 240GB SSD 1Gbps from $ 20 or how to share the server? (Options are available with RAID1 and RAID10, up to 24 cores and up to 40GB DDR4).

Dell R730xd 2 times cheaper? Only we have 2 x Intel Dodeca-Core Xeon E5-2650v4 128GB DDR4 6x480GB SSD 1Gbps 100 TV from $ 249 in the Netherlands and the USA! Read about How to build an infrastructure building. class c using servers Dell R730xd E5-2650 v4 worth 9000 euros for a penny?

Source: https://habr.com/ru/post/418219/

All Articles