Intel 8086 - the processor that opened the era

How the legend was created

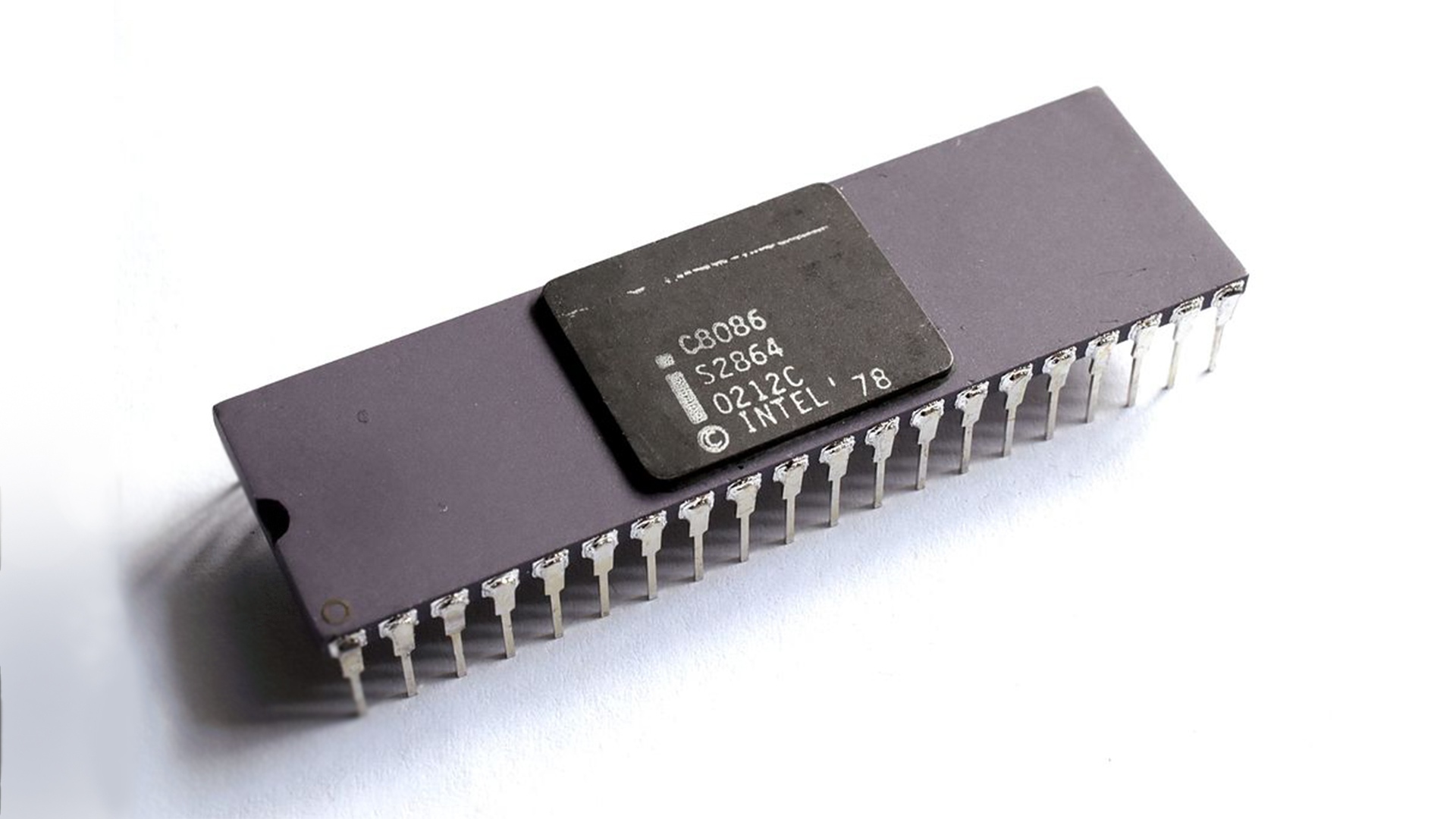

Today, in 2018, we celebrate the fortieth anniversary of what is perhaps the key processor in the history of personal computers: the Intel 8086.

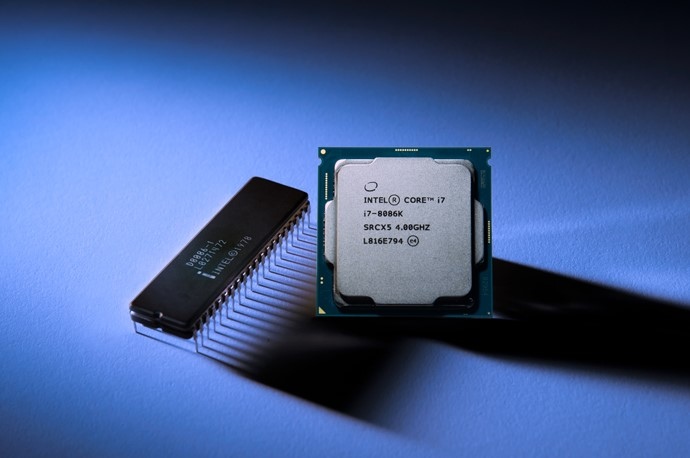

The era of the x86 architecture began with it, and that architecture laid the foundation for processor development for decades to come. We owe to it the rise of the computer as a personal device accessible to every user. In honor of the 40th anniversary of the processor with which Intel began its transformation into a multi-billion dollar corporation, the company presented its fans with a small symbolic gift: the anniversary i7-8086K, the first processor in Intel history capable of reaching 5 GHz out of the box.


But today we will not sing the praises of the engineers behind today's leading processors. Instead we will return to the distant past, to 1976, where the story of the Intel 8086 began. And it began with a completely different processor.

In 1976, Intel set its engineers a serious task: to create the world's first microprocessor that supported multitasking and had a memory controller built into the chip. Today these features can be found even in the most affordable processors on the market, but 42 years ago they promised to leap over an entire era. Intel planned to move to 32-bit computing at a time when 8-bit systems dominated and even 16-bit systems were still far off. Unfortunately, or fortunately, the ambitions of Intel's executives ran into harsh reality: several postponements, technological problems, and the realization that the technology of 1976 had not yet advanced far enough to bring such bold ideas to life. Most importantly, Intel was so carried away by building what would be called an over-engineered architecture that it overlooked practicality from the software point of view. It was this impracticality and deliberate complexity that was criticized at a meeting by a visiting expert named Stephen Morse, a 36-year-old microelectronics engineer who at the time specialized in software. Intel, however, was in no hurry to act on the criticism, and Morse's notes were shelved.

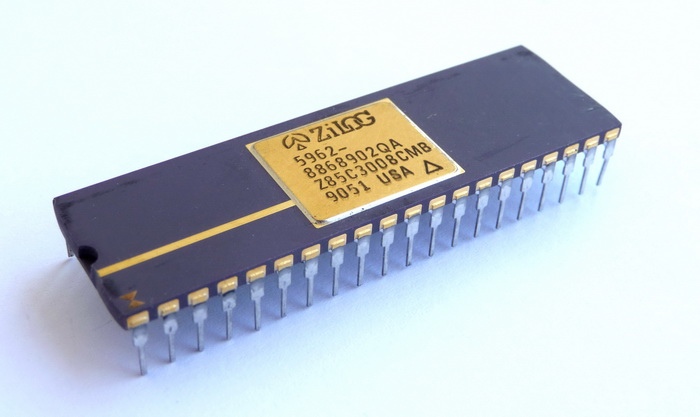

As it turned out later, they were extremely useful. In July 1976 a small company called Zilog introduced its Z-80 processor, which was in effect a corrected and improved take on the Intel 8080. Zilog had been founded by Federico Faggin, a creator of the Intel 4004 and Intel 8008, together with Intel manager Ralph Ungermann and another 4004 developer, the Japanese engineer Masatoshi Shima.

Having improved the architecture of the original Intel processor, the Zilog team offered an inexpensive and efficient chip that was quickly embraced by many hardware manufacturers and the leading platforms of the time. The Z-80 formed the basis of the legendary ZX Spectrum and was also installed in the equally well-known Commodore 128 as a coprocessor. The Z-80 was incredibly successful in many parts of the world, and this success could not go unnoticed: Intel urgently decided that the Z-80 needed a worthy competitor.

It was then that the company's leaders remembered Stephen Morse's comments and offered him the chance to lead the creation of a fundamentally new processor designed to compete with Zilog's new product. Intel saw no particular reason to constrain the project. At the time everyone assumed the new processor would be a quick response to the Z-80 and would be forgotten within a few years, so Morse got the green light for any experiments. His insistence that the processor be built around working efficiently with software was, as it turned out later, the key to the development of the entire industry.

In May 1976, Stephen Morse began work on the architecture of the new processor. In essence, the task assigned to him was simple. The new 16-bit chip had to deliver a significant speed increase over the 8-bit 8080, so it had to differ from it in a number of ways. But Intel also wanted its existing customers to come back. One way to achieve this was to offer an upgrade path: a system designed for the less powerful processor should keep working after that processor was replaced with the new one. Ideally, this meant the new processor had to be compatible with any program written for the 8080.

Morse had to start from the 8080 design, in which the processor assigned an "address" to each place where a number was stored, like labels in a filing system. Addresses were 16-bit binary numbers, which made it possible to designate 65,536 different addresses. This ceiling had been acceptable when developers were forced to use memory sparingly. Now, however, customers needed more, and they insisted on breaking the 64 KB barrier.

In July 1978, a new processor, called the Intel 8086, appeared on the market.

Its launch was neither a sensation nor an incredible success. At first the processor reached the shelves inside a few budget computers that never became popular, and it was also used in various terminals. A little later it formed the basis of a NASA microcontroller, where it was used to control diagnostic systems for rocket launches until the early 2000s.

Morse left Intel in 1979, just before the company introduced the Intel 8088, a microprocessor almost identical to the 8086 that stayed compatible with 8-bit systems by splitting each 16-bit bus transfer into two cycles. Morse himself called this processor a "castrated" version of the 8086.
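The cost of the 8088's narrower bus can be illustrated with a little arithmetic. The sketch below is only a simplified model, not a description of real bus timing: it assumes an aligned 16-bit operand, and the function names `bus_cycles` and `split_word` are hypothetical.

```python
import math


def bus_cycles(operand_bits: int, bus_width_bits: int) -> int:
    """Bus cycles needed to move one operand over a data bus of the given width."""
    return math.ceil(operand_bits / bus_width_bits)


def split_word(word: int) -> tuple:
    """Split a 16-bit word into (low byte, high byte), i.e. two 8-bit transfers."""
    return word & 0xFF, (word >> 8) & 0xFF


# Simplified comparison for one aligned 16-bit word
print("8086 (16-bit bus):", bus_cycles(16, 16), "cycle")
print("8088 (8-bit bus): ", bus_cycles(16, 8), "cycles")
print([hex(b) for b in split_word(0x1234)])
```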

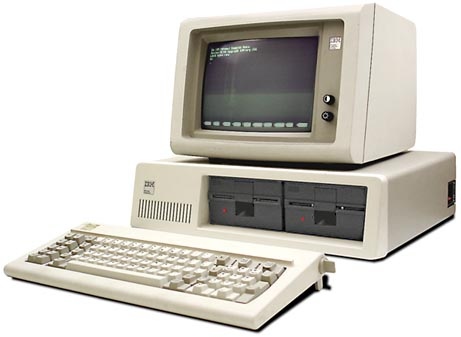

The 8088 earned its legendary status later, when in 1980 IBM first considered conquering the personal computer market with a fairly inexpensive machine built from mid-range components. It was the IBM 5150, better known as the IBM PC, that was based on the 8088 processor (in essence the same 8086), and thanks to it Intel became widely known even among ordinary users. The Motorola 68000 (the basis of the first Apple Macintosh) had also been a candidate for the 8088's place, but IBM preferred Intel.

The IBM PC quickly became the dominant force in the computer market, and Intel, following the "next-best" logic, went on producing processors (the 80186, 80286, 80386, 80486, Pentium, and so on) built on the very foundation that Stephen Morse had laid in the 8086. It is thanks to the last two digits of these model numbers that the architecture became known as "x86", and the incredible popularity of IBM computers brought Intel huge profits and brand recognition.

Architectural features of the 8086

Architecturally, the Intel 8086 relied heavily on the experience of developing the 8080 processor and its enhanced counterpart, the 8085, which entered the market in the summer of 1976. Despite some parallels, the 8086 was the company's first 16-bit processor. It had 16 data lines and 20 address lines, enough to address up to 1 MB of memory, and a wide instruction set that included, among other things, multiplication and division. A distinctive feature of the 8086 was its two modes, Minimum and Maximum: the former was meant for classic single-processor systems, the latter for systems with several processors.
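The jump from 64 KB to 1 MB follows directly from the number of address lines. As a small illustrative sketch (the helper name `addressable_bytes` is hypothetical), each extra address line doubles the number of distinct byte addresses:

```python
def addressable_bytes(address_lines: int) -> int:
    """Number of distinct byte addresses reachable with this many address lines."""
    return 2 ** address_lines


# 16-bit addresses (as on the 8080) versus the 8086's 20 address lines
for name, lines in (("8080", 16), ("8086", 20)):
    size = addressable_bytes(lines)
    print(f"{name}: {lines} address lines -> {size} bytes ({size // 1024} KB)")
```

Four additional address lines therefore give 16 times more addressable memory.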

The Intel 8086 was the first to feature an instruction queue, which could hold up to six bytes of instructions fetched from memory ahead of time, significantly reducing the time needed to process them. Its 16-bit nature was not limited to a few components: the 8086 had a 16-bit ALU, 16-bit registers, and 16-bit internal and external data buses, thanks to which the system worked much faster than with earlier Intel processors.

Of course, with so many innovations the 8086 was much more expensive than its predecessor, but buyers still had a choice: Intel offered the new product in several versions that differed in clock frequency, ranging from 5 to 10 MHz.

Internally, the Intel 8086 microprocessor consisted of two hardware modules: an execution module and a bus interface module. The execution module told the bus interface module where to fetch instructions and data from, and then decoded and executed them. Its job was to process data using the instruction decoder and the ALU. The module itself had no direct connection to the system buses and worked exclusively through the bus interface module.

The execution module contained the ALU, which performed arithmetic and logical operations such as multiplication, division, addition, and subtraction, or OR, AND, and NOT. There was also a 16-bit flag register that recorded the state of the processor and of the results of operations. Nine flags were defined in total: six status flags and three system flags that controlled how the device operated.

The status flags were the carry flag, parity flag, auxiliary carry flag, zero flag, sign flag, and overflow flag. The system flags were the trace flag, the interrupt enable flag, and the direction flag.
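To make the flag register more concrete, here is a minimal sketch that lays out the nine flags at their documented bit positions in the 16-bit register and derives the six status flags for an 8-bit addition. The class `Flags` and the function `add8` are hypothetical names for illustration, and the model covers only addition, not the full instruction set.

```python
from enum import IntFlag
from typing import Tuple


class Flags(IntFlag):
    CF = 1 << 0    # carry
    PF = 1 << 2    # parity (set when the low byte has an even number of 1 bits)
    AF = 1 << 4    # auxiliary carry (carry out of bit 3)
    ZF = 1 << 6    # zero
    SF = 1 << 7    # sign
    TF = 1 << 8    # trace (single-step)
    IF = 1 << 9    # interrupt enable
    DF = 1 << 10   # direction
    OF = 1 << 11   # overflow


STATUS_FLAGS = Flags.CF | Flags.PF | Flags.AF | Flags.ZF | Flags.SF | Flags.OF
SYSTEM_FLAGS = Flags.TF | Flags.IF | Flags.DF


def add8(a: int, b: int) -> Tuple[int, Flags]:
    """Add two 8-bit values and compute the six status flags for the result."""
    total = a + b
    result = total & 0xFF
    flags = Flags(0)
    if total > 0xFF:
        flags |= Flags.CF
    if bin(result).count("1") % 2 == 0:
        flags |= Flags.PF
    if (a & 0x0F) + (b & 0x0F) > 0x0F:
        flags |= Flags.AF
    if result == 0:
        flags |= Flags.ZF
    if result & 0x80:
        flags |= Flags.SF
    # Signed overflow: operands share a sign that differs from the result's sign
    if (~(a ^ b) & (a ^ result)) & 0x80:
        flags |= Flags.OF
    return result, flags


result, flags = add8(0x7F, 0x01)
print(hex(result), flags)
```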

In addition to the flags, the execution module contained 8 general-purpose registers that were used to move data over the 16-bit bus. Compatibility with the previous generation of software for 8-bit systems was preserved, because four of them (AX, BX, CX, DX) could be used either as whole 16-bit registers or as separately addressable low (AL, BL, CL, DL) and high (AH, BH, CH, DH) 8-bit halves. It was this emphasis on software compatibility with earlier platforms that made the x86 architecture so important and allowed it to serve as the basis for most subsequent processors.
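The relationship between a 16-bit register such as AX and its 8-bit halves AH and AL can be sketched as follows. This is an illustrative model only; the class name `Reg16` and its attribute names are hypothetical.

```python
class Reg16:
    """A 16-bit register whose low and high bytes can be accessed separately."""

    def __init__(self, value: int = 0) -> None:
        self.x = value & 0xFFFF  # the full 16-bit value, e.g. AX

    @property
    def low(self) -> int:  # e.g. AL
        return self.x & 0xFF

    @low.setter
    def low(self, value: int) -> None:
        self.x = (self.x & 0xFF00) | (value & 0xFF)

    @property
    def high(self) -> int:  # e.g. AH
        return self.x >> 8

    @high.setter
    def high(self, value: int) -> None:
        self.x = (self.x & 0x00FF) | ((value & 0xFF) << 8)


ax = Reg16(0x1234)
ax.low = 0xFF          # writing AL leaves AH untouched
print(hex(ax.x))       # 0x12ff
```

Writing to one half never disturbs the other, which is what lets 8-bit style code operate on the halves while 16-bit code uses the full register.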

Finally, the module also held 16-bit pointer registers, which stored the address, within a memory segment, of the data needed for an operation. The remaining functional parts belonged to the adjacent bus interface module.

The bus interface module contained many more functional components. It was responsible for fetching instructions and passing them to the execution module, for reading data from memory and from all available I/O ports, and for writing data to memory and to those ports. Because the execution module had no direct connection to the system bus, the two modules communicated over the internal data bus.

This module contains one of the key architectural features of the 8086: the instruction queue. The queue can buffer up to 6 bytes of instructions and hands them over to the execution module on request. This is a simple form of pipelining: the next instruction is being fetched and prepared while the previous one is still being executed.
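The interplay between the two modules around the queue can be sketched with a toy model. It is a deliberate simplification: it fetches one byte at a time and ignores bus timing, and the class `PrefetchQueue` with its methods `fill` and `take` is hypothetical, chosen only to illustrate the idea of a 6-byte buffer that is refilled while earlier bytes are consumed.

```python
from collections import deque

QUEUE_SIZE = 6  # bytes, as stated in the text


class PrefetchQueue:
    def __init__(self, memory: bytes, start: int = 0) -> None:
        self.memory = memory
        self.fetch_addr = start   # next address the bus interface side will read
        self.queue = deque()

    def fill(self) -> int:
        """Bus interface side: prefetch until the queue is full or memory ends."""
        fetched = 0
        while len(self.queue) < QUEUE_SIZE and self.fetch_addr < len(self.memory):
            self.queue.append(self.memory[self.fetch_addr])
            self.fetch_addr += 1
            fetched += 1
        return fetched

    def take(self, count: int) -> bytes:
        """Execution side: consume the next `count` instruction bytes."""
        if count > len(self.queue):
            raise ValueError("not enough prefetched bytes")
        return bytes(self.queue.popleft() for _ in range(count))


q = PrefetchQueue(bytes(range(10)))
q.fill()                 # queue now holds bytes 0..5
print(q.take(2).hex())   # execution side consumes a 2-byte instruction
q.fill()                 # freed slots are refilled with bytes 6 and 7
print(len(q.queue))
```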

The module also holds 4 segment registers, which store the base addresses of the memory segments containing instructions and their accompanying data, and thus give the processor access to the desired segments of memory. Finally, it contains the 16-bit instruction pointer (IP), which holds the address of the next instruction intended for the execution module.
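Segment registers are how 16-bit registers reach the full 20-bit address space. The text above does not spell out the calculation, so the following is a sketch based on the well-documented 8086 scheme: the 16-bit segment value is shifted left by four bits and added to a 16-bit offset such as IP, and the result wraps at 20 bits. The function name `physical_address` is hypothetical.

```python
def physical_address(segment: int, offset: int) -> int:
    """Combine a 16-bit segment and a 16-bit offset into a 20-bit physical address."""
    segment &= 0xFFFF
    offset &= 0xFFFF
    # Shift the segment by 4 bits (multiply by 16), add the offset, wrap at 1 MB
    return ((segment << 4) + offset) & 0xFFFFF


print(hex(physical_address(0x1234, 0x0010)))  # 0x12350
print(hex(physical_address(0xF000, 0xFFF0)))  # 0xffff0
```

Because many segment and offset pairs map to the same physical address, programs written around 64 KB segments could be placed anywhere in the 1 MB space.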

The Intel 8086 was the company's first 16-bit processor. It shipped in a 40-pin DIP package, which, along with many of its other features, became one of the standards of microelectronics in the years that followed.

Impact and Legacy

When Stephen Morse was working out the concept of a small side-project processor within the walls of Intel, he could hardly have imagined that he was about to create a historic microprocessor. The debut of the Intel 8086 was modest and met a mixed reception, but its younger brother, the 8088, rose to fame as part of the IBM PC and PC/XT, bringing Intel recognition and huge profits.

The x86 architecture formed the basis of all further Intel processors, as the company came to appreciate the convenience and versatility of Morse's "software first, hardware second" concept. Each new processor was built on the foundation of the previous one, acquiring new technologies, instructions, and blocks, but in essence it differed little from the 8086.

And even today, looking at the i7-8086K, it is worth remembering that somewhere deep inside it are still the roots of the very processor that first saw the light 40 years ago and opened the x86 era.

The author of the text is Alexander Lis.


Source: https://habr.com/ru/post/417983/
