Reduce the number of layers of architecture from 5 to 2

While working on several open-source projects, I decided one day to simplify my life and wrote an upstream module for nginx that let me remove the cumbersome layers of a multi-layer architecture. It was a fun experience that I want to share in this article. My code is publicly available here: github.com/tarantool/nginx_upstream_module. You can build it from source or download the Docker image: hub.docker.com/r/tarantool/tarantool-nginx.

On the agenda:

- Introduction and theory.

- How to use these technologies.

- Performance evaluation.

- Useful links.

Introduction and theory


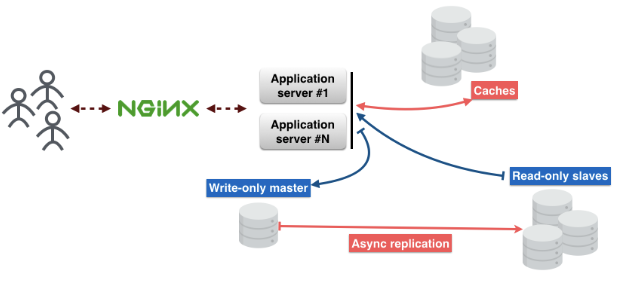

Here is a standard microservice architecture. User requests pass through nginx to the application server, which holds the business logic that users interact with.

The application server does not store the state of objects, so the state has to live somewhere else. A database serves that purpose. And do not forget the cache, which reduces latency and speeds up content delivery.

Split into layers, it looks like this:

1st layer - nginx.

2nd layer - application server.

3rd layer - cache.

4th layer - database proxy. This proxy is required to ensure fault tolerance and to maintain a persistent connection to the database.

5th layer - database server.

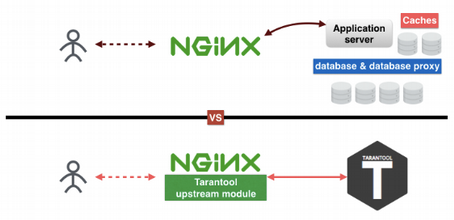

Thinking about these layers, I figured out how to eliminate some of them. Why? There are many reasons. I like simple, understandable things; I do not like supporting a large number of different systems in production; and last but not least, the fewer the layers, the fewer the points of failure. As a result, I wrote the Tarantool upstream module for nginx, which helped reduce the number of layers to two.

How does Tarantool help reduce the number of layers? The first layer is still nginx. The second, third and fifth layers are replaced by Tarantool. The fourth layer, the database proxy, now lives in nginx. The trick is that Tarantool is a database, a cache and an application server, three in one. My upstream module connects nginx and Tarantool and lets them work together smoothly without the other three layers.

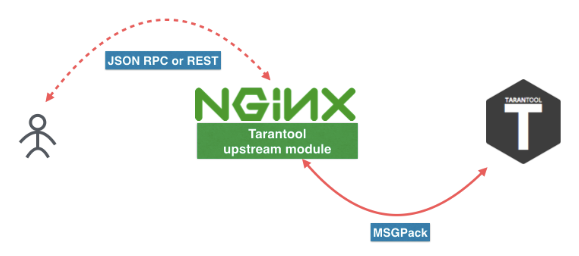

This is what the new microservice looks like. The user sends a REST or JSON RPC request to nginx, which runs the Tarantool upstream module. The module can connect directly to a single Tarantool instance, or it can balance the load across multiple Tarantool servers. Between nginx and Tarantool we use an efficient protocol based on MessagePack. For more information, see this article.

You can also follow these links to download Tarantool and the nginx module. But I would advise you to install them through your distribution's package manager or to use the Docker way (docker pull tarantool/tarantool-nginx).

- Docker images: hub.docker.com/r/tarantool/tarantool, Tarantool NginX upstream module

- Binary packages: Tarantool - Download

- Source code: Tarantool, tarantool/nginx_upstream_module

How to use these technologies

Here is an example of the nginx.conf file. As you can see, this is an ordinary nginx upstream. The tnt_pass directive tells nginx which upstream to send the requests for this location to, in this case the Tarantool upstream.

nginx-tnt.conf

    http {
        # upstream
        upstream tnt {
            server 127.0.0.1:3301;
            keepalive 1000;
        }

        server {
            listen 8081;

            # gateway
            location /api/do {
                tnt_pass_http_request parse_args;
                tnt_pass tnt;
            }
        }
    }

Here are the links to the documentation:

nginx.org/en/docs/http/ngx_http_upstream_module.html

github.com/tarantool/nginx_upstream_module/blob/master/README.md

nginx and Tarantool are now wired together, so what next? Now you need to write the handler function for our service and put it in a file. I put it in the "app.lua" file.

Here is the link to the Tarantool documentation: tarantool.io/ru/doc/1.9/book/box/data_model/#index

    -- Bootstrap Tarantool
    box.cfg { listen = '*:3301' }

    -- Grants
    box.once('grants', function()
        box.schema.user.grant('guest', 'read,write,execute', 'universe')
    end)

    -- Global variable
    hello_str = 'Hello'

    -- Handler function called through nginx
    function api(http_request)
        local str = hello_str
        if http_request.method == 'GET' then
            str = 'Goodbye'
        end
        -- Leading space so the result reads "Goodbye world!"
        return 'first', 2, { str .. ' world!' }, http_request.args
    end

Now consider the Lua code.

I saved this code to a file called "app.lua"; it is started by passing the file to the tarantool binary. Here is what each part does:

- box.cfg {} tells Tarantool to start listening on port 3301; it accepts other parameters as well.

- box.once tells Tarantool to call a function only once.

- function api() is the function I will call shortly. It takes an HTTP request as its first argument and returns an array of values.
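Since the handler is plain Lua, its return values can be inspected without nginx or a running Tarantool. The following is a minimal sketch, not part of the module: it uses a standalone copy of the handler logic, and the request table is hypothetical, containing only the two fields the handler reads (method and args). It collects the multiple return values into one table, which is roughly the "array of values" described above.

```lua
-- Standalone copy of the handler logic; runs under plain Lua or LuaJIT.
-- There is no box.cfg here, so no Tarantool instance is required.
local hello_str = 'Hello'

local function api(http_request)
  local str = hello_str
  if http_request.method == 'GET' then
    str = 'Goodbye'
  end
  return 'first', 2, { str .. ' world!' }, http_request.args
end

-- Hypothetical request table (an assumption): only the fields the handler uses.
local request = { method = 'GET', args = { arg_1 = '1', arg_2 = '2' } }

-- Collect the multiple return values into a single array-like table.
local result = { api(request) }

print(#result)
print(result[1], result[2], result[3][1], result[4].arg_1)
```

Changing method to anything other than 'GET' makes the third value start with the global greeting instead of 'Goodbye'.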

Start it from the shell:

    $ tarantool app.lua

Now call our function with a GET request. I use wget for this. By default, wget saves the response to a file, so I use cat to read the data from that file.

    $ wget '0.0.0.0:8081/api/do?arg_1=1&arg_2=2'
    $ cat do*
    {
      "id": 0,       # unique identifier of the request
      "result": [    # what our Tarantool function returns
        ["first"], [2], [{
          "request": {"arg_2": "2", "arg_1": "1"},
          "1": "Goodbye world!"
        }]
      ]
    }

Performance evaluation

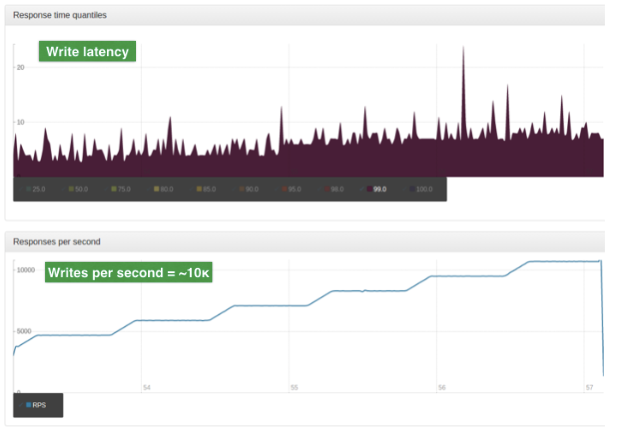

The evaluation was run on production data. The input is a large JSON object with an average size of 2 KB. The test machine is a single server with a 4-core CPU, 90 GB of RAM and Ubuntu 14.04.1 LTS.

For this test we use only one nginx worker. It acts as a balancer with a simple round-robin algorithm and distributes the load between two Tarantool nodes. The load is scaled using sharding.
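To illustrate what round-robin balancing means here, a minimal Lua sketch follows. It only illustrates the idea and is not how nginx implements it internally; the two node addresses are hypothetical.

```lua
-- Minimal round-robin picker: each call returns the next node in turn,
-- wrapping back to the first node after the last one.
local function round_robin(nodes)
  local i = 0
  return function()
    i = i % #nodes + 1
    return nodes[i]
  end
end

-- Hypothetical addresses for the two Tarantool nodes (an assumption).
local next_node = round_robin({ '127.0.0.1:3301', '127.0.0.1:3302' })

-- Four consecutive requests alternate between the two nodes.
for _ = 1, 4 do
  print(next_node())
end
```

With two nodes, every second request lands on the same node, so each node receives half of the traffic regardless of request content.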

These graphs show the number of read operations per second. The upper graph shows the latency (in milliseconds).

And these graphs show the number of write operations per second. The upper graph shows the latency (in milliseconds).

Impressive!

In the next article I will talk in detail about REST and JSON RPC.

English version of the article: hackernoon.com/shrink-the-number-of-tiers-in-a-multitier-architecture-from-5-to-2-c59b7bf46c86

Source: https://habr.com/ru/post/417829/
