Package Manager for Kubernetes - Helm: Past, Present, Future

Translator's note: With this article we begin a series of publications about Helm, the package manager for Kubernetes that we actively use in our daily work. The original author is Matt Butcher, one of the founders of the Helm project, who works on open source projects at Microsoft and has written 8 technical books (including “Go in Practice”). The article is supplemented with our own comments (extensive in places) and will soon be followed by new, more practical notes on Helm. UPDATE (09/03/2018): the follow-up is “Practical acquaintance with the package manager for Kubernetes - Helm”.

In June, Helm moved from the status of the leading project Kubernetes to the Cloud Native Computing Foundation (CNCF). CNCF is becoming the parent organization for the best-of-its-kind open source cloud native tools. Therefore, it is a great honor for Helm to become part of such a fund. And our first significant project under the auspices of CNCF is truly ambitious: we create Helm 3.

')

Helm originally appeared as the open source project of the company Deis. It was modeled in the likeness of Homebrew (package manager for macOS - approx. Transl. ) , And the challenge for Helm 1 was a lightweight opportunity for users to quickly install their first workloads on Kubernetes. The official announcement of Helm was held at the first KubeCon San Francisco conference in 2015.

Note Transl .: From the first version, which was called dm (Deployment Manager), YAML syntax was chosen to describe Kubernetes resources, and Jinja templates and Python scripts were supported when writing configurations.

A simple web application template could look like this:

When describing the components of the application being rolled out, the name of the used template and the necessary parameters of this template are indicated. In the example above, the

Already in this version, you can use resources from the general knowledge base, create your own template repositories and build complex applications using template parameters and nesting.

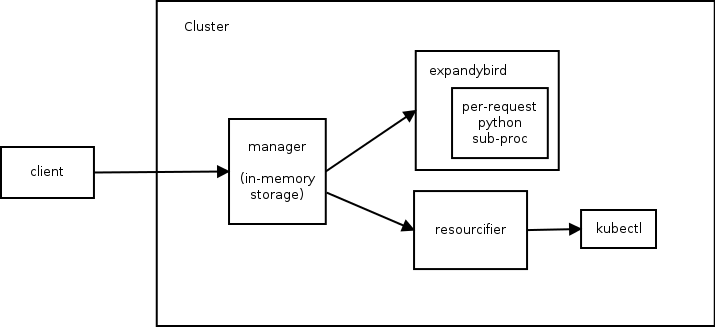

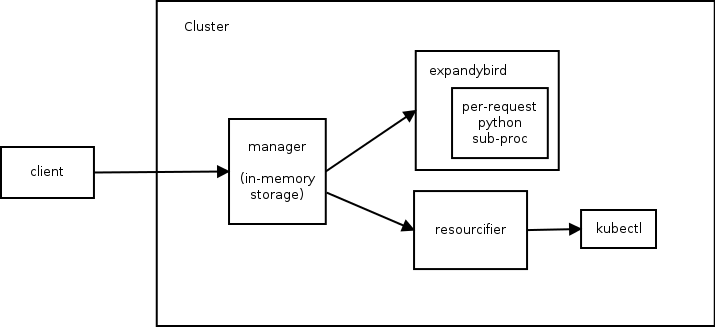

The architecture of Helm 1 consists of three components. The following diagram illustrates the relationship between them:

To understand the features of the first version of Helm, I’ll give you a help on the

Now back to the original text about the history of Helm ...

A few months later, we joined forces with the Kubernetes Deployment Manager team from Google and started working on Helm 2. The goal was to keep Helm easy to use by adding the following to it:

To achieve these goals, a second component has been added to the Helm ecosystem. It was Tiller, located inside the cluster, which provided for the installation and management of the Helm charts.

Note Perev .: Thus, in the second version of Helm, the only component left in the cluster is responsible for the installation life cycle ( release ), and the preparation of configurations is transferred to the Helm client.

If the cluster reboot using the first version of Helm resulted in a complete loss of service data (because they were stored in RAM), then in Helm 2 all data is stored in

Since the release of Helm 2 in 2016, the Kubernetes project has experienced explosive growth and the emergence of significant new opportunities. Role-based access control (RBAC) has been added. Presented many new types of resources. Inventory third party resources (Custom Resource Definitions, CRD). And most importantly, the best practices have appeared. Passing through all these changes, Helm continued to serve the needs of Kubernetes users. But it became obvious to us that the time had come to make major changes to it so that the needs of this developing ecosystem would continue to be met.

So we came to Helm 3. Next, I will talk about some of the innovations that appear on the roadmap of the project.

In Helm 2, we presented the templates. Early in the development of Helm 2, we supported the Go, Jinja templates, clean Python code, and we even had a prototype of support for ksonnet. But the presence of multiple engines for templates has created more problems than it has solved. Therefore, we have come to choose one.

Go templates had four advantages:

Although we saved the Helm interface to support other template engines, the Go templates have become our default standard. And the next few years of experience showed how engineers from many companies created thousands of charts using Go templates.

And we found out about their disappointments:

The most important thing is that using the template language, we essentially “cut off” Kubernetes objects to their string representation. (In other words, template developers had to manage Kubernetes resources as text documents in YAML format.)

Again and again we heard from users a request for the ability to inspect and modify Kubernetes resources as objects, not strings. At the same time, they were adamant that, whatever the path of realization we choose for this, it should be easy to learn and well maintained in the ecosystem.

After months of research, we decided to provide a built-in scripting language that can be packed into a sandbox and customized. Among the 20 leading languages there was only one candidate who met the requirements: Lua .

In 1993, a group of Brazilian IT engineers created a lightweight scripting language to integrate into their tools. Lua has a simple syntax, it is widely supported and has long been featured in the list of the top 20 languages . It is supported by IDE and text editors, there are many manuals and teaching books. We would like to develop our solution on such an already existing ecosystem.

Our work on Helm Lua is still at the concept proof stage, and we expect a syntax that is both familiar and flexible. Comparing the old and new approaches, you can see where we are going.

Here is the example of the Alpine hearth template in Helm 2:

In this simple template, you can immediately see all the built-in template directives, such as

And here is the definition of the same presentation in the preliminary version of the Lua code:

It is not necessary to examine each line of this example in order to understand what is happening. It is immediately evident that in the code it is defined under. But instead of using YAML strings with embedded template directives, we define it as an object in Lua.

Since we are working directly with objects (instead of manipulating a large glob of text), we can take full advantage of scripting. The possibilities of creating shared libraries that appear here look really attractive. And we hope that by submitting specialized libraries (or by allowing the community to create them), we can reduce the code above to something like this:

This example uses the ability to work with the definition of a resource as an object that is easy to set properties, while maintaining the brevity and readability of the code.

Although templates are not so wonderful for all tasks, they still have certain advantages. Go templates are a stable technology with an established user base and many existing charts. Many chart developers claim they like writing templates. Therefore, we are not going to remove template support.

Instead, we want to allow both templates and Lua to be used simultaneously. Lua scripts will have access to the Helm templates both before and after rendering, which will allow advanced chart developers to perform complex transformations on existing charts, while retaining the simple ability to create Helm charts with templates.

We are very excited about supporting Lua scripts, but at the same time getting rid of a significant part of the Helm architecture ...

During the development of Helm 2, we introduced Tiller as a component of integration with the Deployment Manager. Tiller played an important role for teams working on the same cluster: he made it possible to interact with the same set of releases for many different administrators.

However, Tiller worked as a giant sudo server, issuing a wide range of rights to everyone who has access to Tiller. And our default installation scheme was permissive configuration. Therefore, DevOps and SRE engineers had to learn additional steps to install Tiller in multi-tenant category clusters.

Moreover, with the emergence of CRD, we could no longer reliably rely on Tiller to maintain state or function as a central hub for information on the Helm release. We could only store this information in the form of separate records in Kubernetes.

The main goal of Tiller can be achieved without Tiller itself. Therefore, one of the first decisions taken at the planning stage of Helm 3 was a complete rejection of Tiller.

Without Tiller, the Helm security model is radically simplified. User authentication is delegated to Kubernetes. And authorization too. Helm rights are defined as Kubernetes rights (via RBAC), and cluster administrators can restrict Helm rights at any level of detail required.

In the absence of Tiller, to maintain the state of various releases within the cluster, we need a new way for all customers to interact (release management).

For this, we have submitted two new entries:

With these features, Helm user teams will be able to track Helm installation records in a cluster without the need for Tiller.

In this article I tried to talk about some of the major changes in Helm 3. However, this list is not at all complete. The Helm 3 plan includes other changes, such as improvements in the chart format, improvements in performance for the chart repositories, and a new event system that can be used by chart developers. We are also making efforts to define what Eric Raymond called archeology code , clearing the code base and updating components that have lost their relevance in the last three years.

Note trans. : Paradox, but the package manager Helm 2, if

With the addition of Helm to CNCF, we are inspired not only by Helm 3, but also by the Chart Museum , the wonderful utility Chart Testing , the official chart repository and other projects sponsored by Helm at CNCF. We believe that good package management for Kubernetes is just as important for a cloud-based cloud ecosystem as good package managers for Linux are.

Read also in our blog:

In June, Helm was promoted from a top-level Kubernetes project to a Cloud Native Computing Foundation (CNCF) project. CNCF is becoming the parent organization for best-of-breed open source cloud native tools, so it is a great honor for Helm to be part of such a foundation. And our first significant project under the auspices of CNCF is truly ambitious: we are building Helm 3.

A brief history of Helm

Helm began as an open source project at Deis. It was modeled after Homebrew (a package manager for macOS; translator's note), and the goal of Helm 1 was to give users a lightweight way to quickly install their first workloads on Kubernetes. Helm was officially announced at the first KubeCon in San Francisco in 2015.

Translator's note: From its first version, which was called dm (Deployment Manager), YAML syntax was chosen for describing Kubernetes resources, and Jinja templates and Python scripts were supported for writing configurations.

A simple web application template could look like this:

    resources:
    - name: frontend
      type: github.com/kubernetes/application-dm-templates/common/replicatedservice:v1
      properties:
        service_port: 80
        container_port: 80
        external_service: true
        replicas: 3
        image: gcr.io/google_containers/example-guestbook-php-redis:v3
    - name: redis
      type: github.com/kubernetes/application-dm-templates/storage/redis:v1
      properties: null

When describing the components of the application being rolled out, you specify the name of the template to use and the parameters that template needs. In the example above, the frontend and redis resources use templates from the official repository.

Already in this version, you can use resources from a shared knowledge base, create your own template repositories, and build complex applications using template parameters and nesting.

The architecture of Helm 1 consists of three components. The relationship between them is as follows:

- The manager acts as a web server (clients communicate with it via a REST API), manages deployments in the Kubernetes cluster, and serves as the data store.

- The expandybird component flattens user configurations, i.e. applies Jinja templates and runs Python scripts.

- After receiving the flattened configuration, the resourcifier makes the necessary calls to kubectl and returns the status and any error messages to the manager.
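The flow through these three components can be sketched roughly as follows. This is a minimal Python illustration, not the actual dm code: the function names `expand`, `resourcify` and `manager_deploy`, the in-memory `TEMPLATES` registry, and the shape of the flattened resources are all assumptions made for the example, and the kubectl call is replaced by building the command line that would be run.

```python
# Hypothetical sketch of the dm pipeline: manager -> expandybird -> resourcifier.
# All names and data shapes here are illustrative assumptions.

def replicated_service(name, props):
    """Toy 'template': expands one high-level resource into flat objects."""
    resources = [{
        "kind": "ReplicationController",
        "name": name + "-rc",
        "replicas": props.get("replicas", 1),
        "image": props["image"],
    }]
    if props.get("external_service"):
        resources.append({
            "kind": "Service",
            "name": name + "-service",
            "port": props.get("service_port", 80),
        })
    return resources

# Stand-in for a template registry: template type -> expansion function.
TEMPLATES = {"replicatedservice:v1": replicated_service}

def expand(config):
    """expandybird role: flatten a configuration by applying templates."""
    flat = []
    for res in config["resources"]:
        template = TEMPLATES[res["type"]]
        flat.extend(template(res["name"], res.get("properties") or {}))
    return flat

def resourcify(flat):
    """resourcifier role: turn flat resources into kubectl invocations.

    The commands are only built, not executed, so the sketch has no side effects.
    """
    return [["kubectl", "create", "-f", r["name"] + ".yaml"] for r in flat]

def manager_deploy(config, store):
    """manager role: drive the other two and keep deployment state in memory."""
    flat = expand(config)
    commands = resourcify(flat)
    store[config["name"]] = {"resources": flat, "commands": commands}
    return store[config["name"]]

store = {}
deployment = manager_deploy({
    "name": "guestbook",
    "resources": [{
        "name": "frontend",
        "type": "replicatedservice:v1",
        "properties": {
            "replicas": 3,
            "external_service": True,
            "image": "gcr.io/google_containers/example-guestbook-php-redis:v3",
        },
    }],
}, store)
```

Because `store` is a plain dictionary, its contents disappear when the process exits, which is the kind of in-memory state that a restart would wipe out.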

To understand the features of the first version of Helm, here is the help output of the dm command:

    Usage: ./dm [<flags>] <command> [(<template-name> | <deployment-name> | (<configuration> [<import1>...<importN>]))]

    Commands:
      expand              Expands the supplied configuration(s)
      deploy              Deploys the named template or the supplied configuration(s)
      list                Lists the deployments in the cluster
      get                 Retrieves the supplied deployment
      manifest            Lists manifests for deployment or retrieves the supplied manifest in the form (deployment[/manifest])
      delete              Deletes the supplied deployment
      update              Updates a deployment using the supplied configuration(s)
      deployed-types      Lists the types deployed in the cluster
      deployed-instances  Lists the instances of the named type deployed in the cluster
      templates           Lists the templates in a given template registry (specified with --registry)
      registries          Lists the registries available
      describe            Describes the named template in a given template registry
      getcredential       Gets the named credential used by a registry
      setcredential       Sets a credential used by a registry
      createregistry      Creates a registry that holds charts

    Flags:
      -apitoken string
            Github api token that overrides GITHUB_API_TOKEN environment variable
      -binary string
            Path to template expansion binary (default "../expandybird/expansion/expansion.py")
      -httptest.serve string
            if non-empty, httptest.NewServer serves on this address and blocks
      -name string
            Name of deployment, used for deploy and update commands (defaults to template name)
      -password string
            Github password that overrides GITHUB_PASSWORD environment variable
      -properties string
            Properties to use when deploying a template (eg, --properties k1=v1,k2=v2)
      -regex string
            Regular expression to filter the templates listed in a template registry
      -registry string
            Registry name (default "application-dm-templates")
      -registryfile string
            File containing registry specification
      -service string
            URL for deployment manager (default "http://localhost:8001/api/v1/proxy/namespaces/dm/services/manager-service:manager")
      -serviceaccount string
            Service account file containing JWT token
      -stdin
            Reads a configuration from the standard input
      -timeout int
            Time in seconds to wait for response (default 20)
      -username string
            Github user name that overrides GITHUB_USERNAME environment variable

    --stdin requires a file name and either the file contents or a tar archive containing the named file.
    a tar archive may include any additional files referenced directly or indirectly by the named file.

Now back to the original text about the history of Helm...

A few months later, we joined forces with the Kubernetes Deployment Manager team from Google and started working on Helm 2. The goal was to keep Helm easy to use while adding the following:

- chart templates for customization (a "chart" is the Helm ecosystem's analogue of a package; translator's note);

- management within the cluster for teams;

- full repository of charts;

- stable and signed package format;

- strong commitment to semantic versioning and maintaining backward compatibility from version to version.

To achieve these goals, a second component was added to the Helm ecosystem: Tiller, which runs inside the cluster and handles the installation and management of Helm charts.

Translator's note: Thus, in the second version of Helm only one component remains in the cluster. It is responsible for the installation life cycle (the release), while the preparation of configurations is handed over to the Helm client.

Whereas restarting the cluster with the first version of Helm led to a complete loss of service data (because it was stored in RAM), Helm 2 stores all data in ConfigMaps, i.e. in resources inside Kubernetes. Another important step was the move from a synchronous API (where every request was blocking) to asynchronous gRPC.

Since the release of Helm 2 in 2016, the Kubernetes project has seen explosive growth and gained significant new capabilities. Role-based access control (RBAC) was added. Many new resource types were introduced. Third-party resources were invented (Custom Resource Definitions, CRDs). And most importantly, a set of best practices emerged. Through all these changes, Helm continued to serve the needs of Kubernetes users. But it became obvious to us that the time had come to make major changes so that it would keep meeting the needs of this evolving ecosystem.

So we came to Helm 3. Next, I will talk about some of the innovations that appear on the roadmap of the project.

Welcome, Lua

In Helm 2, we introduced templates. Early in the development of Helm 2, we supported Go templates, Jinja, pure Python code, and we even had a prototype of ksonnet support. But having multiple template engines created more problems than it solved, so we decided to pick one.

Go templates had four advantages:

- the library is built into Go;

- templates are executed in a tightly sandboxed environment;

- we could inject arbitrary functions and objects into the engine;

- they worked well with YAML.

Although we kept an interface in Helm for supporting other template engines, Go templates became our default. Over the next few years, engineers from many companies created thousands of charts using Go templates.

And we learned about their frustrations:

- The syntax is difficult to read and poorly documented.

- Language quirks, such as immutable variables, confusing data types, and restrictive scoping rules, turned simple things into complex ones.

- The inability to define functions within templates has further complicated the creation of reusable libraries.

Most importantly, by using a template language we essentially reduced Kubernetes objects to their string representation. (In other words, template developers had to manage Kubernetes resources as text documents in YAML format.)
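To make the difference concrete, here is a small Python sketch (Python is used only for illustration; Helm itself uses Go templates). It contrasts changing a value in a rendered text manifest with changing the same value on an object. The sample manifest, the helper names `set_policy_in_text` and `set_policy_in_object`, and the use of JSON instead of YAML (to stay within the standard library) are assumptions of the example.

```python
import json
import re

# A rendered manifest as a chart template would produce it: just text.
rendered = '{"kind": "Pod", "spec": {"restartPolicy": "Never"}}'

def set_policy_in_text(manifest_text, policy):
    """String approach: patch the text with a regular expression.

    This works only while the text keeps exactly the expected shape.
    """
    return re.sub(r'"restartPolicy": "[^"]*"',
                  '"restartPolicy": "%s"' % policy, manifest_text)

def set_policy_in_object(manifest, policy):
    """Object approach: set the field directly, creating 'spec' if needed."""
    manifest.setdefault("spec", {})["restartPolicy"] = policy
    return manifest

patched_text = set_policy_in_text(rendered, "Always")
patched_obj = set_policy_in_object(json.loads(rendered), "Always")

# The text patch silently does nothing when the field is absent...
no_field = '{"kind": "Pod", "spec": {}}'
text_result = set_policy_in_text(no_field, "Always")
# ...while the object version still produces a correct manifest.
obj_result = set_policy_in_object(json.loads(no_field), "Always")
```

Both approaches agree on the simple case, but only the object version behaves predictably once the input deviates from the expected text layout.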

Work on objects, not pieces of YAML

Again and again, users asked us for the ability to inspect and modify Kubernetes resources as objects, not strings. At the same time, they were adamant that whatever implementation we chose had to be easy to learn and well supported in the ecosystem.

After months of research, we decided to provide an embedded scripting language that can be sandboxed and customized. Among the top 20 languages, only one candidate met the requirements: Lua.

In 1993, a group of Brazilian IT engineers created a lightweight scripting language for embedding in their tools. Lua has a simple syntax, is widely supported, and has long ranked among the top 20 languages. IDEs and text editors support it, and there are plenty of tutorials and books. We would like to build our solution on such an existing ecosystem.

Our work on Helm Lua is still at the proof-of-concept stage, and we expect a syntax that is both familiar and flexible. Comparing the old and new approaches shows where we are heading.

Here is an example of the Alpine pod template in Helm 2:

    apiVersion: v1
    kind: Pod
    metadata:
      name: {{ template "alpine.fullname" . }}
      labels:
        heritage: {{ .Release.Service }}
        release: {{ .Release.Name }}
        chart: {{ .Chart.Name }}-{{ .Chart.Version }}
        app: {{ template "alpine.name" . }}
    spec:
      restartPolicy: {{ .Values.restartPolicy }}
      containers:
      - name: waiter
        image: "{{ .Values.image.repository }}:{{ .Values.image.tag }}"
        imagePullPolicy: {{ .Values.image.pullPolicy }}
        command: ["/bin/sleep", "9000"]

In this simple template, you can immediately see all the built-in template directives, such as {{ .Chart.Name }}.

And here is a definition of the same pod in the preliminary version of the Lua code:

    function create_alpine_pod(_)
      local pod = {
        apiVersion = "v1",
        kind = "Pod",
        metadata = {
          name = alpine_fullname(_),
          labels = {
            heritage = _.Release.Service or "helm",
            release = _.Release.Name,
            chart = _.Chart.Name .. "-" .. _.Chart.Version,
            app = alpine_name(_)
          }
        },
        spec = {
          restartPolicy = _.Values.restartPolicy,
          containers = {
            {
              name = "waiter",
              image = _.Values.image.repository .. ":" .. _.Values.image.tag,
              imagePullPolicy = _.Values.image.pullPolicy,
              command = { "/bin/sleep", "9000" }
            }
          }
        }
      }

      _.resources.add(pod)
    end

You don't need to examine every line of this example to understand what is happening. It is immediately clear that the code defines a pod. But instead of using YAML strings with embedded template directives, we define it as an object in Lua.

Let's shorten this code

Since we are working directly with objects (instead of manipulating a large blob of text), we can take full advantage of scripting. The opportunities this opens up for shared libraries look really attractive. And we hope that by providing specialized libraries (or by letting the community create them), we can reduce the code above to something like this:

    local pods = require("mylib.pods");

    function create_alpine_pod(_)
      local myPod = pods.new("alpine:3.7", _)
      myPod.spec.restartPolicy = "Always"
      -- set any other properties
      _.Manifests.add(myPod)
    end

This example takes advantage of working with a resource definition as an object whose properties are easy to set, while keeping the code concise and readable.

Templates ... Lua ... Why not all together?

Although templates are not ideal for every task, they still have certain advantages. Go templates are a stable technology with an established user base and many existing charts. Many chart developers say they like writing templates. So we are not going to remove template support.

Instead, we want to allow both templates and Lua to be used simultaneously. Lua scripts will have access to the Helm templates both before and after rendering, which will allow advanced chart developers to perform complex transformations on existing charts, while retaining the simple ability to create Helm charts with templates.

We are very excited about supporting Lua scripts, but at the same time we are getting rid of a significant part of the Helm architecture...

Saying goodbye to Tiller

During the development of Helm 2, we introduced Tiller as part of the integration with Deployment Manager. Tiller played an important role for teams working on the same cluster: it made it possible for many different administrators to interact with the same set of releases.

However, Tiller acted like a giant sudo server, granting a wide range of permissions to anyone with access to Tiller. And our default installation was a permissive configuration. As a result, DevOps and SRE engineers had to learn additional steps to install Tiller in multi-tenant clusters.

Moreover, with the emergence of CRDs, we could no longer reliably count on Tiller to maintain state or to act as a central hub for Helm release information. We could only store this information as separate records in Kubernetes.

The main goal of Tiller can be achieved without Tiller itself. Therefore, one of the first decisions made at the planning stage of Helm 3 was to remove Tiller completely.

Security enhancement

Without Tiller, the Helm security model is radically simplified. User authentication is delegated to Kubernetes. And authorization too. Helm rights are defined as Kubernetes rights (via RBAC), and cluster administrators can restrict Helm rights at any level of detail required.
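As a rough illustration of what "Helm rights are Kubernetes rights" means, the sketch below models an RBAC-style check in Python. It is a deliberately simplified model, not the Kubernetes authorizer: real RBAC rules also cover API groups, resource names, and cluster-wide scope. The `Rule` type, the `is_allowed` and `can_install` helpers, and the sample user and namespaces are assumptions made for the example.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Rule:
    """Simplified RBAC rule: which verbs are allowed on which resources."""
    namespace: str
    resources: frozenset
    verbs: frozenset

def is_allowed(rules, namespace, resource, verb):
    """RBAC is additive: a request is allowed if any rule grants it."""
    return any(
        r.namespace == namespace and resource in r.resources and verb in r.verbs
        for r in rules
    )

# Hypothetical bindings: user -> rules granted through roles.
bindings = {
    "alice": [Rule("staging",
                   frozenset({"pods", "services"}),
                   frozenset({"get", "create", "update", "delete"}))],
}

def can_install(user, namespace, kinds):
    """A client acting as the user may install a chart only if that user may
    create every resource kind the chart contains in the target namespace."""
    rules = bindings.get(user, [])
    return all(is_allowed(rules, namespace, kind, "create") for kind in kinds)

staging_ok = can_install("alice", "staging", ["pods", "services"])
prod_ok = can_install("alice", "production", ["pods", "services"])
```

The point of the sketch is that no separate permission system is involved: the same rules that limit the user's direct access to the cluster also limit what the client can do on the user's behalf.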

Releases, ReleaseVersions and State Storage

Without Tiller to maintain the state of the various releases within the cluster, all clients need a new way to cooperate on release management.

To do this, we are introducing two new record types:

- Release: a specific installation of a specific chart. If we run helm install my-wordpress stable/wordpress, a release named my-wordpress will be created and maintained for the lifetime of this WordPress installation.

- ReleaseVersion: each time a Helm chart is upgraded, we need to account for what changed and whether the change succeeded. A ReleaseVersion is tied to a release and stores only the records of upgrades, rollbacks, and deletions. When we run helm upgrade my-wordpress stable/wordpress, the original Release object stays the same, but a child ReleaseVersion object appears with information about the upgrade operation.

Releases and ReleaseVersions will be stored in the same namespaces as the chart's objects.

With these features, teams of Helm users will be able to track Helm installation records in a cluster without the need for Tiller.
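The relationship between the two record types can be sketched as a small data model. The Python below only illustrates the description above: the field names (`revision`, `action`, `succeeded`) and the `record` helper are hypothetical and are not taken from the Helm 3 design.

```python
from dataclasses import dataclass, field
from typing import List

@dataclass
class ReleaseVersion:
    """One record per later operation on a release (hypothetical fields)."""
    revision: int
    action: str        # "upgrade", "rollback" or "delete"
    succeeded: bool

@dataclass
class Release:
    """A specific installation of a specific chart in a namespace."""
    name: str
    chart: str
    namespace: str
    versions: List[ReleaseVersion] = field(default_factory=list)

    def record(self, action, succeeded=True):
        """Append a ReleaseVersion; the Release object itself stays the same."""
        version = ReleaseVersion(len(self.versions) + 1, action, succeeded)
        self.versions.append(version)
        return version

# Roughly what `helm install my-wordpress stable/wordpress` would create...
release = Release("my-wordpress", "stable/wordpress", "default")
# ...and what `helm upgrade my-wordpress stable/wordpress` would add to it.
release.record("upgrade")
```

Keeping the history in child records means the release keeps a stable identity across upgrades and rollbacks, and each record lives in the same namespace as the release it belongs to.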

But wait, that's not all!

In this article I have tried to cover some of the major changes in Helm 3. However, this list is by no means complete. The Helm 3 plan includes other changes, such as improvements to the chart format, performance improvements for chart repositories, and a new event system that chart developers can use. We are also making an effort to do what Eric Raymond calls code archeology: cleaning up the code base and updating components that have languished over the last three years.

Translator's note: Paradoxically, with the Helm 2 package manager a successful install or upgrade, i.e. a release in the success state, does not guarantee that the application's resources were rolled out successfully (for example, that there are no errors such as ImagePullError). Perhaps the new event model will make it possible to add extra hooks for resources and to control the rollout process better; we will find out soon.

With Helm joining CNCF, we are excited not only about Helm 3, but also about Chart Museum, the wonderful Chart Testing utility, the official chart repository, and other projects under the Helm umbrella at CNCF. We believe that good package management for Kubernetes is just as important for the cloud native ecosystem as good package managers are for Linux.

P.S. from the translator

Read also in our blog:

- “Practical acquaintance with the package manager for Kubernetes - Helm”;

- “Practice with dapp. Part 2. Deploying Docker images in Kubernetes with the help of Helm”;

- “Build and deploy applications in Kubernetes using dapp and GitLab CI”;

- “Best CI/CD practices with Kubernetes and GitLab” (review and video of the talk);

- “Our experience with Kubernetes in small projects” (video of the talk, which includes an introduction to how Kubernetes works).

Source: https://habr.com/ru/post/417079/
