Chemistry of the computer world

School science is often taught in a dry and uninteresting way. Children learn to memorize things mechanically to pass exams, and do not see how science connects to the world around them.

These words belong to Stephen Hawking, the great physicist who never gives up and believes in miracles. What matters here, though, is not the remark about education but the second part of the quotation, about the connection between science and the outside world. Science accompanies us every day. It is everywhere, whether we notice it or not, and we feel its influence regardless of our religion, place of residence or occupation. Damn, science existed even before the term was coined. Our entire Universe is full of processes described by various sciences. For the most part, pride of place still goes to physics, the science capable of calling chaos order and order chaos, and of explaining why things are this way and not otherwise. However, I would like to talk about another science that, like physics, is present in our lives and has an incredible influence on their course. I see no reason to keep up the intrigue for long: the title of this essay already makes it clear that the subject is chemistry. Not chemistry as a science in general, though, but the way it shows its power and beauty in the computer world.

Of course, for most of us, memories of school chemistry lessons bring not nostalgia but relief that the nightmare is finally over. Still, the value of this science is impossible to overstate. It was chemistry that made it possible to build faster and more powerful computers, increase the capacity of hard drives, and even bring the picture quality of our monitors to an unreal level.

The computer world is improving rapidly. One of the most noticeable aspects of this process is the growing power and shrinking size of the devices we use: microchips, for example, and therefore the silicon transistors inside them. This whole evolution of computers keeps running up against the inexorable laws of physics. Every increase in the number of transistors on a chip brings more power, and more headaches for the people who design it. This is where chemistry comes to the rescue.

Transistor chemistry

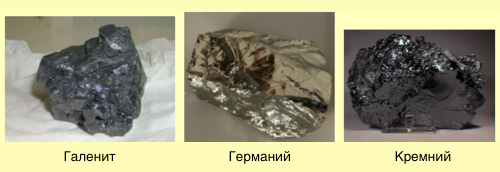

Transistors work because the semiconductors they are made of (silicon, germanium) have a very unusual and very useful property: they conduct electric current better than insulators (glass, for example), but not as well as conductors (aluminum, for example).

Scientists can control the conductivity of a semiconductor, raising or lowering it, by adding small amounts of impurities, most often boron or arsenic (a process called doping). By "diluting" silicon with other substances, they change its properties, so that a region of it can behave more like an insulator or more like a metal. This directly determines the ability of transistors to perform their function.

Silicon, the semiconductor used to manufacture transistors, is the second most abundant element in the Earth's crust after oxygen. It makes up 27.7% of the crust's mass, and silicon dioxide is the main constituent of sand.

Although the first transistor, created at Bell Labs in 1947, was made of germanium, there are good reasons why Silicon Valley is not called Germanium Valley.

Bell Labs

The most mundane reason is that germanium is scarce and expensive. A much more serious problem was the chemistry of its insulating form, germanium oxide. It dissolves in water, so during the polishing process needed to place several transistors on a single microchip it would simply "disappear". By the same logic, if you spilled a glass of water on a "germanium" laptop, you could just throw it away.

This is what pushed scientists toward silicon, which, in turn, has its own drawbacks. More on them a little later.

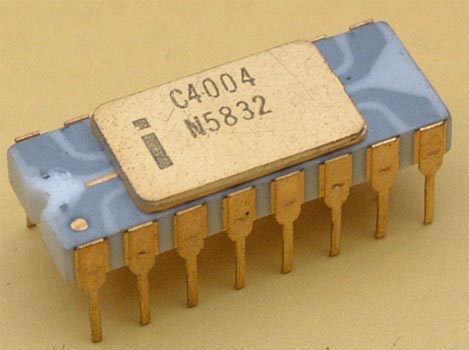

Intel 4004

A short excursion into history. In 1971 Intel released its first microprocessor, the Intel 4004, which contained 2,300 transistors. Today a single microprocessor contains several hundred million transistors, and the number keeps growing every year.

This directly confirms Moore's law, formulated by Intel co-founder Gordon Moore, which states that the number of transistors on a chip doubles roughly every two years. It was a very bold, but remarkably accurate prediction.

Meanwhile, microchips keep getting smaller, and their power grows precisely because the number of transistors increases. So far, chemistry has kept up with this "smaller size, more power" trend. Unfortunately, there is one "but": as the components of a microchip shrink, so does the area where the transistors' connecting wires join the silicon wafer. Roughly speaking, when the transistors and the chips themselves shrink, the components that wire everything together must shrink too. Without a way to shrink these components, the power and size of microchips would stay at their current level.
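Moore's law as stated above is an exponential: starting from N0 transistors in year t0, the count t years later is roughly N(t) = N0 * 2^((t - t0) / T), where the doubling period T is about two years. The Python sketch below projects that idealized curve from the Intel 4004's 2,300 transistors in 1971. The function name and the exact two-year period are illustrative assumptions, and real chips have followed the curve only approximately.

```python
def projected_transistors(year: int,
                          base_year: int = 1971,
                          base_count: int = 2_300,
                          doubling_period_years: float = 2.0) -> float:
    """Idealized Moore's-law curve: N(t) = N0 * 2 ** ((t - t0) / T).

    Defaults come from the text (Intel 4004, 1971, 2,300 transistors,
    doubling every two years); the function itself is only an illustration.
    """
    doublings = (year - base_year) / doubling_period_years
    return base_count * 2 ** doublings


for year in (1971, 1981, 1991, 2001):
    # Thousands separators make the exponential growth easy to see.
    print(year, f"{projected_transistors(year):,.0f}")
```

Ten years of doubling every two years multiplies the count by 2^5 = 32, so the sketch prints 73,600 for 1981 and 2,355,200 for 1991.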

Intel's solution to this problem was to change the metal: as chips get smaller, the resistance at the contacts grows, so when one conductor stops being effective, manufacturers switch to another. In the distant 1980s tungsten was used, then titanium in the early 1990s, later cobalt, and now nickel (all of them in the form of silicides, compounds of the metal with silicon). Each new metal improved microchips because it lowered the resistance at the junction points.

However, constantly switching from one metal to another gives chip manufacturers plenty of headaches, and every switch brings new difficulties. After the long run of tungsten (about five years, according to Intel), the equipment for depositing materials had to be replaced. Manufacturers also had to move from heating semiconductor wafers in special furnaces to heating them with gas-discharge lamps, because this produces a stronger bond between the new material and the silicon. The main task now is to develop a process that allows materials to be changed without major costs to the manufacturer, in either money or time.

Another big problem was the wiring that connects the transistors to each other and to the rest of the chip, or rather, the desire to switch it from aluminum to copper. Copper is a better conductor than aluminum, but its susceptibility to corrosion seemed to rule it out. Still, abandoning such a material would be foolish; it made more sense to solve the corrosion problem.

Titanium

And so, in the early 1990s, scientists concluded that a thin layer of titanium over copper could prevent corrosion. That problem was solved, but there was another one. Aluminum wiring could be applied to the microprocessor using standard lithographic methods, which cannot be said of copper. In addition, copper must not come into contact with silicon, because the interactions between the two materials can damage the transistors.

Combining materials is not the only challenge in shrinking microprocessors. The efficiency of transistor gates depends directly on a thin insulating layer of silicon dioxide. As transistors shrank, so did this layer, which is now only 3 to 4 atoms thick.

The downside of such a thin layer is current leakage: instead of two clean states, "on" and "off", we get "on" and "off with leakage". The smaller microprocessors become, the more power they need to operate normally.

In other words, switching a transistor off no longer stops the flow of current. A Pentium chip consumed about 30-40 watts, of which about 1 watt was lost. Modern microprocessors need about 100 watts to run normally, and about half of that is lost. These losses also produce a lot of heat, which is why 100-watt chips cannot be used in laptops: the ceiling for these devices is 30-40 watts.

So unless all of these problems are solved, Moore's law will become history, and the further evolution of microchips may stall for a very long time.

DNA instead of silicon

Some researchers are considering replacing silicon entirely with something better. Gallium arsenide is already in use and has some advantages over silicon. First, chips made from it are much faster. Second, they are highly sensitive to radio waves, which makes them ideal for mobile phones and wireless network cards. However, their high power consumption has limited gallium arsenide transistors to communication chips.

Research on carbon nanotubes should not be forgotten either. Transistors built from these hollow carbon cylinders would require significantly less energy than silicon ones.

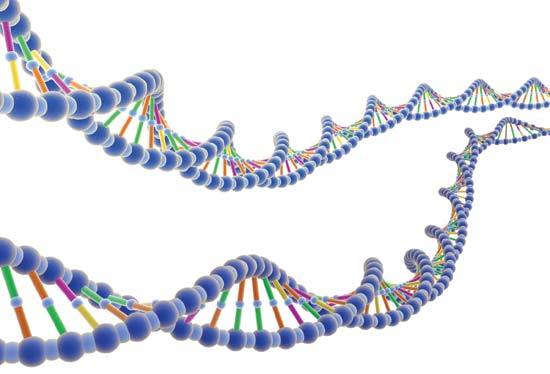

And if you shift your thinking slightly from science toward science fiction, why not use DNA? The option looks almost unreal, but once you look at the possible benefits, the game seems worth the candle. Specifically:

- DNA strands already encode information, and scientists can already modify it by copying, deleting or moving particular segments of the strand;

- storing data in DNA could, in theory, significantly increase processing speed and reduce energy consumption;

- the material itself is readily available and cheap, and, like nanotubes, incredibly small.
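To make the first point concrete: DNA is written in a four-letter alphabet (A, C, G, T), so each nucleotide can in principle carry two bits. The Python sketch below uses a hypothetical mapping (00 -> A, 01 -> C, 10 -> G, 11 -> T) to turn bytes into a strand and back. It is a toy illustration, not a standard; real DNA storage schemes are considerably more elaborate (for example, they add error correction).

```python
# Hypothetical mapping: two bits per nucleotide (an illustration, not a standard).
BITS_TO_BASE = {"00": "A", "01": "C", "10": "G", "11": "T"}
BASE_TO_BITS = {base: bits for bits, base in BITS_TO_BASE.items()}


def encode(data: bytes) -> str:
    """Turn bytes into a DNA-like string, four bases per byte."""
    bits = "".join(f"{byte:08b}" for byte in data)
    return "".join(BITS_TO_BASE[bits[i:i + 2]] for i in range(0, len(bits), 2))


def decode(strand: str) -> bytes:
    """Reverse of encode(): read two bits back from every base."""
    bits = "".join(BASE_TO_BITS[base] for base in strand)
    return bytes(int(bits[i:i + 8], 2) for i in range(0, len(bits), 8))


strand = encode(b"Hi")
print(strand)          # CAGACGGC
print(decode(strand))  # b'Hi'
```

"H" is 0x48 (01 00 10 00 -> C A G A) and "i" is 0x69 (01 10 10 01 -> C G G C), so two bytes become an eight-base strand.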

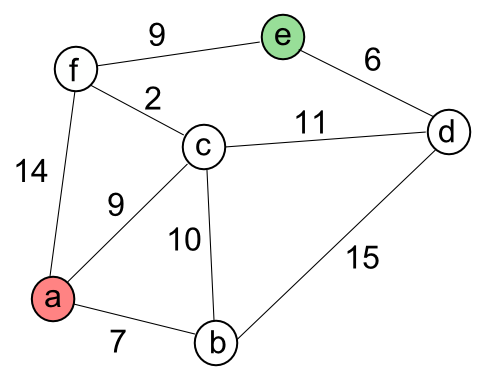

Schematic example of the traveling salesman problem

The idea of using DNA in computing is not new. Back in 1994, Leonard Max Adleman, a theoretical computer scientist at the University of Southern California, used DNA to solve a small instance of the Hamiltonian path problem, a close relative of the traveling salesman problem (finding a route through several cities that visits each one exactly once). It took several days, so super-fast DNA computers should not be expected any time soon.
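Adleman's experiment was essentially generate-and-filter: DNA strands representing huge numbers of candidate routes through a small directed graph (seven vertices, with the goal of visiting every vertex exactly once) were produced at the same time, and the strands that broke the rules were discarded chemically. The Python sketch below performs the same filtering one candidate at a time on a hypothetical five-city graph. It is not Adleman's instance; it only shows why the search explodes (the number of orderings grows factorially), which is what made the massive parallelism of DNA attractive.

```python
from itertools import permutations


def hamiltonian_paths(edges, start, end):
    """Brute force: try every ordering of the vertices, keep the valid routes."""
    vertices = {v for edge in edges for v in edge}
    middle = vertices - {start, end}
    found = []
    for order in permutations(sorted(middle)):
        path = (start, *order, end)
        # A candidate survives only if every consecutive hop is a real edge.
        if all((a, b) in edges for a, b in zip(path, path[1:])):
            found.append(path)
    return found


# Hypothetical directed graph of five "cities" (one-way roads).
EDGES = {("A", "B"), ("B", "C"), ("C", "D"), ("D", "E"),
         ("A", "C"), ("C", "B"), ("B", "D"), ("B", "E")}
print(hamiltonian_paths(EDGES, "A", "E"))
# [('A', 'B', 'C', 'D', 'E'), ('A', 'C', 'B', 'D', 'E')]
```

With three intermediate cities only 3! = 6 orderings are checked, but each added city multiplies the work, which is why a sequential computer struggles and a test tube full of strands looked appealing.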

HDD chemistry

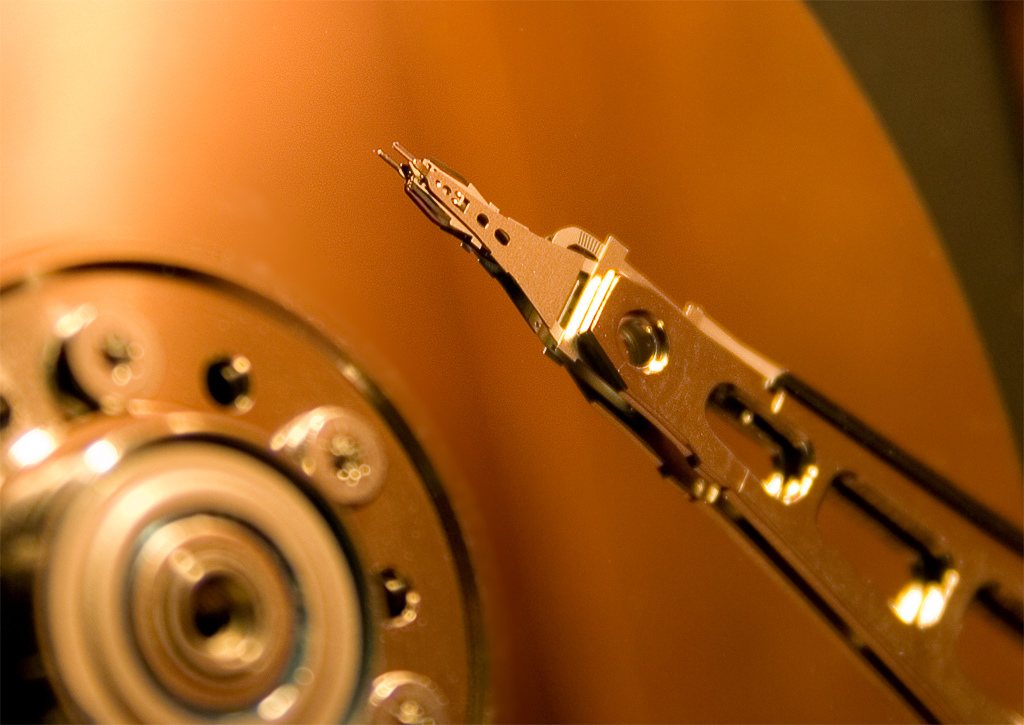

Hard disk drives (HDDs) are among the most common means of storing data, especially in laptops and personal computers. An HDD records information on rigid platters made of aluminum or glass and coated with a layer of ferromagnetic material.

Data is written to an HDD by magnetizing particular regions of the platter. More precisely, the rigid platter spins at high speed, and the write head, hovering about 10 nm above it, produces an alternating magnetic field that changes the magnetization vector of the domain that happens to be right under the head. Roughly speaking, each domain is magnetized in one of two directions (north-south or south-north), and the sequence of these directions encodes the logical zeros and ones that make up the data.
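In the simplified picture described above, each domain under the head is magnetized in one of two directions, and reading back the sequence of directions yields the bits. The Python sketch below models a track as a list of such domains. The names and the direction-to-bit mapping are hypothetical, and real drives encode data in a more elaborate way and add error correction.

```python
from enum import Enum


class Magnetization(Enum):
    """Two possible directions of a domain's magnetization vector."""
    NORTH_SOUTH = 0  # hypothetically read as bit 0
    SOUTH_NORTH = 1  # hypothetically read as bit 1


def write_track(bits: list[int]) -> list[Magnetization]:
    """Write head: magnetize one domain per bit in the matching direction."""
    return [Magnetization(bit) for bit in bits]


def read_track(track: list[Magnetization]) -> list[int]:
    """Read head: recover each bit from its domain's direction."""
    return [domain.value for domain in track]


track = write_track([1, 0, 1, 1, 0])
track[1] = Magnetization.SOUTH_NORTH  # rewriting = flipping a domain's direction
print(read_track(track))  # [1, 1, 1, 1, 0]
```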

So there are several components that can be improved with new materials: the rigid platters and the read/write head. Attempts to shrink the physical size of the platters while increasing the amount of stored information run into new problems, which chemistry can help solve.

At the moment, the magnetic layer of HDD platters is made of an alloy of cobalt, chromium and platinum. The first two are needed to create magnetism and make up about 50-60% of the "mixture". Platinum, in turn, prevents the magnetization vector of a domain from changing uncontrollably.

As the platter's magnetic layer is made thinner, a new problem arises. Today the magnetic particles are about 10 nanometers in size, and at that size they begin to vibrate when heated. Platinum can still compensate for this effect, but its capabilities are not unlimited.

At some point, as the size keeps shrinking, platinum will no longer be able to prevent uncontrolled changes in the domains' magnetization. This limit has not been reached yet, but researchers have already set themselves an ambitious goal: to shrink the particles from 10 nanometers to 5. This can be achieved by changing the temperature at which the layers are formed, or by placing a particular material under the magnetic layer. Nickel, for example, makes it possible to split the platter into a larger number of domains.

An even more serious problem is that on typical disks the magnetic particles do not form identical regions: one region may be larger than another. Simply put, changing the polarity of a magnetic region is harder because, with such a non-uniform distribution, we do not know exactly where the region is.

The chemistry of magnetic disk heads has also evolved.

During operation, the heads of a hard disk do not touch the platter surface, because they ride on a thin cushion of air that forms at the surface as the platter spins rapidly (usually at 5400 or 7200 rpm). In modern disks the gap between the head and the platter is about 10 nm. It has to be this small so that the head can transfer its alternating magnetic field to the platter.

Initially, the heads were made of an alloy of nickel (80%) and iron (20%). Later the ratio was changed to 45%/55%. That was still not enough, so manufacturers switched to an alloy of cobalt and iron.

Another problem is physical damage to the platter by the read/write head. As mentioned, the platter spins very fast, which creates vibration, and the head sits a critically small distance above it. Sometimes the head can strike the platter surface and damage it, making the data there unreadable.

The solution was a thin but hard coating of diamond-like carbon on both the disk and the head, plus a lubricant layer one molecule thick between them. If the head hits the disk, the surfaces slide over the lubricant and no damage is done.

However, collisions happen often, and the lubricant layer cannot be made any thicker. How can it be made more durable? The answer was perfluoropolyether. This substance has a remarkable property: it heals itself. Thanks to its consistency, any scratch in the lubricating layer fills back in on its own, much like the groove left when you draw a knife across the surface of honey.

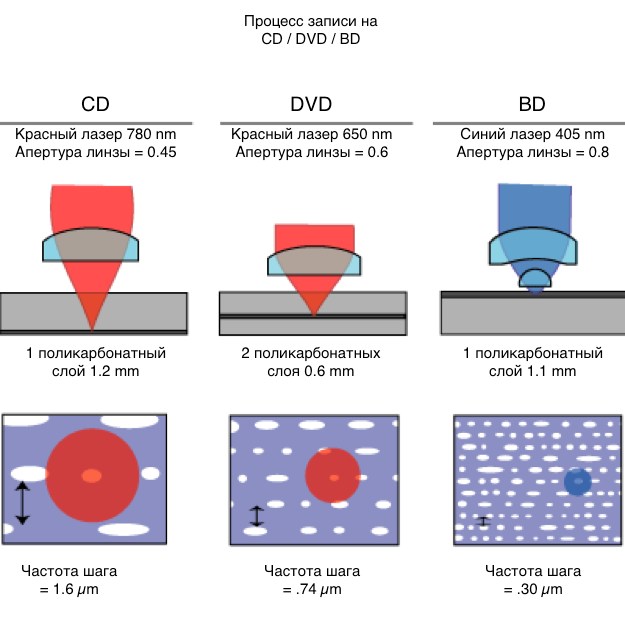

CD / DVD

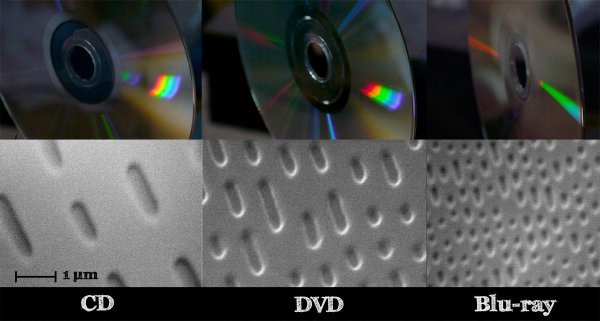

Optical discs rely on quite different chemistry and physics. Their similarity to hard disks is limited to the fact that they rotate and are read by a head; no magnetic materials are used in them at all.

Among the various CDs and DVDs, the most chemically interesting are the rewritable ones. Such discs use a special phase-change coating. The oldest and most common materials for this alloy are germanium, antimony and tellurium.

The phase-change coating has an amazing property: its atoms can be switched between a chaotic (amorphous) state and an ordered (crystalline) one, like a scrambled versus a solved Rubik's cube. Chaotic regions look dull and ordered ones shine, which naturally suggests zeros and ones.

The drive reads and writes data with a laser that has three power levels. For reading, the laser runs at the lowest power and is focused on the phase-change layer, which may lie deep beneath the disc surface. An optical sensor detects whether the beam is reflected by dull or by shining atoms.

Writing is a bit more complicated. At high power, the laser heats spots of the layer until they melt, and as they cool quickly the atoms are frozen in their chaotic (dull) state. At medium power, the laser heats the spots without melting them, and the atoms line up in the ordered (shining) state. Once writing is finished, the laser returns to minimum power and reads the data on the disc.
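The three power levels amount to a tiny state machine. The Python sketch below models each spot of the phase-change layer as either amorphous (dull) or crystalline (shining). Treating dull as 0 and shining as 1 is a hypothetical simplification of the zeros-and-ones analogy above; real discs encode data in a more elaborate way, and all names here are illustrative.

```python
from enum import Enum


class Phase(Enum):
    AMORPHOUS = 0    # chaotic atoms: a dull spot (hypothetically bit 0)
    CRYSTALLINE = 1  # ordered atoms: a shining spot (hypothetically bit 1)


class Power(Enum):
    LOW = 1     # reading: too weak to change the layer
    MEDIUM = 2  # heats without melting: atoms line up
    HIGH = 3    # melts the spot, which then freezes in a chaotic state


def apply_laser(spot: Phase, power: Power) -> Phase:
    """Return the spot's phase after one laser pulse at the given power."""
    if power is Power.HIGH:
        return Phase.AMORPHOUS
    if power is Power.MEDIUM:
        return Phase.CRYSTALLINE
    return spot  # LOW power leaves the layer unchanged


def write_bits(track: list[Phase], bits: list[int]) -> list[Phase]:
    """Write each bit with HIGH power (dull, 0) or MEDIUM power (shining, 1)."""
    return [apply_laser(spot, Power.MEDIUM if bit else Power.HIGH)
            for spot, bit in zip(track, bits)]


def read_bits(track: list[Phase]) -> list[int]:
    """Read at LOW power: the sensor sees dull (0) or shining (1) spots."""
    return [apply_laser(spot, Power.LOW).value for spot in track]


track = [Phase.CRYSTALLINE] * 4          # hypothetical blank track
track = write_bits(track, [1, 0, 0, 1])
print(read_bits(track))                  # [1, 0, 0, 1]
track = write_bits(track, [0, 1, 1, 0])  # overwrite: the disc is rewritable
print(read_bits(track))                  # [0, 1, 1, 0]
```

Because the medium-power pulse restores the ordered state from either phase, any spot can be overwritten, which is exactly what makes these discs rewritable.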

Monitor chemistry

Modern LCDs let us use thinner, less power-hungry monitors that are easier on the eyes.

The first fully electronic television system, demonstrated by Philo Farnsworth in 1927 with the picture shown on a cathode-ray tube (CRT), was a revolutionary invention. But CRT displays were very energy-intensive and had a number of other shortcomings.

Philo Farnsworth

A CRT worked like this: phosphor dots covering the entire inner surface of the glass glowed when the electron beam continuously scanned across them. By lighting up particular dots, the beam formed an image, and when the whole dot matrix was redrawn many times a second, the illusion of motion appeared. Color monitors had phosphor dots of three colors: red, green and blue. Chemists found a range of compounds that emit specific colors. Properly blended, zinc sulfide with copper and aluminum gives green, and zinc sulfide with silver gives blue. Red requires europium, oxygen and yttrium (an element also found in moon rocks).

However, many of these compounds are harmful to the environment, and discarded old monitors release them into the groundwater.

Among other things, the electron beam itself requires a lot of power to operate.

An LCD places a layer of liquid crystals between two polarizing filters oriented at 90 degrees to each other.

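White light passes through the first filter, then through the liquid crystal layer and the second filter. With no voltage applied, the liquid crystals twist the light's polarization by 90 degrees, so it can pass through the second filter. When an electric field is applied, the liquid crystals change their structure, the polarization is no longer rotated, and the second filter blocks the light. To produce a color image, each pixel is covered with red, green and blue filters.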
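Why crossed filters plus a twisting layer work can be shown with Malus's law: an ideal polarizer passes the fraction cos²θ of already polarized light, where θ is the angle between the light's polarization and the filter. The Python sketch below treats the liquid crystal layer as an ideal polarization rotator (90 degrees of twist with no voltage, close to 0 with full voltage). The function name and these idealizations are assumptions; real panels lose noticeably more light.

```python
import math


def transmitted_fraction(twist_deg: float, analyzer_deg: float = 90.0) -> float:
    """Fraction of unpolarized backlight leaving an idealized twisted-nematic cell.

    Assumes ideal polarizers and a liquid crystal layer that simply rotates
    the polarization by `twist_deg` (about 90 with no voltage, about 0 with full voltage).
    """
    after_first_polarizer = 0.5  # an ideal polarizer passes half of unpolarized light
    angle = math.radians(analyzer_deg - twist_deg)
    return after_first_polarizer * math.cos(angle) ** 2  # Malus's law


for twist in (90, 45, 0):
    print(twist, round(transmitted_fraction(twist), 3))
```

A full 90-degree twist lets the maximum of 0.5 through (a bright pixel), no twist gives essentially zero (a dark pixel), and intermediate voltages produce shades in between.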

Since no electron beam is needed, such a monitor consumes much less energy, and the size limitations of CRTs disappear.

Source: https://habr.com/ru/post/404003/
