After 11,500 crashes, an AI-controlled quadcopter learned to fly indoors

If a fly in front of you keeps bumping into the window, do not jump to the conclusion that it is stupid. Perhaps it is a miniature robot with an AI system in self-learning mode.

The UAV crashed into the surrounding objects 11,500 times, flying randomly chosen trajectories.

How can an unmanned aerial vehicle be taught to follow a given route while dodging obstacles? Can it do without a digital 3D map for indoor navigation? Several technologies address this problem, including imitation learning, in which a “teacher” trains the drone to fly along different paths and corrects its actions when necessary. Gradually, the UAV learns the routes. But this approach is clearly limited by the set of input data: the teacher cannot accompany the drone forever.

In recent years, self-supervised machine learning systems, which learn without a teacher, have been developing rapidly. They have proved excellent at a number of tasks: navigation, grasping objects (in robotics), and “push/pull” tasks (intuitive physics). But can self-learning systems master a task as difficult as indoor navigation and overcome the limitations of imitation learning?

Previous studies have shown that such systems can indeed learn without a teacher in a simulator, and that the learned knowledge can be transferred to the real world. But in practice another question is more relevant: does self-learning work in the real world, in an arbitrary room, without a simulator or a pre-made map? After all, this is exactly the task each of us will face after buying a robot and bringing it home. The robot must explore its surroundings on its own and learn to orient itself in any house (it is better to remove all fragile objects from the rooms beforehand, and to hide yourself as well).

Researchers at Carnegie Mellon University (USA) took on the hardest version of the task by placing a quadcopter with a self-learning neural network in an environment that is very difficult to navigate: a building with many rooms and a lot of furniture. The authors point out that other studies tend to simplify the environment in order to avoid collisions. They, on the contrary, wanted to push the UAV into as many collisions and crashes as possible so that the robot could learn from that experience. They designed a self-learning system that takes into account this negative experience as well as the positive experience of successful flight along trajectories.

The AR Drone 2.0 quadcopter, controlled by a machine learning system, was tested in 20 rooms of a house and as a result learned to avoid collisions effectively in each of them. Training took 40 flight hours. The researchers say that the drone's parts are cheap and easy to replace, so the risk of a catastrophic crash could be disregarded.

All collisions were completely random. The UAV was placed at an arbitrary point in space and flew off in a random direction. After a crash it returned to the starting point and again flew in a random direction until it crashed somewhere else.
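The collection loop described above can be sketched in a few lines of Python. This is only a toy illustration, not the researchers' code: the room is assumed to be an empty 2D rectangle, the drone flies in a straight line, and the names `fly_until_crash` and `collect_trajectories`, the step size, and the room dimensions are all hypothetical.

```python
import math
import random


def fly_until_crash(start, heading, room, step=0.1, max_steps=10_000):
    """Move in a straight line from `start` until the next step would leave the room.

    `room` is a (width, depth) rectangle; hitting its boundary stands in for a crash.
    Returns the list of positions visited before the collision.
    """
    x, y = start
    width, depth = room
    path = [(x, y)]
    for _ in range(max_steps):
        x += step * math.cos(heading)
        y += step * math.sin(heading)
        if not (0.0 <= x <= width and 0.0 <= y <= depth):
            break  # the next position is outside the room: treat it as a crash
        path.append((x, y))
    return path


def collect_trajectories(n_flights, start, room, seed=0):
    """Repeat: pick a random heading, fly until a crash, return to `start`."""
    rng = random.Random(seed)
    trajectories = []
    for _ in range(n_flights):
        heading = rng.uniform(0.0, 2.0 * math.pi)  # random direction (assumption: uniform)
        trajectories.append(fly_until_crash(start, heading, room))
        # after the crash the drone goes back to `start`, so the next flight begins there
    return trajectories


trajectories = collect_trajectories(n_flights=5, start=(2.0, 1.5), room=(4.0, 3.0))
print([len(path) for path in trajectories])
```

Each trajectory ends at a collision, so every flight contributes both safe positions and the moments just before a crash.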

The quadcopter's camera records 30 frames per second. After each crash, the frames of that flight are split into two groups: frames from the good part of the trajectory go into the positive group, and frames taken just before the collision go into the negative group. During training, the drone crashed into surrounding objects 11,500 times and collected one of the largest databases of UAV crashes in the world. This “negative experience” contains information about the many different ways a quadcopter can crash.
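A minimal sketch of this labeling step, assuming that "just before the collision" means a fixed number of final frames. The article does not give the window size, so `n_negative` below is a hypothetical parameter, as is the function name `label_trajectory`.

```python
def label_trajectory(frames, n_negative=30):
    """Split the frames of one flight into (positive, negative) groups.

    The last `n_negative` frames, recorded just before the crash, are negative;
    everything earlier is positive. `n_negative=30` (one second at 30 frames/s)
    is an illustrative assumption, not a value taken from the article.
    """
    if n_negative <= 0:
        raise ValueError("n_negative must be positive")
    cut = max(len(frames) - n_negative, 0)
    return frames[:cut], frames[cut:]


# A 12-second flight at 30 frames/s; integers stand in for camera images.
frames = list(range(12 * 30))
positive, negative = label_trajectory(frames)
print(len(positive), len(negative))
```

No human annotation is involved: the crash itself supplies the label, which is what makes the approach self-supervised.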

The positive and negative groups were fed to the neural network as training input. The network learned to predict whether a given frame from the current trajectory resembles the safe positive examples or the negative examples that precede a collision. In other words, the neural network learned to predict where it is safe to fly.

The neural network diagram is shown in the illustration below. The weights of the convolutional layers (gray) were pretrained on ImageNet classification, while the weights of the fully connected layers (orange) were initialized randomly and their optimal values were learned during self-learning, based entirely on the crash data. The illustration shows the input data, frames from the camera (left), and the output of the neural network (the decision to fly straight, turn left, or turn right).

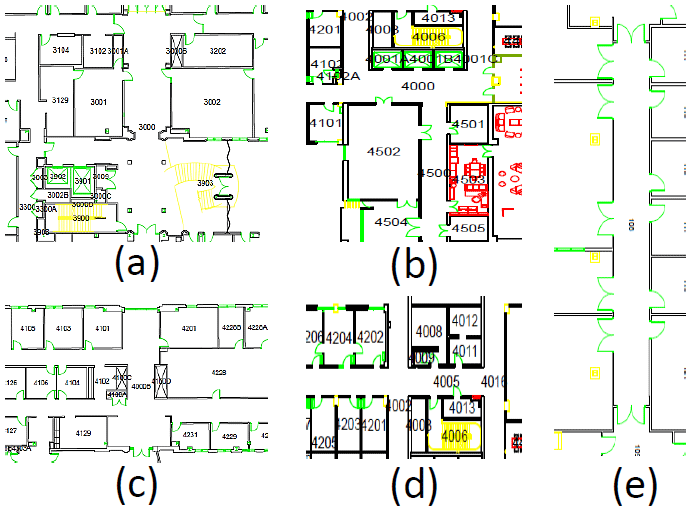

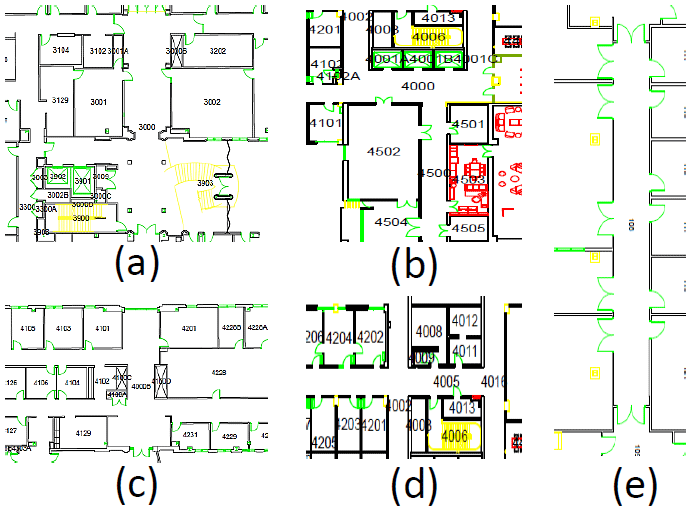
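One way the three-way output described above (fly straight, turn left, turn right) might be derived from per-direction safety predictions is sketched below. This is an assumption for illustration only: the function `choose_action`, the idea of one "safe" probability per direction, and the threshold value are hypothetical and not confirmed by the article.

```python
def choose_action(p_left, p_straight, p_right, straight_threshold=0.5):
    """Turn three 'safe to fly' probabilities into a steering decision.

    Keep flying straight while the view ahead looks safe enough; otherwise
    turn toward the side that looks safer. The threshold is a hypothetical value.
    """
    for p in (p_left, p_straight, p_right):
        if not 0.0 <= p <= 1.0:
            raise ValueError("probabilities must lie in [0, 1]")
    if p_straight >= straight_threshold:
        return "straight"
    return "left" if p_left > p_right else "right"


print(choose_action(0.2, 0.9, 0.4))  # view ahead looks safe
print(choose_action(0.7, 0.3, 0.4))  # blocked ahead, left looks safer
```

Preferring the straight direction whenever it is safe keeps the drone from oscillating between left and right turns in open space.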

The following diagram shows the test site - a four-storey house, where the drone was self-trained.

The result was a surprisingly effective navigation system for UAVs. This fairly simple approach to self-learning works particularly well in rooms with many obstacles, including moving ones such as people.

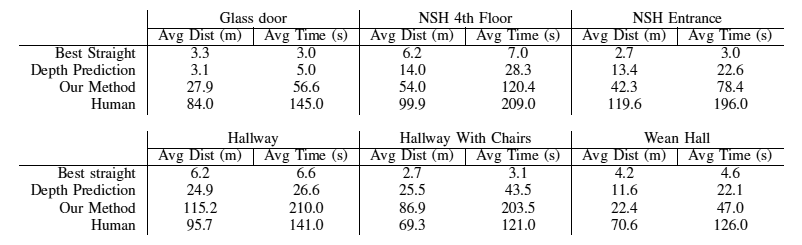

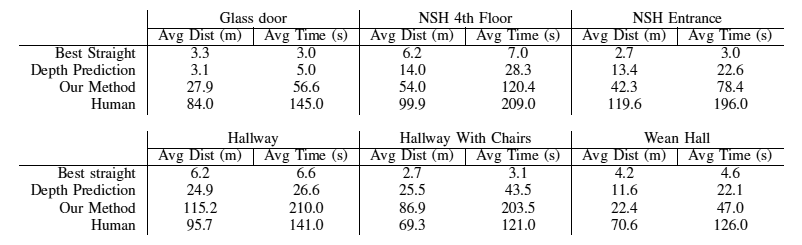

Comparative testing showed that this navigation system is 2 to 10 times more effective than self-learning systems with monocular distance estimation. The difference is especially noticeable near glass walls and featureless walls, which traditionally cause trouble for the latter.

The paper was published on April 19, 2017 on the arXiv.org preprint server (arXiv:1704.05588v2).

Source: https://habr.com/ru/post/403855/