Virtual Reality with Google

Author: Nikolai Khabarov, Embedded Expert at DataArt, smart home technology evangelist, inventor.

This article is a brief introduction to the basics of working with virtual and augmented reality. I did not set out to cover every feature of the Google VR SDK; the plan was to give a minimal foundation to those who are interested in these technologies and would like to start working with them.


From the history of 3D

To see a three-dimensional image, we first need to deliver two different images to the left and right eyes. When we look at any object in everyday life, our eyes see it from slightly different angles because they are some distance apart. This is what lets us perceive the space around us as three-dimensional.

The idea of a 3D image is quite old, and many technologies can reproduce a three-dimensional image. For example, everyone has seen anaglyph glasses with lenses of different colors in cinemas. The image is projected onto the screen from two viewpoints, with the color spectrum divided into two parts. Each viewpoint passes through only one of the filters, which creates the illusion of depth. The main disadvantage of this method is a noticeable loss of color fidelity.

The next stage in the technology's development was shutter glasses, also called light valves, which are used in cinemas as well. These glasses work differently: each lens holds a small screen with a resolution of one pixel that can either block the image completely or let it through completely. The glasses alternate between the two screens, so we see the image first with one eye and then with the other. To see a three-dimensional image, the switching of the glasses has to be synchronized with the TV or cinema screen. This can be done over a radio channel or, as is often the case, with an infrared receiver mounted on the glasses and a transmitter located near the screen. The disadvantage of this method is the loss of brightness: half of the time each eye sees nothing. In addition, the playback equipment itself needs a high frame rate.

An interesting development of the idea was polarization technology: with a filter, we can pass only light waves polarized in a particular direction. Surely everyone is familiar with the polarizing filters for cameras that help get rid of glare. For a 3D image, the screen is specially prepared so that some of its lines emit vertically polarized light and others emit horizontally polarized light. Cinemas also use circular polarization, in which the light is twisted to the right or to the left.

Simplicity is the key to success

The inventors of Google Cardboard did something simple and brilliant: they decided to use an ordinary smartphone to deliver the three-dimensional image. Before that, there were many kinds of glasses equipped with their own screens, but the resolution of those screens was very modest, and the glasses were very expensive. Google Cardboard, by contrast, is a piece of ordinary cardboard with two lenses; all that remains is to put your phone in. That is enough to see a three-dimensional picture with your own eyes.

The next step in the development of Cardboard was the appearance of Daydream.

Every phone screen has its own set of characteristics, and so do the Cardboard lenses. The classic Cardboard was marked with a QR code, and before placing your phone in it, you had to scan this code so that the lens parameters were entered into the phone. By combining the two sets of parameters, the device could calculate how to display the image so that it reached you with minimal distortion.

With Daydream, it is enough to simply put the device in: it reads the parameters of the glasses itself over NFC. The tag is hidden in the back cover.

There is also a small remote control. Many manufacturers produced Cardboard analogs with remotes of their own, of every kind: inertial, gravity-based, plain joysticks. The Daydream remote has two buttons and a small touchpad that lets you move objects in space, or move yourself, by sliding your finger. Cardboard had only one button, on the body of the glasses themselves, which simply registered a press and passed it on to the phone screen.

Cardboard was very cheap and could be used with any phone that physically fit into the box. At the time of this writing, only three phones are certified for Daydream: ZTE Axon 7, Google Pixel and Motorola Moto Z. New models are planned, but Daydream requires at least Android 7.0.

Interaction with virtual reality

The most interesting part begins when we do not just look at a three-dimensional image (3D can be seen on a TV, too) but start turning our heads.

Any modern phone is equipped with microelectromechanical (MEMS) sensors. These are microscopic devices in which electronics and small moving mechanical parts are assembled on a single chip, which makes it possible to track various external factors. Probably everyone knows what a gyroscope is: a device that tells you the angle of rotation. A MEMS gyroscope, however, reports not the angle of rotation but the angular velocity. Inside it is a small element that moves under the influence of the Coriolis force (I hope you remember it from your physics course). This gives us the angular velocity, and by integrating it we can determine the angle of rotation. The weakness of this method is that measurement errors accumulate during integration: after turning the phone in different directions, we will most likely not return to exactly the same point where we started.
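To make the drift tangible, here is a minimal sketch in Python rather than a real sensor driver. The sampling rate, the turn scenario and the constant bias of 0.5 deg/s are assumptions chosen for illustration; a real gyroscope has noisier and less predictable errors.

```python
def integrate_gyro(rates_dps, dt):
    """Integrate angular velocity samples (deg/s) into an angle (deg)."""
    angle = 0.0
    for rate in rates_dps:
        angle += rate * dt
    return angle


# Hypothetical scenario: turn at 90 deg/s for 1 s, then turn back for 1 s,
# sampled at 100 Hz. The phone ends up exactly where it started.
dt = 0.01
true_rates = [90.0] * 100 + [-90.0] * 100

# Assumed constant sensor bias added to every sample.
bias_dps = 0.5
measured_rates = [rate + bias_dps for rate in true_rates]

ideal_angle = integrate_gyro(true_rates, dt)        # close to 0 degrees
drifted_angle = integrate_gyro(measured_rates, dt)  # off by about bias * 2 s

print(f"ideal: {ideal_angle:.3f} deg, with bias: {drifted_angle:.3f} deg")
```

Even with this tiny bias, the integrated angle is off by about one degree after two seconds, and the error keeps growing for as long as we integrate.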

The second interesting sensor is the accelerometer. It measures the forces acting on the phone, in particular the acceleration due to gravity. At rest it shows the usual free-fall acceleration on our planet, approximately 9.8 m/s². But if we start to move or rotate the phone, we give it additional acceleration, and at those moments the sensor does not give the readings we need. Only when we stop moving does the system settle down so that we can identify the force directed towards the ground. As you can see, neither of the two sensors alone is suitable for simply determining the position of the phone in space.
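As a rough illustration, tilt can be recovered from the gravity vector while the phone is at rest. The sketch below is in Python; the axis convention (z pointing out of the screen), the function names and the 0.5 m/s² tolerance are assumptions for the example, not part of any SDK.

```python
import math

GRAVITY = 9.8  # m/s^2, approximate free-fall acceleration


def tilt_from_gravity(ax, ay, az):
    """Return (pitch, roll) in degrees from an accelerometer reading at rest."""
    pitch = math.degrees(math.atan2(-ax, math.sqrt(ay * ay + az * az)))
    roll = math.degrees(math.atan2(ay, az))
    return pitch, roll


def is_at_rest(ax, ay, az, tolerance=0.5):
    """The reading is trustworthy only if its magnitude is close to gravity."""
    magnitude = math.sqrt(ax * ax + ay * ay + az * az)
    return abs(magnitude - GRAVITY) < tolerance


# Phone lying flat on a table: gravity falls entirely on the z axis.
flat = tilt_from_gravity(0.0, 0.0, GRAVITY)

# Phone rolled by 30 degrees: gravity is split between the y and z axes.
angle = math.radians(30.0)
rolled = tilt_from_gravity(0.0, GRAVITY * math.sin(angle), GRAVITY * math.cos(angle))

# Phone being shaken: extra acceleration makes the reading unusable for tilt.
shaken_ok = is_at_rest(3.0, 4.0, 14.0)
```

The `is_at_rest` check reflects the limitation described above: once the magnitude of the reading departs from 9.8 m/s², the vector no longer points at the ground.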

However, given that the gyroscope drifts over time while the accelerometer is off only while the phone is being moved or rotated, the position of the device can be calculated using various mathematical methods, for example the Kalman filter. If you have programmed for Android, you have probably noticed that the Android SDK's list of sensors contains not only an accelerometer and a gyroscope but also the so-called TYPE_ORIENTATION. This is exactly one of those mathematical methods: it calculates the position of the phone using information from both sensors. The sensors themselves are usually assembled on a single chip, and very often a magnetic field sensor, that is, a compass, is added as well.
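A full Kalman filter is too long for a short example, so the sketch below uses a complementary filter instead, a simpler technique built on the same idea: trust the gyroscope over short intervals and let the accelerometer slowly correct the drift. This is not how Android computes its orientation; the weight `alpha`, the 100 Hz rate and the simulated readings are assumptions for illustration.

```python
def complementary_filter(gyro_rates, accel_angles, dt, alpha=0.98):
    """Fuse gyro rates (deg/s) and accelerometer angles (deg) into one angle.

    alpha close to 1 means: follow the integrated gyro in the short term,
    and pull slowly towards the accelerometer angle in the long term.
    """
    angle = accel_angles[0]
    history = []
    for rate, accel_angle in zip(gyro_rates, accel_angles):
        angle = alpha * (angle + rate * dt) + (1.0 - alpha) * accel_angle
        history.append(angle)
    return history


# Hypothetical scenario: the phone is held still at 10 degrees for 60 s.
dt = 0.01
samples = 6000
gyro_rates = [0.5] * samples      # assumed gyro bias, true rate is zero
accel_angles = [10.0] * samples   # accelerometer is reliable at rest

# Gyro alone: the bias is integrated for a full minute.
gyro_only = 10.0 + sum(rate * dt for rate in gyro_rates)

# Fused estimate: the error stays bounded instead of growing.
fused = complementary_filter(gyro_rates, accel_angles, dt)[-1]

print(f"gyro only: {gyro_only:.2f} deg, fused: {fused:.2f} deg")
```

In this scenario the gyro-only estimate wanders off by tens of degrees, while the fused estimate stays within a fraction of a degree of the true angle.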

Those who are interested in quadcopters have probably noticed the word “barometer” in the specifications of new models. Obviously, this device is not there for weather forecasting: the sensor measures atmospheric pressure in order to determine altitude. It works in the phone too, and we can calculate the phone's movement along the vertical axis to within centimeters. Naturally, the readings need to be smoothed with a filter.
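As a sketch of the idea, pressure can be converted to altitude with the standard international barometric formula and then smoothed. The Python below is illustrative only: the exponential smoothing and its factor of 0.1 are assumptions, one simple choice of filter among many, and the pressure samples are made up.

```python
SEA_LEVEL_HPA = 1013.25  # standard atmosphere pressure at sea level


def pressure_to_altitude(pressure_hpa, sea_level_hpa=SEA_LEVEL_HPA):
    """International barometric formula: altitude in meters from pressure in hPa."""
    return 44330.0 * (1.0 - (pressure_hpa / sea_level_hpa) ** (1.0 / 5.255))


def smooth(values, factor=0.1):
    """Exponential moving average: each new reading nudges the estimate."""
    estimate = values[0]
    result = []
    for value in values:
        estimate += factor * (value - estimate)
        result.append(estimate)
    return result


# Made-up noisy pressure readings around 1000 hPa.
readings_hpa = [1000.02, 999.97, 1000.05, 999.95, 1000.01, 999.99]
raw_altitudes = [pressure_to_altitude(p) for p in readings_hpa]
smoothed_altitudes = smooth(raw_altitudes)

# Spread of the raw values versus the smoothed ones.
raw_spread = max(raw_altitudes) - min(raw_altitudes)
smoothed_spread = max(smoothed_altitudes) - min(smoothed_altitudes)
```

Near sea level a change of 0.1 hPa corresponds to a little under a meter of altitude, which is why raw readings jump around and why smoothing is needed before using them for vertical movement.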

Practice

Google has released the Google VR SDK for Cardboard and Daydream. It is a set of components that help us develop applications specifically for displaying 3D reality, and it is available on both iOS and Android. The SDK makes it easy to build applications for both virtual and augmented reality.

The Google VR SDK takes care of all the work with the sensors. Thanks to it, we do not need to calculate quaternions to figure out how to rotate the scene in OpenGL when the user turns their head. Simply place the View in an activity, and the SDK will do everything on its own.
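For a sense of what the SDK spares us, here is a minimal sketch of rotating a view direction with a quaternion. It is plain Python, not SDK code; the function names are hypothetical, and the convention (Y axis up, camera looking down the negative Z axis, as is common in OpenGL) is an assumption.

```python
import math


def quat_from_axis_angle(axis, angle_deg):
    """Build a unit quaternion (w, x, y, z) for a rotation around an axis."""
    ax, ay, az = axis
    norm = math.sqrt(ax * ax + ay * ay + az * az)
    half = math.radians(angle_deg) / 2.0
    s = math.sin(half) / norm
    return (math.cos(half), ax * s, ay * s, az * s)


def quat_multiply(a, b):
    """Hamilton product of two quaternions."""
    aw, ax, ay, az = a
    bw, bx, by, bz = b
    return (
        aw * bw - ax * bx - ay * by - az * bz,
        aw * bx + ax * bw + ay * bz - az * by,
        aw * by - ax * bz + ay * bw + az * bx,
        aw * bz + ax * by - ay * bx + az * bw,
    )


def rotate_vector(q, v):
    """Rotate vector v by unit quaternion q, computed as q * v * conjugate(q)."""
    w, x, y, z = q
    conjugate = (w, -x, -y, -z)
    pure = (0.0, v[0], v[1], v[2])
    _, rx, ry, rz = quat_multiply(quat_multiply(q, pure), conjugate)
    return (rx, ry, rz)


# The head turns 90 degrees around the vertical (Y) axis.
head_turn = quat_from_axis_angle((0.0, 1.0, 0.0), 90.0)

# The "forward" direction of the camera before the turn.
forward = (0.0, 0.0, -1.0)
new_forward = rotate_vector(head_turn, forward)
```

With this convention the forward vector ends up pointing along the negative X axis. In a real application the head orientation arrives from the fused sensor data many times per second, and the scene has to be re-rendered for each eye every time.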

Let's take a quick look at how to get started with the Google VR SDK and create a simple Android application. First, you need Android Studio installed.

The SDK itself can be downloaded from the official git repository, and the SDK documentation is available online.

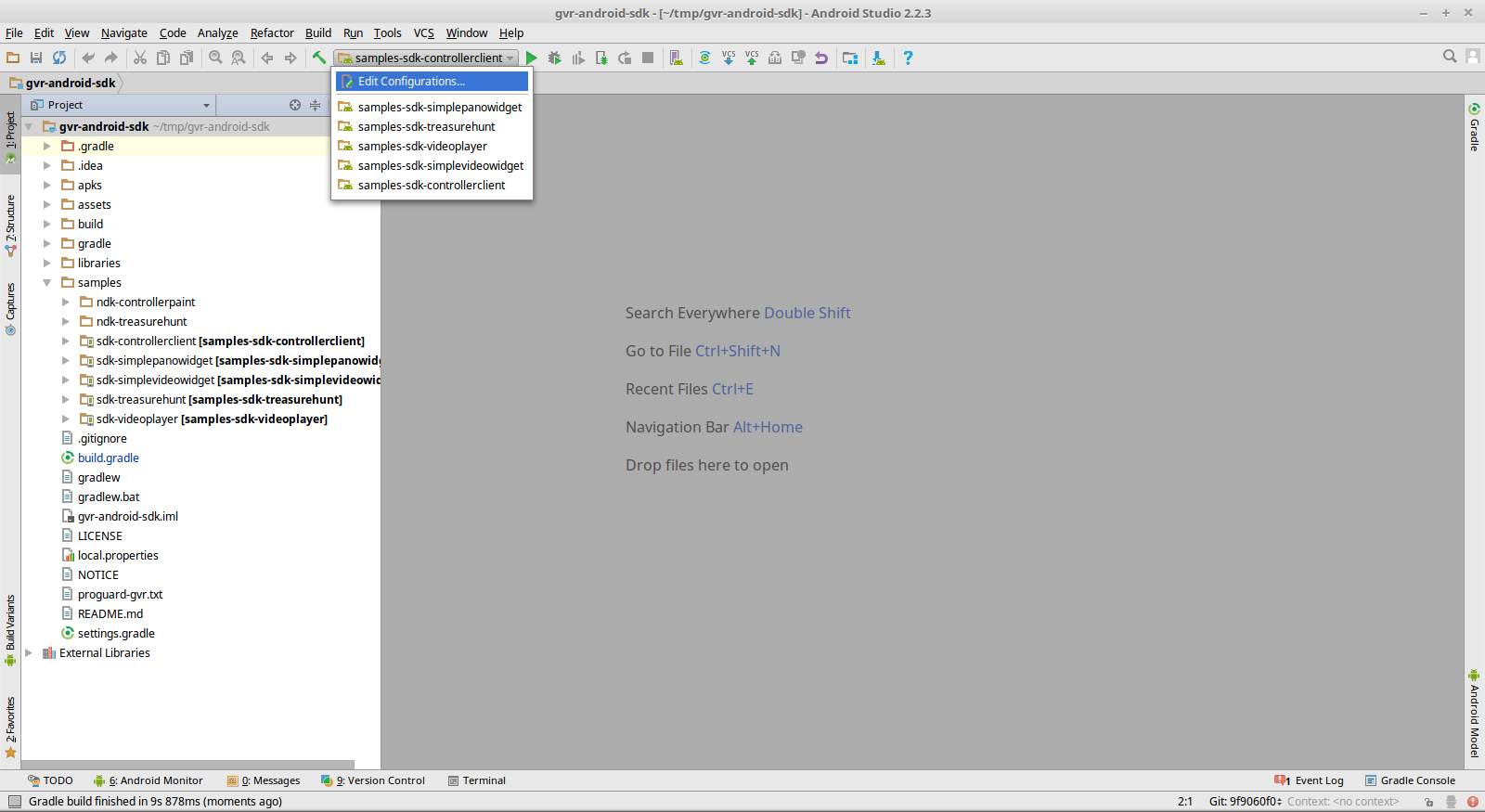

Now just open the root directory of the repository in Android Studio. There you will find examples that can be compiled from source and run on your Android device. The project already contains the configurations needed to run the examples.

Daydream also has its own small controller, which the SDK supports as well. The SDK provides a set of callback functions for it, so you can safely use this controller in your projects. There is a dedicated sample for it, 'sdk-controllerclient'.

For the most realistic perception, we also want to feel the sound: to tell where it comes from, where it is directed and in what environment it propagates. The library for this is GvrAudioEngine, which resembles a simplified OpenAL (Open Audio Library) or DirectSound3D. It is enough to tell it the coordinates of the sound source in space, and the library mixes the sound as needed. You can set different environment materials and, for example, build a stage on which different music plays from the speakers on the right and on the left; more sound sources can be added as well.

The 'sdk-treasurehunt' example is a fairly simple game implemented in pure OpenGL. It also uses GvrAudioEngine to give the game surround sound effects.

The Google VR SDK also includes a wonderful, albeit small, set of views that can be embedded in your applications. In particular, VrPanoramaView is a component for displaying 360-degree panoramic photos, including stereoscopic ones. You can simply put a photo into the application resources and call VrPanoramaView.loadImageFromBitmap() in just one line to display it. The result is a ready-made component that the user sees in the application: it is a regular View, like any other in Android.

You can display several of these components on one screen at once, or show one in full screen. To switch between glasses mode and a plain display, the user just taps a button that the library draws itself. Video can be added with the VrVideoView component, and if the viewer gets tired of watching at some point, the video can be skipped forward or back.

Two samples from the SDK, 'sdk-simplepanowidget' and 'sdk-simplevideowidget', show how easy it is to create applications with these built-in components.

The question arises: where do these videos and pictures come from? Some companies have already begun producing keychain-sized cameras with lenses on both sides. They can take 360-degree panoramic photos, and can even stitch them together on their own, but they are not suitable for video. For that you need a rather expensive rig and special software that can stitch the resulting footage and turn it into content suitable for display in virtual reality.

The Odyssey solution includes 16 synchronized cameras.

Application

Where can the technologies described here be applied? The first option is obviously games. As a rule, these are OpenGL shooters and other games where depth perception matters. Netflix recently launched a service for displaying 3D content, and this library can also help you write a custom solution for showing movies.

In a store, customers could use their phones to see an additional description of the goods next to the price tags.

The technologies can also be applied in real estate sales. It is usually inconvenient for the buyer and the seller to arrange a meeting. With a video like this, it is much easier for the buyer to decide in advance whether a particular property is worth going to see at all.

Museums are another ideal place for this technology. In modern museums, the halls often contain computers through which you can look up additional information or listen to something. But these computers are regularly occupied, while everyone always has a phone at hand, and the phone can add elements of augmented reality to the exhibition. The library works with almost any device model, so if we want to, we can convey more information to museum visitors.

Here is a short Google advertisement that shows what Google Earth looks like through virtual reality glasses. You can watch such videos on a regular monitor, but with glasses the same pictures look three-dimensional. Movement in space is controlled with the Daydream remote, and on some phones it is possible to use the barometer and to move simply by turning your head.

Another very interesting idea for using these technologies is 3D printing.

One startup suggested that users turn to augmented reality to build models, alongside any other modeling tools. With a conventional controller you cannot get a highly accurate model, since the error would be very large. But we can make a small souvenir with such a controller and send it to a 3D printer directly from the application. The idea is a good one and is likely to be monetized very soon, especially since printers for such products are gradually getting cheaper.

Source: https://habr.com/ru/post/401809/
