Deep learning algorithm diagnoses skin cancer as well as a qualified dermatologist

Deep learning is a promising machine learning method used in a large number of areas (information security, analysis of research results, image recognition). In image recognition, the point is not just that a machine can tell a cat from a dog, as Google's neural network did. The technology may also prove useful in medicine, in particular in oncology.

Scientists from Stanford have created a system that can make a diagnosis by analyzing a photograph of the patient's skin. Recent tests have shown impressive results: the algorithm diagnoses as accurately as highly qualified dermatologists with extensive experience. To evaluate the technology, the authors of the project asked professional dermatologists to diagnose images of skin areas from various people (the diagnoses were then verified), and afterwards showed the same images to the machine.

“We have created a very powerful deep learning algorithm that is capable of learning from data,” said Andre Esteva, one of the authors of the study. “Instead of hard-coding such a system, we let it make decisions itself.”


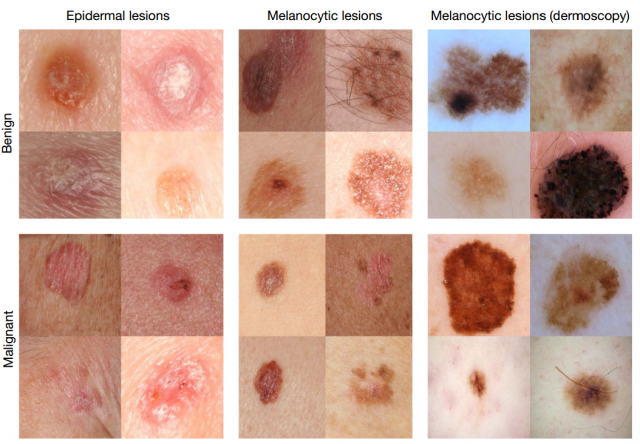

The algorithm is a “deep convolutional neural network”. Its capabilities build on Google Brain, a Google project whose goal is to explore the possibilities of machine learning. The computing power of the Google Brain system enables third-party developers to create various machine learning projects. When the scientists began their work, the neural network had already been trained on 1.28 million images divided into 1,000 object categories. But the researchers had a clear goal: they needed to train the network to correctly identify carcinoma and seborrheic keratosis in photographs of affected human skin, and to teach it to tell a malignant carcinoma from a benign seborrheic keratosis.
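Reusing a network that was already trained on general images and teaching it a new task is commonly called transfer learning. The following is a minimal pure-Python sketch of that idea, not the researchers' code: `frozen_features` is a hypothetical stand-in for the pretrained layers, the "images" are short lists of made-up pixel values, and only a small new output layer is trained.

```python
import math
import random


def frozen_features(image):
    """Hypothetical stand-in for pretrained layers; it is never updated."""
    mean = sum(image) / len(image)
    spread = max(image) - min(image)
    return [mean, spread]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train_head(samples, labels, epochs=500, lr=0.5):
    """Train only the new output layer (logistic regression) with SGD."""
    weights, bias = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for image, label in zip(samples, labels):
            feats = frozen_features(image)
            pred = sigmoid(sum(w * f for w, f in zip(weights, feats)) + bias)
            error = pred - label
            weights = [w - lr * error * f for w, f in zip(weights, feats)]
            bias -= lr * error
    return weights, bias


def predict(image, weights, bias):
    """Return True when the toy model assigns the image to class 1."""
    feats = frozen_features(image)
    return sigmoid(sum(w * f for w, f in zip(weights, feats)) + bias) >= 0.5


# Toy data (an assumption for illustration, not medical data):
# class 0 images have nearly uniform pixels, class 1 images vary widely.
rng = random.Random(0)
samples, labels = [], []
for _ in range(20):
    samples.append([rng.uniform(0.4, 0.6) for _ in range(16)])
    labels.append(0)
    samples.append([rng.uniform(0.0, 1.0) for _ in range(16)])
    labels.append(1)

weights, bias = train_head(samples, labels)
train_accuracy = sum(
    predict(img, weights, bias) == bool(lab) for img, lab in zip(samples, labels)
) / len(samples)
print(f"training accuracy of the new output layer: {train_accuracy:.2f}")
```

In a real system the frozen part would be a deep convolutional network rather than two hand-written statistics, but the division of labour is the same: general-purpose features are kept, and only the task-specific part is fitted to the new labels.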

In addition, the computer needed to distinguish these conditions from ordinary pigment spots, rashes, and other possible changes in the skin. A doctor with a great deal of knowledge and experience can do this almost without error. The scientists set themselves the task of “raising” just such a professional out of the neural network.

Another problem was that the researchers did not have a sufficiently large set of images to train the system on, so they had to build an image database themselves. “We collected photos from the Internet and asked the doctors to help us sort the images,” says one of the authors of the study. Some of the authors took pictures from foreign sites, so it was sometimes simply impossible to understand the descriptions, since the accompanying texts were in Arabic, German, Latin, and other languages.

To examine a patient's skin, dermatologists often use a medical instrument called a dermatoscope. It provides a fixed level of magnification so that the doctor can see the skin in detail. Because the device produces roughly the same kind of picture every time, a photograph of a skin area taken with it is understandable to any dermatologist in any country. Unfortunately for the study participants, not all of the photos from the Internet were taken with a dermatoscope. The shooting angle, the lighting, and the degree of magnification all varied.

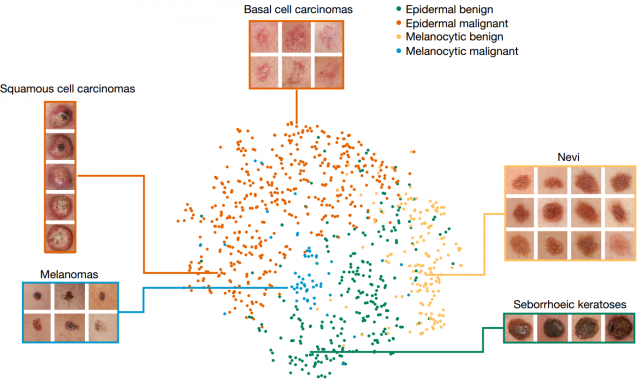

As a result, the scientists assembled 130,000 images covering about 2,000 different skin diseases. They built a data set from this image library and then “fed” all of it to the neural network. Each image was supplied as raw pixels together with a brief label naming the disease. The algorithm was then “asked” to show the stages of development of the same disease, after it had identified patterns in how a lesion grows.

The categories into which the algorithm sorted the original photo database

Once everything was ready, the project authors compared the diagnoses made by the system with the verified diagnoses of the same patients' skin diseases and with those made by two dozen dermatologists from Stanford Medical School. To test the algorithm, the scientists used only high-quality images taken by professionals. Diagnostic accuracy was 91% for both the algorithm and the doctors.
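A comparison of this kind comes down to scoring both sets of answers against the same verified diagnoses. The sketch below shows one simple way to do that; the function name and the five labels are placeholders invented for illustration, not data from the study.

```python
def accuracy(predictions, verified):
    """Share of predictions that match the verified diagnoses."""
    if not verified or len(predictions) != len(verified):
        raise ValueError("need two non-empty lists of equal length")
    correct = sum(p == v for p, v in zip(predictions, verified))
    return correct / len(verified)


# Placeholder labels for five hypothetical images (not study data).
verified = ["malignant", "benign", "benign", "malignant", "benign"]
algorithm = ["malignant", "benign", "malignant", "malignant", "benign"]
dermatologist = ["malignant", "benign", "benign", "benign", "benign"]

algorithm_accuracy = accuracy(algorithm, verified)
dermatologist_accuracy = accuracy(dermatologist, verified)
print(algorithm_accuracy, dermatologist_accuracy)  # prints 0.8 0.8
```

Equal accuracy does not mean identical behaviour: in this made-up example the algorithm raises one false alarm while the dermatologist misses one malignant case, which is one reason it matters how the machine classifies images and not only how often it is right.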

The authors plan to develop their work gradually. In particular, the researchers want to create an application that works directly with photographs of problem skin areas uploaded by the patients themselves. According to the researchers, this will simplify access to medical services for a large number of patients, and smartphones can provide invaluable help here. “My main eureka moment was when I realized just how ubiquitous smartphones would be,” says one of the project initiators, describing how the work went from an idea to a working service. “Everyone now has a powerful computer with a large number of sensors, including a camera. What if it could be used to photograph skin cancer or other types of disease?”

In any case, the researchers need to run more tests to fine-tune the algorithm before releasing the technology to the public. It is also extremely important to understand how the machine classifies images.

“Computerized image classification is a great help to dermatologists, who can make more accurate diagnoses with it. But the algorithm's effectiveness still has to be confirmed, and this must be done before the practice is introduced in hospitals,” said Susan Swetter, professor of dermatology at Stanford.

Source: https://habr.com/ru/post/400967/
