Project FlyAI: artificial intelligence keeps a colony of flies alive

For a month now, the life of a colony of flies in Duluth, Minnesota, USA, has depended entirely on software. The software is a self-learning weak AI that fully sustains the insects living inside a special enclosure. In particular, it supplies the flies with food (powdered milk with sugar) and water.

The insects' survival depends on how accurately the computer identifies the object in front of its cameras. If the system recognizes the object as a fly and decides that the insects need feeding, they get food. If it makes a mistake, the flies receive neither food nor water and suffer (as far as flies can) from hunger and thirst for a long time. The project is called FlyAI and is a kind of parody of a computer-controlled human settlement. In any case, the author of the project keeps watch over all of this.

“We need to be aware of what to expect from artificial intelligence, because it will appear one way or another,” says David Bowen of the University of Minnesota Duluth. He believes we need to think now about what real artificial intelligence will be like, so that problems do not arise later. According to the professor, despite the difficulties, people will be able to make AI useful to humans rather than harmful to them.

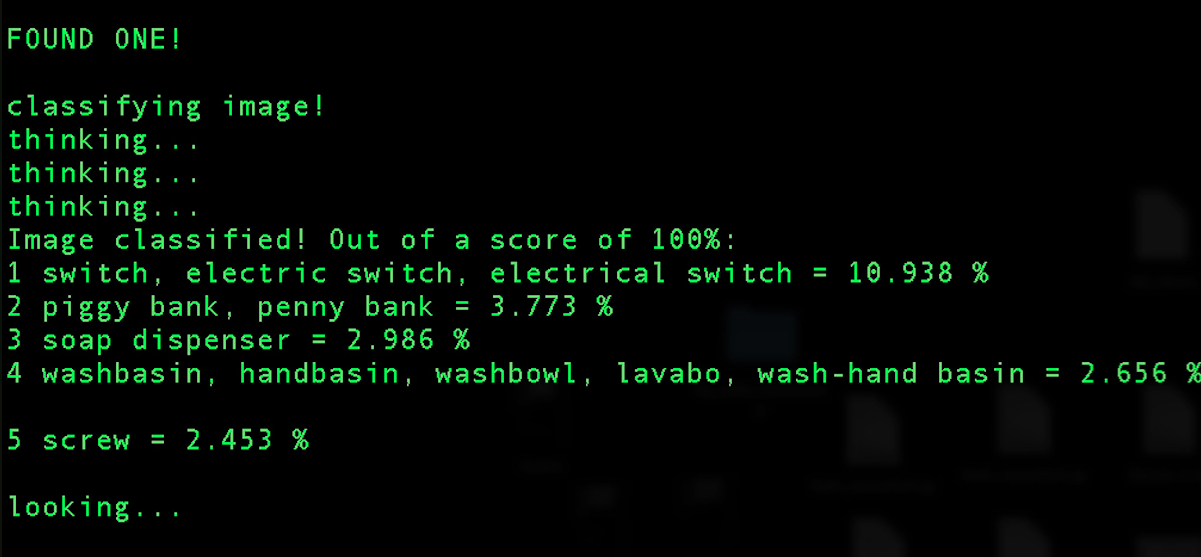

The central element of the FlyAI project is a self-learning neural network. Like most modern AI systems, it is clearly far from perfect. FlyAI lacks an image database large enough for effective training, so the system periodically makes mistakes, identifying flies as switches or other objects. When it misidentifies them, the computer does not “turn on” the life support system, and, as mentioned above, the flies go hungry and thirsty. Still, the colony has survived for a month now, and the flies are doing quite well. If something goes badly wrong, the system's developer will step in to save the insects and prevent a mass die-off. “They die, I think, of natural causes,” says Bowen. “They get the opportunity to grow old here.”

The project, he said, is a stylized embodiment of the ideas of Nick Bostrom, an AI specialist at Oxford. Bostrom believes that openness among researchers working on artificial intelligence may itself be a problem. In particular, this concerns the OpenAI project, which has already been covered on Geektimes. “If you have a ‘make everybody bad’ button, you are unlikely to want to share it with everyone,” Bostrom says. But in the case of OpenAI, such a button would be available to everyone.

However, Bostrom believes that artificial intelligence should benefit humanity. “It is important for us to create an artificial intelligence that is smart enough to learn from its mistakes. It will be able to improve itself indefinitely. The first version will be able to create a second, better one, and the second, being smarter than the original, will create an even more advanced third, and so on. Under certain conditions, this process of self-improvement can repeat until an intelligence explosion is reached: the moment when the system's intellectual level jumps in a short time from a relatively modest level to superintelligence,” he said.

Bowen, however, argues that the risk to humanity lies not in the limitless capabilities of a future strong AI, but in the software on which human lives will come to depend (something he has no doubt about). “One of the problems is that we don’t even fully understand what artificial intelligence is,” says Bowen. “And that is scary.”

Strong AI, of course, will be nothing like today's voice search engines and digital assistants. Its capabilities are likely to be vast, but so are the risks. Stephen Hawking, for one, believes that AI is humanity's greatest mistake. Bill Gates claims that within a few decades artificial intelligence will be developed enough to give humans cause for concern. And Elon Musk has called AI “the main threat to human existence.”

The software platform at the heart of FlyAI often makes mistakes

Of course, the project itself may seem somewhat absurd, but its author does not think so. All he really wanted was to draw public attention to his project and to the problem of AI, and in that, one could say, he has succeeded. In any case, we do not yet know when full-fledged AI will appear, or whether it will appear at all. It could happen tomorrow, or in a few decades. To be safe for humans, machine intelligence must understand the value of human life. After all, a human is not a fly.

Source: https://habr.com/ru/post/400045/