Your brain makes decisions without you.

In a world full of ambiguity, we see what we want to see.

Palmer Stadium at Princeton, 1951. It was a classic matchup: the undefeated Princeton Tigers, led by star tailback Dick Kazmaier, a talented player and runner who received a record number of votes for the Heisman Trophy (the annual award for the most outstanding player in college football), against Dartmouth. Princeton won the penalty-filled game, but not without cost: more than ten players were injured, and Kazmaier himself suffered a broken nose and a concussion. It was a “rough game,” as The New York Times put it, mildly, one “which led to counter-accusations from both rivals.” Each side blamed the other for playing dirty.

The game was written about not only on the sports pages but also in the Journal of Abnormal and Social Psychology. Shortly afterward, psychologists Albert Hastorf and Hadley Cantril interviewed students and showed them a film of the game. They wanted to know: “Which team started the rough play?” The answers differed so widely that the researchers reached a striking conclusion: “These studies show that there is no objective game that people simply watch.” Each person saw the game they wanted to see. But how did this happen? It may have been an example of what Leon Festinger, the father of “cognitive dissonance,” had in mind when he observed how “people comprehend and interpret information in order to fit it into their existing beliefs.”

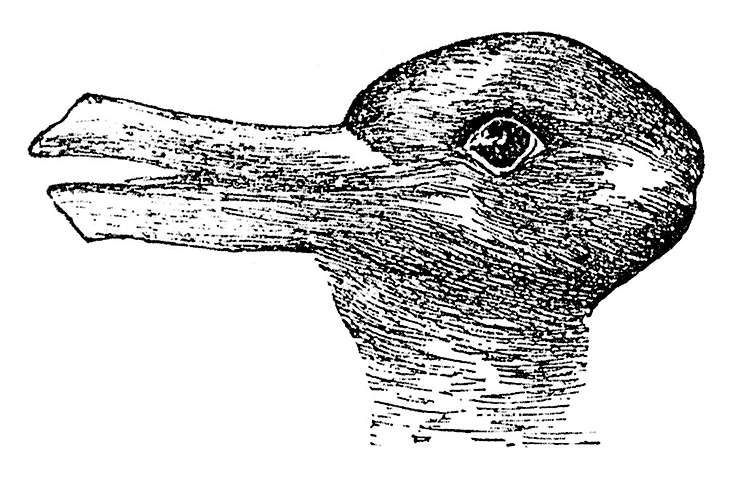

The duck-rabbit illusion, first shown by psychologist Joseph Jastrow


Watching and interpreting the film of the game, the students behaved like children shown the famous duck-rabbit illusion. At Easter, more children see the rabbit (the Easter Bunny is an Easter symbol in the United States, Canada, and some Western European countries); at other times of year, most see the duck [1]. The picture supports both interpretations, and switching from one to the other takes effort. When I showed it to my 5-year-old daughter, she said she saw a duck. When I asked whether she saw anything else, she leaned in and frowned. “Maybe there’s some other animal here?” I suggested, trying not to push. Suddenly her face lit up with recognition and a smile. “Rabbit!”

I need not have worried. As an experiment by Alison Gopnik and her colleagues showed, not a single child aged 3 to 5 switched interpretations of a similar figure (in their case, a vase-face figure) on their own [2]. Among older children tested, a third found the second interpretation. Most of the rest could see it once the ambiguity was explained to them. Interestingly, the children who noticed both interpretations on their own did better on “theory of mind” tests, which measure the ability to understand that other people can hold beliefs different from one’s own. For example, the children were shown a crayon box that turned out to contain candles, and were then asked to predict what another child would think was inside.

And if you did not immediately see both the duck and the rabbit, or the ambiguity in some other picture, don’t panic. Every study includes adults who, in the researchers’ words, “possibly have complex object-pictorial abilities” and yet are unable to switch. Nor is there a “correct” interpretation: despite a slight bias toward the duck, there are plenty of “rabbit” people. Studies looking for a link between interpretation and handedness came to nothing. My wife sees a rabbit; I see a duck. We are both left-handed.

But although anyone can be shown both the duck and the rabbit, there is one thing no one can see: the duck and the rabbit at the same time.

When I asked Lisa Feldman Barrett, head of the interdisciplinary emotion research laboratory at Northeastern University, whether we live, metaphorically, in a duck-rabbit world, she quickly replied: “I don’t think this is a metaphorical question.” According to her, the brain is wired so that there are far more connections among its own neurons than connections carrying sensory information in from outside. The brain fills in the details of an incomplete picture and looks for meaning in ambiguous input. In her words, the brain is an “inference-generating organ.” She describes a working hypothesis, called predictive coding, that is gathering more and more evidence: perceptions originate in the brain and are corrected by information coming from outside. Otherwise, the brain could not cope with the sheer volume of sensory input. “That would be inefficient,” she says. “The brain has to find other ways to work.” So it constantly predicts. And when “the incoming sensory information does not match your predictions,” she says, “you either change the predictions or change the sensory information you receive.”

The link between sensory input, prediction, and the formation of expectations has been observed in the laboratory. In a study published in the journal Neuropsychologia, when people were asked to judge whether a statement linking an object and a color was true (for example, “yellow banana”), the same brain areas became active as in ordinary color perception. It is as if thinking about a banana being yellow is not so different from actually seeing yellow: a kind of pre-perception that occurs when we recall. The researchers cautioned, however, that perception and the representation of knowledge are not the same phenomenon.

We build our ideas from information that reaches us from the outside world through the window of the senses, but those ideas then act like lenses, focusing on what they want to see. In a psychology lab at New York University, subjects watched a 45-second video of a fierce struggle between a police officer and an unarmed man [3]. From the video it was impossible to tell for certain whether the officer, who was trying to handcuff a man resisting arrest, was behaving improperly. Before watching, the subjects were asked to describe their general attitude toward the police. Then the subjects, whose eye movements were tracked, were asked to evaluate the video. Unsurprisingly, people who strongly disliked the police thought the officer should be punished. But this held only for those who looked at the officer a lot while watching the video. Among people who barely looked at the officer, judgments about punishment were the same regardless of their attitude toward the police.

As Emily Balcetis, a co-author of the study and director of the Social Perception and Motivation Lab at New York University, told me, we often assume that bias arises in the decision-making process. But, she asks, “what aspects of perception precede that decision?” She believes attention can be “thought of as what we allow our eyes to look at.” In the police video, “your eye movements determine differences in how you understand the facts.” People more opposed to the police spent more time looking at the officer (perhaps, as with the duck-rabbit, they could not look at both men at the same time). “If you feel that someone is an outsider,” says Balcetis, “you look at him more. You are looking at a person who may be a danger to you.”

But what matters in forming such judgments? This, too, is ambiguous. Many studies have found bias in subjects looking at photos of people from their own and other racial groups. But subjects can then be told that the person in the photo has been assigned to a fictional group to which the subject also belongs. “In the first 100 milliseconds, we solve the duck-rabbit problem,” says Jay Van Bavel, a professor of psychology at New York University. Are we looking at a member of our group, or at a person of a different race? His study found that in-group members evoked more positive neural activity, while the effect of race almost disappeared (as if, as with the duck-rabbit, we see only one interpretation at a time) [4].

We live in a world in which, “in a sense, practically everything we see can be interpreted in many ways,” says Van Bavel. As a result, we are constantly choosing between the duck and the rabbit.

And we cling stubbornly to our choices. In one study, Balcetis and her colleagues showed subjects pictures of either “sea creatures” or “farm animals.” Subjects had to identify the pictures, gaining or losing points with each one they recognized. If they finished the game in the black, they got jelly beans; in the red, a can of beans. The catch was that the last picture could be seen as either a horse or a seal (the seal was slightly harder to make out). To avoid the beans, subjects needed to see whichever version of the picture worked in their favor, and most of the time they did. But what if subjects saw both versions and simply reported the one that suited them? The experiment was repeated with a new group of subjects, this time tracking their eye movements. Those motivated to see a farm animal often looked first at the “farm animal” button (clicking it recorded their answer and advanced to the next picture), and vice versa. A glance at the “right” button gave them away, like a tell in poker, revealing their subconscious intentions. Their perception had adjusted to the choice that benefited them.

But when the experimenters pretended that an error had occurred and told subjects they actually needed to see a sea creature, most subjects stuck with their first interpretation of the picture, even after their motivation had changed. “They could not reinterpret the image that had already formed in their heads,” she says, “because the attempt to make sense of an ambiguous picture removes the ambiguity from it.”

A recent study by Kara Federmeier and her colleagues suggests that something similar happens when memories form [5]. They studied people who held a mistaken belief about a political candidate’s position on an issue (most people once wrongly believed that Michael Dukakis, not George Bush, had declared he would be the “education president”). Recording the subjects’ brain activity with EEG, they found that the “memory signals” for the incorrect information were the same as those for information the subjects had remembered correctly. Their interpretation of events had become the truth.

This transformation can happen subconsciously. In a study published in the journal Pediatrics, more than 1,700 parents received information from one of four pilot campaigns designed to correct misperceptions about the dangers of the measles, mumps, and rubella (MMR) vaccine [6]. None of the campaigns increased parents’ willingness to vaccinate their children. Among the parents least inclined to vaccinate, the campaigns did reduce the belief that MMR causes autism, but their intention to vaccinate also fell. Showing photographs of disease symptoms, meant to illustrate the dangers of not vaccinating, only strengthened people’s belief that vaccines have dangerous side effects.

How the brain turns information into what it treats as truth, and what might change a person’s mind and make them switch their interpretation of the duck-rabbit, remains unclear. There has long been debate over what exactly drives the switch between interpretations. Some think the interpretation arises “bottom-up.” Perhaps the neurons that produce the “duck” interpretation become tired, or “saturated,” and a new interpretation, the rabbit, suddenly appears. Perhaps it matters how the image is drawn, or how it is shown to the subjects.

The opposing theory describes a “top-down” process: some higher-level brain activity that favors a change of interpretation because we have learned about it, we expect it, and we are looking for it. If people are asked not to switch interpretations, they do so less often; if they are asked to switch faster, the number of switches increases [7]. Others believe the model is hybrid, working both top-down and bottom-up at the same time [8].

Jürgen Kornmeier of the Institute for Frontier Areas of Psychology and Mental Health and his colleagues propose one hybrid model that calls into question the very distinction between top-down and bottom-up processing. As Kornmeier describes it, even the earliest activity of the eyes and the earliest pattern-recognition systems show top-down influence, so the flow of information cannot be one-way. He and his colleagues believe that even before we notice the rabbit or the duck, our brain may already have subconsciously registered that the image is ambiguous and decided, so to speak, not to tell us about it. In his view, the brain itself is deceiving you.

None of this inspires confidence that political or other problems can be solved simply by giving people accurate information. A study by Dan Kahan, a professor of law and psychology at Yale University, challenges the idea that people fail to agree on issues such as climate change because some reason analytically while others rely on illogical, heuristic-driven bias [9]. People who scored well on tests of “cognitive reflection” and scientific literacy were in fact especially likely to show what he calls “ideologically motivated cognition.” They paid closer attention to the problem and saw the duck they already knew was there.

References

1. Brugger, P. & Brugger, S. The Easter Bunny in October: Is it disguised as a duck? Perceptual and Motor Skills 76, 577-578 (1993).

2. Mitroff, S.R., Sobel, D.M., & Gopnik, A. Reversing: Spontaneous alternating by uninformed observers. Perception 35, 709-715 (2006).

3. Granot, Y., Balcetis, E., Schneider, K.E., & Tyler, T.R. Journal of Experimental Psychology: General (2014).

4. Van Bavel, J.J., Packer, D.J., & Cunningham, W.A. The neural substrates of in-group bias. Psychological Science 19, 1131-1139 (2008).

5. Coronel, J.C., Federmeier, K.D., & Gonsalves, B.D. Social Cognitive and Affective Neuroscience 9, 358-366 (2014).

6. Nyhan, B., Reifler, J., Richey, S., & Freed, G.L. Pediatrics (2014). doi:10.1542/peds.2013-2365

7. Kornmeier, J. & Bach, M. Ambiguous figures – what does the stimulus. Frontiers in Human Neuroscience 6 (2012). doi:10.3389/fnhum.2012.00051

8. Kornmeier, J. & Bach, M. Object perception: When it’s our brain. Journal of Vision 9, 1-10 (2009).

9. Kahan, D.M. Ideology, motivated reasoning, and cognitive reflection: An experimental study. Judgment and Decision Making 8, 407-424 (2013).

Source: https://habr.com/ru/post/399539/
