How artificial intelligence is changing the chip market

In less than 12 hours, three different people offered me money to spend an hour on the phone with a stranger.

They all said they had liked my article about how Google is building a new computer chip for AI, and they all urged me to discuss the topic with their client. Each described the client as a manager at a large hedge fund, but none would name him.


The inquiries came from so-called expert networks: research firms that connect investors with people who can help them understand particular markets and gain a competitive advantage (sometimes, by all appearances, through insider information). These expert networks wanted me to explain how Google's AI processor will affect the chip market. But first, they wanted me to sign a non-disclosure agreement. I refused.

These unsolicited, specific and insistent requests, which arrived three weeks ago, underline the radical changes in store for the highly profitable computer chip market, changes driven by the rise of AI. The managers of those hedge funds can see the changes coming, but they don't know exactly how they will play out.

Naturally, no one knows exactly how they will play out.

Today, Internet giants such as Google, Facebook, Microsoft, Amazon and Baidu are exploring a wide range of technologies that could lead to breakthroughs in AI, and their decisions will affect the revenues of companies such as Intel and nVidia. But for now, even the computer scientists at these online giants do not know what the future holds.

Going deeper

These companies run their online services from data centers containing thousands of servers, each of which is built around a central processing unit, or CPU. But as they adopt a form of AI called deep neural networks, they are supplementing the CPU with other processors. Neural networks learn tasks by analyzing large amounts of data, from recognizing faces and objects in photographs to translating between languages, and they need more than CPU power alone.

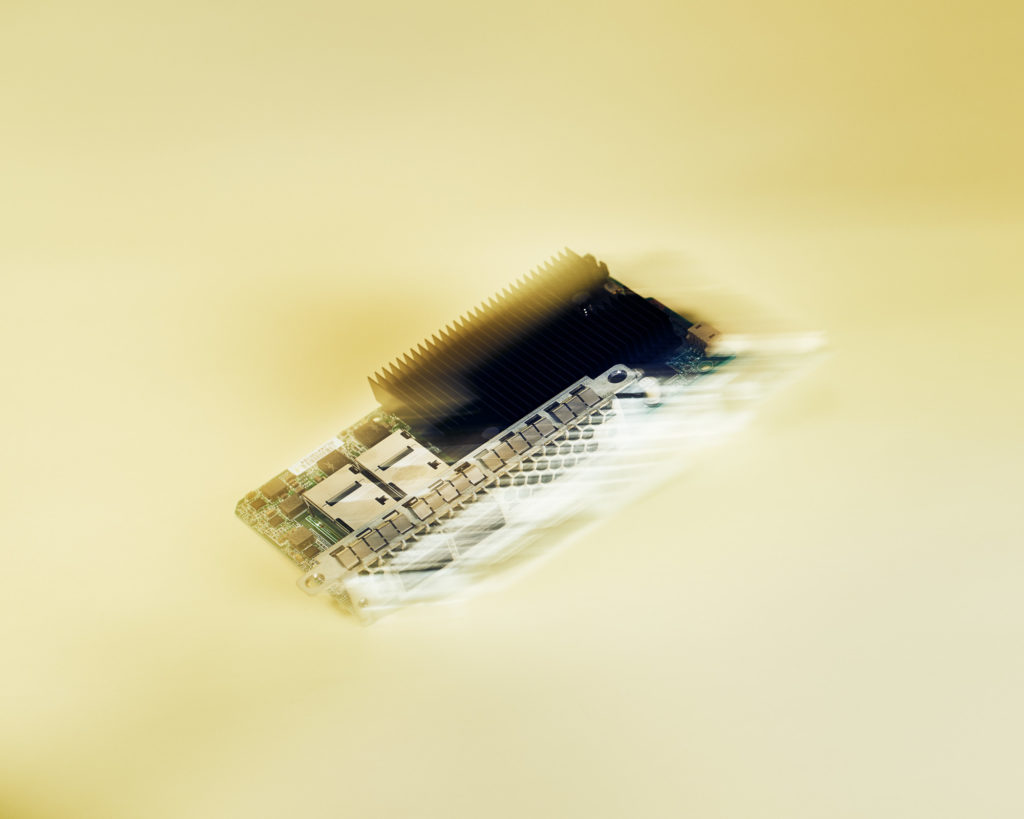

So Google created the Tensor Processing Unit, or TPU. Microsoft uses a processor called a field-programmable gate array, or FPGA. Many companies use machines equipped with graphics processing units, or GPUs. And all of them are looking toward a new generation of chips that could speed up AI on smartphones and other devices.

Because these companies operate at such a large scale, every decision they make matters. They buy and use more computer hardware than anyone else on the planet, and that gap will only widen as cloud computing grows in importance. If Google chooses one processor over another, that choice can shift the fundamentals of the chip industry.

The TPU is a threat to companies like Intel and nVidia because Google makes it itself. But GPUs also play a big role at Google and similar companies, and nVidia is the main manufacturer of those chips. Meanwhile, Intel is moving into this field through its acquisition of Altera, the company that sells FPGAs to Microsoft. At $16.7 billion, it was the largest acquisition in Intel's history, which underlines how much the chip market is changing.

First training, then execution

Making sense of all this is difficult, in part because neural networks operate in two stages. The first is training, in which a company like Google teaches a neural network to perform a task, for example recognizing faces in a photo or translating from one language to another. The second is execution, in which ordinary people like you and me actually use the neural network: we post a photo from a class reunion on Facebook, and it automatically tags the people in it. These two stages are very different, and each calls for a different approach, including a different kind of processor.
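The two stages can be sketched in a few lines of Python. This is only a toy illustration, assuming a single artificial "neuron" that learns the logical AND of two inputs; the function names `train` and `predict` and the tiny data set are invented for the example and do not describe how any of these companies build their systems.

```python
# Illustrative toy only: one artificial neuron in plain Python.
# The names train/predict and the AND data set are assumptions for this sketch.

def predict(weights, bias, x):
    """Execution stage: one cheap pass using already-trained parameters."""
    total = bias + sum(w * xi for w, xi in zip(weights, x))
    return 1 if total > 0 else 0

def train(samples, epochs=20, lr=1.0):
    """Training stage: many passes over labeled data, adjusting parameters."""
    weights = [0.0, 0.0]
    bias = 0.0
    for _ in range(epochs):
        for x, label in samples:
            # Nudge the parameters whenever the current guess is wrong.
            error = label - predict(weights, bias, x)
            weights = [w + lr * error * xi for w, xi in zip(weights, x)]
            bias += lr * error
    return weights, bias

# Stage 1: training on labeled examples (here, the logical AND of two inputs).
samples = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
weights, bias = train(samples)

# Stage 2: execution, where the trained neuron answers new requests.
results = [predict(weights, bias, x) for x, _ in samples]
print(results)
```

Even in this toy, training loops over the data many times while execution is a single pass per request, which hints at why the two stages can favor different hardware.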

Today, GPUs are the best option for training. They were designed to render images for games and other graphical applications, but in recent years Google has found that these chips can also process, in an energy-efficient way, the huge amounts of data needed to train neural networks. This means you can train more neural networks with less hardware. Microsoft AI researcher XD Huang calls the GPU "a real weapon." His team recently finished building a system that recognizes human speech, a project that took them a year. Without GPUs, he said, it would have taken five. After publishing the work on this system, he opened champagne at the home of Jen-Hsun Huang, the CEO of nVidia.

On to smartphones

At the same time, other companies are building chips to run neural networks on smartphones and other devices. IBM is working on such a chip, although many doubt its effectiveness. Intel has decided to acquire Movidius, a company that already supplies chips for mobile devices.

Intel understands that the market is changing. Four years ago, the company said that it sold more server processors to Google than to all but four other companies. This shows how much Google and similar companies can influence the chip market. Now Intel is placing bets in every area. In addition to acquiring Altera and Movidius, it has also decided to buy Nervana, a company that makes AI chips.

This makes sense, since the market is only beginning to take shape. “We are at the foot of a new big wave of growth,” Intel Vice President Jason Waxman told me, “and it is being fed by AI.” The only question is where this wave will take us.

Source: https://habr.com/ru/post/399021/
