HELIOS headset uses Intel RealSense technology to help people with visual impairments

The goal of the HELIOS project is to extend and complement human perception with modern computer vision technologies. According to a study published by the World Health Organization, about 285 million people worldwide are visually impaired: 39 million are blind and 246 million have low vision. We believe it is very important to improve mobility, safety and access to knowledge for people with visual impairments.

We use computer vision, artificial intelligence and Intel RealSense technology to create modern solutions that can help people with visual impairments to solve a variety of everyday problems. Our approach is to develop an intelligent headset that helps people with partial or complete loss of vision.

HELIOS headsets provide a range of special features for people with visual impairments, helping them to perform various tasks and activities easier and more confidently.

')

The HELIOS Touch is designed for people with severe visual impairment or blindness. This solution uses the HTI interface to transmit visual data to the user through tactile signals. Due to this, the possibilities of orientation in the near space and avoidance of obstacles are realized.

Three-dimensional model HELIOS Touch

The HELIOS Light headset helps people with impaired vision. With the help of technologies of augmented and virtual reality, the headset expands the possibilities of users' visual perception. Streams of color and depth data from Intel RealSense form adaptable visual cues to help you perform a variety of everyday tasks.

Three-dimensional model HELIOS Light

The main purpose of HELIOS is to provide the user with more complete information about the surrounding space, which significantly increases the freedom of movement and safety of the user.

Another important feature is the ability to read text without using Braille. Headset HELIOS can read the contents of books, magazines and other printed materials, such as menus in restaurants.

In addition, HELIOS provides a new level of context for personal interaction by recognizing the faces of friends and social signals.

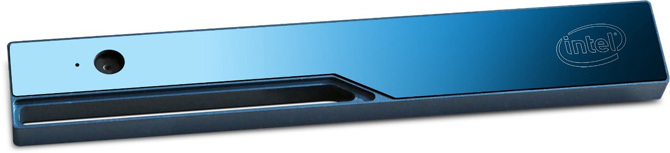

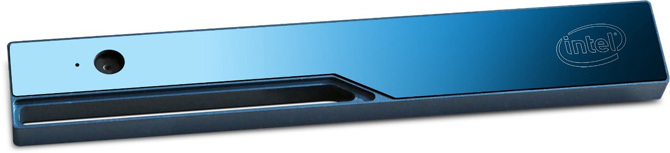

Intel RealSense cameras provide color and distance measurement. Thanks to this, the HELIOS system receives high-quality depth data and a color image. Due to their functionality, performance and compactness, these components are ideally suited for integration into the HELIOS system.

Camera Intel RealSense R200. For more information, see this article.

Razer Stargazer - Third-party Intel RealSense SR300 Camera Version

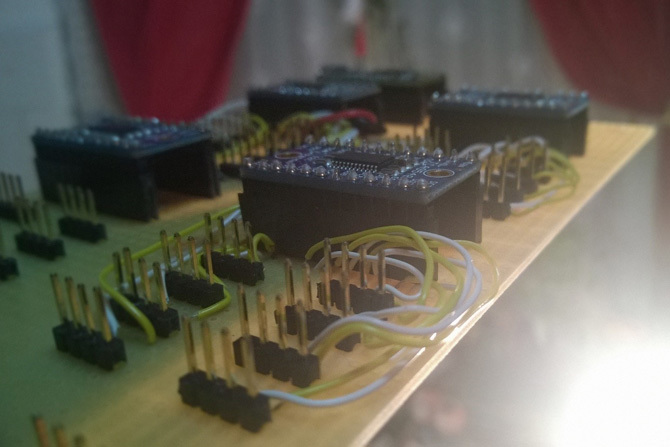

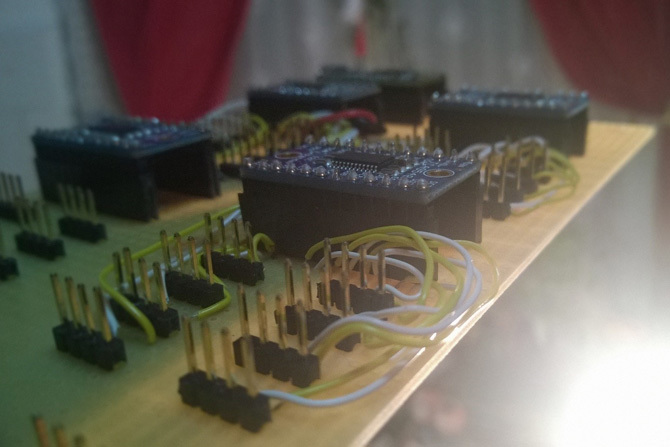

HTI is a hardware component of HELIOS Touch developed by our team. It is designed to convert visual data into tactile signals, providing an additional level of information that is presented accurately and unobtrusively.

HTI Test Board

The Razer OSVR Hacker Development Kit is a virtual and augmented reality platform with extensive customization options. It is the perfect off-the-shelf component for HELIOS Light due to the use of open source code, expansion options and a successful hardware design.

Razer OSVR HDK

The latest generation of Intel Compact PCs is a powerful platform for running HELIOS software components in real time with high performance, low power consumption and high mobility.

Intel NUC

The Intel RealSense SDK is the main software component of the HELIOS system. This package, without additional configuration, provides access to a color image at a high frame rate, image depth and infrared image streams, supports a wide range of computer vision algorithms for tasks such as tracking a person, recognizing faces, creating three-dimensional maps. The SDK comes with a huge collection of sample projects, and extensive documentation has been prepared for it.

The following code example shows the main components for developing a text-to-speech module using RealSense and UWP (Windows Universal Platform):

Mihai Leoveanu has a congenital severe visual impairment, but this did not prevent him from becoming an outstanding person.

He is a convinced optimist and one of the best students in his graduating class. He is currently working on a thesis project dedicated to equipping a historic site - the royal court of Targovishte - in order to increase convenience in terms of special features. Thanks to the proposed improvements, visually impaired tourists will be able to get more complete information about this historic place.

Mihai became the first tester of our headset.

Mihai tests HELIOS in action

Mihai reads with HELIOS

During the experiments, Mihai provided feedback on all the capabilities of the HELIOS system he used. He naturally received new sources of information and after a few minutes he was able to successfully use the headset to obtain more accurate information about the surrounding space.

The results of the development and testing are very encouraging. For users, tasks such as perceiving the world around and reading without using Braille are greatly simplified. With further development, the HELIOS system will become an indispensable and very useful assistant for people with visual impairments.

We use computer vision, artificial intelligence and Intel RealSense technology to build solutions that help people with visual impairments solve a variety of everyday problems. Our approach is to develop an intelligent headset for people with partial or complete loss of vision.

Models and capabilities of HELIOS headsets

HELIOS headsets provide a range of special features for people with visual impairments, helping them perform a variety of tasks and activities more easily and confidently.


▍HELIOS Touch

HELIOS Touch is designed for people with severe visual impairment or blindness. It uses the HTI interface to convey visual data to the user as tactile signals, which lets the user orient themselves in the nearby space and avoid obstacles.

3D model of HELIOS Touch
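The article does not describe how HTI encodes visual data, so what follows is only an illustration of one common approach for depth-to-touch displays, not the HELIOS implementation. It assumes a depth frame already converted to meters (0 meaning no reading), a hypothetical grid of `rows` by `cols` actuators, and an assumed working range of 0.3 to 3.0 m. Each grid cell takes the nearest valid distance in its part of the image, and closer obstacles produce stronger vibration.

```csharp
using System;

// Hypothetical sketch, not the HTI algorithm: maps a depth frame (meters,
// 0 = no reading) to per-actuator intensities in [0, 1] for a rows x cols grid.
public static class TactileMapper
{
    public static double[,] Map(float[,] depthMeters, int rows, int cols,
                                double minRange = 0.3, double maxRange = 3.0)
    {
        int height = depthMeters.GetLength(0);
        int width = depthMeters.GetLength(1);
        if (rows <= 0 || cols <= 0 || rows > height || cols > width)
            throw new ArgumentException("Grid must be non-empty and no larger than the frame.");
        if (maxRange <= minRange)
            throw new ArgumentException("maxRange must exceed minRange.");

        var intensity = new double[rows, cols];
        for (int r = 0; r < rows; r++)
        {
            for (int c = 0; c < cols; c++)
            {
                // Pixel bounds of this cell; integer division spreads any remainder.
                int y0 = r * height / rows, y1 = (r + 1) * height / rows;
                int x0 = c * width / cols, x1 = (c + 1) * width / cols;

                // Nearest valid reading in the cell: the closest obstacle matters most.
                double nearest = double.PositiveInfinity;
                for (int y = y0; y < y1; y++)
                    for (int x = x0; x < x1; x++)
                    {
                        double d = depthMeters[y, x];
                        if (d > 0 && d < nearest) nearest = d;
                    }

                // Linear ramp: minRange or closer -> 1, maxRange or farther (or no data) -> 0.
                double t = (maxRange - nearest) / (maxRange - minRange);
                intensity[r, c] = Math.Max(0.0, Math.Min(1.0, t));
            }
        }
        return intensity;
    }
}
```

Taking the minimum rather than the average per cell is a deliberate choice here: a thin pole in one corner of a cell should still be felt.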

▍HELIOS Light

The HELIOS Light headset is intended for people with low vision. Using augmented and virtual reality technologies, it extends the user's visual perception: color and depth streams from the Intel RealSense camera are turned into adaptable visual cues that help with a variety of everyday tasks.

3D model of HELIOS Light
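The exact cues HELIOS Light renders are not specified, so this is a hypothetical example of the idea of building visual cues from color and depth: pixels nearer than a threshold are tinted so that obstacles stand out. It assumes the depth frame is already aligned pixel-for-pixel with the color frame and given in meters; the 1 m and 2 m thresholds, the red/yellow tints and the 50% blend are placeholder values.

```csharp
using System;

// Hypothetical sketch of a depth-driven visual cue: near pixels are tinted red,
// "caution" pixels yellow, everything else is left unchanged.
public static class DepthCue
{
    public struct Rgb
    {
        public readonly byte R, G, B;
        public Rgb(byte r, byte g, byte b) { R = r; G = g; B = b; }
    }

    static readonly Rgb Near = new Rgb(255, 0, 0);
    static readonly Rgb Caution = new Rgb(255, 255, 0);

    public static Rgb Apply(Rgb color, float depthMeters,
                            float nearLimit = 1.0f, float cautionLimit = 2.0f, double alpha = 0.5)
    {
        // 0 means no depth reading; distant pixels need no cue.
        if (depthMeters <= 0 || depthMeters >= cautionLimit) return color;
        Rgb tint = depthMeters < nearLimit ? Near : Caution;
        return Blend(color, tint, alpha);
    }

    // Applies the cue to a whole frame; assumes color and depth are aligned.
    public static void ApplyFrame(Rgb[] colors, float[] depthMeters)
    {
        if (colors.Length != depthMeters.Length)
            throw new ArgumentException("Color and depth frames must be the same size.");
        for (int i = 0; i < colors.Length; i++)
            colors[i] = Apply(colors[i], depthMeters[i]);
    }

    // Per-channel linear blend: (1 - alpha) * color + alpha * tint.
    static Rgb Blend(Rgb a, Rgb b, double alpha) =>
        new Rgb(Mix(a.R, b.R, alpha), Mix(a.G, b.G, alpha), Mix(a.B, b.B, alpha));

    static byte Mix(byte x, byte y, double alpha) =>
        (byte)Math.Round((1 - alpha) * x + alpha * y, MidpointRounding.AwayFromZero);
}
```

Blending instead of replacing the pixel keeps the underlying scene visible, which matters for users who still rely on their residual vision.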

The main purpose of HELIOS is to give the user more complete information about the surrounding space, which improves the user's freedom of movement and safety.

Another important feature is reading text without Braille: the HELIOS headset can read aloud books, magazines and other printed materials, such as restaurant menus.

In addition, HELIOS adds context to social interaction by recognizing friends' faces and social cues.
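How HELIOS recognizes friends is not described. A common pattern, shown here purely as a hypothetical sketch, is to turn each detected face into a descriptor vector (with some face-recognition model, assumed here) and compare it with enrolled descriptors using cosine similarity, rejecting matches below a threshold. The `FriendMatcher` class and its 0.8 threshold are illustrative assumptions, not part of the RealSense SDK.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical sketch: nearest-neighbor matching of face descriptors
// against enrolled friends, with a similarity threshold for "unknown".
public sealed class FriendMatcher
{
    private readonly Dictionary<string, float[]> known = new Dictionary<string, float[]>();
    private readonly double threshold;

    public FriendMatcher(double threshold = 0.8) { this.threshold = threshold; }

    public void Enroll(string name, float[] descriptor) => known[name] = descriptor;

    // Returns the most similar enrolled name, or null if nobody reaches the threshold.
    public string Identify(float[] descriptor)
    {
        string bestName = null;
        double bestScore = threshold;
        foreach (var entry in known)
        {
            double score = Cosine(descriptor, entry.Value);
            if (score >= bestScore) { bestScore = score; bestName = entry.Key; }
        }
        return bestName;
    }

    static double Cosine(float[] a, float[] b)
    {
        if (a.Length != b.Length) throw new ArgumentException("Descriptor sizes differ.");
        double dot = 0, na = 0, nb = 0;
        for (int i = 0; i < a.Length; i++)
        {
            dot += a[i] * b[i];
            na += a[i] * a[i];
            nb += b[i] * b[i];
        }
        return (na == 0 || nb == 0) ? 0 : dot / (Math.Sqrt(na) * Math.Sqrt(nb));
    }
}
```

Returning null rather than the closest name avoids announcing a stranger as a friend, which would be worse for the user than saying nothing.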

Equipment description

▍Intel RealSense technology

Intel RealSense cameras capture both a color image and depth (distance) data, so the HELIOS system receives high-quality depth and color streams from a single device. Their functionality, performance and compact size make them well suited for integration into the HELIOS system.

Intel RealSense R200 camera. For more information, see this article.

Razer Stargazer: a third-party version of the Intel RealSense SR300 camera
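To illustrate how depth data turns into a usable distance, here is a hypothetical helper that estimates how far away whatever lies inside a rectangular region of the depth frame is. It assumes a row-major buffer of raw 16-bit depth values and a scale factor in meters per unit (0.001 if the values are millimeters; check the units your camera and SDK actually report). The class and parameter names are illustrative.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical sketch: median distance (in meters) inside a region of a
// row-major 16-bit depth frame. A raw value of 0 means "no reading".
public static class DistanceEstimator
{
    public static double? MedianMeters(ushort[] depth, int frameWidth,
                                       int x, int y, int width, int height,
                                       double metersPerUnit = 0.001)
    {
        if (frameWidth <= 0 || depth.Length % frameWidth != 0)
            throw new ArgumentException("Frame width does not match the buffer size.");
        int frameHeight = depth.Length / frameWidth;
        if (x < 0 || y < 0 || width <= 0 || height <= 0 ||
            x + width > frameWidth || y + height > frameHeight)
            throw new ArgumentOutOfRangeException(nameof(width), "Region lies outside the frame.");

        var valid = new List<ushort>();
        for (int row = y; row < y + height; row++)
            for (int col = x; col < x + width; col++)
            {
                ushort raw = depth[row * frameWidth + col];
                if (raw != 0) valid.Add(raw);   // skip pixels without a depth reading
            }

        if (valid.Count == 0) return null;

        // The median is robust to the isolated outliers typical of depth sensors.
        valid.Sort();
        int mid = valid.Count / 2;
        double medianRaw = valid.Count % 2 == 1
            ? valid[mid]
            : (valid[mid - 1] + valid[mid]) / 2.0;
        return medianRaw * metersPerUnit;
    }
}
```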

▍Tactile HTI interface

HTI is a hardware component of HELIOS Touch developed by our team. It converts visual data into tactile signals, giving the user an additional channel of information that is delivered accurately and unobtrusively.

HTI Test Board
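One way to keep tactile output unobtrusive, offered here only as a hypothetical sketch since the HTI firmware is not described, is to smooth actuator intensities over time so that frame-to-frame depth noise does not make the actuators flicker. This version combines an exponential moving average with a dead band that ignores changes too small to be worth signalling; `alpha` and `deadBand` are assumed tuning parameters.

```csharp
using System;

// Hypothetical sketch: temporal smoothing of per-actuator intensities.
public sealed class TactileSmoother
{
    private readonly double[] state;
    private readonly double alpha;     // weight of the newest frame, 0 < alpha <= 1
    private readonly double deadBand;  // raw changes smaller than this are ignored

    public TactileSmoother(int actuatorCount, double alpha = 0.3, double deadBand = 0.05)
    {
        if (alpha <= 0 || alpha > 1) throw new ArgumentOutOfRangeException(nameof(alpha));
        state = new double[actuatorCount];
        this.alpha = alpha;
        this.deadBand = deadBand;
    }

    // Feeds one frame of raw intensities in [0, 1] and returns a copy of the smoothed output.
    public double[] Update(double[] raw)
    {
        if (raw.Length != state.Length) throw new ArgumentException("Unexpected actuator count.");
        for (int i = 0; i < state.Length; i++)
        {
            double delta = raw[i] - state[i];
            if (Math.Abs(delta) < deadBand) continue;   // too small to be worth a tactile change
            state[i] += alpha * delta;                   // exponential moving average step
        }
        return (double[])state.Clone();
    }
}
```

A larger `alpha` reacts faster to a suddenly appearing obstacle at the cost of more jitter, so in practice it would be a trade-off to tune with users.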

▍Virtual reality and open source

The Razer OSVR Hacker Development Kit (HDK) is a virtual and augmented reality platform with extensive customization options. Its open-source code, expandability and well-designed hardware make it an ideal off-the-shelf component for HELIOS Light.

Razer OSVR HDK

▍Intel NUC

The latest generation of Intel NUC compact PCs is a powerful platform for running the HELIOS software components in real time, combining high performance with low power consumption and portability.

Intel NUC

Software: Intel RealSense SDK

The Intel RealSense SDK is the main software component of the HELIOS system. Out of the box, it provides high-frame-rate color, depth and infrared streams and supports a wide range of computer vision algorithms for tasks such as person tracking, face recognition and 3D mapping. The SDK ships with a large collection of sample projects and extensive documentation.

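The following code example shows the main components of a text-to-speech module built with RealSense and UWP (Universal Windows Platform). A RealSense sample reader delivers color frames, the Windows OCR engine recognizes text in the latest frame, and the speech synthesizer reads the text aloud: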

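```csharp
// Namespaces used by this excerpt. Intel.RealSense comes from the original code;
// PerceptionVideoProfile is assumed to come from Windows.Devices.Perception.
using System;
using System.Collections.Generic;
using System.Linq;
using Intel.RealSense;
using Windows.Devices.Perception;
using Windows.Globalization;
using Windows.Media.Ocr;
using Windows.Media.SpeechSynthesis;

// senseManager, readers, ColorProfile, currentRealSenseDevice, StatusMessage,
// IsStreaming, ocrFrame, RealSense.OcrFrame and PlaybackVoice are defined
// elsewhere in the app and are not part of this excerpt.

// Hypothetical field added so that frame updates are synchronized on a shared object.
private readonly object ocrFrameLock = new object();

public async void StartRealSenseStreaming()
{
    Status streamingStatus;

    // Set RealSense sample reader and bind SetOcrFrame event
    SampleReader sampleReader = SampleReader.Activate(senseManager);
    sampleReader.SampleArrived += SetOcrFrame;

    // Set RGB stream profile and device info filter
    Dictionary<StreamType, PerceptionVideoProfile> profiles = new Dictionary<StreamType, PerceptionVideoProfile>();
    profiles[StreamType.STREAM_TYPE_COLOR] = ColorProfile;
    sampleReader.EnableStreams(profiles);
    readers.Add(sampleReader);

    if (currentRealSenseDevice != null)
        senseManager.CaptureManager.FilterByDeviceInfo(currentRealSenseDevice.DeviceInfo);

    // Initialize the pipeline, start streaming and set the status message
    if ((streamingStatus = await senseManager.InitAsync()) == Intel.RealSense.Status.STATUS_NO_ERROR)
    {
        if ((streamingStatus = senseManager.StreamFrames()) == Intel.RealSense.Status.STATUS_NO_ERROR)
        {
            StatusMessage = "Streaming started";
            IsStreaming = true;   // only report streaming once it has actually started
        }
        else
        {
            StatusMessage = "Failed to stream: " + streamingStatus.ToString();
        }
    }
    else
    {
        StatusMessage = "Initialization failed: " + streamingStatus.ToString();
    }
}

private void SetOcrFrame(object module, SampleArrivedEventArgs args)
{
    // Store the latest color frame for OCR processing
    Sample sample = args.Sample;
    if (sample == null) return;
    var localOcrFrame = sample.Color;
    if (localOcrFrame == null) return;

    // Lock on a shared object: locking on the per-frame `sample` would not
    // synchronize with other code that accesses ocrFrame.
    lock (ocrFrameLock)
    {
        ocrFrame = localOcrFrame.SoftwareBitmap;
    }
}

private async void TextToSpeech()
{
    // Set up the OCR engine for English (TryCreateFromLanguage returns null if unavailable)
    OcrEngine ocrEngine = OcrEngine.TryCreateFromLanguage(new Language("en"));
    if (ocrEngine == null) return;

    // Recognize text in the current RealSense OCR frame
    var ocrResult = await ocrEngine.RecognizeAsync(RealSense.OcrFrame);
    if (!String.IsNullOrEmpty(ocrResult.Text))
    {
        // Set up the speech synthesizer, preferring a male voice and
        // falling back to the default voice if none is installed
        var voices = SpeechSynthesizer.AllVoices;
        using (SpeechSynthesizer speechSynthesizer = new SpeechSynthesizer())
        {
            speechSynthesizer.Voice = voices.FirstOrDefault(v => v.Gender == VoiceGender.Male)
                                      ?? SpeechSynthesizer.DefaultVoice;
            var voiceStream = await speechSynthesizer.SynthesizeTextToStreamAsync(ocrResult.Text);

            // Play back the synthesized voice
            PlaybackVoice(voiceStream);
        }
    }
}
```

Testing and validation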

Mihai Leoveanu was born with a severe visual impairment, but this has not prevented him from becoming an outstanding person.

He is a committed optimist and one of the best students in his graduating class. He is currently working on a thesis project on making a historic site, the Royal Court of Târgoviște, more accessible. With the proposed improvements, visually impaired tourists will be able to get more complete information about this historic place.

Mihai became the first tester of our headset.

Mihai tests HELIOS in action

Mihai reads with HELIOS

During the experiments, Mihai gave feedback on every HELIOS capability he used. He adapted naturally to the new sources of information, and within a few minutes he was successfully using the headset to get more accurate information about his surroundings.

Conclusion

The development and testing results are very encouraging: HELIOS greatly simplifies tasks such as perceiving the surrounding world and reading without Braille. With further development, the HELIOS system can become an indispensable assistant for people with visual impairments.

Source: https://habr.com/ru/post/399019/
