"Photoshop" for human speech

On November 3, 2016, at the Adobe MAX technology conference, Adobe presented a very interesting scientific and technical development, which in the future could turn into a popular software application. If we describe the invention briefly, then this is a program for semantic editing of human speech. It applies not just the standard method of synthesis of collected phonemes (compilation synthesis), but also auxiliary methods that increase realism. This is the intellectual choice of triphons and the use of the specic characteristics of the voice sample.

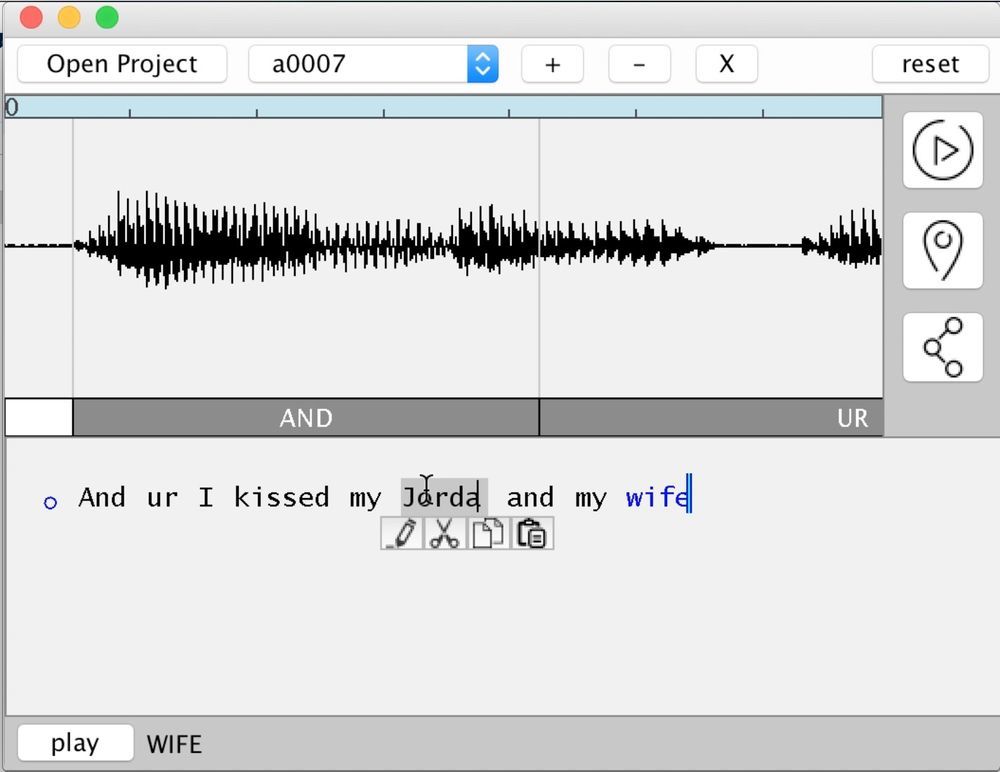

As a result, the user writes an arbitrary text - and the program voices it with the voice to which it was trained. You can quickly add to the speech any words or cut unnecessary.

In practice, the program presented in the framework of the VoCo project works as follows. First, the phoneme base is assembled for the voice of a specific person in a particular language. For realistic results, the program needs at least 20 minutes of human speech. The bigger, the better. On the basis of the collected phonemes (triphons), the program can then collect almost any new words from the bricks.

')

Fragment of VoCo presentation at MAX conference

In a sense, VoCo works, reminiscent of the work of the context brush in Photoshop. She also takes fragments from different places of the picture - and collects a new image from these fragments. A piece of forest from a photo of a forest, a piece of grass from another image and a girl from a third photo - and we get a completely new photorealistic work with forest, grass and a girl in the foreground. If the work is done professionally, the installation is very difficult to determine. So in Soviet times they erased from the history of people who suddenly became enemies of the people. There was a person in the photo - and now there is a void or another person.

So VoCo technology allows you to complement a person’s speech with arbitrary words and phrases.

At the MAX conference, one of the developers Zeyu Jin held a presentation. In a previously published scientific paper, he is listed as an employee of Princeton University, along with a colleague Adam Finkelstein. The technology was developed by Adobe Research in conjunction with Princeton University.

As planned by Adobe, the technology will help content creators to more easily edit audio tracks: dialogs and voice-over to quickly correct a mistake or make changes to the storyline.

Adobe emphasizes that in this case it is more appropriate to speak of “voice conversion” than of classical voice synthesis. The goal of a voice conversion is that the original voice is transformed so that to the listener it appears to be the voice of another person, following the pattern of the latter’s voice.

The technical fundamentals of voice conversion are described in more detail in the aforementioned research paper prepared jointly with Princeton University. Its authors show that the developed CUTE technique qualitatively surpasses other methods of voice conversion. Alternative conversion methods are usually based on the parallel analysis of identical phrases of the source and the target, followed by the calculation of certain conversion vectors in an address space. After that, any arbitrary fragment of the original voice can be converted using the vectors obtained. But these methods suffer from unpleasant side effects - the speech synthesized in this way is deaf, slurred.

Adobe researchers managed to overcome the shortcomings of other techniques using the hybrid method CUTE. Four main components of this technique are encrypted in the title: compilation synthesis (Concatenative synthesis); Unit selection; preliminary selection of triphons, that is, units of three phonemes (Triphone pre-selection); use of sample properties (Exemplar-based features).

Compilation synthesis is reduced to composing a message from a pre-recorded phoneme dictionary. This is the main method of speech synthesizers, which are equipped with various devices: from military aircraft to home appliances, in the reference services of mobile operators, etc.

As the name implies, the developed hybrid technique combines several methods of speech synthesis and voice conversion.

The scientific work presents the results of comparative tests with other methods of voice conversion, in which CUTE significantly exceeds competitors. At the same time, some of his shortcomings are mentioned: he, like everyone, suffers from an insufficient number of phonemes in the database during the synthesis of new words, which is why phonetically correct but not very realistic results are generated. In addition, it depends on the operation of the speech recognition engine for correct phonetic segmentation.

It is not yet known whether Adobe is going to implement this promising development in the form of a real commercial product. But now we can say that such a program would become very popular, provided that the synthesis of voice from phonemes is realistic. For example, podcasters could use it to generate podcasts from text. It can also be used to sound audiobooks using the voice of an arbitrary person (for example, your own girlfriend). This technology will certainly be used in Hollywood for the voice of personnel in the absence of an actor. For example, if a contract was broken with him or he died in the middle of filming.

Source: https://habr.com/ru/post/398865/

All Articles