A robot has learned to play with Lego by watching humans

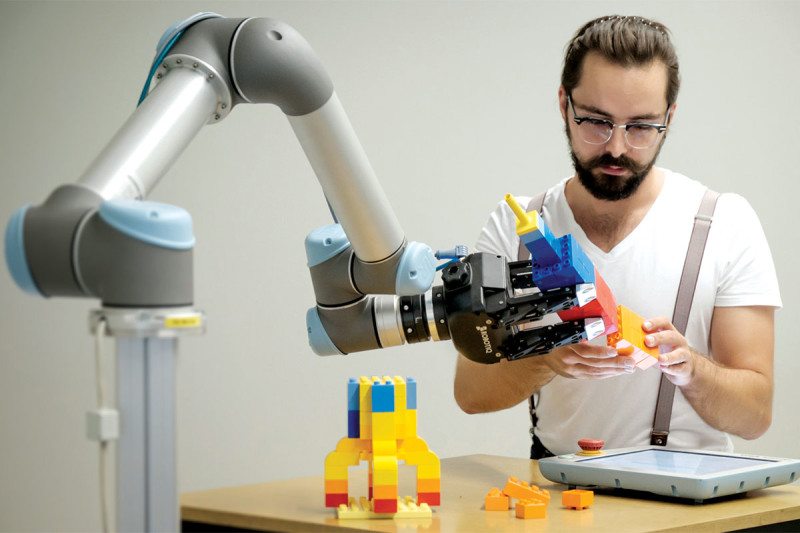

David Vogt teaches a robot to assemble a rocket from Lego bricks (Source: Arizona State University / TU Freiburg)

David Vogt is a professor of robotics at the Freiberg Mining Academy (Freiberg University of Mining and Technology). His son loves Lego and has plenty of Lego pieces. One day, Vogt had the idea of testing whether a robot could learn to assemble various Lego models.

“My son and I thought it would be nice to create a robot that could do the same things we do when we play,” says Vogt. Together with a group of colleagues, he purchased an industrial robotic manipulator for the laboratory. Once it arrived, the robot was fitted with a Kinect camera.

Using this camera, the robot watched people, whose movements were tracked with markers, assemble a rocket from Lego bricks. People built the same rocket design several times, and with each repetition the robot gathered more information about how the model is assembled. After a while, it was able to work alongside a human partner and help build the model. The robot did not always find the parts it needed where it expected them, but it had already learned to search for them on its own. According to the researchers, this is just one example of robots learning from human demonstrations.

Humans learn by watching others with no difficulty; for young children, it is the main way of learning about the world and about what other people do. Programming a robot to perform a new task, by contrast, is very difficult. We understand intuitively how to do one task or another, but that intuition is inaccessible to a robot: every action must be explicitly specified in a program.

Robotics specialists are now trying to master a new way of teaching robots, in which computer systems observe human actions and store the observations in a database. Some scientists control the robots' actions with a computer or phone, while others give their machines complete freedom of action and let them learn from their mistakes. And robots are being taught more than Lego assembly. For example, last year scientists at the University of Maryland began training their system to cook by letting it watch cooking videos on YouTube.

Google Inc. recently ran its own experiment with self-learning robots. For this, it acquired a number of industrial manipulators connected to a single shared database. Every action of every robot was recorded, and the data was then processed by a neural network. After this analysis, the robots received instructions describing the optimal sequence of actions. The task the system was being taught was opening a door by its handle.

In one experiment, the robots were asked to study a variety of objects: water bottles, stationery, books. The system quickly got the hang of the task and passed on to its “colleagues” information about the sequence of actions they needed to perform. The system was then given a new task: move a particular object to a given point. The robot received no information about the object's characteristics, and the objects kept changing. It turned out that the robots handled such tasks well, drawing on the data accumulated while studying the objects. The machines were able to predict the consequences of moving an object across the surface toward the target point.

As for door opening, in one case a person helped the robots. The other machines received training data from a robot working with a human partner and later used this information to repeat the actions of their “colleague”. Over time, the systems learned quite effectively by trial and error. Once the manipulators could reliably open doors with different types of handles, the conditions of the task were changed: for example, the position of the door and its opening angle. In the final experiment, the robots were able to open a door and a lock they had never encountered before, without human assistance.

“When we perform certain actions, we often act intuitively. We can probably transfer this intuition to robots, so that they learn and work faster,” says one of the Google developers.

One challenge with this kind of robot training is translating information into a form the machine can understand. Most robots lack sensors that let them perceive the environment and what happens in it the way humans do, so the information has to be adapted each time for the robots and their control systems. “A good teacher for a robot understands that it is dealing with a machine that perceives the surrounding world differently,” says Aude Billard of the École Polytechnique Fédérale de Lausanne (Switzerland).

David Vogt and his team are confident that humans can train robots by demonstrating actions to them. This training method may eventually be used in industry, where robotic systems could be taught new functions without the work of programmers: an ordinary worker could simply show the robot what to do, and the robot would take on the new job.

“Ideally, humans and robots should be able to do together what neither can do alone,” said Vogt. The professor and his colleagues will soon present the results at the International Conference on Humanoid Robots in Cancun, Mexico.

Source: https://habr.com/ru/post/398751/