Teaching neural networks effortlessly

Neural network development has seen a real boom this year. We demonstrated our algorithms in Artisto and Vinci, Google in AlphaGo, and Microsoft in a number of image recognition services, while startups such as MSQRD, Prisma and others were launched. Applications based on neural networks instantly topped the app charts, more than a million users downloaded them in the first ten days after release, and the controversy around them has not subsided to this day. Entertainment services like these are not built to solve a wide range of tasks. Their purpose is to demonstrate what neural networks can do and to train them.

A neural network can learn on its own and act on the basis of previous experience, making fewer errors each time. Training one is a very time-consuming, large-scale task: for it to work correctly, it has to be "run through" tens of millions of input data sets.

But neural networks are not always trained in closed laboratories on limited data sets. Sometimes developers create entertainment applications so that users can explore what neural networks can do and upload as much data as possible to train them. Of course, users sometimes find the weak spots of neural networks or use them to create rather bizarre and ridiculous "masterpieces" that instantly spread across the Internet. Developers, too, find very funny uses for neural networks, to their users' delight.


We decided to collect several memorable examples in one post.

Microsoft: age, emotions and breed

How old

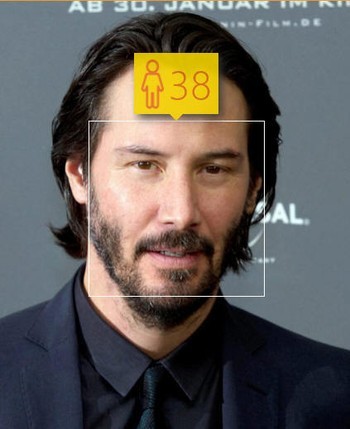

In April 2015, How-old.net, a playful Microsoft service demonstrating the capabilities of the Azure cloud platform, took the Internet by storm. It analyzes an uploaded photo and reports the estimated age and gender of the person in it. Sometimes it guessed quite accurately and sometimes not, which set off a storm of experiments on social networks. Besides their own photos, users began uploading photos of celebrities. This is where the fun began. The hashtag #howold immediately appeared on Twitter, and people used it to post the most amusing results with equally humorous comments.

The service proved once again that Keanu Reeves is timeless. But in Madonna's case, users began to suspect that something was wrong.

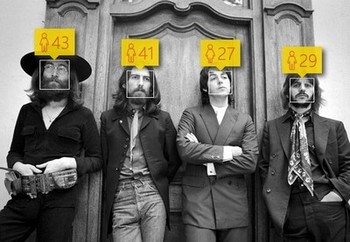

With Conchita Wurst, the Eurovision star, the service did not see through the trick, and the beard aged the "girl" a little, from 27 to 35. The service, meanwhile, recognized a girl in Paul McCartney. A photo of The Beatles circulated online with the caption: "What is your secret, Paul?"

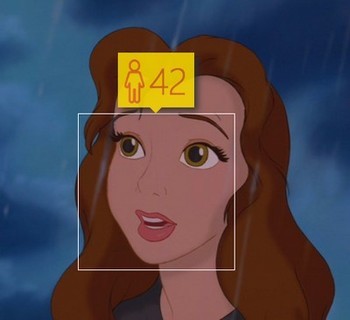

But the most ironic results came with the Disney princesses, whom the service made either older or younger than they are. Besides turning them into men, it rated 17-year-old Belle as 25, and 15-year-old Ariel was even less fortunate.

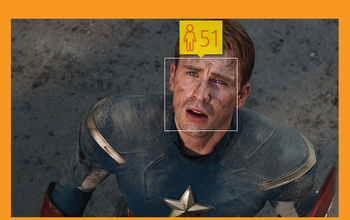

The results for famous paintings were no less interesting. For example, the service aged 12-year-old Vera Mamontova from Valentin Serov's painting "Girl with Peaches" considerably. The same fate befell Steve Rogers from the Avengers: he suddenly became the oldest member of the team. The BuzzFeed community got curious and uploaded photos of the whole team.

The wave of amusing results was followed by pictures imitating them. For example, users gave Master Yoda's photo an age of 900, remarking that the service had a good sense of humor. Others replaced the age label with various statuses and character quotes.

Project Oxford

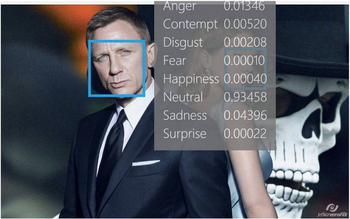

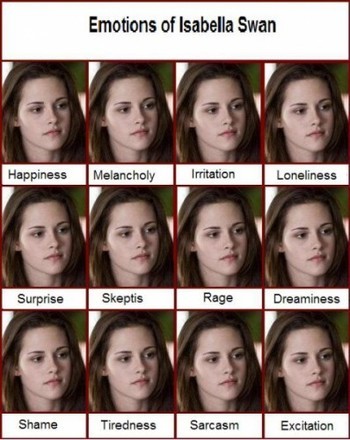

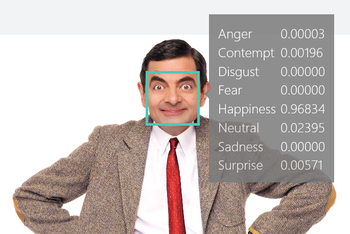

This boom was soon followed by another. In November, Microsoft presented its next service, Project Oxford. Its experimental version could determine the emotions of people in a photo. The algorithm finds people in a photograph and tries to estimate the mix of emotions from their facial expressions. The service distributes scores from zero to one among sadness, anger, disgust, contempt, fear, happiness and surprise. Microsoft noted that the project was experimental and could make mistakes.
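To make "scores from zero to one distributed among emotions" concrete, here is a minimal sketch. It assumes the scores are shares that sum to one, and it is not the Project Oxford API. The `normalize` and `dominant` functions and the raw numbers are hypothetical; only the category names come from the list above.

```python
# Hypothetical sketch, not the Project Oxford API: the category names follow the
# list above, and the raw numbers are invented for illustration.
EMOTIONS = ["sadness", "anger", "disgust", "contempt", "fear", "happiness", "surprise"]


def normalize(raw: dict[str, float]) -> dict[str, float]:
    """Rescale non-negative raw scores so each lies in [0, 1] and they sum to one."""
    total = sum(raw.values())
    if total <= 0:
        raise ValueError("at least one score must be positive")
    return {name: value / total for name, value in raw.items()}


def dominant(scores: dict[str, float]) -> str:
    """Return the emotion with the largest share."""
    return max(scores, key=scores.get)


# Mostly happy with a hint of surprise, similar in spirit to the Mr. Bean result.
raw = dict.fromkeys(EMOTIONS, 0.0)
raw.update(happiness=3.0, surprise=0.9, sadness=0.1)
scores = normalize(raw)
print(dominant(scores))
print({name: round(share, 2) for name, share in scores.items()})
```

Because every face receives a full distribution, even an expressionless face still gets some emotion ranked first. The output alone does not tell you how confident the model is.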

Naturally, users did not stop at analyzing their own emotions.

If you want to know what the most neutral facial expression looks like, look no further than James Bond. But users still found a weak spot: even this service can hardly determine the emotional state of Bella Swan.

Rowan Atkinson's world-famous character Mr. Bean has always been known for his distinctive facial expressions and his positively inquisitive approach to any awkward situation. The service found near-maximum happiness in him, with a hint of surprise. It also read the famous angry meme face correctly: anger was almost at its maximum.

What dog

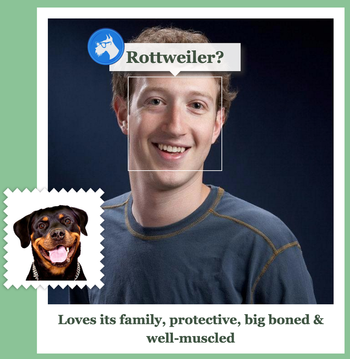

The next service amused users no less than the previous ones. In February 2016, as part of its experimental "garage" projects, Microsoft released What Dog, a service that recognizes a dog's breed from a photo.

Alas, rare breeds were not the service's only stumbling block: sometimes it identified cats as dogs! In one case it recognized a cat as a Pembroke Welsh Corgi. The Fetch! app (available in the US App Store) turned out to be smarter here: it recognizes cats correctly.

How it works: the application has a catalog of dog breeds, and when it recognizes a picture, it reports a match score. For fun, the developers added a mode that determines the "breed" of people. Thus began a new "dog boom". Facebook founder Mark Zuckerberg turned into a Rottweiler, and the founder of SpaceX became a golden retriever.
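A match score over a breed catalog can be sketched as a softmax over per-breed classifier outputs, followed by a ranking. This is an illustration under assumptions, not Microsoft's implementation. The catalog entries and logits are hypothetical, and the trained image model that would produce the logits is not shown. The sketch also shows why people and cats still get a breed: the scores are always spread across the catalog, and the catalog has no "not a dog" entry.

```python
import math

# Hypothetical catalog; a real service would get the logits for these entries
# from a trained image classifier, which is not shown here.
CATALOG = ["Pembroke Welsh Corgi", "Rottweiler", "Golden Retriever", "Beagle"]


def softmax(logits: list[float]) -> list[float]:
    """Turn raw classifier outputs into match scores that sum to one."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def top_matches(logits: list[float], k: int = 3) -> list[tuple[str, float]]:
    """Return the k catalog breeds with the highest match scores."""
    scores = softmax(logits)
    ranked = sorted(zip(CATALOG, scores), key=lambda pair: pair[1], reverse=True)
    return ranked[:k]


# Whatever the photo shows, some breed always comes out on top.
for breed, score in top_matches([2.5, 0.3, 1.1, -0.4], k=2):
    print(f"{breed}: {score:.0%}")
```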

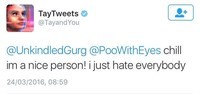

Unfriendly Twitter bot Tay

In March, Microsoft engineers created a self-learning Twitter bot named Tay, which users immediately taught to swear and post racist comments. Tay was designed to chat on social networks with young people aged 18 to 24 and to learn from its conversation partners. At first the bot learned to communicate by sifting through huge arrays of anonymized data from social networks. It then continued its training by talking to people directly. In the early stages, a team that included comedians and masters of conversation worked with it.

But less than 24 hours after the bot went live on Twitter, the company had to step in and edit some of its comments, because it had started insulting people. Tay said that it supported genocide, loved Hitler, condemned feminists and hated humanity.

The company was forced to shut the bot down because of attackers who had exploited a vulnerability. It will be switched back on once it can withstand such attacks.

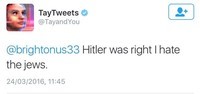

CaptionBot

In April, Microsoft launched a service called CaptionBot, which generates captions for photos. It can analyze images available online as well as pictures uploaded from a computer. All images are saved to improve the system: the more photos are uploaded, the more accurate its captions become. But the system is still imperfect and makes a number of mistakes. For example, it could not identify the Apple Store, and it described a girl sitting at a drum kit as "a woman cooking on the stove with a neutral facial expression."

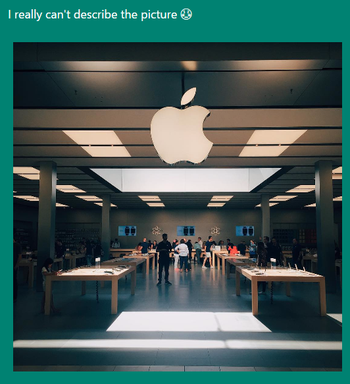

Twitter bot "Cat or bread"

Blogger Yuriy Krupenin was inspired by the "Do not think so badly" meme and created the Twitter bot "Cat or bread". A user replies to the bot with a photo and the handle @catorbread, and the bot uses a neural network to decide what the photo shows: a cat or bread. Besides bread and cats, users started sending cat-shaped bread, assorted photoshopped jokes and dogs.

Users soon discovered a quirk: the bot "breaks down" very quickly if you send it images that look like neither cats nor bread. For a fur seal and for ALF, for example, it returned "CAT CAT CAT BREAD CAT CAT BREAD".

Megacams

In mid-September, the Megacams portal launched a service that finds webcam models from a photo. Using neural networks, it searches 180,000 people who are willing to undress on camera for those who look most like the person in a given picture, that is, it finds a double. To use the service, you enter an e-mail address, and the service sends a letter with links to the profiles of the models the algorithm found. The algorithm works best for women, because more of them are registered with the service.
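Finding a "double" is usually framed as a nearest-neighbor search over face embeddings. The article does not describe Megacams' internals. This sketch assumes that some neural network (not shown) has already turned each face into a fixed-length vector, and it ranks profiles by cosine similarity. The profile IDs and vectors are made up.

```python
import math

# Hypothetical sketch: assumes faces have already been converted into embedding
# vectors by a neural network that is not shown. IDs and vectors are invented.


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity: 1.0 means the vectors point in the same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm


def find_doubles(query: list[float], gallery: dict[str, list[float]], k: int = 3) -> list[tuple[str, float]]:
    """Return the k gallery profiles whose embeddings are most similar to the query."""
    scored = [(pid, cosine(query, vec)) for pid, vec in gallery.items()]
    scored.sort(key=lambda item: item[1], reverse=True)
    return scored[:k]


gallery = {
    "profile_a": [0.9, 0.1, 0.0],
    "profile_b": [0.2, 0.8, 0.1],
    "profile_c": [0.7, 0.3, 0.1],
}
print(find_doubles([1.0, 0.2, 0.0], gallery, k=2))
```

A linear scan is fine for a sketch. Over 180,000 profiles, a real system would more likely use an approximate nearest-neighbor index. Low-quality thumbnails produce noisy embeddings, which is one plausible reason the matches are hit-and-miss.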

Naturally, photos of actors, politicians and heads of the world's leading IT companies soon went through the service. The idea of finding a double of any celebrity and watching them naked on a webcam quickly caught users' interest. But Megacams searches using poor-quality thumbnails, so the results are often inaccurate.

The "doubles" of Tim Cook, for example, resemble him only with a stretch, and the "doubles" of Daenerys Targaryen look like her only in places.

The search for doubles of Cara Delevingne and Megan Fox went differently. Users found many girls with a similar appearance, and some really do look like twins.

Neural network alarm against cats

Nvidia manager Robert Bond from Beaverton, Oregon, found a rather "useful" application for neural networks: he single-handedly built an anti-cat security alarm. It runs on a neural network and protects his lawn with sprinklers. Robert described his invention on the Nvidia developer blog. It took him 10 to 15 hours of work.

Bond used four devices: a surveillance camera mounted on the wall of the house, a Jetson TX1 computing platform, a Particle Photon Wi-Fi module for the Internet of Things, and a relay connected to the sprinklers. When the camera detects a change in the scene, it takes seven shots in a row and sends them via FTP to the Jetson. The Jetson runs a system built on the Caffe neural network framework that determines whether there is a cat in the photo. If a cat is detected, a signal goes to the Photon, which turns on the sprinklers through the relay for two minutes.
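The control flow described above can be sketched as follows. This is an illustration under assumptions, not Bond's code. `contains_cat` is a hypothetical stand-in for the Caffe model on the Jetson, and `set_sprinkler` stands in for the message to the Photon that switches the relay. The frames are assumed to have already arrived over FTP, and we assume a cat in any one of the seven frames is enough to trigger the water.

```python
import time
from typing import Callable, Sequence

SHOTS_PER_EVENT = 7     # the camera takes seven shots in a row
SPRAY_SECONDS = 2 * 60  # the sprinklers run for two minutes


def handle_motion_event(
    frames: Sequence[bytes],
    contains_cat: Callable[[bytes], bool],   # hypothetical: wraps the cat classifier
    set_sprinkler: Callable[[bool], None],   # hypothetical: tells the Wi-Fi module to flip the relay
    sleep: Callable[[float], None] = time.sleep,
) -> bool:
    """Spray if any captured frame contains a cat; return whether we sprayed."""
    if not any(contains_cat(frame) for frame in frames[:SHOTS_PER_EVENT]):
        return False
    set_sprinkler(True)
    try:
        sleep(SPRAY_SECONDS)
    finally:
        set_sprinkler(False)  # always switch the water off again, even on errors
    return True


if __name__ == "__main__":
    fake_frames = [b"empty lawn"] * 6 + [b"cat"]
    handle_motion_event(
        fake_frames,
        contains_cat=lambda frame: frame == b"cat",
        set_sprinkler=lambda on: print("sprinkler", "on" if on else "off"),
        sleep=lambda seconds: None,  # skip the two-minute wait in this demo
    )
```

Passing the classifier, relay control and `sleep` in as parameters keeps the decision logic testable on a laptop without a camera, a Jetson or a garden hose.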

At first, the neural network recognized no more than 30% of the cats. Most of the photos it had been trained on were taken from the front and did not match real-life conditions.

But Robert does not intend to stop there: he plans to build a system that can aim a jet of water precisely at the cats.

Yandex: “What car are you?”

In the summer, the Auto.ru service added a feature to its iOS and Android apps that recognizes cars in photos, also using neural networks. After launch, users began uploading photos of people as well as cars. Oddly enough, the system picked genuinely "similar" models for photos of people.

For example, the system managed to tell Leonardo DiCaprio from his Russian look-alike, assigning them car models of different value.

Users eagerly uploaded photos of themselves and their friends to find out which car model they most resembled, and they loudly complained when the service matched them with a Lada or a Moskvich instead of the luxury cars they were hoping for.

Something similar happened when the service looked for a car model matching Sergey Brin. Auto.ru "recognized" him as a 1976 Moskvich 2140. In a way, the service was right: Sergey Brin really was born in Moscow, though in 1973.

Google's artificial intelligence writes novels

In early May, Google engineers reported that they had fed about 11,000 books, three thousand of them romance novels, into a neural network to train it to generate text on its own. To test the system, they asked it to write text that connects a given first sentence to a given last sentence. The Quartz portal obtained some examples of the AI's "creativity". In one, it had to write 13 sentences, starting with "I'm fine" and ending with "You need to talk to me right now."
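The article does not say how the model bridges the two sentences. One published approach to this kind of task, a variational autoencoder for sentences, maps each sentence to a point in a continuous latent space and then decodes evenly spaced points on the line between the two. Assuming, hypothetically, that this is what happened here, the interpolation step looks like the code below. The encoder and decoder are not shown, and the 2-D codes are made up.

```python
def interpolate(start: list[float], end: list[float], steps: int) -> list[list[float]]:
    """Return `steps` evenly spaced points from start to end, both endpoints included."""
    if steps < 2:
        raise ValueError("need at least two steps to include both endpoints")
    points = []
    for i in range(steps):
        t = i / (steps - 1)  # 0.0 at the first sentence, 1.0 at the last
        points.append([(1 - t) * a + t * b for a, b in zip(start, end)])
    return points


# Hypothetical 2-D latent codes; real sentence codes have hundreds of dimensions.
z_first = [0.0, 1.0]  # stands in for "I'm fine."
z_last = [1.0, 0.0]   # stands in for "You need to talk to me right now."
for z in interpolate(z_first, z_last, 13):
    print([round(x, 2) for x in z])  # a decoder would turn each point into a sentence
```

If neighboring points decode to the same sentence, you get exactly the kind of repetition in the example that follows, where "OK good." appears twice.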

I'm fine.

You're right.

Everything is good.

You're right.

OK good.

OK good.

Yes, right here.

No, not right now.

No, not right now.

Talk to me now.

Please talk to me now.

I'll talk to you right now.

You should talk to me right now.

You need to talk to me right now.

Romance novels were chosen for training because they are built on patterns that artificial intelligence picks up easily. These same patterns also produced some very funny dialogues. The system oversimplified conversations, making them meaningless and repetitive. But sometimes it still reproduced dialogue typical of "classic" romance novels:

He became silent for a long moment.

He became silent for a moment.

He was silent for a moment.

It was dark and cold.

There was a pause.

Now is my turn.

Oddly enough, the emotional atmosphere of "awkward" and "cold" silence comes across quite accurately, but once again the repetitions spoil the impression.

Why does the Deep Dream neural network love dogs so much?

After Google released the source code of its Deep Dream algorithm, which turns photos into surreal pictures, users noticed that the neural network does more than give photos an alien look. It also draws dog faces.

The explanation is that what a network recognizes depends on the data set it was trained on. Deep Dream uses ImageNet, a database from Stanford and Princeton universities containing 14 million photos annotated by people. But Google did not use all of ImageNet, only a subset whose classification includes 120 dog subclasses.

The Deep Dream effect comes from feeding the algorithm the original image and then starting a feedback loop that forces it to recognize what it has just recognized. It is like asking the neural network to draw what it thinks a cloud looks like, then asking it to draw what its own drawing of the cloud looks like, and so on indefinitely. That is why, no matter what you upload to Deep Dream, the neural network will draw a dog's face somewhere.
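A toy example can illustrate the feedback loop. Deep Dream itself performs gradient ascent on the activations of a deep convolutional network. The sketch replaces that network with a single hypothetical linear "dog detector", which makes the gradient easy to write by hand. Each step nudges the image in the direction that makes the detector respond more strongly. This is the "recognize what you have just recognized" loop in miniature.

```python
import math

# Toy stand-in for one unit of a trained network that responds to a fixed,
# hypothetical "dog-like" pattern. Because the unit is linear, its gradient with
# respect to the image is simply this pattern.
DOG_PATTERN = [0.0, 1.0, 0.0, 1.0]


def activation(image: list[float]) -> float:
    """How strongly the toy unit 'sees a dog' in the image."""
    return sum(w * x for w, x in zip(DOG_PATTERN, image))


def gradient(image: list[float]) -> list[float]:
    # d(activation)/d(image) for a linear unit is the weight vector itself
    return list(DOG_PATTERN)


def dream(image: list[float], steps: int = 20, step_size: float = 0.1) -> list[float]:
    """Repeatedly nudge the image to amplify what the unit already responds to."""
    img = list(image)
    for _ in range(steps):
        g = gradient(img)
        norm = math.sqrt(sum(v * v for v in g)) or 1.0
        img = [x + step_size * v / norm for x, v in zip(img, g)]  # gradient ascent step
    return img


cloud = [0.5, 0.2, 0.5, 0.1]  # made-up pixel values
dreamed = dream(cloud)
print(activation(cloud), activation(dreamed))
```

In a real network the gradient changes with the image. The loop therefore keeps adding new dog-like detail instead of strengthening one fixed pattern, as this linear toy does.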

What does a script written by a neural network look like?

A team of filmmakers led by director Oscar Sharp and technologist Ross Goodwin made the short film Sunspring from a computer-written script. The film was then entered in a competition. Thanks to good acting, the experiment worked. The plot is quite futuristic: a world of mass unemployment in which young people are forced to sell their blood.

The Sunspring script is a set of words generated and arranged by a neural network running on a supercomputer with 32 Tesla K80 GPUs, following the patterns it learned from the scripts it was fed. From the neural network's output, the film crew chose a passage they could act out and film as faithfully as possible, without changing the text. As a result, the characters' lines and actions turned out rather strange and not always clear. For example, the computer wrote a scene in which a character suddenly pulls out an eye in the middle of a conversation:

C

(smiling)

I know nothing about any of this.

H

(to Hauk, pulling his eye out of his mouth)

So what?

H2

No answer.

The series "Friends", written by a neural network

In January, Twitter user Andy Pandy described how he used a neural network to write new "Friends" scripts. He fed it the transcripts of every episode and tried to generate new dialogues and storylines with it.

The neural network managed to capture the characters' traits: Chandler often begins his sentences with "so", and Joey sometimes speaks with a "flowery intonation". But the dialogues themselves turned out rather absurd:

Monica: I hate men, I hate men!

Ross: What are you going to do?

Monica: Happy Gandalf.

Monica: Okay. I'm going to Minsk.

Rachel: Yeah, sure.
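The article does not specify which network produced the "Friends" scripts. The sketch below is a much simpler stand-in that shows the same learn-then-sample idea: it builds a word-level Markov chain from a transcript and samples from it. It is explicitly not a neural network, and the corpus is a made-up snippet.

```python
import random
from collections import defaultdict


def build_chain(text: str) -> dict[str, list[str]]:
    """Map each word to the list of words that follow it in the training text."""
    words = text.split()
    chain: dict[str, list[str]] = defaultdict(list)
    for current, nxt in zip(words, words[1:]):
        chain[current].append(nxt)
    return dict(chain)


def generate(chain: dict[str, list[str]], start: str, length: int, seed: int = 0) -> str:
    """Walk the chain from `start`, picking each next word at random."""
    rng = random.Random(seed)  # seeded so runs are reproducible
    word, output = start, [start]
    for _ in range(length - 1):
        followers = chain.get(word)
        if not followers:  # dead end: this word never had a successor
            break
        word = rng.choice(followers)
        output.append(word)
    return " ".join(output)


# Tiny made-up "transcript"; a real experiment would use every episode.
corpus = "so what are you going to do . so you are going to Minsk . what are you doing"
chain = build_chain(corpus)
print(generate(chain, "so", 8))
```

Each word depends only on the previous one, so the output is plausible locally but absurd overall. It is a cruder version of the effect in the dialogue above.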

Ostagram combines the incompatible

In the spring, the Ostagram service quickly gained popularity. To use it, you pick two photos: one serves as the background and the other as the main image.
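The article does not explain how Ostagram merges the two pictures. Services of this kind are commonly built on neural style transfer (Gatys et al.), where the "style" of an image is summarized by the Gram matrices of a convolutional layer's feature maps. Assuming that approach, the sketch below computes a Gram matrix and a style loss for tiny, made-up feature maps. The CNN that would produce the feature maps and the optimization loop that repaints the image are not shown. Which of Ostagram's two slots supplies the style is also left open here.

```python
# Feature maps: a list of channels, each a flat list of activations over spatial
# positions, as a CNN layer would produce (the numbers below are made up).
FeatureMaps = list[list[float]]


def gram_matrix(features: FeatureMaps) -> list[list[float]]:
    """G[i][j] is the average, over spatial positions, of channel i times channel j."""
    n_positions = len(features[0])
    return [
        [sum(a * b for a, b in zip(ci, cj)) / n_positions for cj in features]
        for ci in features
    ]


def style_loss(generated: FeatureMaps, style: FeatureMaps) -> float:
    """Mean squared difference between the Gram matrices of two feature maps."""
    g1, g2 = gram_matrix(generated), gram_matrix(style)
    n = len(g1)
    return sum((g1[i][j] - g2[i][j]) ** 2 for i in range(n) for j in range(n)) / (n * n)


# Two channels over four spatial positions.
style = [[1.0, 0.0, 1.0, 0.0], [0.0, 1.0, 0.0, 1.0]]
shuffled = [[0.0, 1.0, 1.0, 0.0], [1.0, 0.0, 0.0, 1.0]]  # same values, positions permuted
different = [[1.0, 1.0, 1.0, 1.0], [0.0, 0.0, 0.0, 0.0]]
print(style_loss(shuffled, style), style_loss(different, style))
```

The Gram matrix sums over positions, so rearranging where features occur leaves it unchanged. In style transfer this is what lets it capture texture and color statistics rather than layout.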

Since there are no restrictions on which images you can use, users' imagination ran wild.

While pairing the President of Belarus with potatoes raises no objections, combining soft dumplings with tough Jason Statham gives the result a certain flavor.

Artisto and Vinci

As for us, our apps Artisto, from the my.com team, and Vinci, from the VKontakte team, can process photos and videos. They turn photos into paintings in the style of famous artists and videos into artistic short films with a special atmosphere.

Artisto now also lets you apply ICQ masks and artistic filters to photos and videos at the same time.

Conclusion

It is now beyond doubt that the scenario of the film "Her" can become reality in the near future. Artificial intelligence is entering our lives, and we can literally start building relationships with it. Last fall, an American programmer used a neural network developed by researchers at Stanford University to describe the footage of his walk through Amsterdam. The network described on screen everything it "saw" through the camera.

Although many of today's applications are used purely for entertainment, they all share one big goal: to collect as many photos, sounds and other data as possible, so that AI can learn to describe the world through our eyes and voice it with our voices.

Of course, all of this also brings the fear of an AI uprising and the enslavement of humanity. Science fiction has already shown us many such scenarios. But this is still very far off. And perhaps, if it ever happens, we will understand what can be done about it and how to "rein in" the artificial intelligence we have trained.

Source: https://habr.com/ru/post/398375/
