Thanks for the memory: how cheap memory changes computation

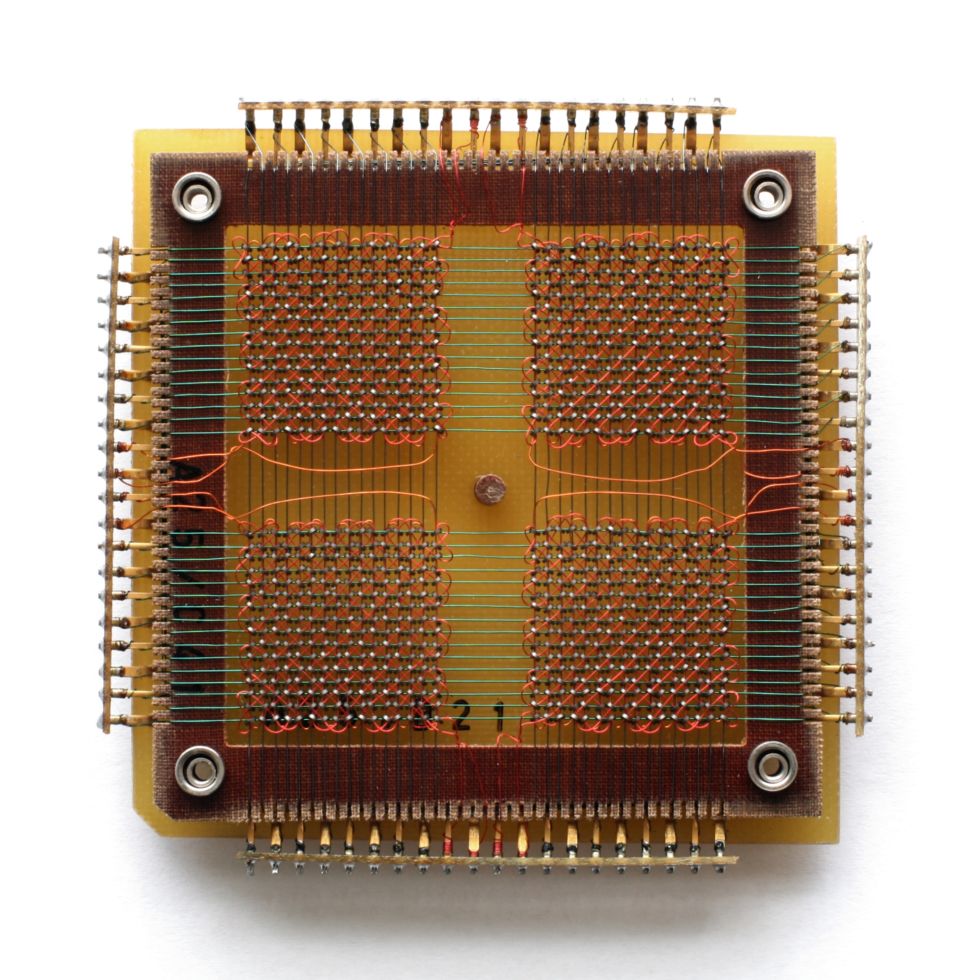

Early Micron DRAM, capacity 1 Mbit

RAM (random access memory) is present in every computer system, from small embedded controllers to industrial servers. Data is held in SRAM (static RAM) or DRAM (dynamic RAM) while the processor works on it. As RAM prices fall, the model of moving data between RAM and permanent storage may disappear.

RAM prices are highly susceptible to market fluctuations, but in the long run they fall. In 2000, a gigabyte of memory cost more than $1000; now it costs only $5. This makes it possible to imagine a completely different system architecture.


Databases are usually stored on disk, and the required information is read into memory as needed and then processed. The usual assumption is that a system has several orders of magnitude less memory than disk space: gigabytes against terabytes, for example. But as memory grows, it becomes more efficient to load more of the data into memory, reducing the number of reads and writes. As RAM gets cheaper, it becomes possible to load the entire database into memory, operate on it there, and write it back. We have now reached the point where some databases are never written back to disk and live in memory permanently.

Megabit chip from Carl Zeiss

Until 1975, RAM was a magnetic core memory.

4 megabyte EPROM chip erasable with ultraviolet light guided through a window

A bunch of modern DRAM

Memory access times are measured in nanoseconds and disk access times in milliseconds, so memory latency is about a million times lower. The data transfer rate is, of course, not a million times higher: it is gigabytes per second against several hundred megabytes per second for fast hard drives. Even so, RAM is at least an order of magnitude faster than drives.
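The two ratios in this comparison can be checked with a few lines of arithmetic. This is only a rough sketch: the specific figures (1 ns, 1 ms, 10 GB/s, 200 MB/s) are assumed round numbers chosen to match the orders of magnitude in the text, not measurements of any particular hardware.

```python
# Assumed round figures, used only to illustrate the orders of magnitude.
ram_latency_ns = 1              # memory access: on the order of a nanosecond
disk_latency_ns = 1_000_000     # disk access: on the order of a millisecond

ram_bandwidth_mb_s = 10_000     # assumed ~10 GB/s for RAM
disk_bandwidth_mb_s = 200       # assumed ~200 MB/s for a fast hard drive

# How many times lower the latency is, and how many times higher the bandwidth.
latency_ratio = disk_latency_ns // ram_latency_ns
bandwidth_ratio = ram_bandwidth_mb_s // disk_bandwidth_mb_s

print(f"latency ratio:   {latency_ratio:,}x")
print(f"bandwidth ratio: {bandwidth_ratio:,}x")
```

With these assumed figures the latency gap is six orders of magnitude, while the bandwidth gap is between one and two.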

In the real world the differences are not quite so dramatic, but reading data from disk into RAM and writing it back is a serious bottleneck, as well as a source of errors. Removing this step makes the code simpler and more efficient.

As RAM prices fall, it is becoming popular in large companies and data centers to equip servers with terabytes of memory. Size aside, the usual reason for not keeping a database in memory is reliability. RAM loses its contents when power is lost or the system is compromised. These problems surface when trying to meet the ACID standard of database reliability (atomicity, consistency, isolation, durability).

These problems can be avoided with snapshots and logs. Just as a database on disk can be backed up, a database in memory can be copied to storage. Taking a snapshot blocks other processes from reading the data, so the checkpoint frequency is a trade-off between speed and reliability. This, in turn, can be mitigated by transaction logging (also called journaling), which records changes to the data so that a later state can be recreated from an earlier copy. Even so, when the database lives entirely in memory, some degree of redundancy is lost.
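The interplay of snapshots and logs can be sketched with a toy key-value store. Everything here is hypothetical and for illustration only: `TinyStore` and its methods are invented names, and the snapshot and log are kept in ordinary Python objects, whereas a real system would write both to durable storage.

```python
import copy
import json


class TinyStore:
    """Toy in-memory key-value store with a snapshot and a change log (hypothetical)."""

    def __init__(self):
        self.data = {}       # live, in-memory state
        self.snapshot = {}   # last checkpoint (stands in for a copy on durable storage)
        self.log = []        # changes recorded since the last checkpoint

    def put(self, key, value):
        # Record the change before applying it, so it can be replayed later.
        self.log.append(json.dumps({"op": "put", "key": key, "value": value}))
        self.data[key] = value

    def delete(self, key):
        self.log.append(json.dumps({"op": "delete", "key": key}))
        self.data.pop(key, None)

    def checkpoint(self):
        # Copy the whole state; the log can be emptied because the snapshot covers it.
        self.snapshot = copy.deepcopy(self.data)
        self.log.clear()

    def recover(self):
        # Start from the earlier copy, then replay the logged changes in order.
        state = copy.deepcopy(self.snapshot)
        for line in self.log:
            entry = json.loads(line)
            if entry["op"] == "put":
                state[entry["key"]] = entry["value"]
            else:
                state.pop(entry["key"], None)
        return state


store = TinyStore()
store.put("a", 1)
store.checkpoint()
store.put("b", 2)
store.delete("a")

store.data = {}              # simulate losing the in-memory contents
recovered = store.recover()
print(recovered)
```

The less often `checkpoint()` runs, the longer the log that has to be replayed on recovery, which is one face of the speed-versus-reliability trade-off described above.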

In-memory database systems (IMDBS) make it possible to build hybrid systems in which some tables are in memory while others live on disk. This is better than caching, and is convenient when it makes no sense to keep the entire database in memory.

Databases can be compressed, especially in column-oriented systems, which store tables as sets of columns rather than rows. Most compression techniques work best when adjacent data is of the same type, and the columns of a table almost always hold data of a single type. Although compression adds computational load, column storage is well suited to complex queries over very large data sets, which is why big data users and scientists are interested in it.
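A small sketch shows why column layout helps compression. It uses run-length encoding as a stand-in for "compression"; real column stores use a variety of schemes, and the table contents below are made up for the example.

```python
from itertools import groupby

# Hypothetical table, stored row by row: (country, status, amount).
rows = [
    ("DE", "paid", 10),
    ("DE", "paid", 12),
    ("DE", "open", 7),
    ("FR", "open", 7),
    ("FR", "open", 7),
]

# Column-oriented layout: one list per column.
columns = [list(col) for col in zip(*rows)]


def rle_encode(values):
    """Run-length encode a sequence into (value, run_length) pairs."""
    return [(value, len(list(run))) for value, run in groupby(values)]


def rle_decode(pairs):
    """Expand (value, run_length) pairs back into the original sequence."""
    return [value for value, count in pairs for _ in range(count)]


# Encode each column separately, then encode the same data in row order.
encoded_columns = [rle_encode(col) for col in columns]
row_major = [field for row in rows for field in row]
encoded_rows = rle_encode(row_major)

column_runs = sum(len(col) for col in encoded_columns)
row_runs = len(encoded_rows)

print(encoded_columns[0])
print(column_runs, "runs when stored by column")
print(row_runs, "runs when stored by row")
```

Stored by row, neighbouring values come from different columns and almost never repeat, so run-length encoding finds nothing to merge; stored by column, repeated values sit next to each other and collapse into a few runs.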

At large scale, companies like Google have moved to RAM so that huge numbers of search queries can be processed at an acceptable speed. Accessing large amounts of memory brings problems of its own, since the amount of RAM that can be attached to one motherboard is limited, and arranging shared access adds latency.

Life after RAM

But there is no guarantee that in-memory processing is the future of data processing. An alternative is non-volatile RAM (NVRAM), familiar to users in the form of SSDs, which offer an architecture compatible with disk systems. SSDs currently use NAND flash memory, which offers high read and write speeds compared with mechanical hard drives. But it has problems of its own. Flash memory requires relatively high voltages to write data, and it wears out gradually; special algorithms are used to counter this, and they lead to a gradual slowdown.

Cost of memory and storage over time (dollars per megabyte)

As can be seen from the graph, the cost of drives has fallen over time at about the same rate as the cost of RAM. The falling cost of SSDs has led to their spread in data centers and workplaces, but the future of the technology is not yet clear. A Google study published in February 2016, based on six years of use, concluded that flash memory is much less reliable than hard drives in one respect: it produces more uncorrectable errors, although it needs replacing less often. The study also found that enterprise SSDs are no better in quality than consumer models.

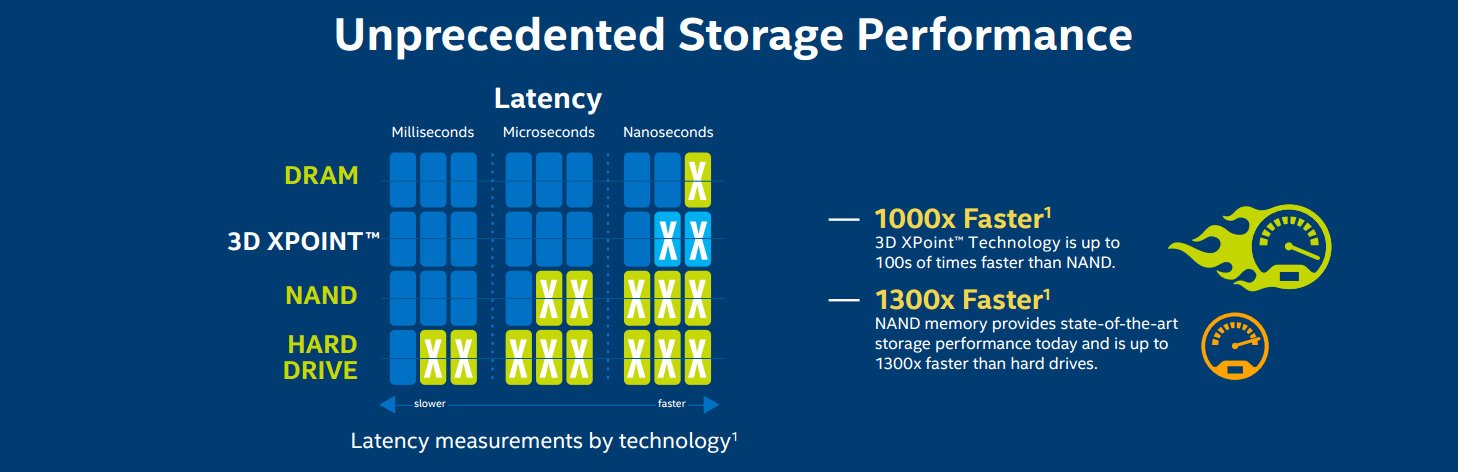

But new types of NVRAM are already emerging. Ferroelectric RAM (FRAM) was once expected to replace RAM and flash storage in mobile devices, but attention has now shifted to magnetoresistive RAM (MRAM). Its speed approaches that of RAM: access latency is 50 nanoseconds, which is slower than the 10 ns of DRAM but about 1000 times faster than NAND, whose latency is measured in microseconds.

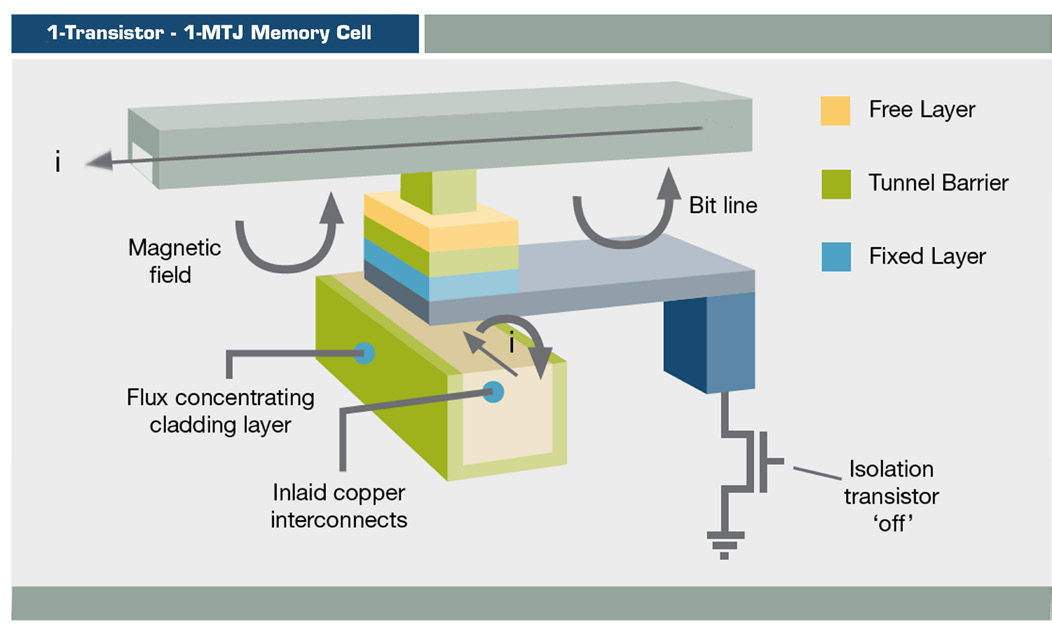

MRAM diagram

MRAM stores information as a magnetic orientation rather than an electric charge, using a thin-film structure and a magnetic tunnel junction. Toggle MRAM is already used in products such as Dell's EqualLogic storage arrays, but so far only for journaling.

Spin-torque MRAM (ST-MRAM) uses a more complex structure that potentially allows higher density. It is now being brought to market by Everspin, which recently listed on NASDAQ under the ticker MRAM. Other firms exploring this technology include Crocus, Micron, Qualcomm, Samsung, Spin Transfer Technologies (STT) and Toshiba.

3D XPoint memory

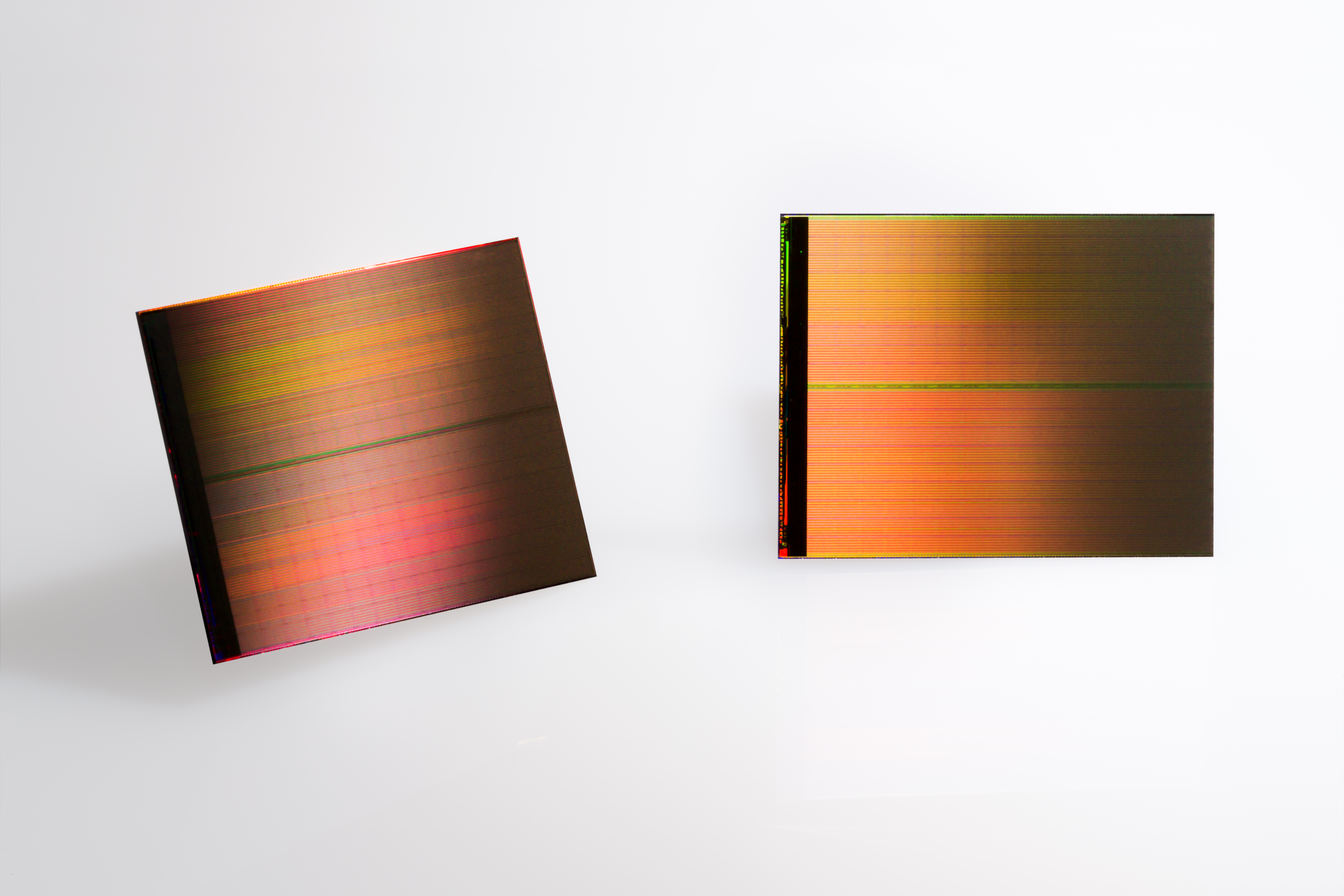

Two 3D XPoint chips of 128 GB each

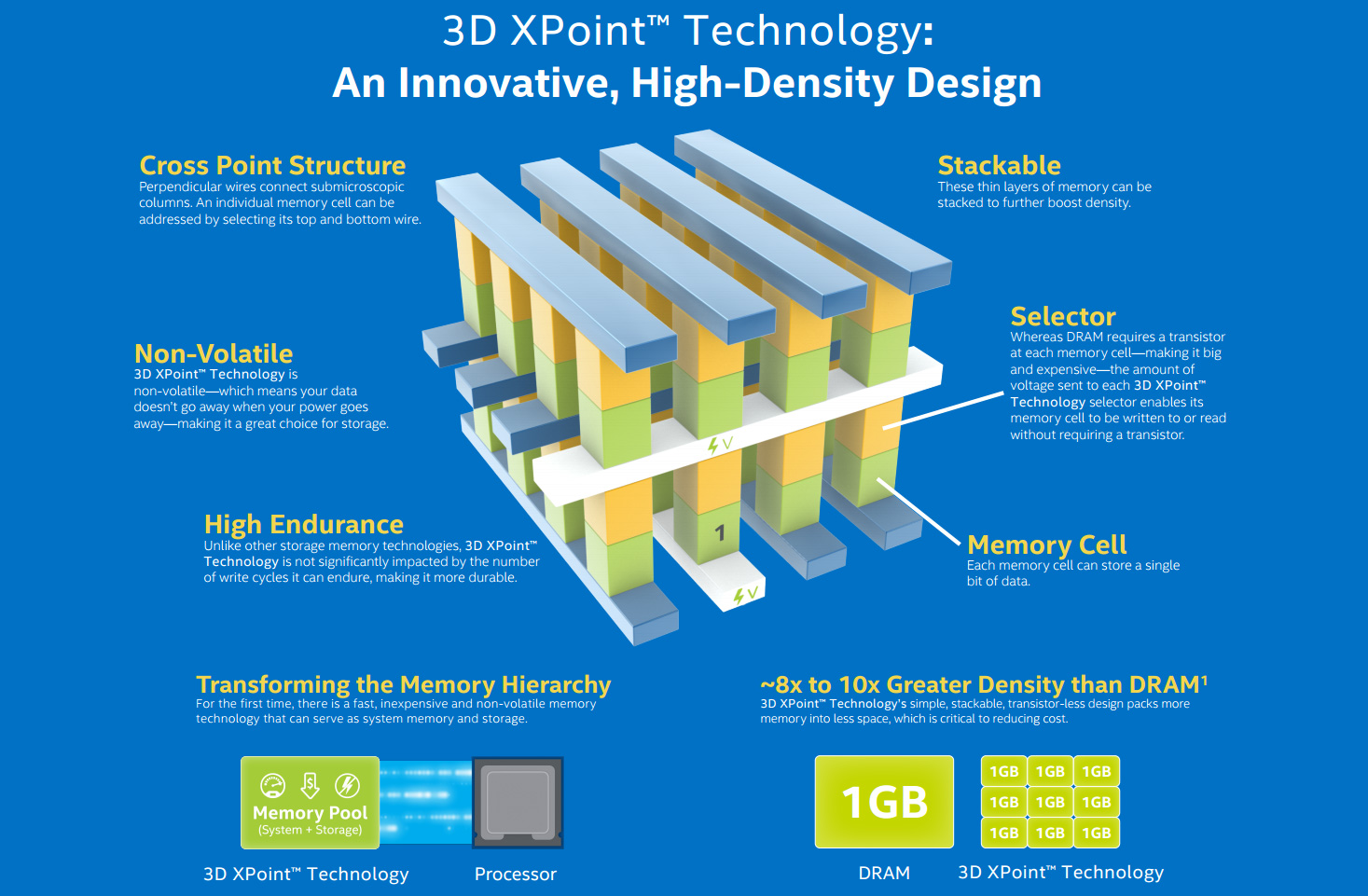

Chart for Intel / Micron 3D XPoint

Speed comparison

Meanwhile, Intel is working with Micron on a variant of NVRAM called 3D XPoint (pronounced "crosspoint"). This form of phase change memory (PCM), known as resistive RAM (ReRAM), was first made public in 2015. “3D” refers to the ability to build the memory in multiple layers. Intel believes that XPoint can be 1000 times faster than NAND and offer 10 times the capacity, although these claims have recently been scaled back somewhat. The price is expected to fall between that of flash memory and DRAM. Because of this, it is unlikely to catch on in homes, but at large scale it could replace both RAM and SSDs.

IBM is also working on phase change memory. As with Intel, its technology is based on chalcogenide glass, the material used in rewritable optical media. Using electricity to switch the material from an amorphous state into one of three crystalline states, the company claims a breakthrough in capacity that will make this memory cheaper than DRAM.

The RAM race will affect every level of computing. Increasing memory from 8 to 16 GB on end-user desktops will speed up multitasking and make memory-hungry programs more efficient.

In ultrabooks, SSDs are already the norm, and growing capacities are making them candidates to replace hard drives. The next generation of three-dimensional NAND (V-NAND, for vertical) promises greater efficiency and storage density. Samsung predicts that by 2020 a 512 GB consumer SSD will cost what a terabyte hard drive costs today.

For medium-sized businesses and academic institutions, cheaper RAM means better in-memory analytics, provided software keeps up. SAP HANA is an in-memory database and a platform for both cloud and on-premises deployments, which lets companies that are not especially large work with big data. IBM and Oracle have similar databases.

RAM is democratizing technology: as it gets cheaper, the gap between large and small organizations narrows.

Google data center with custom servers

Sequoia supercomputer

Sunway TaihuLight supercomputer, the world's fastest computer, 93 petaflops

Titan, the fastest US supercomputer

EcoPod HP data center

Last but not least are the memory needs of supercomputers. Today's fastest, China's Sunway TaihuLight, contains 1,300 TB of DDR3 DRAM, which is relatively little for its speed of 93 petaflops (a petaflops is a quadrillion floating-point operations per second). Partly because of this, its power consumption is only 15.3 MW, but the small amount of memory can be a limiting factor.
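To put "relatively little" into numbers, the three figures quoted for TaihuLight can be turned into two rough ratios. This is a back-of-the-envelope sketch that assumes decimal units (1 TB = 10^12 bytes) and treats 93 petaflops as the machine's speed; the variable names are illustrative only.

```python
memory_bytes = 1_300 * 10**12    # 1,300 TB of DRAM, decimal terabytes assumed
speed_flops = 93 * 10**15        # 93 petaflops
power_watts = 15.3 * 10**6       # 15.3 MW

# Memory available per unit of compute speed (bytes per flop/s).
bytes_per_flops = memory_bytes / speed_flops

# Energy efficiency in gigaflops per watt.
gflops_per_watt = speed_flops / power_watts / 10**9

print(f"{bytes_per_flops:.3f} bytes of DRAM per flop/s")
print(f"{gflops_per_watt:.2f} gigaflops per watt")
```

On these figures the machine has roughly 0.014 bytes of memory for every floating-point operation per second it can perform, and delivers about 6 gigaflops per watt.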

Now everyone is chasing the exaflops mark, or 1000 petaflops. The Japanese Post-K computer, being developed by Riken and Fujitsu, is due to be ready by 2020 and will contain Micron's Hybrid Memory Cube, a multi-layered implementation of DRAM; it may also use 3D XPoint NVRAM. The European NEXTGenIO project at the Edinburgh Supercomputer Center intends to reach exaflops by 2022, also using 3D XPoint.

In the US, the Exascale Computing Project, run as part of the NSCI, is due to deliver as many as two supercomputers of this speed by 2023. Their architecture is still being worked out, but since speed and energy efficiency are the priorities, RAM will play a central role in it.

Source: https://habr.com/ru/post/398373/
