Ensuring privacy by mathematical methods: a new approach to data integrity

In 1997, when in the state of Massachusetts, researchers in the medical field began to provide access to medical records of officials, the government removed from the lists the names of patients, their addresses and social security number. William Weld, then governor, assured the public that it would be impossible to recover the person by appointment.

Within a few days, a letter sent by a student from the Massachusetts Institute of Technology arrived at Weld’s office. Extracts from the governor’s medical record were attached to the envelope.

Although the obvious identifiers were removed, the officials decided to leave the date of birth, gender, and zip code (ZIP code). Having carried out a cross-comparison of these data with the recordings of the registration of votes, Latanya Sweeney was able to calculate Weld's medical card.

')

Sweeney’s work and other breakthroughs in privacy over the past 15 years raise security concerns about allegedly anonymous data.

“We found that people's intuition about which data to be considered private works poorly,” says Frank McSherry of Microsoft Research Silicon Valley. “Computers are constantly improving their ability to get individual data from an array of information that a man in the street would consider safe.”

Studying case histories can help in the search for genes responsible for the risk of Alzheimer's disease, reduce the number of errors in hospitals, or find the best medicine for any disease. The only question is how to get all this data without giving out personal information? A ten-year study on this topic is already approaching the development of a universal solution.

This approach is called “differential privacy”, and allows you to publish data, while protecting personal information. The data differentiation algorithm allows researchers to form any request to a database containing sensitive information and receive an answer that is “blurred” in such a way that it does not reveal any personal data - even the availability of data of a particular person in this database.

“The idea is that you can allow your data to be used without risking it,” said Cynthia Dwork of Microsoft Research Silicon Valley. Dwork introduced the concept of differential privacy (RP) in 2005 with the help of MacSherry, Cobby Nissim and Adam Smith.

The method retains the right to “plausible denial,” as Avrim Blum of Carnegie Mellon University says. “If I want to pretend that my personal information is different from what it actually is, I can do it. The output of the RP mechanism in practice will differ little, whether it includes the real one or the fake one — so I can deny everything I want. ”

Such a high level of privacy seems unattainable. Indeed, there is no useful RP algorithm that would produce a completely identical result in cases where you provide your real or fictional data. But you can allow the algorithms to produce almost identical data. The degree of difference is calibrated and is a quantification of privacy. People or communities can decide which value of this parameter corresponds to an acceptable degree of loss of privacy, and then select the appropriate algorithms.

Privacy experts have developed a wide range of RP-algorithms for processing different data and answering different questions about them. Most of the work is difficult for people who are not experts in the field to understand, so scientists are developing standardized computer languages that will allow not only experts to publish sensitive data in the RP-way, simply by creating a simple program. “The ER is a promising and very interesting technology,” says Aaron Roth, an information technology specialist at the University of Pennsylvania.

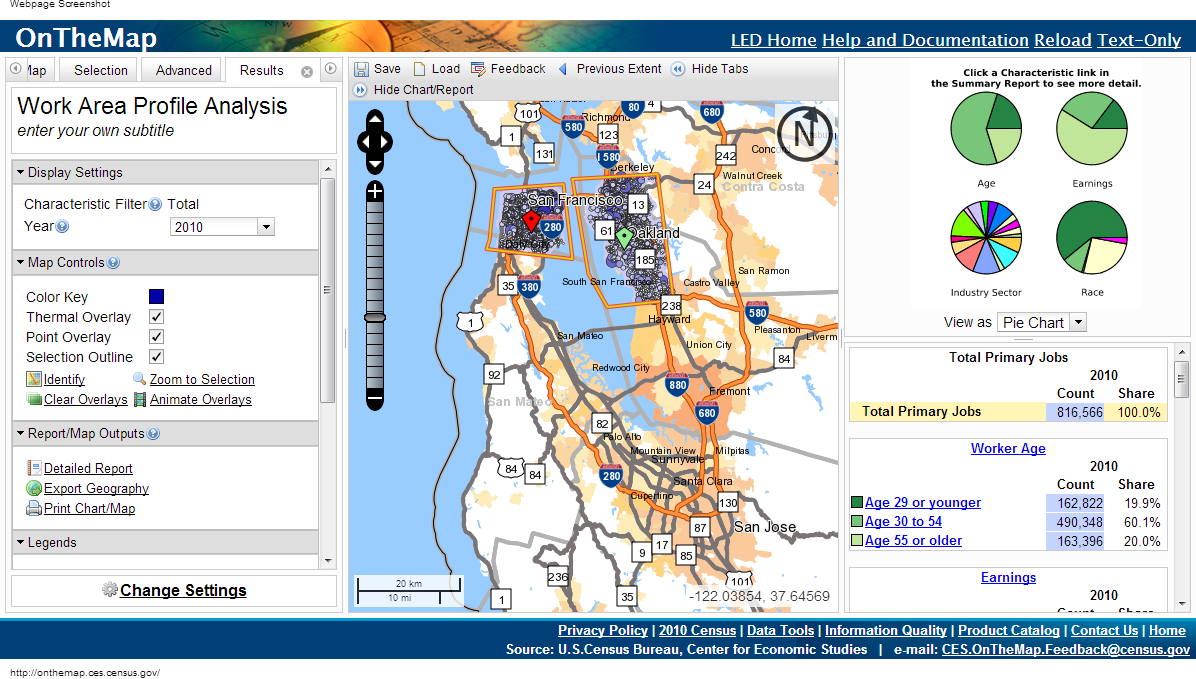

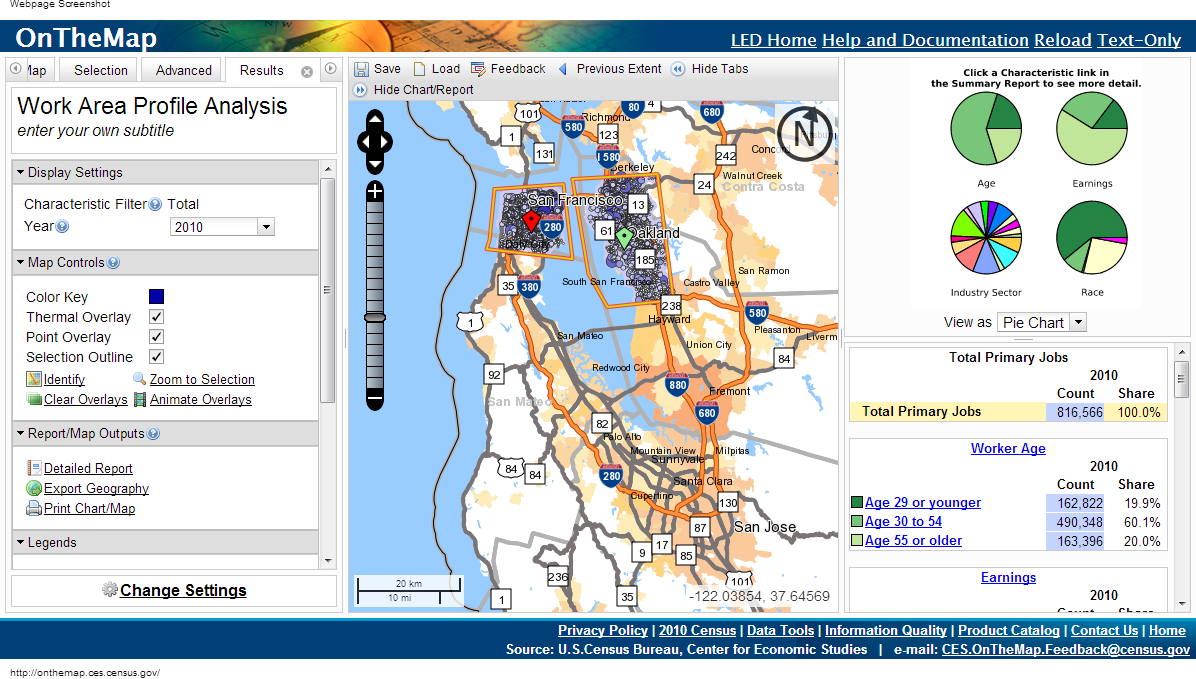

The OnTheMap Census Committee uses differential privacy by publishing data but not disclosing personal information.

It seems that to solve problems with privacy you just need to release aggregated data related to large groups of people. But such an approach is fraught with violation of the integrity of personal data.

Suppose you need to find out if the author has diabetes, and you know that my data is in the database. One could subtract the results of two queries: “How many people have diabetes in the database” and “How many people in the database with a name other than Eric Clarreich suffer from diabetes”.

The combination of two such requests violates my privacy. But it is not always clear which combinations of questions can violate privacy. Finding such combinations is an NP-complete task, that is, there is no effective computer algorithm for tracking such “attacks”.

In 2008, researchers showed the danger of publishing cumulative information obtained from general genetic studies - one of the main tools for finding the dependence of diabetes on genes. These studies imply deciphering the genes of the test group from 100 to 1000 patients with the same disease, with subsequent calculation of the average frequency with which one of the 100,000 mutations occurs. If one of the mutations occurs in a group much more often, it is noted as potentially associated with the disease.

A team of researchers led by Niels Homer, who was a graduate student at the University of California at the time, showed that in many cases, knowing the patient's genome, you can find out if he belonged to the genome testing group. After that, the association of medical institutes recalled the decree that the data obtained in genetic studies should be published.

Even more surprising conclusion was made by researchers in 2011 - it is possible to extract personal information about purchases, guided by the recommendation system from Amazon, which gives results like “bought And also bought B and C”. By observing changes in recommendations and comparing them with reviews that people make about purchased items, in several cases, researchers were able to say that a particular buyer bought a particular thing on a particular day — even before he posted a review of that thing.

In all cases, privacy measures seem to be adequate as long as they are insufficient. But already at this time a new approach to protecting privacy was being prepared, obtained by searching for an answer to the main question: what does it mean to protect privacy?

If researchers study a database of patients and find a link between smoking and some form of cancer, differential privacy will not protect a person smoking in a public place from the label of a person with an increased risk of getting sick. But if smoking is a person’s secret hidden in the database, the RP will protect him.

“Differential” means taking into account the difference between two worlds: in one of them you allow your personal data to be included in the database, and in the other you do not allow it. The two worlds will not be absolutely identical, but they can be made as similar as possible, and it will be almost impossible to distinguish them. This is the goal of the RP.

The RP focuses on algorithms that produce data, accept requests to the database and issue answers - not exact, but randomly changed. If you ask the same question on two databases that differ only in data for one person, you will receive essentially the same answers.

RP-representation of the location of users who searched for the word “cricket” in a search engine

More precisely, for any possible answer, the probability of receiving it should be the same for both databases; the ratio of these two probabilities should be limited to a number R close to one. The closer R is to 1, the more difficult it is for an attacker to find out if he is receiving information from base A or from base B, and the more protected X will be. Because if an attacker cannot know whether the data he received includes information on X, he will not be able to understand which data is related to X.

RP researchers usually speak of the logarithm of R, and denote it by. The parameter quantifies the leakage of personal data. The closer Ɛ to zero, the better the algorithm protects privacy.

To understand how you can construct a RP-algorithm, let's look at the simplest example of such an algorithm. It uses a script in which the questioner can only do quantitative queries. For example: “how many people have a property C in the database?”

Suppose that the real answer to one of the questions is 157. The RP-algorithm will add “noise” to it — before issuing the answer, it adds or subtracts a random number. As a result, we get 153, 159, or 292. The questioner knows the probability distribution used by the algorithm, so it’s pretty clear to him how distorted the actual result was (otherwise the output would be completely useless). But which random number was added to the answer is unknown to him.

The distribution formula must be chosen carefully. To understand what kind of distribution guarantees privacy, let us imagine that the persistent questioner is trying to find out if I am in the database. He asks: "How many people by the name of Eric Clarreich are in the database?" Suppose he gets a response of 100. Since this is a rare name, he realizes that the answer was actually 0 or 1. He has two possibilities:

A) the answer is 0, and the algorithm added 100 as noise

B) the answer is 1, and the algorithm added 99

The probability of choosing 100 and 99 should be the same. Then the questioner cannot distinguish between these two cases. More precisely, the ratio of these two probabilities should not exceed a predetermined R. And such a condition must be maintained not only for pairs 100 and 99, but also for any two consecutive numbers.

The Laplace distribution has this property. Its sharp peak is at zero, and then the graph gradually decreases in both directions. For it, the condition for the existence of the number R (the width of the distribution) is satisfied, such that for any two consecutive numbers the ratio of their probabilities is R.

For each width, there is one possible Laplace distribution. Therefore, you can play with the width to get a distribution that provides exactly the degree of privacy we need. For strong privacy, the distribution will be relatively wide and flat. Far from 0 numbers will fall out with almost the same probability as numbers close to zero. Data will be blurred enough.

Naturally, there is a confrontation between privacy and utility. The more privacy you need, the more noise you have to add, and the less helpful the answer will be. When using the Laplace distribution, the amount of added noise is back Ɛ. If the privacy setting is 0.01, then the algorithm will blur quantitative indicators by about 100.

The larger the dataset, the smaller the specified blur will affect the utility. In a database of hundreds of records, a blur of 100 will interfere more than in a database of millions of records. According to Dwork, for Internet-scale data, that is, hundreds of millions, the algorithm already provides enough privacy for practical use.

And “noise” in Laplace is only the first stage of the implementation of the RP. Researchers have already come up with a huge number of much more complex algorithms, many of which have a ratio of utility and privacy that exceeds the Laplace one in certain situations.

“People are finding ways to improve all the time, and there is still room for improvement,” says Dwork. Regarding more modest data sets than the Internet, in her words, "algorithms for many tasks appear."

With the RP algorithm, there is no need to carefully analyze the issues for violation of privacy - this protection is already built into the algorithm. Since questions interfering in their affairs usually come down to small numbers associated with specific people, and questions of a different nature study the behavior of large groups, the amount of additional noise that negates individual characteristics will have little effect on the answers to legitimate questions.

With the help of the RP, the problems of data researchers, such as cross-comparison with external sources, disappear. The mathematical approach does not expect the attacker to have limited sources of external information.

“The RP approach means that the attacker is omnipotent,” says McSherry. “Even if the attacker returns in 100 years, accumulating ideas and computer technologies all the time, they still won’t be able to find out if you are in the database.” RP is protected from the future. ”

So far, we have considered a situation in which someone makes requests to the database with a number as an answer. But the reality is more complicated. Researchers want to ask the base a lot of questions. And over time, pieces of your personal information will get into different databases, each of which will give data without asking the others.

The ER provides an accurate and easy way to assess the overall threat to privacy when researchers ask all the databases in which your data is present a few questions. Suppose if your data is in two databases, and this data is given using algorithms that provide privacy parameters Ɛ1 and Ɛ2, then the total amount of leaked personal information will be no more than Ɛ1 + 2. The same formula works for a single database with multiple queries. For m queries, the leakage will be bounded above m * Ɛ.

In theory, the database curator may allow researchers to ask as many questions as they like, adding the necessary amount of Laplace noise to each answer so that the total number of leaked personal data does not exceed a certain limit.

It turns out that the restriction on numerical answers is not that critical. Many other queries can be changed so that they become quantitative. For example, if you need to create a list of hundreds of the most popular names for babies born in 2012, you could, for example, ask a sequence of questions like “How many children were given names beginning with A?” And process the results.

“One of the early results of learning machine learning asserts that everything that is in principle possible to study can be studied using numerical queries,” says Aaron Roth. “Such requests are not toys in themselves, but a fundamental primitive,” that is, building blocks, on the basis of which more complex algorithms can be built.

But there is a catch. The more questions we can allow to ask, the more noise will be added to each answer. Refer to the example with the names. If we decide to limit the maximum privacy expense Ɛ to 0.01, and the names will be 10,000, then the privacy limit of each question will be Ɛ / 10,000, or 0.000001. The noise level will be 10,000 / Ɛ, or 1,000,000 - and this level simply levels out the real results.

In other words, the “head on” approach with the addition of Laplace noise to each answer is limited by the number of possible questions. To remedy the situation, programmers had to develop more suitable primitives - algorithmic "bricks", with which, given the structure of a specific database and tasks, they can answer more questions with greater accuracy.

For example, in 2005, Smith discovered that a task with names has a special structure: removing information about one person from the database changes the answer for only one of 10,000 names. Therefore, we can add no more than 1 / Ɛ noise to each answer, instead of 10,000 / Ɛ, and the privacy of the answer will remain within our limit. Such a primitive can be applied to any “histogram” query — that is, a query about how many people fall into one of several mutually exclusive categories, such as names.

When Smith told Dwork about this, “something inside me was jubilant,” Dwork says. - I realized that we can use the structure of the query or calculation and get a much greater accuracy of the answer. "

Since then, computer scientists have developed a large library of such primitives. And since the additive rule explains what happens to privacy when combining algorithms, programmers can assemble these building blocks into complex structures, while tracking the limit on privacy leaks.

To simplify access to the RP system for people who are not experts, several groups are working to create a programming language that will allow one to abstract from the mathematical foundations of algorithms.

PINQ - one of the examples of PL for RP

Difference privacy programming languages such as PINQ provide an interface for sensitive data and allow you to ask questions about it, correcting the answers to protect privacy. “If you are in charge of a data set, you don’t have to worry about what people do with it while their requests are made using this PL,” says MacSherry, who created the PINQ language. "The program guarantees the security of requests."

Since a simple additive rule for Ɛ sets the exact upper limit on the loss of privacy when different databases containing your data give answers to queries, this rule, according to McSherry, turns privacy into currency.

For example, if you decide what limit on the loss of privacy is acceptable for you personally in your entire life, you can then decide to “spend” this privacy — change it for money, or support a good research project. Every time you allow your data to be used in a new release of information, you will know what the rest of your “budget” of privacy is.

And the data set curator can decide how to spend the amount of privacy he chooses to release - for example, consider proposals from research projects that describe not only available requests, but also the amount of privacy used in projects. Then the curator will be able to choose which projects will best use the available “budget” of this set of information. After the budget is spent, the data set is closed.

“Privacy is a non-renewable resource,” says McSherry. “As soon as you spend it, it disappears.”

The question of what value represents a permissible limit on the loss of privacy should be answered by society, not programmers. And everyone can have their answer. And although the prospect of assigning a price tag to such an intangible thing as privacy may look frightening, analogs of this already exist.

“There is another resource with the same properties — the clock of your life,” says McSherry. - Their number is limited, and after you spend them, they disappear. But since we have currency and a labor market, our society has figured out how to assign a price tag to human time. You can imagine how the same thing happens with privacy. ”

Within a few days, a letter sent by a student from the Massachusetts Institute of Technology arrived at Weld’s office. Extracts from the governor’s medical record were attached to the envelope.

Although the obvious identifiers were removed, the officials decided to leave the date of birth, gender, and zip code (ZIP code). Having carried out a cross-comparison of these data with the recordings of the registration of votes, Latanya Sweeney was able to calculate Weld's medical card.

')

Sweeney’s work and other breakthroughs in privacy over the past 15 years raise security concerns about allegedly anonymous data.

“We found that people's intuition about which data to be considered private works poorly,” says Frank McSherry of Microsoft Research Silicon Valley. “Computers are constantly improving their ability to get individual data from an array of information that a man in the street would consider safe.”

Studying case histories can help in the search for genes responsible for the risk of Alzheimer's disease, reduce the number of errors in hospitals, or find the best medicine for any disease. The only question is how to get all this data without giving out personal information? A ten-year study on this topic is already approaching the development of a universal solution.

This approach is called “differential privacy”, and allows you to publish data, while protecting personal information. The data differentiation algorithm allows researchers to form any request to a database containing sensitive information and receive an answer that is “blurred” in such a way that it does not reveal any personal data - even the availability of data of a particular person in this database.

“The idea is that you can allow your data to be used without risking it,” said Cynthia Dwork of Microsoft Research Silicon Valley. Dwork introduced the concept of differential privacy (RP) in 2005 with the help of MacSherry, Cobby Nissim and Adam Smith.

The method retains the right to “plausible denial,” as Avrim Blum of Carnegie Mellon University says. “If I want to pretend that my personal information is different from what it actually is, I can do it. The output of the RP mechanism in practice will differ little, whether it includes the real one or the fake one — so I can deny everything I want. ”

Such a high level of privacy seems unattainable. Indeed, there is no useful RP algorithm that would produce a completely identical result in cases where you provide your real or fictional data. But you can allow the algorithms to produce almost identical data. The degree of difference is calibrated and is a quantification of privacy. People or communities can decide which value of this parameter corresponds to an acceptable degree of loss of privacy, and then select the appropriate algorithms.

Privacy experts have developed a wide range of RP-algorithms for processing different data and answering different questions about them. Most of the work is difficult for people who are not experts in the field to understand, so scientists are developing standardized computer languages that will allow not only experts to publish sensitive data in the RP-way, simply by creating a simple program. “The ER is a promising and very interesting technology,” says Aaron Roth, an information technology specialist at the University of Pennsylvania.

The OnTheMap Census Committee uses differential privacy by publishing data but not disclosing personal information.

Needle in a haystack

It seems that to solve problems with privacy you just need to release aggregated data related to large groups of people. But such an approach is fraught with violation of the integrity of personal data.

Suppose you need to find out if the author has diabetes, and you know that my data is in the database. One could subtract the results of two queries: “How many people have diabetes in the database” and “How many people in the database with a name other than Eric Clarreich suffer from diabetes”.

The combination of two such requests violates my privacy. But it is not always clear which combinations of questions can violate privacy. Finding such combinations is an NP-complete task, that is, there is no effective computer algorithm for tracking such “attacks”.

In 2008, researchers showed the danger of publishing cumulative information obtained from general genetic studies - one of the main tools for finding the dependence of diabetes on genes. These studies imply deciphering the genes of the test group from 100 to 1000 patients with the same disease, with subsequent calculation of the average frequency with which one of the 100,000 mutations occurs. If one of the mutations occurs in a group much more often, it is noted as potentially associated with the disease.

A team of researchers led by Niels Homer, who was a graduate student at the University of California at the time, showed that in many cases, knowing the patient's genome, you can find out if he belonged to the genome testing group. After that, the association of medical institutes recalled the decree that the data obtained in genetic studies should be published.

Even more surprising conclusion was made by researchers in 2011 - it is possible to extract personal information about purchases, guided by the recommendation system from Amazon, which gives results like “bought And also bought B and C”. By observing changes in recommendations and comparing them with reviews that people make about purchased items, in several cases, researchers were able to say that a particular buyer bought a particular thing on a particular day — even before he posted a review of that thing.

In all cases, privacy measures seem to be adequate as long as they are insufficient. But already at this time a new approach to protecting privacy was being prepared, obtained by searching for an answer to the main question: what does it mean to protect privacy?

The privacy of two worlds

If researchers study a database of patients and find a link between smoking and some form of cancer, differential privacy will not protect a person smoking in a public place from the label of a person with an increased risk of getting sick. But if smoking is a person’s secret hidden in the database, the RP will protect him.

“Differential” means taking into account the difference between two worlds: in one of them you allow your personal data to be included in the database, and in the other you do not allow it. The two worlds will not be absolutely identical, but they can be made as similar as possible, and it will be almost impossible to distinguish them. This is the goal of the RP.

The RP focuses on algorithms that produce data, accept requests to the database and issue answers - not exact, but randomly changed. If you ask the same question on two databases that differ only in data for one person, you will receive essentially the same answers.

RP-representation of the location of users who searched for the word “cricket” in a search engine

More precisely, for any possible answer, the probability of receiving it should be the same for both databases; the ratio of these two probabilities should be limited to a number R close to one. The closer R is to 1, the more difficult it is for an attacker to find out if he is receiving information from base A or from base B, and the more protected X will be. Because if an attacker cannot know whether the data he received includes information on X, he will not be able to understand which data is related to X.

RP researchers usually speak of the logarithm of R, and denote it by. The parameter quantifies the leakage of personal data. The closer Ɛ to zero, the better the algorithm protects privacy.

To understand how you can construct a RP-algorithm, let's look at the simplest example of such an algorithm. It uses a script in which the questioner can only do quantitative queries. For example: “how many people have a property C in the database?”

Suppose that the real answer to one of the questions is 157. The RP-algorithm will add “noise” to it — before issuing the answer, it adds or subtracts a random number. As a result, we get 153, 159, or 292. The questioner knows the probability distribution used by the algorithm, so it’s pretty clear to him how distorted the actual result was (otherwise the output would be completely useless). But which random number was added to the answer is unknown to him.

The distribution formula must be chosen carefully. To understand what kind of distribution guarantees privacy, let us imagine that the persistent questioner is trying to find out if I am in the database. He asks: "How many people by the name of Eric Clarreich are in the database?" Suppose he gets a response of 100. Since this is a rare name, he realizes that the answer was actually 0 or 1. He has two possibilities:

A) the answer is 0, and the algorithm added 100 as noise

B) the answer is 1, and the algorithm added 99

The probability of choosing 100 and 99 should be the same. Then the questioner cannot distinguish between these two cases. More precisely, the ratio of these two probabilities should not exceed a predetermined R. And such a condition must be maintained not only for pairs 100 and 99, but also for any two consecutive numbers.

The Laplace distribution has this property. Its sharp peak is at zero, and then the graph gradually decreases in both directions. For it, the condition for the existence of the number R (the width of the distribution) is satisfied, such that for any two consecutive numbers the ratio of their probabilities is R.

For each width, there is one possible Laplace distribution. Therefore, you can play with the width to get a distribution that provides exactly the degree of privacy we need. For strong privacy, the distribution will be relatively wide and flat. Far from 0 numbers will fall out with almost the same probability as numbers close to zero. Data will be blurred enough.

Naturally, there is a confrontation between privacy and utility. The more privacy you need, the more noise you have to add, and the less helpful the answer will be. When using the Laplace distribution, the amount of added noise is back Ɛ. If the privacy setting is 0.01, then the algorithm will blur quantitative indicators by about 100.

The larger the dataset, the smaller the specified blur will affect the utility. In a database of hundreds of records, a blur of 100 will interfere more than in a database of millions of records. According to Dwork, for Internet-scale data, that is, hundreds of millions, the algorithm already provides enough privacy for practical use.

And “noise” in Laplace is only the first stage of the implementation of the RP. Researchers have already come up with a huge number of much more complex algorithms, many of which have a ratio of utility and privacy that exceeds the Laplace one in certain situations.

“People are finding ways to improve all the time, and there is still room for improvement,” says Dwork. Regarding more modest data sets than the Internet, in her words, "algorithms for many tasks appear."

With the RP algorithm, there is no need to carefully analyze the issues for violation of privacy - this protection is already built into the algorithm. Since questions interfering in their affairs usually come down to small numbers associated with specific people, and questions of a different nature study the behavior of large groups, the amount of additional noise that negates individual characteristics will have little effect on the answers to legitimate questions.

With the help of the RP, the problems of data researchers, such as cross-comparison with external sources, disappear. The mathematical approach does not expect the attacker to have limited sources of external information.

“The RP approach means that the attacker is omnipotent,” says McSherry. “Even if the attacker returns in 100 years, accumulating ideas and computer technologies all the time, they still won’t be able to find out if you are in the database.” RP is protected from the future. ”

Fundamental primitive

So far, we have considered a situation in which someone makes requests to the database with a number as an answer. But the reality is more complicated. Researchers want to ask the base a lot of questions. And over time, pieces of your personal information will get into different databases, each of which will give data without asking the others.

The ER provides an accurate and easy way to assess the overall threat to privacy when researchers ask all the databases in which your data is present a few questions. Suppose if your data is in two databases, and this data is given using algorithms that provide privacy parameters Ɛ1 and Ɛ2, then the total amount of leaked personal information will be no more than Ɛ1 + 2. The same formula works for a single database with multiple queries. For m queries, the leakage will be bounded above m * Ɛ.

In theory, the database curator may allow researchers to ask as many questions as they like, adding the necessary amount of Laplace noise to each answer so that the total number of leaked personal data does not exceed a certain limit.

It turns out that the restriction on numerical answers is not that critical. Many other queries can be changed so that they become quantitative. For example, if you need to create a list of hundreds of the most popular names for babies born in 2012, you could, for example, ask a sequence of questions like “How many children were given names beginning with A?” And process the results.

“One of the early results of learning machine learning asserts that everything that is in principle possible to study can be studied using numerical queries,” says Aaron Roth. “Such requests are not toys in themselves, but a fundamental primitive,” that is, building blocks, on the basis of which more complex algorithms can be built.

But there is a catch. The more questions we can allow to ask, the more noise will be added to each answer. Refer to the example with the names. If we decide to limit the maximum privacy expense Ɛ to 0.01, and the names will be 10,000, then the privacy limit of each question will be Ɛ / 10,000, or 0.000001. The noise level will be 10,000 / Ɛ, or 1,000,000 - and this level simply levels out the real results.

In other words, the “head on” approach with the addition of Laplace noise to each answer is limited by the number of possible questions. To remedy the situation, programmers had to develop more suitable primitives - algorithmic "bricks", with which, given the structure of a specific database and tasks, they can answer more questions with greater accuracy.

For example, in 2005, Smith discovered that a task with names has a special structure: removing information about one person from the database changes the answer for only one of 10,000 names. Therefore, we can add no more than 1 / Ɛ noise to each answer, instead of 10,000 / Ɛ, and the privacy of the answer will remain within our limit. Such a primitive can be applied to any “histogram” query — that is, a query about how many people fall into one of several mutually exclusive categories, such as names.

When Smith told Dwork about this, “something inside me was jubilant,” Dwork says. - I realized that we can use the structure of the query or calculation and get a much greater accuracy of the answer. "

Since then, computer scientists have developed a large library of such primitives. And since the additive rule explains what happens to privacy when combining algorithms, programmers can assemble these building blocks into complex structures, while tracking the limit on privacy leaks.

To simplify access to the RP system for people who are not experts, several groups are working to create a programming language that will allow one to abstract from the mathematical foundations of algorithms.

PINQ - one of the examples of PL for RP

Difference privacy programming languages such as PINQ provide an interface for sensitive data and allow you to ask questions about it, correcting the answers to protect privacy. “If you are in charge of a data set, you don’t have to worry about what people do with it while their requests are made using this PL,” says MacSherry, who created the PINQ language. "The program guarantees the security of requests."

Non-refreshable resource

Since a simple additive rule for Ɛ sets the exact upper limit on the loss of privacy when different databases containing your data give answers to queries, this rule, according to McSherry, turns privacy into currency.

For example, if you decide what limit on the loss of privacy is acceptable for you personally in your entire life, you can then decide to “spend” this privacy — change it for money, or support a good research project. Every time you allow your data to be used in a new release of information, you will know what the rest of your “budget” of privacy is.

And the data set curator can decide how to spend the amount of privacy he chooses to release - for example, consider proposals from research projects that describe not only available requests, but also the amount of privacy used in projects. Then the curator will be able to choose which projects will best use the available “budget” of this set of information. After the budget is spent, the data set is closed.

“Privacy is a non-renewable resource,” says McSherry. “As soon as you spend it, it disappears.”

The question of what value represents a permissible limit on the loss of privacy should be answered by society, not programmers. And everyone can have their answer. And although the prospect of assigning a price tag to such an intangible thing as privacy may look frightening, analogs of this already exist.

“There is another resource with the same properties — the clock of your life,” says McSherry. - Their number is limited, and after you spend them, they disappear. But since we have currency and a labor market, our society has figured out how to assign a price tag to human time. You can imagine how the same thing happens with privacy. ”

Source: https://habr.com/ru/post/398011/

All Articles