Overview of position tracking techniques and technologies for virtual reality

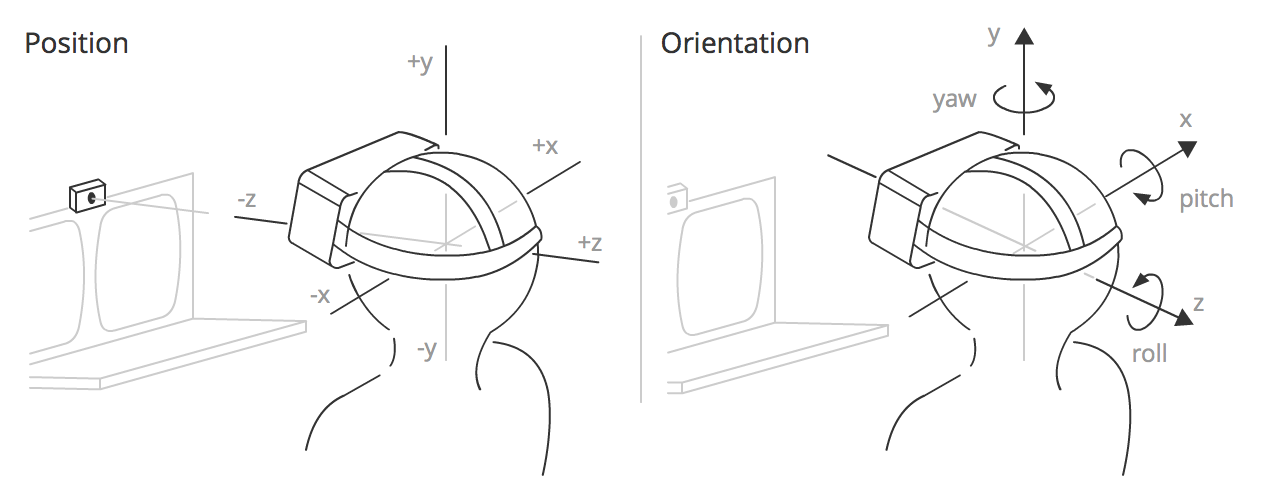

Position tracking (positional tracking) is a combination of hardware and software that determines the absolute position of an object in space. This technology is critical for achieving immersion in virtual reality. Combined with orientation tracking, it makes it possible to measure all six degrees of freedom (6-DoF) of real-world motion and transfer them into VR. While working with virtual reality technologies at our company, we have gained some experience in this area and would like to share it by describing the existing approaches to position tracking for virtual reality, along with the pros and cons of each.

A brief classification

The set of methods and approaches to solving this problem can be divided into several groups:

- Acoustic

- Radio frequency

- Magnetic

- Optical

- Inertial

- Hybrid

Human perception places high demands on accuracy (~1 mm) and latency (<20 ms) in VR equipment. Optical and inertial methods come closest to meeting these requirements and are most often used together, complementing each other. Let us consider the basic principles behind each of the methods above.

Acoustic methods

Acoustic tracking devices use ultrasonic (high-frequency) sound waves to measure the position and orientation of a target object. To determine the position, they measure either the time of flight (time of arrival) of the sound wave from the transmitter to the receivers, or the phase difference of a sinusoidal sound wave between transmission and reception. Intersense develops ultrasound-based position tracking devices.

Acoustic trackers typically have a low update rate because of the low speed of sound in air. Another problem is that the speed of sound in air depends on environmental factors such as temperature, barometric pressure, and humidity.
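Both limitations can be made concrete with a short sketch. It assumes the common linear approximation for the speed of sound in dry air (about 331.3 + 0.606·T m/s, with T in °C) and ignores pressure and humidity; the function names are ours, chosen for illustration only.

```python
def speed_of_sound(temp_c: float) -> float:
    """Approximate speed of sound in dry air, m/s (linear in temperature)."""
    return 331.3 + 0.606 * temp_c


def tof_distance(time_of_flight_s: float, temp_c: float = 20.0) -> float:
    """Distance covered by an ultrasonic pulse during its time of flight."""
    return speed_of_sound(temp_c) * time_of_flight_s


def max_update_rate(range_m: float, temp_c: float = 20.0) -> float:
    """Upper bound on updates per second: one pulse must arrive before the next."""
    return speed_of_sound(temp_c) / range_m


# The same 10 ms time of flight, interpreted at two assumed air temperatures.
d20 = tof_distance(0.010, temp_c=20.0)
d30 = tof_distance(0.010, temp_c=30.0)
print(f"distance at 20 C: {d20:.4f} m")
print(f"distance at 30 C: {d30:.4f} m")
print(f"error from a 10 C temperature mismatch: {(d30 - d20) * 100:.1f} cm")
print(f"max update rate over a 5 m range: {max_update_rate(5.0):.0f} Hz")
```

A ten-degree error in the assumed air temperature already shifts the estimate by several centimeters, far from the ~1 mm target, and a single transmitter covering a 5 m range cannot update faster than a few tens of hertz.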


Radio frequency methods

There are many methods based on radio frequencies. In terms of how they determine position, they largely resemble acoustic tracking methods (the only difference is the nature of the wave). The most promising at the moment are UWB (Ultra-Wide Band) methods, but even the best UWB-based solutions reach only centimeter-level accuracy (DW1000 from DecaWave, Dart from Zebra Technologies, Series 7000 from Ubisense, and others). Perhaps in the future startups like Pozyx or IndoTraq will be able to achieve sub-millimeter accuracy. For now, however, UWB position tracking solutions are not suitable for virtual reality.

Other radio-frequency positioning methods are described in more detail in this article.

Magnetic methods

Magnetic tracking is based on measuring the intensity of a magnetic field in various directions. As a rule, such systems have a base station that generates an alternating or static magnetic field. Since the magnetic field strength decreases as the distance between the measurement point and the base station increases, it is possible to determine the location of the controller. If the measurement point rotates, the distribution of the magnetic field along the different axes changes, which makes it possible to determine the orientation. The best-known products based on magnetic tracking are the Razer Hydra controller and the STEM system from Sixense.

The accuracy of this method can be quite high under controlled conditions (the Hydra specifications claim 1 mm positional accuracy and 1 degree orientation accuracy). However, magnetic tracking is subject to interference from conductive materials near the emitter or sensor, from magnetic fields created by other electronic devices, and from ferromagnetic materials in the tracking space.
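The distance dependence can be illustrated with a deliberately simplified sketch. It assumes an ideal dipole source, whose field magnitude falls off as 1/r³ along a fixed direction, and a single calibration reading at a known distance. Real products measure several field components to resolve orientation as well; the function name and numbers here are hypothetical.

```python
def distance_from_field(b_measured: float, b_ref: float, r_ref: float) -> float:
    """Estimate distance from field strength, assuming an ideal dipole (B ~ 1/r^3).

    b_ref is the field magnitude recorded at the known calibration distance r_ref.
    """
    return r_ref * (b_ref / b_measured) ** (1.0 / 3.0)


# Calibration: 8.0 (arbitrary units) measured at 0.5 m from the base station.
r = distance_from_field(b_measured=1.0, b_ref=8.0, r_ref=0.5)
print(f"estimated distance: {r:.3f} m")

# A 5% disturbance of the measured field (e.g. from nearby metal) shifts the estimate.
r_disturbed = distance_from_field(b_measured=1.05, b_ref=8.0, r_ref=0.5)
print(f"with a 5% field disturbance: {r_disturbed:.3f} m")
```

Because the field weakens eightfold each time the distance doubles, the usable range is short, and any distortion of the field translates directly into a position error.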

Optical methods

Optical methods combine computer vision algorithms with tracking devices such as visible-light or infrared cameras, stereo cameras, and depth cameras.

Depending on the choice of reference frame, two approaches to position tracking are distinguished:

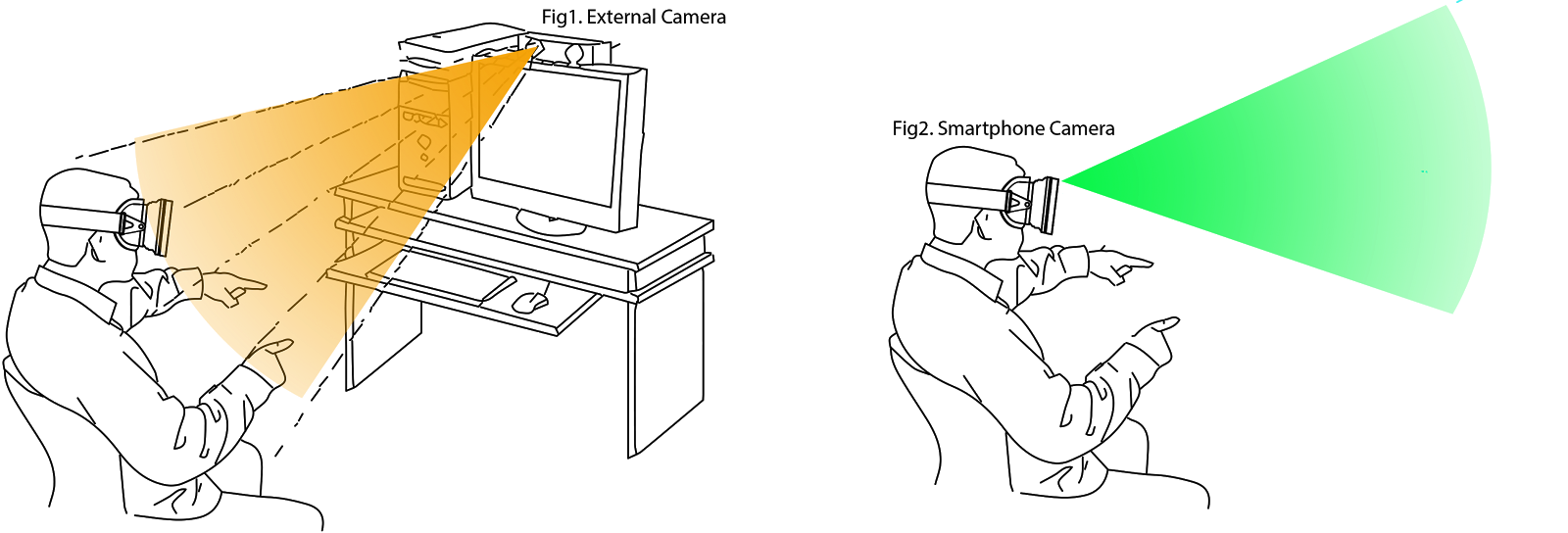

- The outside-in approach implies a fixed external observer (camera) that determines the position of a moving object from its characteristic points. It is used in Oculus Rift (Constellation), PSVR, OSVR, and many motion capture systems.

- The inside-out approach places an optical sensor on the moving object, which makes it possible to track movement relative to fixed points in the surrounding space. It is used in Microsoft Hololens, Project Tango (SLAM), and SteamVR Lighthouse (a hybrid variant, since it relies on base stations).

Optical tracking is also classified by whether special optical markers are used:

- Marker-free tracking is usually based on complex algorithms using two or more cameras, or stereo cameras with depth sensors.

- Marker-based tracking relies on a predetermined model of the object, which can be tracked even with a single camera. Markers are usually sources of infrared radiation (active or passive) or visible markers such as QR codes. This type of tracking is possible only while the marker is within the line of sight.

The Perspective-n-Point (PnP) problem

In optical tracking, the position of an object in 3D space has to be determined from the perspective projection of the object onto the camera's sensor plane. This is known as the PnP (Perspective-n-Point) problem.

Given a 3D model of the object and its 2D projection on the camera plane, a system of equations is solved. In general this yields several possible solutions, and their number depends on the number of points in the 3D model of the object. A unique solution for the 6-DoF pose of an object requires at least 4 points. For a triangle (3 points) there are 2 to 4 possible solutions, so the position cannot be determined unambiguously.

A fairly large number of algorithms for solving this problem are available as library implementations.
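To make the setup concrete, here is a toy sketch of the quantity that PnP solvers typically minimize: the reprojection error between the observed 2D points and the projection of the 3D model under a candidate pose. It is not a real PnP solver. It assumes an ideal pinhole camera with a hypothetical focal length of 800 pixels, restricts rotation to a single axis, and recovers only the depth by brute force.

```python
import math


def project(points, yaw, t, focal=800.0):
    """Project 3D model points to image coordinates with a pinhole camera.

    The pose is a rotation by `yaw` radians about the camera Y axis
    followed by a translation t = (tx, ty, tz).
    """
    c, s = math.cos(yaw), math.sin(yaw)
    pixels = []
    for x, y, z in points:
        xc = c * x + s * z + t[0]
        yc = y + t[1]
        zc = -s * x + c * z + t[2]
        pixels.append((focal * xc / zc, focal * yc / zc))
    return pixels


def reprojection_error(model, observed, yaw, t):
    """Sum of squared pixel distances between predicted and observed points."""
    predicted = project(model, yaw, t)
    return sum((pu - ou) ** 2 + (pv - ov) ** 2
               for (pu, pv), (ou, ov) in zip(predicted, observed))


# A square marker with four known corner points (meters, object frame).
model = [(-0.05, -0.05, 0.0), (0.05, -0.05, 0.0),
         (0.05, 0.05, 0.0), (-0.05, 0.05, 0.0)]
true_yaw, true_t = 0.3, (0.1, -0.02, 1.5)
observed = project(model, true_yaw, true_t)

# Brute-force search over depth only, with the other pose parameters known.
candidates = [0.5 + 0.01 * i for i in range(251)]
best_tz = min(
    candidates,
    key=lambda tz: reprojection_error(
        model, observed, true_yaw, (true_t[0], true_t[1], tz)),
)
print(f"recovered depth: {best_tz:.2f} m")
```

Library solvers search over all six pose parameters at once, usually with a closed-form initial estimate followed by iterative refinement, but the cost being driven to zero is the same.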

SLAM - Simultaneous Localization and Mapping

Simultaneous localization and mapping (SLAM) is the most popular positioning method in robotics and beyond, and it is used to track a position in space.

The algorithm consists of two parts. The first is mapping an unknown environment based on measurements (data from odometry or a stereo camera). The second is determining one's own location in that environment (localization) by comparing current measurements with the existing map. This cycle is recomputed continuously, with the results of each process feeding into the other. The most popular approaches to the problem are the particle filter and the extended Kalman filter. SLAM is in fact a rather broad topic rather than one specific algorithm, and an analysis of all existing solutions deserves a separate article.

SLAM is convenient for mobile virtual and augmented reality solutions. Its drawback, however, is high computational complexity, which, combined with demanding VR / AR applications, puts a heavy load on the device's computing resources.

Project Tango from Google and Microsoft Hololens are the best-known SLAM-based projects for mobile devices. SLAM-based tracking is also expected in recently announced products from Intel (Project Alloy) and Qualcomm (VR820).

Notable open-source solutions include ORB-SLAM, LSD-SLAM, and PTAM-GPL.

Inertial tracking

Modern inertial measurement units (IMUs) based on MEMS technology make it possible to track orientation (roll, pitch, yaw) in space with high accuracy and minimal latency.

Thanks to sensor fusion algorithms based on a complementary filter or a Kalman filter, the data from the gyroscope and the accelerometer correct each other and provide accuracy over both short and long time spans.

However, determining coordinates (displacement) by double integration of the linear acceleration (dead reckoning), computed from raw accelerometer data, does not meet the accuracy requirements over long periods of time. The accelerometer itself produces very noisy data, and after integration the error grows quadratically with time.

This problem can be solved by combining inertial tracking with other methods that periodically correct the accumulated accelerometer drift.
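A complementary filter for a single angle fits in a few lines. The sketch below assumes a stationary device held at a 10° pitch, a gyroscope with a constant 0.5°/s bias, and a noise-free pitch angle already derived from the accelerometer; all of these values, and the weight `alpha`, are chosen only for illustration.

```python
def complementary_filter(angle, gyro_rate, accel_angle, dt, alpha=0.98):
    """Blend the integrated gyro rate (short term) with the accelerometer angle (long term)."""
    return alpha * (angle + gyro_rate * dt) + (1.0 - alpha) * accel_angle


DT = 0.01            # 100 Hz sample period, seconds
TRUE_PITCH = 10.0    # degrees; the device does not move
GYRO_BIAS = 0.5      # degrees per second reported by a stationary gyro

gyro_only = TRUE_PITCH
fused = TRUE_PITCH
for _ in range(6000):  # 60 seconds of samples
    gyro_only += GYRO_BIAS * DT
    fused = complementary_filter(fused, GYRO_BIAS, TRUE_PITCH, DT)

print(f"gyro integration only: {gyro_only:.3f} deg")
print(f"complementary filter:  {fused:.3f} deg")
```

Integrating the gyroscope alone drifts by 30° in one minute, while the fused estimate stays within a fraction of a degree because the accelerometer term keeps pulling it back toward the gravity reference.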
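The quadratic growth is easy to reproduce. The sketch below assumes a perfectly stationary device whose accelerometer reports a small constant bias of 0.01 m/s² (a hypothetical value) and integrates it twice at 1000 Hz.

```python
def position_drift(bias, duration, dt=0.001):
    """Double-integrate a constant accelerometer bias; returns position error in meters."""
    velocity = 0.0
    position = 0.0
    for _ in range(round(duration / dt)):
        velocity += bias * dt      # first integration: acceleration -> velocity
        position += velocity * dt  # second integration: velocity -> position
    return position


BIAS = 0.01  # m/s^2, assumed constant for illustration
for seconds in (1, 10, 60):
    drift = position_drift(BIAS, seconds)
    # Closed form for a constant bias: 0.5 * bias * t^2
    print(f"after {seconds:>2} s: {drift:.3f} m (closed form {0.5 * BIAS * seconds ** 2:.3f} m)")
```

Doubling the time roughly quadruples the error, so even a tiny bias pushes the position estimate off by meters within a minute.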

Hybrid methods

Since no method is flawless and each has its weak points, the most reasonable approach is to combine different tracking methods. For example, inertial tracking (IMU) can provide a high update rate (up to 1000 Hz), while optical methods provide stable accuracy over long periods of time (drift correction).

Hybrid tracking methods are based on sensor fusion algorithms, the most popular of which is the Extended Kalman Filter (EKF).
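The idea can be sketched with a plain linear Kalman filter in one dimension, which is a simplification of the EKF named above rather than an EKF itself. The state is position and velocity; a biased accelerometer drives the prediction at 1000 Hz and an optical position fix corrects it at 50 Hz. The bias, noise parameters, and rates are assumptions chosen for illustration, and the optical fixes are noise-free to keep the run deterministic.

```python
def predict(state, cov, accel, dt, q_vel):
    """Propagate [position, velocity] with one IMU acceleration sample."""
    p, v = state
    (p00, p01), (p10, p11) = cov
    p, v = p + v * dt + 0.5 * accel * dt * dt, v + accel * dt
    # P = F P F^T + Q with F = [[1, dt], [0, 1]] and Q = diag(0, q_vel)
    n00 = p00 + dt * (p10 + p01) + dt * dt * p11
    n01 = p01 + dt * p11
    n10 = p10 + dt * p11
    return (p, v), ((n00, n01), (n10, p11 + q_vel))


def correct(state, cov, measured_pos, r):
    """Fuse one optical position fix (measurement matrix H = [1, 0])."""
    p, v = state
    (p00, p01), (p10, p11) = cov
    s = p00 + r                  # innovation variance
    k0, k1 = p00 / s, p10 / s    # Kalman gain for position and velocity
    y = measured_pos - p         # innovation
    new_cov = (((1 - k0) * p00, (1 - k0) * p01),
               (p10 - k1 * p00, p11 - k1 * p01))
    return (p + k0 * y, v + k1 * y), new_cov


DT = 0.001    # IMU period (1000 Hz)
BIAS = 0.05   # m/s^2 reported by a stationary accelerometer (assumed)
state, cov = (0.0, 0.0), ((1e-4, 0.0), (0.0, 1e-4))
dead_p, dead_v = 0.0, 0.0        # dead reckoning without correction

for step in range(1, 5001):      # 5 seconds
    state, cov = predict(state, cov, BIAS, DT, q_vel=2.5e-7)
    dead_p += dead_v * DT + 0.5 * BIAS * DT * DT
    dead_v += BIAS * DT
    if step % 20 == 0:           # optical fix at 50 Hz; true position is 0
        state, cov = correct(state, cov, 0.0, r=1e-6)

print(f"dead reckoning error: {abs(dead_p):.3f} m")
print(f"fused position error: {abs(state[0]) * 1000:.2f} mm")
```

The IMU supplies the high-rate updates between optical fixes, and each fix removes the drift accumulated since the previous one, which is the division of labor described above.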

How does the SteamVR Lighthouse work?

The HTC Vive tracking system consists of two base stations plus optical sensors and inertial measurement units (IMUs) in the controllers and the headset. Each base station contains two rotating lasers and an array of infrared LEDs. One laser sweeps vertically, the other horizontally, so the lasers take turns scanning the surrounding space. The base stations operate synchronously: at any given moment only one of the four lasers is sweeping the tracking space. To synchronize the whole system, the entire space is illuminated by an infrared light pulse between laser sweeps.

Sensors on the controllers and the headset capture all optical pulses from the base stations and measure the time between them. Since the rotation frequency of the lasers is known in advance (60 Hz), the rotation angle of each beam can be calculated from the time between pulses. This gives the 2D coordinates of each optical sensor; knowing the relative positions of the sensors on the controller, the 3D position of the controller in space can then be recovered (the PnP problem). When two base stations are visible at the same time, the 3D position of the controller can be calculated from the intersection of two rays, which gives more accurate results and requires less computation. The tracking process is shown more clearly below.

A month ago, Valve announced that it was opening its tracking system to third-party developers. Read more about this here.
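The timing-to-angle step and the two-ray intersection can be sketched as follows. This is a simplified top-down 2D model, not Valve's implementation: it assumes the sweep angle is measured from the moment of the sync flash, that each base station's position and zero direction are known, and that the stations sit at hypothetical coordinates (0, 0) and (4, 0).

```python
import math

ROTATION_HZ = 60.0  # rotation frequency of the lasers, from the text


def sweep_angle(delta_t):
    """Angle (radians) swept by the laser between the sync flash and the sensor hit."""
    return 2.0 * math.pi * ROTATION_HZ * delta_t


def intersect_rays(p1, a1, p2, a2):
    """Intersect two 2D rays given by origin points and bearing angles."""
    d1 = (math.cos(a1), math.sin(a1))
    d2 = (math.cos(a2), math.sin(a2))
    denom = d1[0] * d2[1] - d1[1] * d2[0]
    if abs(denom) < 1e-12:
        raise ValueError("rays are parallel; no unique intersection")
    # Solve p1 + s * d1 = p2 + u * d2 for s
    s = ((p2[0] - p1[0]) * d2[1] - (p2[1] - p1[1]) * d2[0]) / denom
    return (p1[0] + s * d1[0], p1[1] + s * d1[1])


# Time between sync flash and laser hit, as seen from each base station.
angle_a = sweep_angle(1.0 / 480.0)  # 45 degrees
angle_b = sweep_angle(1.0 / 160.0)  # 135 degrees
sensor = intersect_rays((0.0, 0.0), angle_a, (4.0, 0.0), angle_b)
print(f"angles: {math.degrees(angle_a):.1f} deg, {math.degrees(angle_b):.1f} deg")
print(f"sensor position: ({sensor[0]:.3f}, {sensor[1]:.3f})")
```

At 60 Hz one full rotation takes about 16.7 ms, so a timing error of one microsecond corresponds to roughly 0.02° of angle, which is why precise pulse timing matters so much in this scheme.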

Which position tracking method do you think is the most promising for virtual / augmented reality?

This is the first article in a series about VR technologies. If there is interest, we will keep writing them.

P.S. Why is there no virtual reality hub?

Source: https://habr.com/ru/post/397757/
