Homo ex machina: prospects for transferring consciousness to another medium

Hi, Geektimes! Today we have another post based on a lecture by an author you already know and love. Sergey (oulenspiegel) Markov, the creator of one of the strongest Russian chess programs, a specialist in machine learning methods and the founder of the portal 22century.ru, talks about the prospects for transferring the human personality to other physical media. A new home for the mind: how great is the distance between the brain and modern machines, and what progress has been made in creating analogs of nervous tissue? How far has science come from the first perceptrons to today's promising neuromorphic processors? What do we know about how the brain works, and what makes us believe that transferring consciousness is possible in principle? What are invasive and non-invasive neural interfaces? What progress has science made in building them over the past decades, and what will we be able to do in this area in the near future? Replication and the concept of the post-neocortex: how does neuroplasticity help us bypass the paradoxes of self-awareness? Do-it-yourself human: how is our species moving from indirect to direct engineering of its own development? Beyond bionics: can consciousness be built on a fundamentally different platform? Read all about it under the cut.

The secret of a good recipe

Today we will talk about the possibility of uploading consciousness into a machine. The task consists of two parts. What do we need?

- A machine capable of simulating the uploaded mind with sufficient speed and accuracy. This means not only hardware, but also adequate mathematical models that can represent the internal structure of our consciousness without loss.

- Methods to scan this consciousness and transfer it to the machine.

So the conversation will focus on technologies relevant to both parts of this recipe. We will talk not only, and not so much, about uploading itself as about neural interfaces. We do not yet have a machine whose performance is comparable to that of the human brain, or, more precisely, one that comes within two orders of magnitude of it.


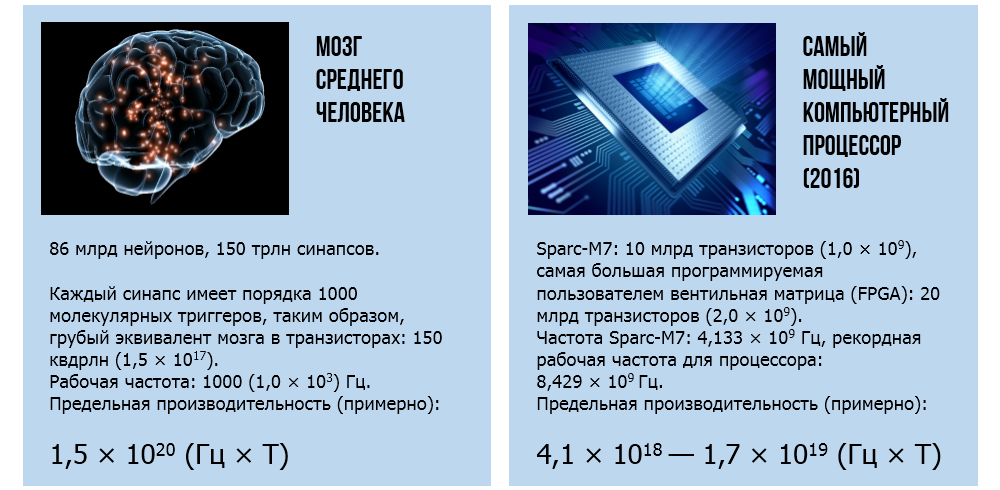

The brain of the average person vs the most powerful computer processor

What is the problem? The average human brain consists of approximately 86 billion neurons and 150 trillion synapses. A synapse is about 1 thousand molecular switches, each of which can be represented by an ordinary electronic flip-flop. If we express the capacity of the brain in flip-flops, we get about 150 quadrillion of them. The largest single chips at the moment are the Sparc M7 processor (10 billion transistors) and FPGAs (up to 20 billion transistors). Obviously, a single chip falls far short of what we need.
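The arithmetic behind this comparison can be reproduced in a few lines. This is only a sketch using the round figures quoted above; the function names are made up for illustration, and treating one flip-flop as comparable to one transistor is a deliberate simplification.

```python
# Round figures quoted in the text.
SYNAPSES = 150e12                 # about 150 trillion synapses
SWITCHES_PER_SYNAPSE = 1_000      # about 1 thousand molecular switches each
SPARC_M7_TRANSISTORS = 10e9       # Sparc M7 processor
LARGEST_FPGA_TRANSISTORS = 20e9   # largest FPGAs


def brain_flip_flops(synapses=SYNAPSES, per_synapse=SWITCHES_PER_SYNAPSE):
    """Estimate the brain's size in flip-flops, one per molecular switch."""
    return synapses * per_synapse


def shortfall(chip_transistors):
    """How many times the flip-flop estimate exceeds a chip's transistor count.

    Simplification: a real flip-flop needs several transistors, so the true
    gap is larger than this ratio suggests.
    """
    return brain_flip_flops() / chip_transistors


if __name__ == "__main__":
    print(f"Brain estimate: {brain_flip_flops():.2e} flip-flops")
    print(f"Sparc M7 shortfall: {shortfall(SPARC_M7_TRANSISTORS):.1e}x")
    print(f"Largest FPGA shortfall: {shortfall(LARGEST_FPGA_TRANSISTORS):.1e}x")
```

Even the largest chip is short by a factor of several million in element count alone, before clock frequency is taken into account.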

True, the brain's operating frequency is far lower than a machine's: the Sparc processor runs at 4.13 GHz, while the brain operates at about 1000 Hz.

If we multiply the number of transistors by the frequency, a gap of about two orders of magnitude still remains. And this does not take into account the inevitable emulation losses caused by the fundamental difference in architecture. Nevertheless, there are grounds for hope.

Brute force

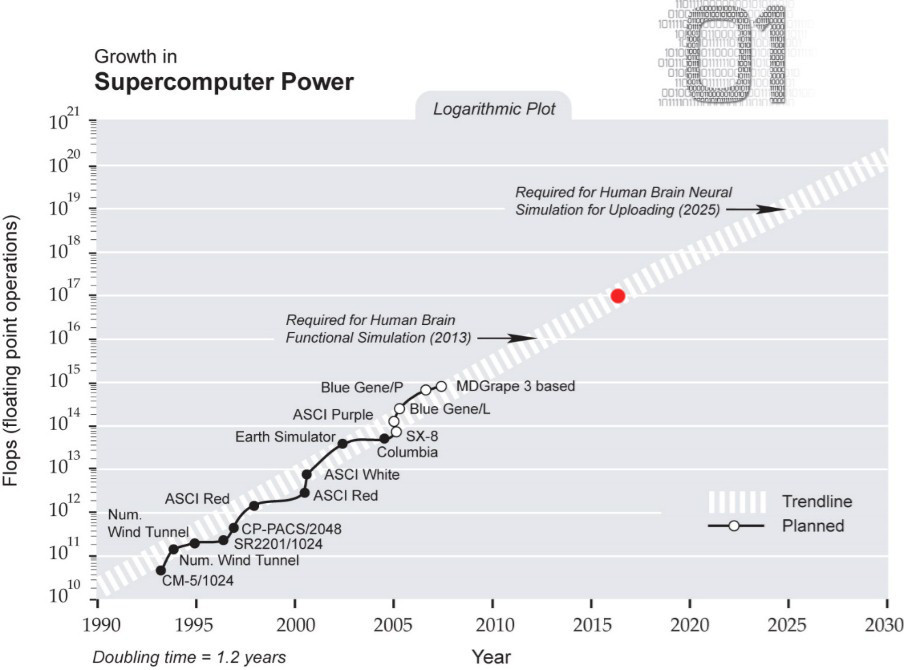

This chart is an illustration from the book The Singularity Is Near by Ray Kurzweil. In 2005 he tried to predict the moment when the highest-performing computers would be powerful enough to emulate human consciousness. Clearly the estimate rests on assumptions, but the author is unlikely to be off by more than an order of magnitude. So far the trend continues without significant deviations (I specifically plotted a point for the current state of affairs).

In June 2016 China unveiled the latest record-holding supercomputer, Sunway TaihuLight, with a performance of 93 petaflops (almost 10^17 flops). So far we are on schedule: according to Kurzweil, and given our current understanding of human consciousness, the performance of a top-end computer will make it possible to emulate consciousness in real time by 2025. Of course, there may be surprises, but still.

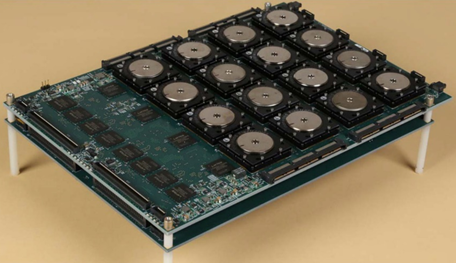

Neural networks

There are specialized machines that emulate neural networks: neuromorphic processors. In 2016 the TrueNorth chip passed another important milestone: Lawrence Livermore National Laboratory (USA) launched a deep learning research project based on this processor. TrueNorth is the brainchild of IBM, created under the DARPA SyNAPSE program. This piece of hardware emulates about 1 million neurons, each equipped with 256 synapses. When emulating the brain, such equipment avoids much of the performance loss caused by the architectural difference between the brain and traditional von Neumann machines, which include the most powerful modern supercomputers. The number of compute cores in the fastest von Neumann machines is many orders of magnitude smaller than the number of synapses in the brain. The previous leader of the TOP500 ranking, the Chinese supercomputer Tianhe-2, was assembled from more than 30 thousand Xeons (24 logical cores each) and almost 50 thousand 57-core Xeon Phi coprocessors, about 3.6 million cores in total. The current leader, Sunway TaihuLight, has already exceeded 10 million cores, but this is still many times fewer than the number of synaptic connections in the brain, each of which is a relatively primitive computing device that nevertheless works simultaneously with all the others.
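The core counts are easy to check. The sketch below uses the rounded figures from the paragraph above ("more than 30 thousand" and "almost 50 thousand" are taken as 30,000 and 50,000, which is an assumption), together with the 150 trillion synapses quoted earlier; the function names are illustrative only.

```python
# Rounded figures from the text; the exact node counts are an assumption.
XEON_COUNT = 30_000
LOGICAL_CORES_PER_XEON = 24
XEON_PHI_COUNT = 50_000
CORES_PER_XEON_PHI = 57

BRAIN_SYNAPSES = 150e12            # about 150 trillion synapses
TRUENORTH_NEURONS = 1_000_000      # about 1 million emulated neurons
TRUENORTH_SYNAPSES_PER_NEURON = 256


def tianhe2_cores():
    """Approximate total number of cores in Tianhe-2."""
    return (XEON_COUNT * LOGICAL_CORES_PER_XEON
            + XEON_PHI_COUNT * CORES_PER_XEON_PHI)


def synapses_per_core(cores):
    """How many brain synapses each core would have to stand in for."""
    return BRAIN_SYNAPSES / cores


def truenorth_synapses():
    """Total synapses emulated by a single TrueNorth chip."""
    return TRUENORTH_NEURONS * TRUENORTH_SYNAPSES_PER_NEURON


if __name__ == "__main__":
    cores = tianhe2_cores()
    print(f"Tianhe-2: about {cores / 1e6:.2f} million cores")
    print(f"Synapses per core: {synapses_per_core(cores):.1e}")
    print(f"TrueNorth: {truenorth_synapses():,} synapses per chip")
```

With these rounded inputs the total comes to roughly 3.6 million cores, so each core would have to stand in for tens of millions of synapses.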

The photo shows Frank Rosenblatt, the creator of the first neurocomputer, the Mark I, with his hardware perceptron next to him.

Modern neuromorphic processors have come a long way from Rosenblatt's designs, but they are still imperfect. One of the fathers of convolutional neural networks, Yann LeCun, criticizes the TrueNorth project for choosing a primitive neuron model, integrate-and-fire. This is historically the first model of a neuron, proposed back in 1907 by the French physiologist Louis Lapicque. LeCun's criticism is far from abstract: he himself is working on an alternative project, NeuFlow, which uses 16-bit neuron states instead of the binary states of TrueNorth. NeuFlow's hardware base is field-programmable gate arrays (FPGAs) and application-specific integrated circuits (ASICs).
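To give a feel for how simple the integrate-and-fire model is, here is a minimal sketch of a leaky integrate-and-fire neuron simulated with forward Euler steps. It is a textbook-style illustration, not the neuron implementation used in TrueNorth; the function name and all parameter values are assumptions chosen for readability.

```python
def simulate_lif(input_current, dt=1e-3, tau=0.02, resistance=1.0,
                 threshold=1.0, v_reset=0.0):
    """Simulate a leaky integrate-and-fire neuron.

    input_current: sequence of input values, one per time step.
    Returns (membrane potential trace, list of step indices with a spike).
    All parameter values are illustrative, not physiological measurements.
    """
    v = v_reset
    trace, spikes = [], []
    for step, current in enumerate(input_current):
        # Leaky integration: dv/dt = (-v + R * I) / tau
        v += dt * (-v + resistance * current) / tau
        if v >= threshold:
            # The output is binary: the neuron either fires or stays silent.
            spikes.append(step)
            v = v_reset
        trace.append(v)
    return trace, spikes


if __name__ == "__main__":
    _, strong = simulate_lif([2.0] * 200)
    _, weak = simulate_lif([0.5] * 200)
    print(f"Strong input: {len(strong)} spikes, weak input: {len(weak)} spikes")
```

The neuron's whole state is a single number and its output is a yes-or-no spike, which is the kind of simplicity LeCun objects to.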

How do we imagine the work of nervous tissue?

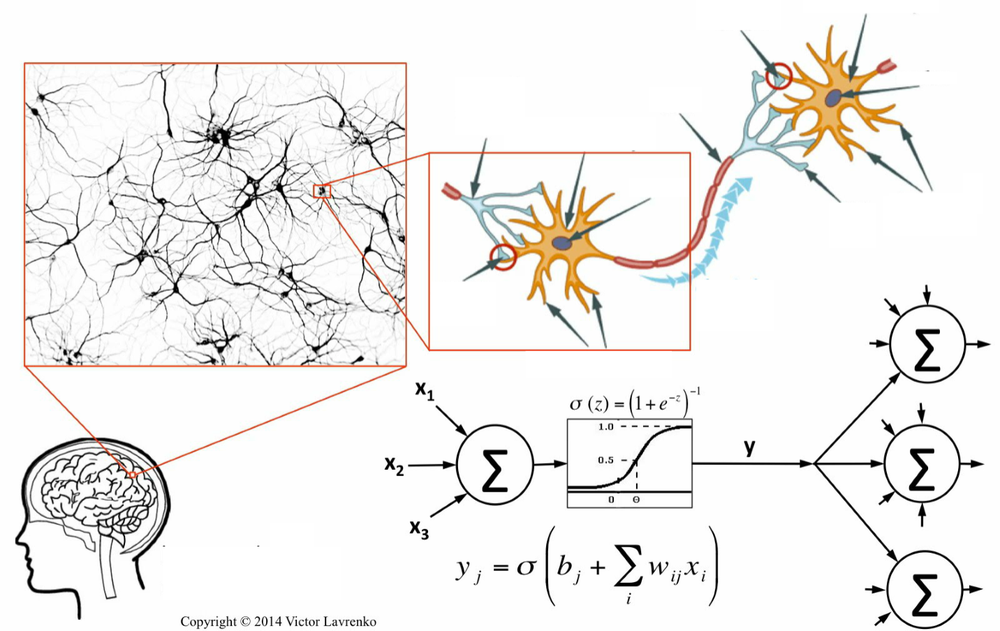

Mathematical models of nervous tissue began to appear in the 1940s. The first milestone was the work of McCulloch and Pitts, who created a model of a single neuron. They treated the neuron as a kind of adder that receives input signals, weights them by the strengths of its synaptic connections, and outputs the resulting signal. Later models replaced the binary Heaviside step function, which passes a signal to the output only if the weighted sum of the inputs is greater than zero, with the logistic function applied to the sum.
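The adder described above fits in a few lines of code. This is a minimal sketch: the function names are illustrative, and the `bias` term (a shifted firing threshold) is an addition made here so that the example can implement a logic gate.

```python
import math


def heaviside(x):
    """Binary step: fire only if the weighted sum is greater than zero."""
    return 1.0 if x > 0 else 0.0


def logistic(x):
    """Smooth replacement for the step, used in later models."""
    return 1.0 / (1.0 + math.exp(-x))


def neuron(inputs, weights, bias=0.0, activation=heaviside):
    """Weighted sum of the inputs passed through an activation function."""
    total = sum(w * x for w, x in zip(weights, inputs)) + bias
    return activation(total)


if __name__ == "__main__":
    # With weights (1, 1) and bias -1.5 the step neuron behaves as logical AND.
    for a in (0, 1):
        for b in (0, 1):
            print(a, b, neuron([a, b], [1.0, 1.0], bias=-1.5))
    # The logistic version returns a graded value instead of 0 or 1.
    print(neuron([1, 1], [1.0, 1.0], bias=-1.5, activation=logistic))
```

Swapping `heaviside` for `logistic` changes nothing in the summation itself; only the final transformation of the sum differs.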

Modern neurophysiology uses a refined version of the Hodgkin-Huxley model, developed in the early 1950s from experiments on the squid giant axon, to describe how a nerve cell conducts signals. The modern model takes into account a number of nuances in the operation of ion channels and complex temporal effects, but McCulloch and Pitts guessed the essence of the neuron's work correctly: it really does come down to summing and transforming signals.

Restoring the topology of the natural network

Under the ceiling of the operating room hung a structure resembling an inverted soldier's helmet, about two meters in diameter and gleaming with moisture. A huge steel helmet with six thin, spidery metal arms on each side: the Surgeon. The arms, in relentless motion, were busy with something terrible... indescribable.

The operation on Cobb's prostrate body was in full swing. With one precise stroke of the scalpel clamped in one of the Surgeon's grippers, his chest was slit open from throat to groin. Two other spider arms came down and spread the rib cage open; two more lifted out the heart, then the lungs. Ralph Chisler was busy too: having sawn off the top of Cobb's skull, he removed the cap of bone and was now taking out the brain. After disconnecting the EEG sensors from the brain tissue, Ralph set the hemispheres on the stand of a device resembling a bread slicer combined with an X-ray machine.

The machine-surgeon switched on the brain tissue analyzer and glided smoothly across the ceiling to the far side of the operating room, away from the window.

- The body will now be placed in the tank, - the mole commented in a hissing whisper.

In the far corner of the operating room stood a capacious tank of murky liquid.

The Surgeon rolled the tank up to the table and the work went into full swing; the scalpels flashed. Lungs here, kidneys there... squares of skin, eyeballs, intestines... every part of Cobb's body found its place in the tank. Everything except the heart. After critically inspecting Cobb's second-hand transplanted heart, the Surgeon tossed it into the hatch of the heat exchanger.

- What will happen to the brain? - Torchok asked in a stunned whisper.

What he had seen would not fit in his head. Cobb had feared death more than anything, yet he had come here of his own free will. He knew what would be done to him here, and he came anyway. Why?

- The structure of the brain tissue will be subjected to analysis.

Rudy Rucker, “Software. Wetware”

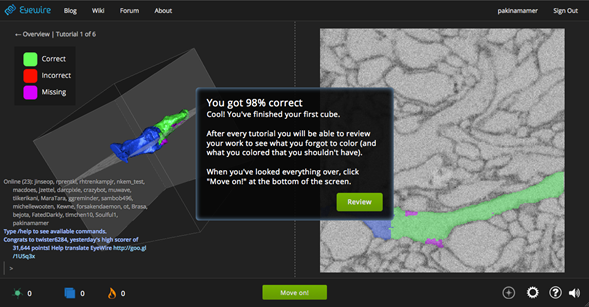

To duplicate human consciousness, the first step is to reconstruct the topology of the natural neural network. What can we do in this area today? EyeWire, an interesting project by scientists from MIT, began with the death of its main participant, a laboratory mouse named Harold. His brain was cut into micron-thin slices, the sections were fed into a scanning electron microscope, and the result was a large set of scanned images.

It turned out that viewing all the sections and fully reconstructing the topology of the neural network from them would take an enormous amount of time. Manually reconstructing a single neuron takes approximately 50 person-hours. There are not many scientists working on this problem, so deciphering the mouse's visual area alone would take about 200 years. So the scientists decided to carry out a different, diabolical plan. They created the game EyeWire. After registering on the EyeWire website, you receive slices of Harold's brain and color them in according to the given rules. If you colored the slice correctly (that is, the same way as most of the players who received the same slice), you earn a lot of points. If not, you earn few. A special indicator lets you compare your skill at coloring the mouse brain with that of other people.
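The idea of rewarding agreement with the majority can be sketched as follows. This is a hypothetical illustration of consensus scoring, not EyeWire's actual scoring rules: the function names, the label encoding and the `points_per_match` value are all assumptions.

```python
from collections import Counter


def consensus_labels(submissions):
    """Majority label for each segment of a slice.

    submissions maps a player name to that player's list of labels,
    one label per segment; all lists must have the same length.
    """
    per_segment = zip(*submissions.values())
    return [Counter(labels).most_common(1)[0][0] for labels in per_segment]


def score_players(submissions, points_per_match=10):
    """Award points for every segment where a player agrees with the majority."""
    consensus = consensus_labels(submissions)
    return {
        player: points_per_match * sum(a == b for a, b in zip(labels, consensus))
        for player, labels in submissions.items()
    }


if __name__ == "__main__":
    # 1 = "this segment belongs to the neuron", 0 = "it does not".
    slices = {
        "alice": [1, 0, 1],
        "bob": [1, 0, 0],
        "carol": [1, 1, 1],
    }
    print(consensus_labels(slices))
    print(score_players(slices))
```

The consensus labels produced this way are also the kind of data a network can later be trained on: the players' agreement takes the place of an expert's annotation.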

But this is only the first part of the diabolical plan. The second part was that the researchers used the coloring data produced by the players to train a large convolutional neural network. They have now completed this work and published a paper on the reconstruction of the visual cortex. The technology works well enough to be scaled up to industrial use.

Network topology is the most basic, fundamental point. Experiments on worms have shown that responses a worm has learned during its lifetime are preserved through long-term vitrification. Roughly speaking, if the worm is frozen for a long time, the information in its brain survives. This suggests that to preserve a personality it may be enough to preserve the connectome (the full description of the brain's connection structure), and that the personality will not be lost even if the current electromagnetic activity is.

Blue Brain Project

The most ambitious project to recreate the human brain in electronics is the Blue Brain project, which began in the early 2000s. In 2005 the scientists created the first cellular model. In 2007 the first phase of the study was completed: a protocol was created for reconstructing a single column of the rat neocortex (at this stage the rat was the main model organism), and in 2008, based on this protocol, the project participants demonstrated the first working column. They showed that a circuit of 10 thousand model neurons exhibits the same electrical activity as a real neocortical column of the rat. Given the same input signals, the model generated the same output signals as the animal's real nervous tissue.

In July 2011 the first mesocircuit was demonstrated. The scientists assembled 1 million neurons and showed that the model is valid. The project plan called for a full model of the rat brain by 2014: 100 mesocircuits, 100 million cells. Data on this work has not yet been published. The reason is unknown. Perhaps preparing the publication takes a lot of time, or perhaps the project timeline was affected by recent discoveries in neurophysiology. In 2015, papers on the discovery of a new type of neural connection were published in Nature Neuroscience and Neuron. It turned out that signals in the brain can propagate through the astrocytes of glial tissue. Scientists from the École Polytechnique Fédérale de Lausanne (Switzerland) built a numerical model of these connections. Blue Brain representatives responded to these publications and reported that they are integrating the new mechanism into their model.

It remains to wait for the results and hope that the public will see them in the near future. The project's original timeline called for creating the equivalent of a human brain by 2023. According to the Blue Brain scientists, it is roughly equivalent to 1 thousand rat brains. A thousand lemmings is almost one person.
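The scale-up in the project plan can be checked against the neuron count quoted at the start of the article. A small sketch using only figures from the text; the function names are illustrative.

```python
NEURONS_PER_MESOCIRCUIT = 1_000_000    # first mesocircuit, July 2011
MESOCIRCUITS_PER_RAT_BRAIN = 100       # planned full rat brain model
RAT_BRAINS_PER_HUMAN_BRAIN = 1_000     # Blue Brain's rough equivalence
HUMAN_NEURONS = 86e9                   # about 86 billion neurons


def rat_brain_cells():
    """Number of cells in the planned rat brain model."""
    return NEURONS_PER_MESOCIRCUIT * MESOCIRCUITS_PER_RAT_BRAIN


def human_equivalent_cells():
    """Number of cells in a thousand rat brain models."""
    return rat_brain_cells() * RAT_BRAINS_PER_HUMAN_BRAIN


if __name__ == "__main__":
    ratio = human_equivalent_cells() / HUMAN_NEURONS
    print(f"Rat brain model: {rat_brain_cells():,} cells")
    print(f"1,000 rat brains: {human_equivalent_cells():,} cells")
    print(f"Ratio to a human brain: {ratio:.2f}")
```

A thousand rat brain models come to 100 billion cells, the same order as the 86 billion neurons of a human brain, which is where the "roughly equivalent" estimate comes from.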

Obtaining a map of electromagnetic activity

A few words about the electromagnetic activity of the brain. At the end of the 19th century it was discovered that the brain generates weak electrical currents. The phenomenon was first described by Richard Caton, an English physiologist and surgeon. Decades later, in the 1920s, Hans Berger showed that a technology for recording the electromagnetic activity of the brain could be built. In his first experiments Berger used thin metal electrodes inserted under the skin of the scalp. A little later a less invasive technology appeared, along with the first electroencephalographs, which after many years of improvement became one of the most common ways of recording the brain's electromagnetic activity.

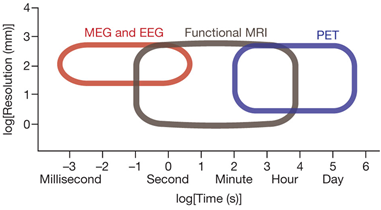

Today, three main technologies are used to record brain activity: electroencephalography, magnetoencephalography and positron emission tomography. However, all of them still have serious problems with resolution, both spatial and temporal. The chart shows the current state of the art. The horizontal axis is a logarithmic scale showing the temporal resolution of each method; the vertical axis shows spatial resolution.

What do we see on the chart? First, the best spatial resolution is about 0.75 mm. This means that a device with this resolution records the activity of approximately 50 thousand neurons as a single signal. Moreover, devices with a spatial resolution of 0.75 mm are far inferior to other methods in temporal resolution (about 60-120 seconds). Devices with good temporal resolution (magnetoencephalographs) have poor spatial resolution. According to most experts, the most promising technology is magnetoencephalography.

What limits its development? For many decades after the first magnetoencephalographs appeared, the weak magnetic fields generated by the brain were recorded using so-called SQUID sensors. These are highly sensitive superconducting magnetic sensors that can register magnetic fields more than three orders of magnitude weaker than the Earth's magnetic field. But wherever superconductivity appears in technology, super-high cost follows. Progress in creating high-temperature superconductors is still rather modest, which means that sensors of this type inevitably drag a bulky and expensive cooling system along with them.

Fortunately, in the early 2000s, two more technologies appeared.

The first is ferrite-garnet films; this technology is being developed quite actively in Russia. So far they are about two orders of magnitude less sensitive than SQUID sensors. The scientists developing ferrite-garnet technology say that it has the potential to surpass SQUID sensors in accuracy while remaining very inexpensive.

The second technology is SERF (spin-exchange relaxation-free) sensors. The accuracy of SERF technology is on a par with SQUID; it is cheaper, although not as cheap as ferrite-garnet films.

Images from the brain

How effectively can we get data out of the brain? We can already do quite a lot in this area. All neural interfaces can be divided into two large classes:

- invasive interfaces involve a physical connection between the interface and nervous tissue, i.e. intervention in the body;

- non-invasive interfaces are built on electroencephalography, magnetoencephalography and other remote methods of recording brain activity.

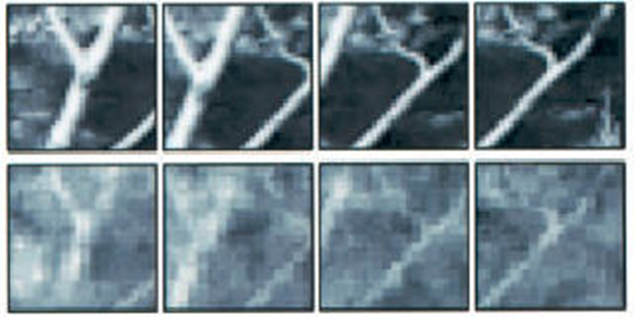

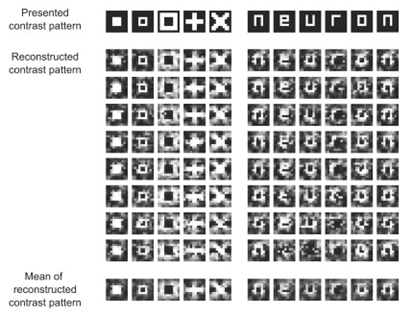

Here is a picture obtained from the brain of a cat. This work was done in 1999 at the University of California, Berkeley. A two-dimensional array of electrodes was implanted in the cat's lateral geniculate body (the brain structure that receives information directly from the retina) and used to record from 177 neurons. A single electrode can pick up the activity of a single cell.

And here is a later work: the same effect was achieved in 2008 using a non-invasive interface based on MRI. As we know, MRI does not have very good temporal resolution, so special digital processing methods were needed. A person was shown a set of simple pictures, which were then reconstructed from successive brain scans.

Image transfer to the brain

Transferring an image in the opposite direction, from the machine to the brain, is technically more difficult. The great interest in this task comes from its potential medical use in creating effective visual prostheses. The first successes were achieved quite a long time ago. In 1978 the researcher William Dobelle produced the first working prototype of an artificial vision device. It looked rather frightening: an array of 68 electrodes was implanted in the brain. In those years there were neither sufficiently light cameras nor high-performance microcomputers. To see, the first patient (a man named Jerry) was connected to a mainframe, which processed the signal from the camera and converted it into a sequence of signals for the brain. A low-resolution black-and-white picture appeared in the brain, and the frame rate was very low; nevertheless, the system worked.

In 2002 the first commercial vision prosthetics program opened. Improved devices, successors of Dobelle's first apparatus, began to be implanted in patients on a commercial basis. The first group consisted of 16 patients. What did such a device make possible? Driving slowly, for example. One of Dobelle's best-known patients, Jens Naumann, showed that he could get behind the wheel of a car and drive slowly around the house. Jens could tell a tomato or a banana from an apple and even recognized large written characters.

True, the story of the first group of patients ended rather sadly. In 2004 Dobelle died quite unexpectedly. The patients of this private researcher were left without care. Their vision deteriorated. Jens Naumann lost his sight for the second time in his life.

This is an advertisement for a modern visual prosthesis. Prostheses superior to Dobelle's models are now available. Other researchers have been able to reproduce this technology in their laboratories.

And now a little about hearing, and about what we can do there today.

The video shows cochlear implants. In some cases they can restore hearing to people who were born without it or lost it through illness.

Closed loop feedback system

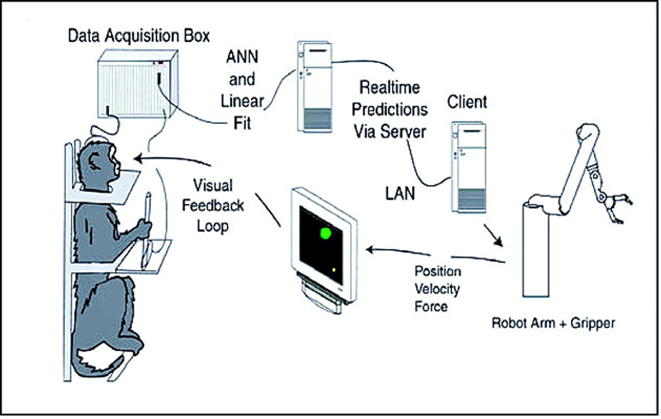

An even more interesting story is limb prosthetics, which connects us to external devices operating in the real world. Miguel Nicolelis, a prominent pioneer in this field, demonstrated the first system with a closed feedback loop. His experiments used rhesus monkeys and an invasive interface, an array of electrodes implanted in the motor cortex. The data is collected, converted, filtered and passed to the manipulator.

At the same time, the monkey can see its own manipulations. Before Nicolelis, all such devices worked one way only: data was transferred from the motor cortex to the device, but not back. Here the loop was closed completely.

Alternatives

The task of transferring human consciousness to a machine is today mostly an engineering one. Critics may point out that we do not yet have a fully functioning system, and ask whether such a system, when and if it is created, will really prove indistinguishable from the original human consciousness. Will it be an intelligence in a machine or a very weak and unsuccessful copy?

Another, quite marginal point of view is that scientists are mistaken in believing that human consciousness and personality reduce to the electromagnetic activity of the brain. Natalia Bekhtereva, granddaughter of the well-known physiologist V. M. Bekhterev and long-time director of the Institute of the Human Brain of the Russian Academy of Sciences, stated that consciousness exists in subtle realms and the brain is just a receiving device, a kind of antenna. Of course, from the point of view of modern science this sounds extremely naive and is not supported by experiments. The artificial neural networks that we create are fully capable of solving complex intellectual problems, not to mention the fact that the Blue Brain project has shown that at least part of the brain can be reproduced. Most scientists believe uploading is technically possible in the near future. Some enthusiasts, such as Yan Korchmaryuk, even propose making research and engineering work in this direction a separate discipline, the so-called “settleretics”.

In addition to naive objections to the possibility of uploading consciousness into a machine, there are also quasi-scientific counter-arguments. For example, critics sometimes say that quantum-level effects may play an important role in the brain: Heisenberg's uncertainty principle would then make it impossible to scan brain activity accurately enough and transfer consciousness to another medium without loss, because the nature of consciousness is quantum.

There are no serious reasons to believe that any quantum effects are at work in the brain (and, therefore, that an error on the order of Planck's constant would distort brain activity, consciousness and the psyche). However, this year it was suggested that the propagation of light signals through glial tissue may play a certain role in the brain; it could somewhat (but hardly significantly) lower the energy thresholds of information exchange. But light effects are not only a source of some skepticism. Optogenetics, which emerged as a research field in 2005, opens up broad prospects for creating invasive neural interfaces. It is a technique based on inserting special channels, opsins, which respond to excitation by light, into the membrane of nerve cells. Special viral vectors are used to express the channels, and lasers, optical fibers and other optical equipment are used for the subsequent activation or inhibition of neurons and their networks.

To be honest, I believe that the skeptics' position on uploading consciousness into a machine is just another incarnation of vitalism. In their day, when scientists first began to speak of the unity of the material world, adherents of the religious point of view tried to prove that living matter cannot be created artificially, that the barrier between inorganic and organic matter is insurmountable. And until the synthesis of organic substances was demonstrated in the laboratory, this view prevailed even among the educated people of the time.

Science has now pushed this boundary back very significantly, including in the creation of artificial living organisms (another project to create a working cell assembled from scratch was recently completed). Here it is difficult to argue with experiment and practice. But the desire to reserve for life, for consciousness, some area inaccessible to science and human technology still exists. Some people really do not want our technology to be applied to ourselves and our consciousness. What is the reason? Sometimes it seems to people that by explaining something we thereby destroy its holiness, its sacredness.

Source: https://habr.com/ru/post/397671/
