The iBrain is already here, and it's already in your phone

An exclusive look at the use of artificial intelligence and machine learning at Apple

On July 30, 2014, Siri had a brain transplant.

Three years earlier, Apple had become the first of the major technology companies to build an AI assistant into its operating system. Siri was an adaptation of a third-party app that Apple had acquired, together with the company that developed it, in 2010. The first reviews were enthusiastic, but over the following months and years users grew annoyed with its flaws. Too often it misunderstood commands.

So on that July day, Apple switched Siri's voice recognition for US users to a system based on neural networks (the rest of the world followed on August 15, 2014). Some of the earlier techniques remained in service, including hidden Markov models, but the system now relied on machine learning techniques such as deep neural networks (DNNs), convolutional neural networks, long short-term memory units, gated recurrent units, and n-grams. After the upgrade Siri looked the same, but deep learning was now doing the work.

As is typical for behind-the-scenes updates, Apple did not publicize it. If users noticed anything, it was that Siri made fewer errors. Apple says the accuracy gains were stunning.

Eddy Cue, Senior Vice President of Internet Software and Services

“It was one of those cases where the improvement was so significant that I reran the test to make sure nobody had misplaced a decimal point,” says Eddy Cue, senior vice president of Internet Software and Services.

The story of Siri's transformation, reported here for the first time, may surprise AI specialists: not because neural networks improved the system, but because Apple did it so quietly. Until recently, when the company stepped up its hiring of AI specialists and bought a couple of specialized firms, observers considered Apple a laggard in the most exciting competition of our time: the race to exploit the rich possibilities of AI. Because Apple has always been reluctant to share what happens behind closed doors, AI connoisseurs had no idea what the company was doing in machine learning. “Apple is not part of the community,” says Jerry Kaplan, who teaches a course on the history of AI at Stanford. “Apple is the NSA of AI.” AI experts reasoned that if Apple were as serious about AI as Google or Facebook, everyone would know it.

“At Google, Facebook, and Microsoft you have the top people in machine learning,” says Oren Etzioni of the Allen Institute for AI. “Yes, Apple has hired some people. But name me the five leading AI specialists who work at Apple. They have speech recognition, but it's not clear where else machine learning helps them. Show me where ML is used in your products!”

“I'm from Missouri,” says Etzioni, who was actually born in Israel. “Show me.”

Apple recently did show where it uses machine learning in its products: not to Etzioni, but to me. I spent most of a day in a boardroom at One Infinite Loop in Cupertino, getting a primer on the company's work in AI and ML from Apple's top executives (Cue, senior vice president of worldwide marketing Phil Schiller, and senior vice president of software engineering Craig Federighi) and two scientists whose work was key to Siri's development. When we sat down, they handed me a two-page agenda listing Apple products and services that use machine learning, both those already shipping and those about to be released, as topics for discussion.

The message: We're already here. We're second to none. But we do things our own way.

If you use an iPhone, you have already encountered Apple's AI, and not just in the improved Siri. You encounter it when your phone identifies a caller who isn't in your contacts (but recently emailed you). Or when you swipe across the screen to bring up a list of apps, one of which you are very likely to open next. Or when you get a reminder about a meeting you never added to your calendar. Or when a pin for the hotel you booked appears on the map before you have typed in its address. Or when your phone shows you where you parked, even though you never asked. All of these features either appeared or were greatly improved through deep learning and neural networks.

Face recognition is powered by neural networks.

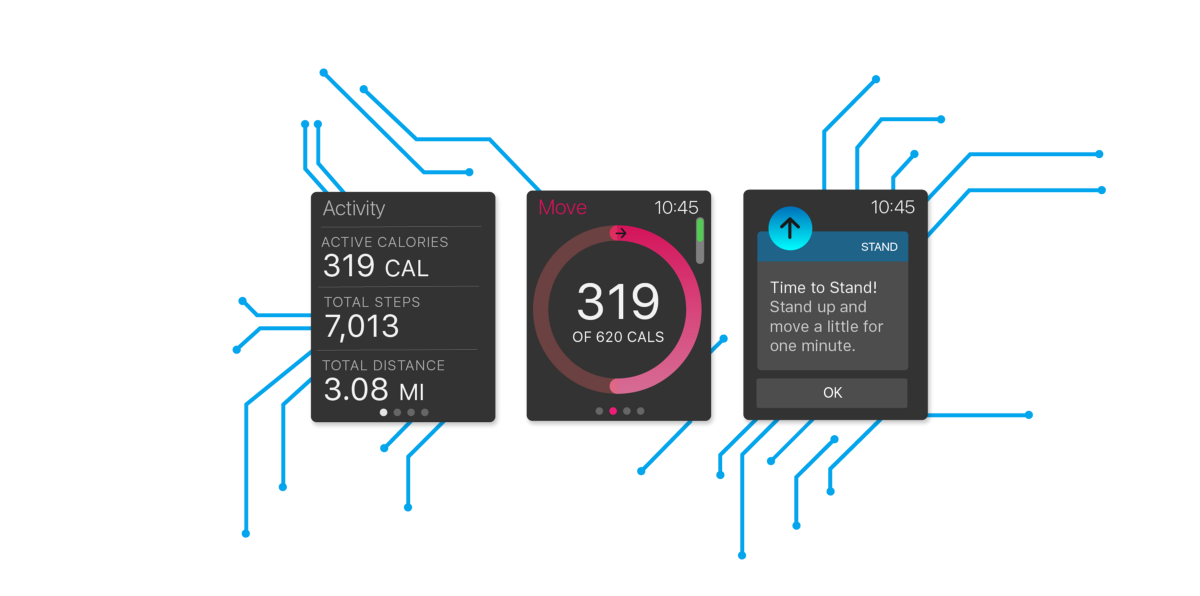

As my hosts explain, ML is already used in all of the company's products and services. Apple uses deep learning to detect fraud on the Apple store, to extend battery life, and to pick out the most useful feedback from beta testers. ML helps Apple choose news stories for you. It determines whether Apple Watch users are exercising or simply pacing back and forth. It recognizes faces and places in photos. It decides whether it's better to drop a weak Wi-Fi connection and switch to cellular. It can even make a movie, combining photos and videos into a short film at the touch of a button. The company's competitors do the same things, but according to Apple's executives, none of them can make these AI features work as well while protecting user privacy as thoroughly as Apple does. And, of course, none of them make products like Apple's.

AI is not new to the company. As early as the 1990s, Apple used some ML techniques in its handwriting recognition software (remember the Newton?). Remnants of those efforts live on in today's software that converts handwritten Chinese characters into text or recognizes letter-by-letter input on the Apple Watch. Both features were developed by the same team of ML specialists. Of course, machine learning used to be more primitive, and very few people knew about deep learning. Today these technologies are at their peak, and Apple bristles at suggestions that its ML work isn't serious. Now its executives are talking about it in more detail.

“Over the past five years we've watched this area grow inside the company,” says Phil Schiller. “Our devices keep getting smarter and faster, especially with our A-series chips. The back end is getting smarter and faster too, and everything we do is connected. That lets us use more and more ML techniques, because there is so much to learn from, and it's already available to us.”

And while Apple does its best to use ML to the fullest, its executives stress that there is nothing extraordinary about the process. The Cupertino bosses see deep learning and ML as just the latest in a long series of breakthrough technologies. Yes, it is changing the world, but no more than other breakthroughs such as touch screens, flat panels, or object-oriented programming. In Apple's view, ML is not the final frontier, whatever other companies may say. “It's not as if over the years there haven't been other technologies that were critical in changing the way we interact with devices,” says Cue. And no one at the company wants to go anywhere near the scary topics that invariably come up whenever AI is mentioned. As you'd expect, Apple won't confirm whether it is working on robots or on its own version of Netflix. But the team made it clear that Apple is not working on Skynet.

“We use these technologies to do the things we've long wanted to do, better than we could before,” says Schiller. “And to do new things we couldn't do at all. This technology will become the Apple way of achieving our goals as we evolve as a company and in how we make our products.”

But over the course of the briefing it becomes clear how much AI has already changed the way the Apple ecosystem works. AI experts believe Apple is held back by the lack of its own search engine (which could supply data for training neural networks) and by its unbending commitment to protecting user information (data that could otherwise be put to use). But it turns out Apple has already figured out how to overcome both obstacles.

And how big is this brain, the dynamic cache that makes ML on the iPhone possible? I was surprised that they answered: about 200 MB, depending on how much personal information is stored (older data is continually deleted). It includes information about app usage, interactions with people, neural network processing, speech generation, and “natural language event modeling.” It also holds data used by the neural networks that handle pattern recognition, face recognition, and scene classification.

And, according to Apple, all of this is done in a way that keeps your settings, preferences, and movements private.

Although I didn't get a full account of everything Apple does with AI, I did learn how the company spreads its ML expertise across the organization. ML talent is distributed throughout the company and is available to any developer, who is encouraged to draw on it to solve problems and invent new features for specific products. “We don't have a single centralized organization that's the Temple of ML at Apple,” says Craig Federighi. “We try to keep it close to the teams that need to apply it to deliver the right user experience.”

How many people at Apple work on ML? “A lot,” Federighi says after a pause. (If you thought he'd give me an exact number, you don't know Apple.) Interestingly, some of the people doing ML at Apple were not experts in the field before they joined. “We hire people who are very strong in mathematics, statistics, programming, and cryptography,” says Federighi. “It turns out that many of those talents transfer beautifully to ML. Although today we certainly hire ML specialists, we also bring in people with the right aptitude and talent.”

Craig Federighi, Senior Vice President of Software Engineering, listens to Alex Acero, Senior Director for Siri

Federighi doesn't say so, but this approach may stem from necessity: the company's secrecy may put it at a disadvantage against competitors that encourage their star researchers to publish their results. “Our practices tend to reinforce a natural selection: people who care most about working as a team and shipping a great product, versus people whose main motivation is publishing,” says Federighi. If researchers make a breakthrough in the field while improving Apple products, that's great. “But for us, what counts is the end result,” says Cue.

Some talent comes to the company through acquisitions. “Lately we've been buying 20 to 30 small companies a year, essentially to hire their people,” says Cue. When Apple buys an AI company, it isn't thinking “here's a crowd of ML researchers, let's build a whole stable of them,” says Federighi. “We're looking for talented people who are focused on delivering great experiences.”

The most recent acquisition is Turi, a Seattle company that Apple bought for $200 million. Turi built an ML toolkit comparable to Google's TensorFlow, and the purchase fueled speculation that Apple would use the technology for similar purposes, both internally and for developers. The executives neither confirm nor deny this. “Some of what they had fits very well with Apple, both in terms of technology and people,” says Cue. In a couple of years we may find out what happened, as we did when Siri began showing the predictive capabilities of Cue (no relation to Eddy), a product of the startup of the same name that Apple bought in 2013.

Wherever the talent comes from, Apple's AI infrastructure lets it build products and features that would not otherwise be possible. It is changing the company's product roadmap. “There's no end to the list of cool ideas at Apple,” says Schiller. “ML lets us take on things that in the past we wouldn't have touched. It's becoming part of how we decide which products we'll work on next.”

One example is the Apple Pencil, which works with the iPad Pro. To make this high-tech stylus work, the company had to solve a problem: when people write on the screen, the heel of the palm brushes against the touch screen and produces spurious touches. Using an ML model to reject those touches, the company taught the screen to tell a swipe, a tap, and stylus input apart. “If it doesn't work 100 percent, this is no longer good paper for me to write on, and the Pencil is a bad product,” says Federighi. If you like your Pencil, thank ML.

Perhaps the best measure of Apple's progress in ML is its most important acquisition, Siri. It began in DARPA's ambitious program on intelligent assistants, after which several of the scientists involved founded a company to build an app around the technology. Steve Jobs personally persuaded the founders to sell to Apple in 2010 and insisted that Siri be built into the operating system. Its launch was one of the highlights of the iPhone 4S event in October 2011. Today Siri does more than respond when the user holds down the Home button or says “Hey Siri” (a feature that itself uses ML, letting the iPhone listen for the phrase without draining much battery). Siri's intelligence is built into the Apple Brain and works even when Siri says nothing.

Cue describes the product's four main components: speech recognition, natural language understanding, execution, and response. “ML has had a big impact on all of these areas,” he says.

Head of Advanced Development Tom Gruber and Siri guru Alex Acero

Siri's advanced development is led by Tom Gruber, who came to Apple with the original acquisition (the company's co-founders left Apple in 2011). He says that even before neural networks were applied to Siri, the team had collected a huge amount of data from its user base. That data later proved key to training the neural networks. “Steve said we'd go overnight from a pilot app to hundreds of millions of people, without a beta program,” he says. “Suddenly we'd have users. We'd be told how people phrase the things that matter to our app. That was the first revolution. And then neural networks came along.”

Siri's move to neural networks for speech recognition coincided with the arrival of several AI experts, including Alex Acero, who now leads the speech team. He began his career in speech recognition at Apple in the early '90s and then spent many years at Microsoft Research. “I really enjoyed working there, and I published many papers,” he says. “But when Siri came out, I said, 'This is a chance to make deep neural networks a reality, to take them from something a few hundred people read about to something millions of people use.'” In other words, he was exactly the kind of scientist Apple was looking for: one who puts the product ahead of publications.

When Acero joined the company three years ago, Apple was still licensing much of Siri's speech technology from third-party vendors, a situation that was soon to change. Federighi notes that Apple follows this pattern repeatedly. “As it becomes clear that a technology area is critical to our ability to deliver great products over time, we build up our in-house capabilities to deliver the experience we want. To make it great, we need to own the technology and innovate internally. Speech is an excellent example of where we used external capabilities to get a project off the ground.”

The team began training a neural network to replace Siri's original system. “We had the coolest GPU farm running around the clock,” says Acero. “And we pumped a ton of data into it.” The July 2014 release proved that all that compute time had not been wasted.

“The error rate was cut in half in all languages, and in some cases by even more,” says Acero. “That was mostly due to deep learning and to how we optimized it: not just the algorithm itself, but the context in which it works within the end product.”

The mention of the end product is no accident. Apple is not the first company to use deep neural networks for speech recognition. But Apple argues that it has an advantage because it controls the entire chain of product development and delivery. Since Apple makes its own chips, Acero was able to work with the silicon design team and the engineers who write the device firmware to maximize neural network performance. The Siri team's needs even influenced the design of the iPhone.

“It's not just the silicon,” adds Federighi. “It's how many microphones we put on the device and where we place them. How we tune the hardware and the microphones, and the software that processes the audio. It's all of those pieces working together. That's a huge advantage over those who just write some software and see what it does.”

Another advantage: once a neural network works in one product, it can be adapted as the foundation for other features. The ML that helps Siri understand you becomes the engine behind dictation. Thanks to Siri's work, people find that their emails and messages come out better if they skip the on-screen keyboard, tap the microphone icon, and speak aloud.

The second Siri component Cue mentions is natural language understanding. Siri began using ML to understand user intent in November 2014 and released a deep learning version a year later. As with speech recognition, ML has improved the experience, especially by interpreting commands more flexibly. As an example, Cue picks up an iPhone and invokes Siri. “Send Jane twenty dollars with Square Cash,” he says. A screen appears summarizing his request. Then he phrases it differently: “Shoot twenty bucks to my wife.” The result is the same.

Apple says that without these improvements to Siri, the current Apple TV, with its sophisticated voice control, would hardly have been possible. Earlier versions of Siri made you speak clearly and deliberately, while the ML-powered version not only offers specific choices from a huge catalog of movies and songs but also understands concepts: “Show me a good thriller with Tom Hanks.” (If Siri were truly smart, it would rule out The Da Vinci Code.) “That simply couldn't have been done before this technology,” says Federighi.

With iOS 10, due out this fall, Siri's voice will become the last of the four components to be transformed by ML. Deep neural networks have replaced the previously licensed implementation. Essentially, Siri's responses are drawn from a database of recordings made in a voice studio, and each sentence is assembled from pieces. According to Gruber, machine learning smooths out the rough edges and makes Siri sound much more like a real person.
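For readers curious what “assembling a sentence from pieces” can mean computationally, here is a toy sketch of classic unit-selection synthesis in Python, offered as an illustration rather than a description of Apple's system. It runs a Viterbi-style search that picks one recorded snippet per position, trading off how well each snippet fits its slot (a target cost) against how smoothly neighboring snippets join (a join cost). The function name `select_units` and all cost values are hypothetical; in a learned system a trained model would supply those costs instead of hand-written numbers.

```python
from typing import Callable, List, Sequence, Tuple

def select_units(
    target_costs: Sequence[Sequence[float]],
    join_cost: Callable[[int, int, int], float],
) -> Tuple[List[int], float]:
    """Hypothetical unit-selection search (Viterbi over candidate snippets).

    target_costs[t][j]: cost of using candidate snippet j at position t.
    join_cost(t, i, j): cost of joining candidate i at t-1 to candidate j at t.
    Returns the chosen candidate index per position and the total cost.
    """
    if not target_costs:
        return [], 0.0
    best = list(target_costs[0])  # best[j]: cheapest path ending in candidate j
    back: List[List[int]] = []    # back-pointers for each position after the first
    for t in range(1, len(target_costs)):
        new_best, pointers = [], []
        for j, tc in enumerate(target_costs[t]):
            # Score every predecessor, including the cost of the join itself.
            scores = [best[i] + join_cost(t, i, j) for i in range(len(best))]
            i_best = min(range(len(scores)), key=scores.__getitem__)
            new_best.append(scores[i_best] + tc)
            pointers.append(i_best)
        best = new_best
        back.append(pointers)
    # Start from the cheapest final candidate and follow the back-pointers.
    j = min(range(len(best)), key=best.__getitem__)
    total = best[j]
    path = [j]
    for pointers in reversed(back):
        j = pointers[j]
        path.append(j)
    path.reverse()
    return path, total

if __name__ == "__main__":
    # Hypothetical costs: 3 positions, 2 recorded candidates each;
    # switching between recordings costs 1.0, staying costs nothing.
    costs = [[1.0, 0.5], [0.2, 0.9], [0.4, 0.3]]
    print(select_units(costs, lambda t, i, j: 0.0 if i == j else 1.0))
```

The join cost is what rewards smooth transitions. In this toy, a candidate that is individually cheap can still lose if stitching it to its neighbors sounds rough.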

Acero gives a demonstration: first the familiar Siri voice, with the robotic notes we're all used to. Then the new one, saying “Hello, how can I help you?” with captivating smoothness. What's the difference? “Deep learning, baby,” he says.

It seems like a small detail, but a more natural voice can make a big difference. “People trust a higher-quality voice more,” says Gruber. “A better voice draws the user in and gets them to use it more. So the returns keep increasing.”

People's willingness to use Siri, and all the improvements ML has brought to it, matter even more now that Apple is finally opening Siri to third-party developers, a move critics say was long overdue. Many have noted that Apple, with dozens of Siri partners, lags behind systems such as Amazon's Alexa, which boasts thousands of third-party capabilities. Apple says the comparison is unfair, because Amazon's users have to phrase their requests in a special language. Siri will fold in services like Square Cash or Uber more naturally. (Another competitor, Viv, created by other Siri co-founders, also promises third-party integration, though its release date has not yet been announced.)

Perhaps the company's biggest challenge in ML is succeeding while staying true to its principles on user privacy. Apple encrypts user data so that no one, not even Apple's lawyers, can read it (nor can the FBI, even with a warrant). And the company prides itself on not collecting user data for advertising purposes.

While this rigor is admirable from the user's point of view, it doesn't help lure top AI talent to the company. “ML experts need data,” says a former Apple employee who now works at an AI company. “But by taking this privacy stance, Apple forces you to work with one hand tied behind your back. You can argue whether that's right or not, but the result is that Apple doesn't have a reputation as a place for really hard-core AI people.”

There are two problems. The first is handling personal information in ML-based systems: when a user's personal data passes through the neural network's millstones, what happens to it? The second is gathering the information needed to train neural networks to recognize behavior. How can that be done without collecting users' personal information?

Apple believes it has solutions. “Some people think we can't do this with AI because we don't have the data,” says Cue. “But we've found ways to get the data we need while preserving privacy. That's the bottom line.”

Apple tackles the first problem by taking advantage of its control over both software and hardware. The most personal information stays inside the Apple Brain. “We keep the most sensitive data where the ML happens, locally on the device,” says Federighi. As an example he cites app suggestions, the icons that appear when you swipe right. These predictions are based on many factors, some of which depend solely on your own behavior. And they work: Federighi says that 90 percent of the time people find what they need among these suggestions. Apple performs all of these calculations directly on the phone.

The device also stores what may be the most personal information the company handles: the words people type on the iPhone's QuickType keyboard. Using a neural network that watches your typing, Apple can detect key events and items such as flight information, contacts, and appointments, but the information itself stays on your phone. Even in backups stored in the cloud, it is distilled in such a way that it can't be recovered from a backup alone. “We don't want that information stored on our servers,” says Federighi. “Apple doesn't need to know your habits, or where you go and when.”

Apple tries to keep as little information as possible. Federighi gives the example of a conversation in which someone mentions a term worth searching for. Other companies might analyze the entire conversation in the cloud to find such terms, but an Apple device can spot them without the data ever leaving the user, because the system constantly looks for matches against a knowledge base stored on the phone (part of that 200 MB “brain”).

“It's a compact but fairly exhaustive knowledge base, with hundreds of thousands of locations and entities. We localize it, because we know where you are,” says Federighi. The knowledge base is available to all of Apple's apps, including the Spotlight search engine, Maps, and Safari. It also helps with autocorrect. “And it's constantly working in the background,” he says.
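As a rough illustration of matching text against a local knowledge base without sending it anywhere, the Python sketch below scans a sentence for known place and entity names held in an in-memory set, preferring the longest match at each position. It is a toy built on assumptions: Apple has not described its matching method here, and `find_entities` and the sample entries are hypothetical. A real on-device system would also need to handle spelling variants, localization, and ranking.

```python
import re
from typing import List, Set

def find_entities(text: str, knowledge_base: Set[str], max_words: int = 3) -> List[str]:
    """Hypothetical on-device matcher: return phrases (up to max_words words)
    from text that appear in a locally stored knowledge base.
    Nothing leaves the process; the lookup is a plain in-memory set check."""
    words = re.findall(r"[\w']+", text.lower())
    found: List[str] = []
    i = 0
    while i < len(words):
        match_len = 0
        # Try the longest span first so "golden gate park" wins over "golden gate".
        for n in range(min(max_words, len(words) - i), 0, -1):
            phrase = " ".join(words[i:i + n])
            if phrase in knowledge_base:
                found.append(phrase)
                match_len = n
                break
        # Skip past a match, or advance one word if nothing matched here.
        i += match_len if match_len else 1
    return found

if __name__ == "__main__":
    kb = {"golden gate park", "golden gate", "uber"}  # hypothetical entries
    print(find_entities("Let's meet at Golden Gate Park, I'll take an Uber.", kb))
```

Because the knowledge base is shipped to the device, the conversation itself never has to be uploaded for this kind of lookup.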

Starting with iOS 10, Apple will use a new technique called differential privacy, which lets it crowdsource information in a way that doesn't identify individuals. That might include newly popular words that aren't yet in its knowledge base, links that surface more relevant answers to queries, or trends in emoji use. “The industry's usual approach is to send every word and character you type to the company's servers, so they can trawl through it and notice something interesting,” says Federighi. “We encrypt end to end, so we don't do that.” Although differential privacy originated in the research community, Apple is trying to bring it to the masses. “We're taking it from research to a billion users,” says Cue.
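Apple does not describe its mechanism in detail here. One classic and much simpler technique from the same family is randomized response, a form of local differential privacy. Each device randomly flips its true answer with a known probability before reporting it, so no single report reveals much about its owner. The server can still estimate how common an answer is across many users. The Python sketch below applies that idea to a yes/no question such as “did this user type a particular new word?” The function names, the `epsilon` value, and the simulated population are hypothetical, and this should not be read as Apple's actual algorithm.

```python
import math
import random
from typing import Sequence

def keep_probability(epsilon: float) -> float:
    """Probability of reporting the true bit. With p = e^eps / (1 + e^eps),
    the ratio p / (1 - p) equals e^eps, so each report is epsilon-differentially private."""
    return math.exp(epsilon) / (1.0 + math.exp(epsilon))

def randomize_bit(true_bit: bool, epsilon: float, rng: random.Random) -> bool:
    """Runs on the device: only this noisy report is ever sent."""
    return true_bit if rng.random() < keep_probability(epsilon) else not true_bit

def estimate_frequency(reports: Sequence[bool], epsilon: float) -> float:
    """Runs on the server: unbiased estimate of the true share of 'yes' answers.
    E[observed] = f * p + (1 - f) * (1 - p), solved for f. Requires epsilon > 0."""
    p = keep_probability(epsilon)
    observed = sum(reports) / len(reports)
    return (observed - (1.0 - p)) / (2.0 * p - 1.0)

if __name__ == "__main__":
    rng = random.Random(0)
    # Hypothetical population: 30,000 of 100,000 users typed the new word.
    truth = [i < 30_000 for i in range(100_000)]
    reports = [randomize_bit(b, epsilon=1.0, rng=rng) for b in truth]
    print(f"estimated share: {estimate_frequency(reports, epsilon=1.0):.3f}")
```

A smaller `epsilon` gives stronger privacy but noisier estimates. `epsilon` must be positive: at zero, every report is a fair coin flip and the estimator would divide by zero.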

While it's clear that ML has changed Apple's products, it's less clear whether it has changed Apple itself. In a sense, the ML approach is at odds with the company's ethos. Apple carefully controls the user experience, right down to the sensors that measure touches on the screen. Everything is planned in advance and precisely coded. But with ML, engineers have to step back and let the software find solutions on its own. Can Apple adapt to a reality in which ML systems may themselves take part in product design?

“This is a source of a lot of internal debate,” says Federighi. “We're used to delivering a very thoughtful, curated experience in which we control every dimension of how the system interacts with the user. When you start training a system on a large set of data about human behavior, the results may not match the designer's intent. They emerge from the data.”

But Apple isn't going to back down, says Schiller. “Although these technologies certainly affect how we design products, in the end we use them to make better products.”

So Apple may not announce that it is diving headfirst into ML, but it will use as much ML as it can to improve its products. The brain in your phone is proof.

“The typical user will experience deep learning every day, and it's an example of what you love about Apple products,” says Schiller. “The coolest moments are so subtle that you only think about them after the third time you encounter them, and then you stop and say, 'How is this possible?'”

Skynet can wait.

Source: https://habr.com/ru/post/397331/
