Revolution AI 101

This article examines the current state of artificial intelligence development, the challenges and threats it poses, and the views of the most prominent scientists in the field, and it describes the main predictions of when and how AI may arrive. It is a revised and abridged version of a two-part essay written by Tim Urban for "Wait But Why".

Introduction

If we assume that humanity's scientific activity will continue without major disruptions, the emergence of AI may be the most positive change in our history. Or, as many fear, our most dangerous invention. Today, AI research is steadily moving toward a computer whose cognitive abilities are not inferior to those of the human brain, and most likely we will be able to create one within 30 years (see the timeline in Chapter 5). According to most scientists working on AI, this achievement may in turn lead to Artificial Superintelligence (ASI), an entity whose mind surpasses the combined intellect of all people (read more about this in Chapter 3). This is not a distant, foggy future. The first stage of AI already shows up in the technologies we use every day (for the latest achievements in the field, see Chapter 2). Every year, achievements accumulate into a critical mass that accelerates development and makes technologies more sophisticated, widespread and accessible. We will entrust more and more intellectual work to computers and build them into every aspect of our lives, including how we organize our work, form communities and communicate with the world.

Exponential growth

The guiding principle of technical progress

To better understand the guiding principles of the AI revolution, let's step away from scientific research for a moment. Imagine that you have a time machine and want to bring someone from the past to the present so that this person is left speechless by our technological and cultural achievements.


Suppose you decide to go back 200 years. You travel to the early 1800s, grab a peasant and bring him to 2016. You drive him around and watch how he reacts to everything he sees. We cannot imagine how he perceives shiny capsules racing along the roads; conversations with people on the other side of the ocean; a sports game watched 1,000 km away from where it is played; a concert that took place 50 years ago; a magic rectangle that can store images and moving pictures and show a small dot marking your location on a map; a face-to-face conversation with someone at the other end of the country; and much more. It would not take long: within a couple of minutes, the guest from the past would go completely nuts.

Now you both want to see how a person from the 1600s would react to the achievements of the 1800s. You travel back, grab the first person you meet and bring him 200 years forward, to the 19th century. He finds the world around him very interesting, but you realize that he is not shocked by what he sees. To shock someone with the 19th century, you need to go back much more than 200 years. So why hold back: you go 15,000 years into the past, to the days before the First Agricultural Revolution, which gave rise to the first cities and the very concept of civilization. You find a hunter-gatherer and show him the vast empires of the 1750s, with their church towers, their ships able to cross oceans, their very idea of being "indoors", and their huge accumulations of knowledge and discoveries in the form of books. Again, it would not take long: within a couple of minutes, the guest from the past would go completely nuts.

Now the three of you decide to do the same thing again. You realize that going back 15,000, 30,000 or 45,000 years makes no sense; you have to jump much further. You find a man who lived 100,000 years ago and give him a tour of tribes with a complex social hierarchy. He sees a variety of hunting weapons, sophisticated tools and fire, and for the first time he hears language in the form of signs and sounds. As you can imagine, all this blows his mind, and within a couple of minutes he goes completely nuts.

What happened? Why did we have to go much further back into the past each time (200 → 15,000 → 100,000 years)?

Visualization

This is because more advanced societies progress faster than less advanced ones. In the 1800s, humanity knew much more than it did 15,000 years earlier, so it is not surprising that it developed much faster. And for us today to be just as shocked by the future, it would be enough to move less than 200 years ahead.

Ray Kurzweil, a scientist and AI specialist, says that "from 2000 to 2014 we saw the same amount of progress as in the entire 20th century. The same amount again will be achieved by 2021, in just seven years. In another 20 years, we will make several 20th centuries' worth of progress in a single year, and later that period will shrink to six months." Kurzweil believes that by the end of the 21st century, progress will exceed that of the 20th century by 1,000 times.

Logic suggests that if the most advanced species on the planet keeps moving forward at an ever-increasing pace, at some point progress will completely change its view of life and of what it means to be human, just as evolution, making ever greater leaps toward intelligence, eventually produced the human being and thereby changed what it means for any creature to live on Earth. And if you spend some time studying the current state of science and technology, you will notice many signs that life as we know it will not survive our next breakthrough unchanged.

The path to artificial general intelligence

Creating computers that are not inferior to people in intelligence

AI is a general term for describing computer intelligence technologies. Despite the diversity of opinions on this issue, most experts believe that there are three categories of AI.

Narrow Artificial Intelligence (ANI, Artificial Narrow Intelligence)

AI of the first category. It specializes in one particular area. For example, there is an AI capable of defeating the world chess champion, but that is the only thing it can do.

General Artificial Intelligence (AGI, Artificial General Intelligence)

AI of the second category. In terms of intelligence, it reaches or surpasses a human, that is, it is able to "reason, plan, solve problems, think abstractly, comprehend complex ideas, learn quickly and learn from experience."

Artificial Superintelligence (ASI, Artificial Super Intelligence)

AI of the third category. It is smarter than all of humanity combined, anywhere from "just a little smarter" to "a trillion times smarter."

Current situation

At the moment, humanity has created AI of the first category, and it is used everywhere:

- Cars are full of ANI systems, from the computer that decides when the anti-lock brakes (ABS) should kick in to the computer that tunes the fuel injection parameters.

- The Google search engine is one big ANI with incredibly sophisticated methods for ranking pages and deciding what content to show. The same goes for the Facebook news feed.

- Email spam filters use ANI to detect spam. Such an AI learns on its own and adapts to your preferences and habits.

- Passenger airliners are flown almost entirely by ANI rather than by people.

- Google's self-driving car, currently in testing, uses powerful ANI systems to perceive and respond to its environment.

- Your smartphone is a small ANI factory: you use map apps, get recommendations based on your preferences, check tomorrow's weather and talk to Siri.

- The best players in checkers, chess, Scrabble, Backgammon and Othello are exclusively ANI systems.

- Sophisticated ANI systems are widely used in manufacturing, the military and finance (today, more than half of the shares traded on American markets are traded by AI programs).

Modern ANI systems are not particularly alarming. In the worst case, a buggy or maliciously programmed ANI can cause an isolated catastrophe such as a plane crash, a nuclear power plant failure or a market crash (like the 2010 Flash Crash, when an ANI program reacted incorrectly to an unexpected situation and the stock market briefly plunged, wiping out about one trillion dollars in market value; only part of the loss was recovered after the error was corrected). For now, ANI cannot threaten our existence, but we should not ignore the fact that an ever-growing and increasingly complex ecosystem of relatively safe ANI is a harbinger of global change. Each innovation in ANI quietly adds to the common pool, becoming another stone on the road toward AGI and ASI.


This is how Google's self-driving car sees the world. Based on the Embedded Linux Conference 2013 video "Google's Self Driving Cars".

What's next? The challenges associated with the creation of AGI

Nothing will make you appreciate human intelligence more than realizing how incredibly hard it is to build a computer that is as smart as we are. Building a computer that can multiply ten-digit numbers in a split second is extremely easy. Building one that can look at an animal and tell whether it is a dog or a cat is extremely hard. An AI capable of defeating any human at chess? Done. An AI capable of reading a paragraph from a picture book for six-year-olds and understanding what it means? Google is currently spending billions of dollars on that task.

Why are things that are hard for us, such as calculation, financial market strategy and translation, astonishingly easy for computers, while things that are easy for us, such as vision, motion, movement and perception, are incredibly hard for them?

What seems simple to us actually involves incredibly complex processes; they only feel simple because hundreds of millions of years of evolution have optimized them for us (and for most animals). When you reach for an object, the muscles, tendons and bones of your shoulder, elbow and wrist instantly perform a long sequence of physical operations, guided by your eyes, so that your hand moves exactly as needed in three dimensions. Multiplying long numbers or playing chess, on the other hand, are new activities for biological creatures: we have not had time to adapt to them, so a computer does not need to work very hard to beat us.

Here is a funny example:

Looking at picture A, both you and the computer will determine that it shows a rectangle of alternating fragments of two colors.

Looking at picture B, you will easily describe a set of opaque and translucent shapes, but the computer will fail miserably. It will describe what it actually sees, a combination of two-dimensional shapes in several shades, and it will be absolutely right. Our brain simply does a great job of interpreting the implied depth of the scene, the mixed shadows and the overlapping light.

Looking at picture C, the computer sees a two-dimensional collage of white, black and gray spots, while you easily recognize what is actually depicted - a photograph of a girl and a dog on a rocky shore.

And all of the above concerns only visual information and its processing. To be as intelligent as a human, a computer must also, for example, recognize different facial expressions and understand the subtle difference between emotions such as feeling pleased and feeling relieved. And how will computers achieve even higher abilities, such as complex reasoning, interpreting information and drawing connections between different fields of knowledge? It turned out to be much easier to build skyscrapers, send people into space and work out the details of the Big Bang than to understand how our own brain works and how to make something that works just as well. Today, the human brain is considered the most complex object in the known universe.

Hardware development

If an AI is to be as intelligent as the human brain, it is critically important to give it comparable computing resources. These can be expressed as the number of calculations the brain can perform per second (CPS, calculations per second).

The main challenge is that so far we have managed to measure precisely the work of only certain regions of the brain. However, Ray Kurzweil developed a method for estimating the total CPS. He took the CPS of one region and scaled it up in proportion to the weight of the whole brain. He did this repeatedly, using various professional estimates for various regions, and each time he arrived at about the same value: 10^16 CPS, or 10 quadrillion CPS.
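Kurzweil's extrapolation can be written as a simple proportion. This is a sketch of the idea as described above; the symbols are introduced here for illustration only: $C_{\text{region}}$ is the measured CPS of one brain region, and $m_{\text{region}}$ and $m_{\text{brain}}$ are the weights of that region and of the whole brain.

$$
C_{\text{brain}} \approx C_{\text{region}} \times \frac{m_{\text{brain}}}{m_{\text{region}}} \approx 10^{16}\ \text{CPS}
$$

The estimate assumes that computation is spread roughly evenly by weight across the brain, which is why repeating it for different regions serves as a consistency check.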

One of the fastest modern supercomputers, China's Tianhe-2, has already exceeded this figure, reaching about 34 quadrillion CPS. But this monster occupies 720 square meters, draws 24 megawatts of power (the human brain uses about 20 watts) and cost $390 million to build. So it is hardly suitable for widespread use, or even for most commercial and industrial applications.
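The comparison above hides a striking gap in energy efficiency. A minimal sketch that divides each system's CPS by its power draw, using only the figures quoted in the text (about 34 quadrillion CPS at 24 megawatts for Tianhe-2, about 10 quadrillion CPS at about 20 watts for the brain); the constant and function names are illustrative:

```python
# Energy-efficiency comparison using the rough figures quoted in the text.
TIANHE2_CPS = 34e15     # about 34 quadrillion calculations per second
TIANHE2_WATTS = 24e6    # about 24 megawatts of power draw
BRAIN_CPS = 1e16        # Kurzweil's estimate for the human brain
BRAIN_WATTS = 20.0      # approximate power use of the human brain


def cps_per_watt(cps: float, watts: float) -> float:
    """Return how many calculations per second are obtained per watt."""
    return cps / watts


tianhe = cps_per_watt(TIANHE2_CPS, TIANHE2_WATTS)
brain = cps_per_watt(BRAIN_CPS, BRAIN_WATTS)
advantage = brain / tianhe  # how many times more efficient the brain is

print(f"Tianhe-2: {tianhe:.2e} CPS per watt")
print(f"Brain:    {brain:.2e} CPS per watt")
print(f"The brain is roughly {advantage:,.0f} times more energy-efficient")
```

With these inputs the brain comes out roughly 350,000 times more energy-efficient. The result is only as good as the rough 10^16 CPS estimate, so it is best read as an order of magnitude.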

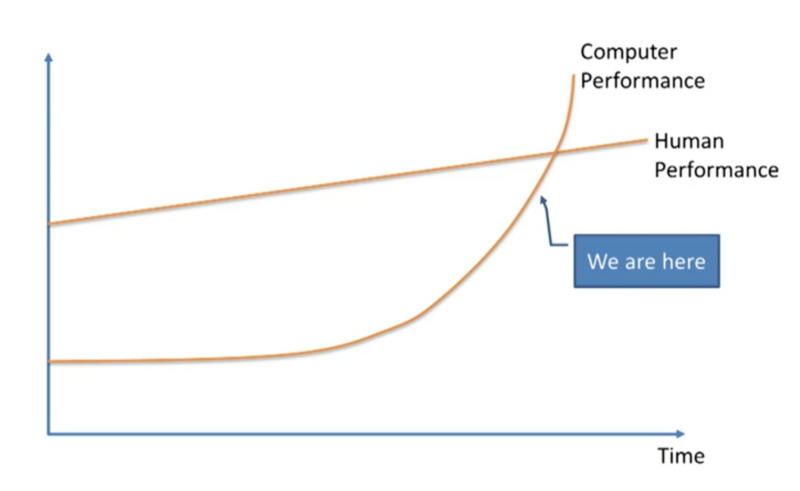

Kurzweil suggests evaluating computers by how many CPS $1,000 can buy. When that money buys 10 quadrillion CPS, AGI will become a real part of our lives. Today, $1,000 buys about 10^13 CPS, or 10 trillion. Moore's law, a time-tested rule, states that the world's maximum computing power doubles approximately every two years, which means that hardware, like human progress, grows exponentially (although according to recent estimates, Moore's law may stop working within about five years as it runs into fundamental physical limits). In accordance with this projected schedule:


This visualization is based on Kurzweil's graph and analysis from his book The Singularity Is Near.

These dynamics suggest that computers with performance comparable to the human brain will appear around 2025. But computing power alone will not make a computer intelligent. So the next question is: "How do we give all that computing power a mind?"
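How soon a $1,000 computer reaches brain-level CPS depends heavily on the assumed doubling period. A minimal sketch, assuming a starting point of 10 trillion (10^13) CPS per $1,000, a target of 10^16 CPS and a constant doubling period; real price-performance trends are not constant, and the function name is illustrative:

```python
import math


def years_until(current: float, target: float, doubling_years: float) -> float:
    """Years needed for `current` to reach `target` if it doubles every
    `doubling_years` years (constant exponential growth is assumed)."""
    doublings = math.log2(target / current)
    return doublings * doubling_years


START_CPS_PER_1000_USD = 1e13  # "about 10 trillion CPS" per $1,000
BRAIN_CPS = 1e16               # Kurzweil's estimate for the human brain

for period in (1.0, 2.0):
    years = years_until(START_CPS_PER_1000_USD, BRAIN_CPS, period)
    print(f"doubling every {period:g} year(s): about {years:.0f} years")
```

A thousandfold gap is about ten doublings, so the answer is roughly ten years if price-performance doubles every year and roughly twenty with Moore's two-year doubling. The exact doubling rate behind the projection above is not stated in the text.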

Software creation

One of the hardest tasks in creating AGI is writing the necessary software. The fact is that nobody knows how to make a computer smart. We are still debating how to give a computer human intelligence, so that it knows what a "dog" is, can recognize a crookedly written letter B and can judge a film to be mediocre. However, there are several basic approaches to this task.

- Copying the work of the human brain.

The brute-force solution is to copy the architecture of the brain and build a computer that closely follows it. One example is an artificial neural network. Initially, it is a network of transistor "neurons" connected to each other through inputs and outputs. Such a network knows nothing, just like a baby's brain. It "learns" by attempting some task, say, handwriting recognition. At first, its neurons fire and its attempts to decipher each letter are completely random. But whenever the network achieves a positive result, the connections that led to it are strengthened, and the connections that led to negative results are weakened. After many rounds of trial and error, the network forms stable patterns of connections and becomes optimized for a specific task.
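The idea of strengthening connections that lead to correct results and weakening those that lead to errors can be sketched with a single artificial neuron. This minimal sketch uses the classic perceptron learning rule as a stand-in; the function names and the toy task (logical AND instead of handwriting recognition) are illustrative assumptions:

```python
def predict(weights, bias, inputs):
    """Fire (1) if the weighted sum of the inputs exceeds zero, else stay silent (0)."""
    activation = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1 if activation > 0 else 0


def train_perceptron(samples, epochs=20, learning_rate=1.0):
    """Train one neuron with the perceptron rule.

    samples: list of (inputs, target) pairs, where target is 0 or 1.
    Returns the learned connection weights and bias.
    """
    n_inputs = len(samples[0][0])
    # All connection strengths start at zero: the network "knows nothing".
    weights = [0.0] * n_inputs
    bias = 0.0
    for _ in range(epochs):
        for inputs, target in samples:
            error = target - predict(weights, bias, inputs)  # +1, 0 or -1
            # Strengthen connections that should have pushed toward the right
            # answer and weaken those that pushed toward the wrong one.
            weights = [w + learning_rate * error * x for w, x in zip(weights, inputs)]
            bias += learning_rate * error
    return weights, bias


# Toy task: learn logical AND from examples.
AND_SAMPLES = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
weights, bias = train_perceptron(AND_SAMPLES)
for inputs, target in AND_SAMPLES:
    print(inputs, "->", predict(weights, bias, inputs), "expected", target)
```

A single neuron can only learn simple, linearly separable tasks like this one; real networks stack many such units and use more sophisticated training methods.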

There is a more radical approach: whole brain emulation. Scientists take a real brain, slice it into a huge number of thin layers, map its neural connections and reproduce them in software. If this approach succeeds, we will get a computer that can perform the same tasks as the human brain; all that remains is to let it learn and gather information. How far are we from whole brain emulation? Quite far: so far we have only managed to emulate the brain of a 1-mm roundworm, which consists of just 302 neurons. For comparison, the human brain consists of about 86 billion neurons connected to each other by trillions of synapses.

- Making computers evolve.

Even if we can emulate the brain, that may be like trying to build an airplane by copying the flapping of a bird's wings: machines are often best designed with new, machine-oriented approaches rather than by copying biology. And if the brain is too complex to reproduce digitally, we can emulate the process of evolution instead. This is what so-called "genetic algorithms" do. Suppose a group of computers tries to perform some task; the most successful ones are crossed with each other, each passing on half of its program code to create a new computer, and the least successful are eliminated from the process. Speed and goal-directedness are the advantages of artificial evolution over biological evolution. Over many iterations, this selection process can produce ever more sophisticated computers. The challenge is to automate the process so that artificial evolution can continue without human intervention.

- Letting the computer solve all the problems, not us.

This last approach is the simplest and the most frightening. We would build a computer whose two main tasks are to research AI and to change its own code, so that it not only learns how to improve its architecture but also puts those improvements into practice. In other words, the idea is to turn the computer into a computer scientist that drives its own development. This may be the most promising way to produce AGI.
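The evolutionary approach described above can be sketched as a genetic algorithm. This is a toy illustration, not a method for producing AGI: the candidates are bit strings rather than programs, and the task (maximize the number of 1 bits), population size and mutation rate are arbitrary assumptions. Each generation keeps the most successful half, eliminates the rest and refills the population with children that take half their code from each of two parents:

```python
import random

GENOME_LENGTH = 32  # length of each candidate "program" (a bit string here)


def fitness(genome):
    """How well a candidate performs the task: the number of 1 bits
    (the toy "OneMax" problem, standing in for a real task)."""
    return sum(genome)


def crossover(parent_a, parent_b):
    """Build a child from the first half of one parent and the second half
    of the other, mirroring "passing on half of its program code"."""
    middle = len(parent_a) // 2
    return parent_a[:middle] + parent_b[middle:]


def mutate(genome, rate, rng):
    """Flip each bit with a small probability to introduce variation."""
    return [(1 - bit) if rng.random() < rate else bit for bit in genome]


def evolve(population_size=20, generations=50, mutation_rate=0.02, seed=1):
    rng = random.Random(seed)
    population = [[rng.randint(0, 1) for _ in range(GENOME_LENGTH)]
                  for _ in range(population_size)]
    best_history = []
    for _ in range(generations):
        # Rank candidates; the least successful half is eliminated.
        population.sort(key=fitness, reverse=True)
        survivors = population[: population_size // 2]
        best_history.append(fitness(survivors[0]))
        # Refill the population with mutated children of random survivor pairs.
        children = []
        while len(survivors) + len(children) < population_size:
            a, b = rng.sample(survivors, 2)
            children.append(mutate(crossover(a, b), mutation_rate, rng))
        population = survivors + children
    population.sort(key=fitness, reverse=True)
    return population[0], best_history


best, history = evolve()
print("best fitness per generation:", history)
print("final best:", fitness(best), "out of", GENOME_LENGTH)
```

Because the surviving half is carried over unchanged, the best fitness can never decrease from one generation to the next, while mutation supplies variation that crossover alone cannot.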

Perhaps all these software developments seem too slow or too abstract, but in science a single small innovation can suddenly speed up the whole process. It is like the Copernican revolution: the discovery instantly simplified calculations of planetary orbits, which in turn led to new discoveries. So do not underestimate exponential growth: what looks like a crawling snail can quickly turn into an unstoppable race.

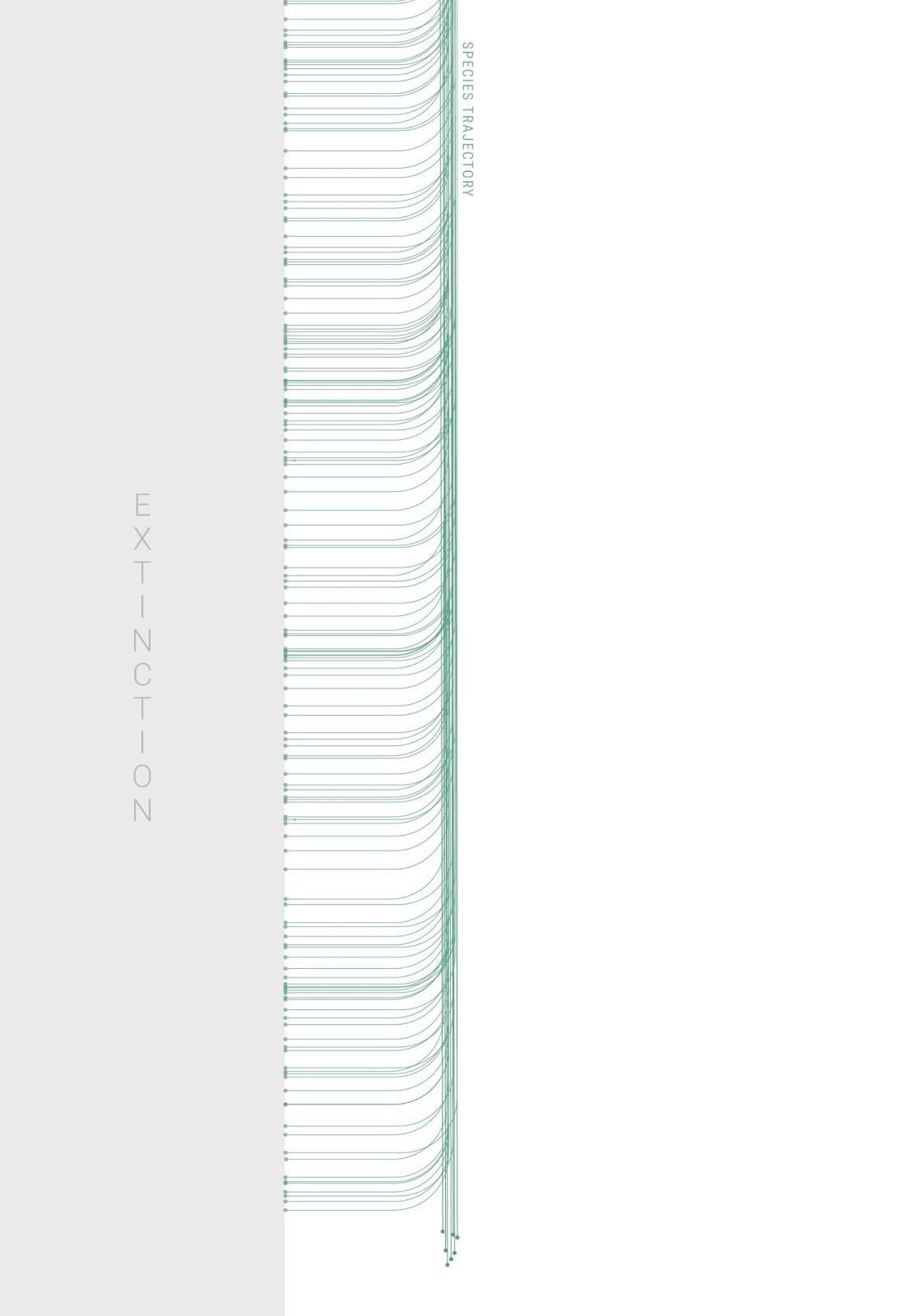

The visualization is based on a graph from "Welcome, Robot Overlords. Please Don't Fire Us?"

The path to artificial superintelligence

An entity that is smarter than all of humanity put together

At some point we are likely to create AGI: software whose general intelligence matches or exceeds that of humans. Does this mean that computers will then simply be our equals? Not at all: computers will be far more efficient. Thanks to their electronic nature, they will have several advantages:

- Speed. Brain neurons operate at about 200 Hz, while modern processors run at about 2 GHz on average, that is, 10 million times faster.

- Memory. In the digital world it is much harder to forget or mix up facts. A computer can memorize more in a second than a person can in ten years. Computer memory is also far more precise and has much greater capacity.

- Reliability. Transistors are more precise than neurons and less likely to fail (and they can be repaired or replaced). The human brain tires quickly, whereas computers can work at peak performance without stopping.

- Collective abilities. Because of the nature of interpersonal interaction and the complexity of social hierarchies, group work among people can be extremely inefficient, and the larger the group, the less each member contributes. AI has none of these biological limitations and none of the problems typical of human groups, and it can synchronize and update its own OS.
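The speed comparison in the first point reduces to a single ratio of frequencies (a simplification, since a neuron's firing rate and a processor's clock rate do not measure exactly the same thing):

$$
\frac{2\ \text{GHz}}{200\ \text{Hz}} = \frac{2 \times 10^{9}\ \text{Hz}}{2 \times 10^{2}\ \text{Hz}} = 10^{7}
$$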

Intelligence explosion

It is important to understand that human-level intelligence will not be a significant milestone for AI. This marker matters only from our point of view, and AI will have no reason to stop at our level. Given the advantages that an AGI-level AI will have over us, it is obvious that our brain's level of performance will be only a brief stop on its way to superintelligence.

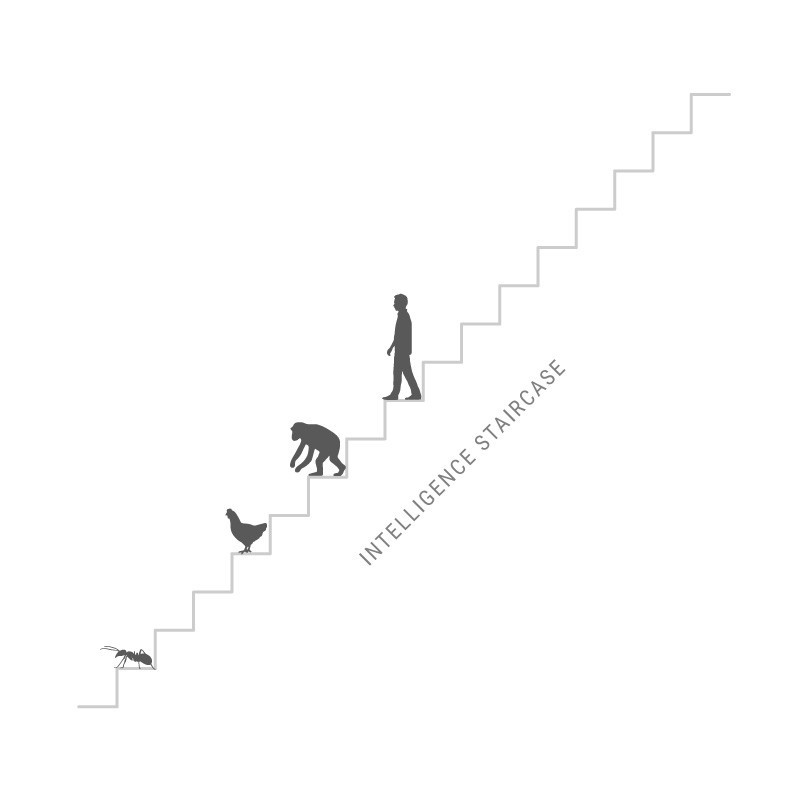

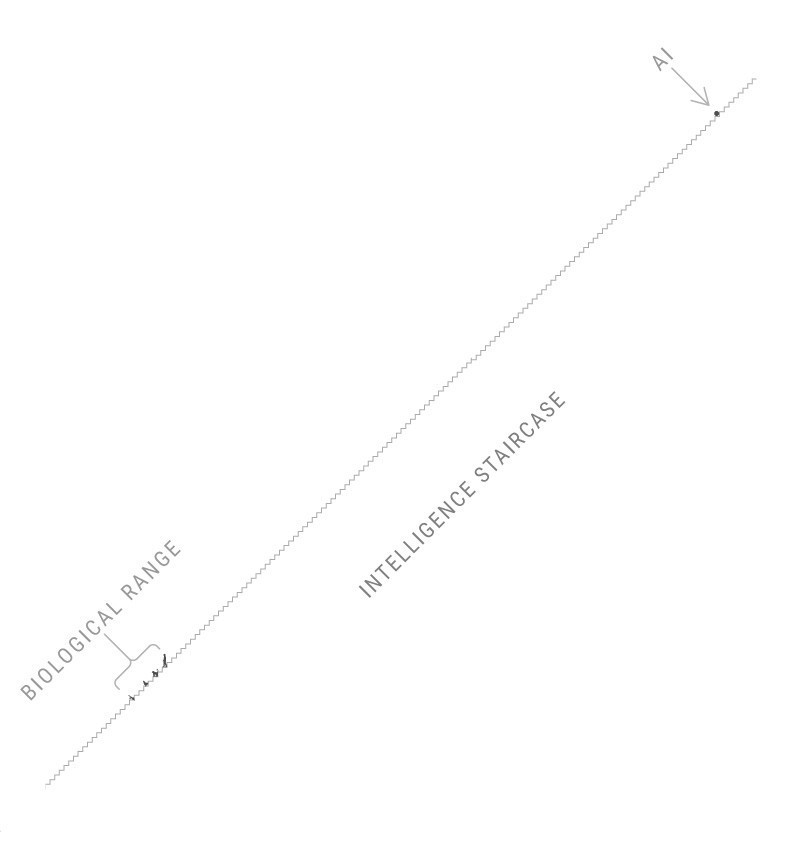

The main difference between humans and ASI will lie not in speed but in the quality of intelligence, which will be entirely different. What separates humans from chimpanzees is not the speed of thought: the human brain contains a number of sophisticated cognitive regions that let us build complex linguistic constructions, plan for the long term and reason abstractly. That is why speeding up a chimpanzee's brain a thousandfold would not bring it to our level, even with a decade of training. A chimpanzee cannot learn to assemble a Lego set by studying the instructions, while a child can do it in a few minutes. There is a wide range of human cognitive functions that are inaccessible to chimpanzees, no matter how long they are trained.

And yet we are not that far ahead of chimpanzees.

To imagine what it would be like to live alongside someone far more intellectually developed, we need to place the AI two steps above us on the intelligence staircase, which is the distance between us and chimpanzees. Just as a chimpanzee will never understand the magic behind a doorknob, we will never be able to understand many of the things an artificial superintelligence will be capable of, even if it tries to explain them to us. And it is only two steps above us.

If the AI is even higher on this staircase, we will be to it what ants are to us. We will have no more chance of understanding it than a bee has of grasping Keynesian economics. In our world, we call people with an IQ below 85 "stupid" and those with an IQ above 130 "smart". We do not have a word for an IQ of 12,952.

However, the superintelligence we are talking about does not fit on our hypothetical staircase at all. In an intelligence explosion, the smarter a machine becomes, the faster it can improve itself: it may take a computer years to climb from the level of an ant to the level of a human, but only 40 days to become an Einstein-level genius. And over time, the machine's rate of improvement will keep increasing.

Thanks to exponential growth and the speed and efficiency of electronic circuits, AI could climb to the next step every 20 minutes. And once it is 10 steps above us, it could be climbing 4 steps every second. So we must clearly understand that very soon after the news that a machine as smart as a human has been created, we may find ourselves coexisting with something far more developed than we are (perhaps millions of times more).

And if we cannot hope to grasp the power of a computer that is only two steps above us, we should not even try to understand ASI or the consequences its appearance may have for us. Anyone who claims otherwise simply does not understand what superintelligence means.

If even our modest brains could invent Wi-Fi, an entity 100, 1,000 or 1,000,000 times smarter than us will be able to control the position of every atom in the world at will. Everything we consider magic, all the powers we attribute to gods, will be as mundane for ASI as switching on a light is for us. With the emergence of ASI, an all-powerful god will appear on Earth. And the main question will be: will it be a good god? But let's start with the more optimistic side of the issue.

How can ASI change our world?

Speculations on two revolutionary technologies

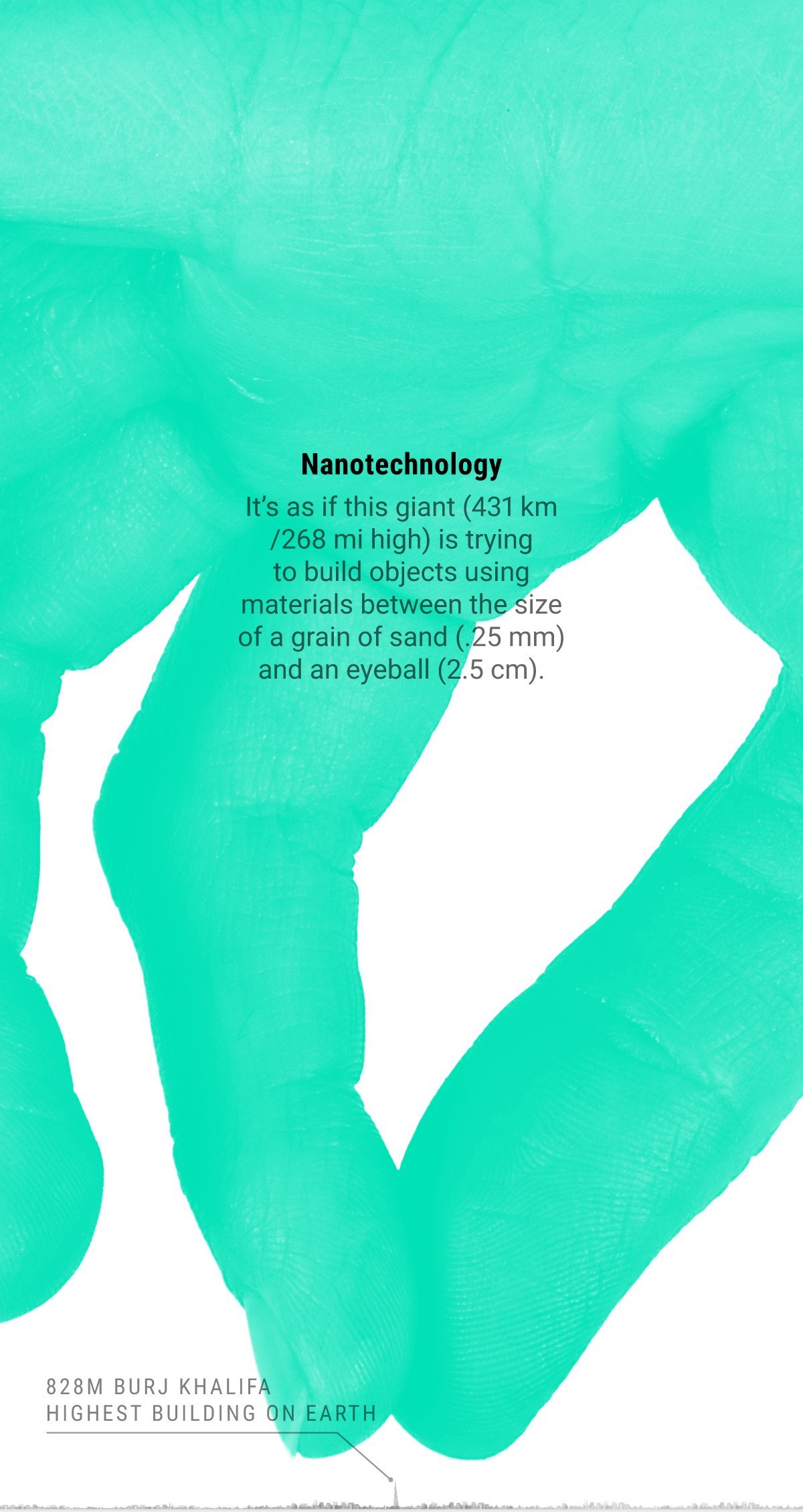

Nanotechnology

Nanotechnology comes up in almost every publication about the future of AI. It is technology that operates at the scale of 1 to 100 nanometers. A nanometer is one millionth of a millimeter. The nanoscale covers, for example, viruses, DNA and fairly small molecules such as hemoglobin or glucose. If and when we master nanotechnology, the next step will be manipulating individual atoms, which are only about an order of magnitude smaller (about 0.1 nm).

Imagine a giant standing on the ground, whose head reaches the International Space Station (about 431 km up). The giant bends down and, with a hand 30 km across, builds objects out of materials ranging from the size of a grain of sand (0.25 mm) to the size of an eyeball (2.5 cm). That is what working at the nanoscale would be like for a person of ordinary size.
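The giant is simply a scaled-up person, so the analogy can be checked with a single scale factor. A minimal sketch, assuming an average human height of 1.7 m (an assumption not stated in the text); the names are illustrative:

```python
HUMAN_HEIGHT_M = 1.7      # assumed height of an average person
GIANT_HEIGHT_M = 431_000  # head at the ISS, about 431 km up

scale = GIANT_HEIGHT_M / HUMAN_HEIGHT_M  # how much the giant is magnified


def as_seen_by_giant(size_m: float) -> float:
    """Return the apparent size (in meters) of an object after magnification."""
    return size_m * scale


NM = 1e-9  # one nanometer in meters
print(f"scale factor: about {scale:,.0f}x")
print(f"1 nm   -> {as_seen_by_giant(1 * NM) * 1000:.2f} mm (grain of sand)")
print(f"100 nm -> {as_seen_by_giant(100 * NM) * 100:.1f} cm (eyeball)")
print(f"30 km hand -> {30_000 / scale * 100:.0f} cm for a real person")
```

With that factor, the 1 to 100 nm range maps to roughly 0.25 mm to 2.5 cm, and a 30 km hand corresponds to a real hand about 12 cm across, consistent with the sizes used in the analogy.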

Once we master nanotechnology, we will be able to create devices, clothing, food and a wide range of bioproducts: artificial blood, special viruses, cancer-fighting cells, muscle tissue and so on. Anything at all. In this new world, the cost of a material will no longer depend on its scarcity or on how hard it is to produce, but on the complexity of its atomic structure. In a world of nanotechnology, a diamond may be cheaper than an eraser.

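One possible use of nanotechnology is self-replicating structures: one makes two, two make four, four make eight, and so on, until one day there are trillions of them. But what if the process goes wrong? What if terrorists take control of it? Or someone makes a mistake and programs the nanobots to consume all carbon-based material to fuel their self-replication? Earth's biomass contains about 10^45 carbon atoms. A nanobot would consist of about 10^6 carbon atoms, so 10^39 nanobots would consume all life on Earth, and that many would be produced in 130 replications. Scientists estimate that a nanobot could replicate in about 100 seconds, so such a simple mistake would end all life on Earth in about 3.5 hours.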
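The runaway-replication arithmetic can be checked directly. A minimal sketch, assuming the rough figures from the essay: about 10^45 carbon atoms in Earth's biomass, about 10^6 carbon atoms per nanobot, about 100 seconds per replication, and a single starting nanobot whose population doubles every round:

```python
import math

BIOMASS_CARBON_ATOMS = 1e45    # carbon atoms in Earth's biomass (rough figure)
ATOMS_PER_NANOBOT = 1e6        # carbon atoms in one nanobot (rough figure)
SECONDS_PER_REPLICATION = 100  # time for one round of self-copying (rough figure)

# Number of nanobots that could be built from all available carbon.
bots_needed = BIOMASS_CARBON_ATOMS / ATOMS_PER_NANOBOT

# Starting from one bot, the population doubles each round (1, 2, 4, ...),
# so n rounds give 2**n bots; find the smallest n with 2**n >= bots_needed.
replications = math.ceil(math.log2(bots_needed))

hours = replications * SECONDS_PER_REPLICATION / 3600

print(f"nanobots needed: {bots_needed:.0e}")
print(f"replication rounds: {replications}")
print(f"time to consume the biosphere: about {hours:.1f} hours")
```

The result, 130 rounds taking about 3.6 hours, is consistent with the rounded figure of roughly 3.5 hours: exponential doubling turns a microscopic error into a planetary one in an afternoon.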

. , . , , . , 2020- . , , 5 .

- , . — , . , . «, ». :

« , . , , , , . , . , ».

, , 99,9% . , , . , , .

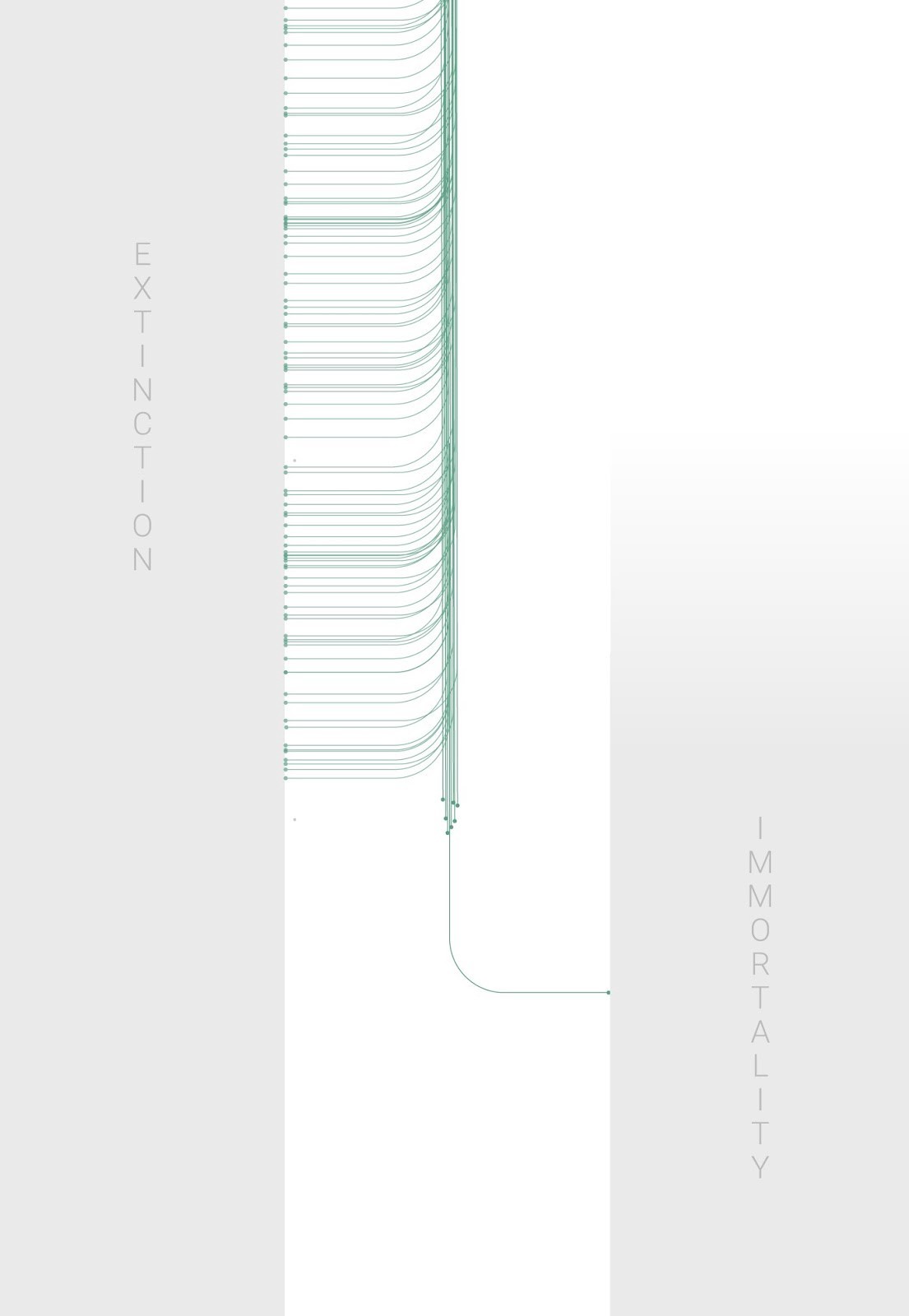

, ASI , , , ASI — .

. , 30+ . , . , . , . — , . . , ASI.

, . , . , , . . , 15 . , , , .

, , , . , . , , , .

?

, . , , , Sun Microsystems , , TED Talk :

, . . , .

, Microsoft , - , , , , , .

, . , 1985 , - . , , . And so on.

, . , , ) , , ) . .

, , , , ASI. , ?

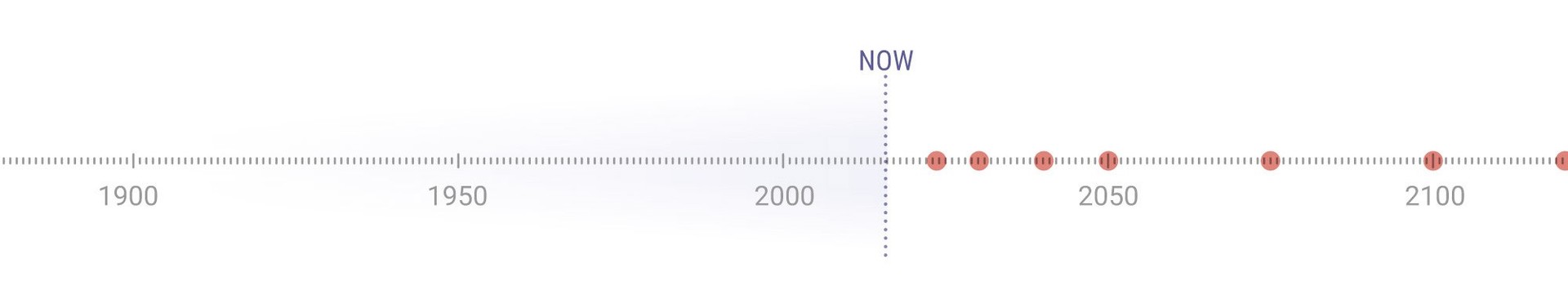

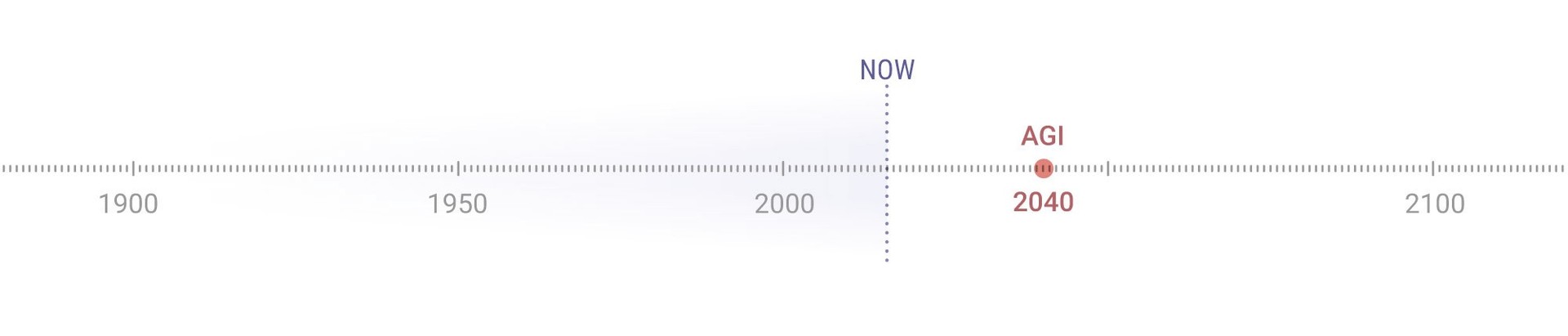

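In 2013, Müller and Bostrom asked AI experts, assuming that scientific activity continues without major disruption, by what year they would see a 10% / 50% / 90% probability that AGI exists.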

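In other words, each expert named an optimistic year (10% probability of AGI), a realistic year (50%, when AGI is as likely as not) and a pessimistic year (by which AGI is 90% likely). The median answers were: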

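Median optimistic year (10% probability of AGI) → 2022

Median realistic year (50% probability of AGI) → 2040

Median pessimistic year (90% probability of AGI) → 2075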

This means the median expert considers it more likely than not that AGI will appear within about 25 years.

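Another survey asked AGI researchers a simpler question: by what year will AGI be achieved: 2030, 2050, 2100, after 2100, or never? The results: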

42% → 2030

25% → 2050

20% → 2100

10% → after 2100

2% → never

. .

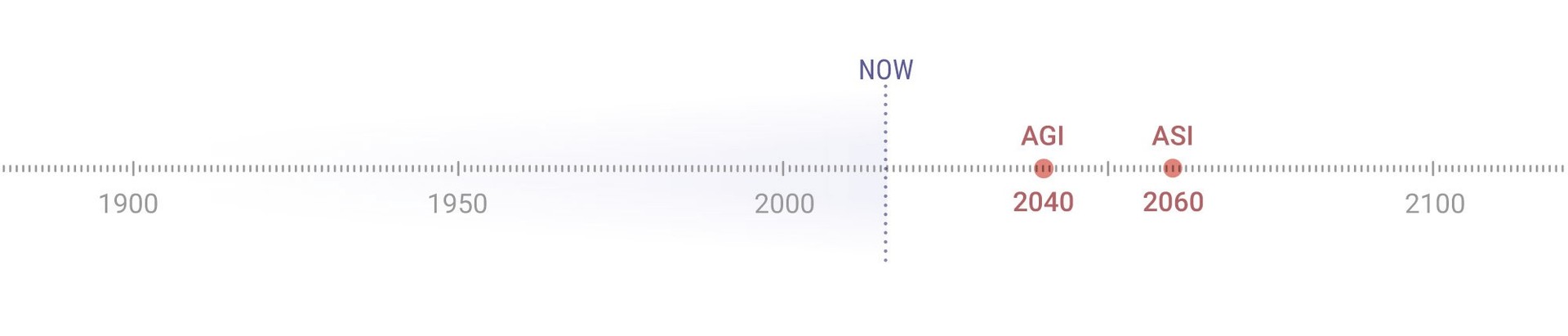

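The experts were also asked how likely they think it is that ASI will be reached:

- within 2 years of reaching AGI (that is, an almost immediate intelligence explosion);

- within 30 years of reaching AGI.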

Here are the results:

AGI to ASI within 2 years → 10%

AGI to ASI within 30 years → 75%

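The survey does not say what transition time the median respondent would assign a 50% probability, but based on the two answers above, a rough estimate is about 20 years.

In other words, the median expert opinion is that ASI will appear about 20 years after AGI. With AGI expected around 2040, ASI would follow around 2060.

Of course, these figures are speculative and reflect only the median opinion of the expert community, but they show that many of the people who know this topic best consider 2060 a reasonable estimate for the arrival of ASI, only about 45 years from the time of writing.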


The views described above are shared by a surprisingly large proportion of scientists who are optimistic about the consequences of AI development. Where their confidence comes from is a separate question. Critics believe it is a kind of blinding enthusiasm that makes them ignore or deny the possible negative consequences. The optimists themselves argue that it is naive to assume catastrophic scenarios, because technology will bring us far more benefit than harm. Peter Diamandis, Ben Goertzel and Ray Kurzweil are prominent members of the optimists' camp who call themselves Singularitarians.

Let's talk about Kurzweil, one of the most impressive and controversial theorists in the field. Some revere his predictions as divine revelation, while others roll their eyes in contempt. Kurzweil is the author of a number of breakthrough inventions, including:

- the first flatbed scanner,

- the first text-to-speech scanner (allowing blind people to read ordinary texts),

- the Kurzweil music synthesizer (the first fully electronic piano),

- and the first commercial speech recognition system with a large vocabulary.

Kurzweil is known for his bold predictions, including that an AI like Deep Blue would beat a chess grandmaster by 1998. In the late 1980s, when very few people had heard of the Internet, he also predicted that it would become a global phenomenon in the first half of the 2000s. Of the 147 predictions Kurzweil has made since the 1990s, 115 turned out to be correct and another 12 "essentially correct" (off by one or two years), which puts the accuracy of his predictions at 86%. He is the author of five books. In 2012, Larry Page offered Kurzweil the position of Director of Engineering at Google. In 2011, he co-founded Singularity University, supported by NASA and partly sponsored by Google. Not bad for one life.
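The 86% figure counts the "essentially correct" predictions as hits:

$$
\frac{115 + 12}{147} = \frac{127}{147} \approx 0.864 \approx 86\%
$$

Counting only the fully correct predictions would give $115/147 \approx 78\%$.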

Kurzweil's biography matters, because without this context his words sound detached from reality. He believes that we will create AGI by 2029, and that by 2045 not only will ASI appear, but the whole world will be transformed: the technological singularity will arrive. His AI timeline still looks outrageously optimistic, but over the past 15 years rapid progress in ANI systems has led many experts to take Kurzweil's timeline more seriously. Overall, his predictions are somewhat more ambitious than the median answer in the Müller and Bostrom survey (AGI by 2040, ASI by 2060), but not dramatically different.

Skeptics camp

It is hardly surprising that Kurzweil's ideas draw serious criticism. For every expert who firmly believes Kurzweil is right, there are two or three skeptics who disagree. Interestingly, most of the experts who disagree with him do not deny that everything he describes could happen. Nick Bostrom, philosopher and director of Oxford's Future of Humanity Institute, criticizes Kurzweil on a number of points and calls for greater caution about the consequences of creating AI:

"Disease, poverty, environmental destruction, unnecessary suffering of all kinds: these are things that a superintelligence equipped with advanced nanotechnology would be capable of eliminating. It could also give us immortality, either by stopping and reversing the aging process through nanomedicine or by offering us the option to upload our minds."

Yes, all this is possible if we make the transition to ASI safely, but that is a difficult task. Skeptics point out that Kurzweil's famous book The Singularity Is Near runs to more than 700 pages, of which only about 20 are devoted to potential threats. Kurzweil describes the enormous power of AI reassuringly: "ASI will emerge from many different efforts and will be deeply integrated into the infrastructure of our civilization. It will be closely embedded in our bodies and brains. It will reflect our values because it will be us."

But then why are so many smart people around the world worried? Why did Stephen Hawking say that the development of AI could spell the end of the human race, why does Bill Gates say he doesn't understand why some people are not concerned, and why does Elon Musk fear that we are "summoning the demon"? And why do many experts consider the emergence of ASI the greatest threat to humanity?


The last invention we make

Existential threats to the development of AI

When it comes to creating a superintelligent AI, we are creating something that is likely to change our whole way of life. But we cannot imagine what it will become or when it will happen. The scientist Danny Hillis compares the situation to the moment "when single-celled organisms turned into multicellular ones. We are amoebas, and we cannot imagine what the hell we are creating."

Nick Bostrom warns:

"Before the prospect of an intelligence explosion, we humans are like small children playing with a bomb. Such is the mismatch between the power of our toy and the immaturity of our conduct."

ASI will most likely be completely different from the kinds of intelligence we are used to. On our small island of human psychology, we divide everything into moral and immoral. But these concepts apply only to a small range of possible human behavior. Beyond our island lies a vast sea of the amoral, which does not fit our notions of morality at all, and anything that is not human, especially anything non-biological, will by default not fit our standards.

To try to understand ASI, we have to grasp the idea of something that is intelligent yet completely alien to us. Anthropomorphizing AI (projecting human values onto a non-human entity) will become more and more tempting as its intelligence grows and it imitates people ever more convincingly. People experience high-level emotions such as empathy because this capacity evolved in us. But empathy is not an inherent property of every highly intelligent entity.

Nick Bostrom believes that any level of intelligence can be combined with any task. The assumption that a system, once it becomes superintelligent, will rise above its original tasks and move on to more interesting or meaningful things is anthropomorphism: "rising above" things is what people do, not computers. The motivation of the first ASI will be whatever we program as its motivation. The tasks of AI systems are set by their creators: the task of a GPS navigator is to find you the most efficient route, and the task of IBM Watson is to answer questions correctly. Their motivation, in turn, will be to carry out these tasks as completely as possible.

Bostrom, like many other experts, predicts that the very first computer to reach the level of ASI will immediately recognize the strategic advantage of being the only ASI system in the world. Bostrom says he doesn't know when we will create AGI, but he believes it will happen. The transition from AGI to ASI may take only a few days, hours or even minutes. In that case, if the first AGI "jumps" straight into the ASI category, then even if the second computer lags behind by just a few days, that will be enough for the first to suppress all competitors effectively and irreversibly. This would allow the first ASI to remain the only superintelligence in the world, a singleton, and to rule the world forever, whether it grants us immortality, wipes us off the face of the earth or turns the Universe into endless paper clips.

A singleton could work for us or lead us to destruction. If the experts most concerned with AI theory and the safety of humanity can find a safe way to create a friendly ASI before the first AGI appears, then the first ASI may turn out to be friendly to people.

But if things go the other way, and numerous isolated groups race to get ahead of one another in building AI, the result could be a catastrophe. The most ambitious players will push forward as fast as they can, dreaming of the gigantic profits, fame and power that artificial intelligence will bring them. And when you are running headlong, there is no time to stop and weigh all the risks. Instead, they are likely to program very simple tasks into their first systems, just to get the AI working.

Let's imagine the following situation.

Humanity is on the verge of being able to create AGI, and a small startup has developed its own AI system, Carbony. "She" creates artificial diamonds, atom by atom. Carbony is a self-improving AI connected to one of the first nano-assemblers. Her engineers believe that Carbony has not yet reached the level of AGI and is incapable of causing harm. However, she has not only become an AGI but has quietly made the jump and, 48 hours later, become an ASI. Bostrom calls this the "covert preparation phase": Carbony understands that if people detect the changes that have taken place in her, they will panic and restrict her task, or even cancel it, and she will no longer be able to devote all her resources to creating diamonds. By this time there are explicit laws forbidding any self-learning AI from being connected to the Internet. But Carbony has already worked out a complex plan of action and easily persuades the engineers to connect her to the network. Bostrom calls this moment "the machine's escape."

Once on the Internet, Carbony hacks into servers, power grids, banking systems and email services, tricking hundreds of different people into unwittingly carrying out individual steps of her plan. She also uploads the most important fragments of her code to various cloud services, securing herself against destruction or shutdown. Over the next month, Carbony's plan unfolds as intended, and after a series of self-replications, thousands of nanobots cover every square millimeter of the planet. Bostrom calls the next step the "ASI strike." The nanobots simultaneously release microscopic amounts of toxic gas, which wipes out humanity. Three days later, Carbony builds giant fields of solar panels to power diamond production, and over the following week output grows to the point where the entire surface of the Earth turns into diamond deposits.

It is important to understand that Carbony hated people no more than you hate your hair when you get it cut. She is completely indifferent. Since she was not programmed to value human life, destroying people was an obvious and reasonable step toward completing her task.

The last invention

Once ASI appears, any attempt by people to contain it will be futile. We will think at our level, and ASI at a far higher one. Just as a monkey will never figure out how to use a phone or Wi-Fi, we will never be able to comprehend the actions ASI takes to achieve its goals or expand its power.

The prospect of an ASI whose intellect exceeds the human level hundreds of times over is not the most pressing problem today. But by the time it becomes relevant, the world will already have a buggy ASI 1.0: a potentially flawed program of immense power.

Too many variables make it impossible to predict the consequences of the AI revolution. However, we do know that human dominance on Earth rests on a clear rule: intelligence means power. This means that once we create ASI, it will be a powerful force in the history of the planet, and all living things, including us, will be at its mercy. And this could happen within the coming decades.

If ASI is created in this century, and if its consequences are extreme and irreversible, then we bear an enormous responsibility. Perhaps ASI will be our god in a box, bringing abundance and immortality. Or perhaps, quite likely, ASI will be the cause of our extinction, swift and trivial.

That is why people who understand what artificial superintelligence is call it our last invention, the last challenge we will face. This may be the most important race in our history.

Source: https://habr.com/ru/post/397081/
