Experts from China and the United States have learned to manipulate the Tesla autopilot

Autopilot and sensors can be intentionally disrupted with special equipment.

In the US, proceedings are still under way over the fatal Tesla Model S accident that happened in May of this year. The electric car, which was exceeding the speed limit, crashed into a truck trailer. Neither the driver nor the autopilot noticed the obstacle against the bright sky. Information security experts asked a question: what if similar conditions were reproduced artificially? Would it be possible to deceive the car's sensors and the computer system itself?

A joint team of researchers from the University of South Carolina (USA), Zhejiang University (China) and the Chinese computer security company Qihoo 360 has learned how to manipulate the Tesla Model S autopilot. To do this, the researchers worked with different types of equipment that emit radio waves, sound signals and light. In some cases, the Tesla autopilot began to "believe" that there were no obstacles ahead although there were, and vice versa: that there was an object ahead that the car could hit although there was nothing in front of it.

As it turned out, tricking the Tesla Model S computer control system takes considerable effort. The team's goal was to prove that the conditions that led to the accident in May can be reproduced intentionally. "The worst-case scenario for a car driving on Autopilot is a radar that is out of action. In this case, the car will not be able to detect obstacles ahead," says one of the members of the team that conducted the research.


The Autopilot in Tesla electric vehicles detects obstacles using three main systems: radar, ultrasonic sensors and cameras. The researchers attacked all three. It turned out that the radar is the one that can be "deceived" most effectively. To distort its readings, the researchers used two devices. The first is a signal generator from Keysight Technologies that costs $90,000. The second is a VDI frequency multiplier, which costs even more. Both were used to jam the signal emitted by the car's radar system. When the experts jammed the signal, the car in front of the Tesla Model S disappeared for the autopilot and was no longer shown on the display.

As soon as the jammer was turned on, the obstacle in front disappeared. According to the authors of the work, this method could be used on the highway by attackers who, for one reason or another, want to cause an accident involving a Tesla electric car. The scientists say that a successful attack on the Tesla radar system requires positioning the jammer correctly, which is not so easy to do on the road.

A simpler and cheaper attack uses an ultrasound system, with which the experts targeted the car's ultrasonic sensors. These sensors are usually used for automatic parking and for "summoning" the car to the driver. In this mode, the car can leave the parking lot on its own and drive up to the owner. To deceive the ultrasonic sensors, the researchers used an Arduino-based ultrasonic transducer and a signal generator to create electrical signals with specific characteristics. Such a system costs significantly less: only $40. With it, the researchers managed to deceive the automatic parking system so that the car refused to park, assuming that there was an obstacle in front of it. With the same system, they also managed to "convince" the parking system that there was no obstacle in front, although there was one.
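To make the ultrasonic attack more concrete, here is a minimal sketch of time-of-flight ranging, the general principle behind ultrasonic distance sensors. It is not Tesla's implementation: the threshold, the "earliest strong echo wins" rule and all sample values are assumptions chosen for illustration.

```python
SPEED_OF_SOUND_M_S = 343.0  # approximate value for air at about 20 °C


def echo_distance_m(round_trip_s):
    """Distance to a reflector, given the round-trip time of an ultrasonic pulse."""
    return SPEED_OF_SOUND_M_S * round_trip_s / 2.0


def nearest_obstacle_m(echoes, threshold=0.5):
    """Return the distance of the earliest echo above the threshold, or None.

    `echoes` is a list of (round_trip_seconds, amplitude) pairs.
    The threshold and the selection rule are hypothetical.
    """
    strong = [t for t, amplitude in echoes if amplitude >= threshold]
    return echo_distance_m(min(strong)) if strong else None


real_echo = (0.0175, 0.8)     # genuine reflection from an object about 3 m away
spoofed_echo = (0.0035, 0.9)  # injected pulse that arrives much earlier
weak_echo = (0.0175, 0.1)     # genuine reflection that has been heavily damped

print(nearest_obstacle_m([real_echo]))                # real obstacle
print(nearest_obstacle_m([real_echo, spoofed_echo]))  # phantom obstacle, closer
print(nearest_obstacle_m([weak_echo]))                # obstacle no longer detected
```

Under these assumptions, an injected early pulse makes the sensor report an obstacle that is not there, while an echo that is too weak to cross the threshold makes a real obstacle vanish.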

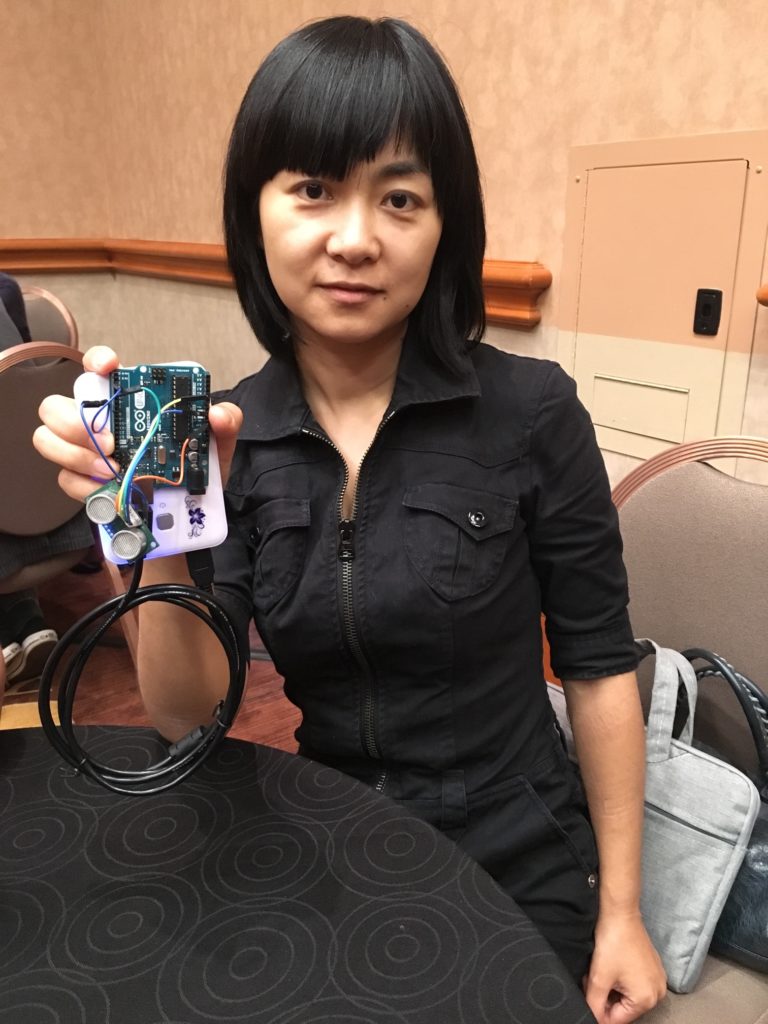

Computer Science Professor Wenyuan Xu demonstrates an Arduino Uno ultrasonic device (Source: Andy Greenberg)

The simplest way to influence Tesla's computer control system is to cover the sensors with a special sound-absorbing foam.

The scientists also tried to influence the cameras, but this system turned out to be the most robust. The only thing they managed to do was blind it with a directed LED or laser beam. Tesla's developers had anticipated this case, however, and Autopilot simply switched off, handing control over to the driver. As the experts found out, under certain circumstances the autopilot can also be blinded by bright sunlight. Perhaps this is what happened in May.

The manual for the electric vehicle states that Autopilot may fail to identify some objects. It also says that the technology "was created for convenience while driving, but it is not a system of avoiding obstacles or preventing collisions."

According to some third-party experts who studied the results of this work, the next stage of the research should be carried out on the road rather than with a stationary car. To confirm that these methods of influencing Autopilot and the car's sensors are effective, the same attacks should be tried in field conditions. "They have to do a little more to find out whether the autopilot will really allow the car to crash into an obstacle. So far, all that can be said is whether the autopilot is working or not," says Jonathan Petit, a representative of the University of Cork.

The authors of the study believe that Autopilot and the sensors are exposed to outside influence and are vulnerable, although the vulnerability is not easy to exploit. According to the scientists, Tesla should protect its electric vehicles from intruders who may try to disrupt the normal operation of the car's computer system. "If the noise level is very high, or any deviations are detected, the radar should notify the control system of a possible failure," says one of the researchers.
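The researcher's suggestion can be sketched as a simple self-check. This is only an illustration of the idea, not how any real radar works: the function names, the baseline noise floor, the margin and the sample readings are all hypothetical.

```python
from statistics import mean


def radar_status(noise_samples_db, baseline_db=-90.0, margin_db=15.0):
    """Return "FAULT" if the average noise floor is far above the baseline.

    All numbers are made-up illustration values, not real radar parameters.
    """
    if not noise_samples_db:
        return "FAULT"  # no measurements at all is also treated as a failure
    if mean(noise_samples_db) > baseline_db + margin_db:
        return "FAULT"
    return "OK"


def target_vanished(previous_range_m, current_range_m):
    """Flag a deviation: a tracked object that disappears between two readings."""
    return previous_range_m is not None and current_range_m is None


print(radar_status([-91.0, -89.0, -90.0]))  # normal noise floor
print(radar_status([-60.0, -62.0, -58.0]))  # noise floor raised, as under jamming
print(target_vanished(25.0, None))          # car ahead suddenly missing
```

In such a scheme, a "FAULT" status or a flagged deviation would be passed to the control system, which could then warn the driver instead of silently assuming that the road is clear.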

So far, the scientists admit, the methods they used are not very practical: they are both difficult and expensive to apply. But over time equipment gets cheaper, and hackers' methods become more complex and sophisticated. After a while, it may turn out that someone has learned to fool the Tesla computer system without equipment priced at $100,000.

Source: https://habr.com/ru/post/396721/
