NVIDIA is looking for ways to optimize VR images

The company plans to make VR more comfortable and reduce the load on computing hardware by simulating peripheral vision.

The human eye is complex. We have a fairly wide field of view, but we see clearly only what our gaze is focused on. The rest of the visual field lets us tell objects apart, but we see them indistinctly, blurred. This is what we call peripheral vision.

Initially, VR headset manufacturers chased screen sharpness, frame refresh rate and overall picture quality. Now that products from HTC, Oculus and Sony are reaching a mass market, it is time to think about optimization: not everyone can afford a $1,500 to $2,500 desktop PC just to try a new product, and that is before the cost of the headset itself.


Most of that cost comes from the graphics card, which has to render two images, one for each eye, on the headset's displays. NVIDIA, as a major player and supplier in this market, set out to optimize this work and make immersion in the VR world more comfortable.

Today, VR headsets render the whole picture at the same quality: wherever users look on the screen, they see the same level of detail. Yet the human eye sees sharply only in a narrow area of focus; everything else is peripheral vision, whose image the brain processes and softens.

NVIDIA concluded that mimicking the behavior of a real eye when rendering images for VR headsets would be a good solution. First, it reduces the load on the PC the headset is connected to; second, it makes the image behave more realistically and therefore makes gameplay more comfortable.
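To get a feel for why rendering the periphery at lower quality saves computing power, here is a back-of-the-envelope sketch. It assumes a 1080x1200 per-eye panel, a circular full-quality region of 200 pixels radius around the gaze point, and a periphery shaded at a quarter of the full rate. All of these numbers and the function name `shaded_pixel_fraction` are illustrative assumptions, not figures from NVIDIA.

```python
"""Rough estimate of shading work saved by foveated rendering.

All numbers are hypothetical illustration values, not NVIDIA figures.
"""
import math


def shaded_pixel_fraction(width: int, height: int, fovea_radius_px: float,
                          periphery_rate: float) -> float:
    """Fraction of full-resolution shading work needed for one eye.

    Pixels inside a circle of `fovea_radius_px` around the gaze point are
    shaded at full rate; all other pixels at `periphery_rate` (0.25 means
    one shaded sample per 2x2 pixel block).
    """
    total = width * height
    # Assumption: the foveal circle lies entirely inside the frame.
    fovea = min(math.pi * fovea_radius_px ** 2, total)
    periphery = total - fovea
    return (fovea + periphery * periphery_rate) / total


if __name__ == "__main__":
    # Hypothetical 1080x1200 per-eye panel, 200 px foveal radius,
    # periphery shaded at quarter rate.
    frac = shaded_pixel_fraction(1080, 1200, 200, 0.25)
    print(f"shading work per eye: {frac:.1%} of full resolution")
```

With these assumed parameters the estimate comes out at about 32% of the full-resolution shading work per eye; real savings depend on the rendering pipeline and on how aggressively the periphery can be degraded before users notice.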

But because our eyes move, a single sharp “spot” of focus fixed in the middle of the screen is not enough. To simulate real vision, NVIDIA engineers used eye tracking to determine exactly where the user is looking. As a result, they developed a system that realistically simulates human vision while saving computing resources:
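The role of eye tracking can be illustrated with a gaze-contingent shading-rate function: the quality of each pixel depends on its angular distance (eccentricity) from where the user is currently looking, so the sharp region follows the gaze. This is a minimal sketch, assuming a linear 11 pixels per degree, hypothetical thresholds of 5 and 15 degrees, and three made-up quality levels; none of these values or names come from NVIDIA's system.

```python
import math
from typing import Tuple

# Hypothetical eccentricity thresholds, in degrees of visual angle.
FOVEA_DEG = 5.0
MID_DEG = 15.0


def eccentricity_deg(pixel: Tuple[float, float], gaze: Tuple[float, float],
                     pixels_per_degree: float) -> float:
    """Approximate angular distance between a pixel and the gaze point.

    Assumes a constant pixels-per-degree ratio, which real headset
    optics only roughly satisfy.
    """
    dx = pixel[0] - gaze[0]
    dy = pixel[1] - gaze[1]
    return math.hypot(dx, dy) / pixels_per_degree


def shading_rate(pixel: Tuple[float, float], gaze: Tuple[float, float],
                 pixels_per_degree: float = 11.0) -> float:
    """Fraction of the full shading rate to use for this pixel."""
    ecc = eccentricity_deg(pixel, gaze, pixels_per_degree)
    if ecc <= FOVEA_DEG:
        return 1.0          # full detail where the user is looking
    if ecc <= MID_DEG:
        return 0.25         # one sample per 2x2 pixels
    return 1.0 / 16.0       # one sample per 4x4 pixels in the far periphery
```

For example, with the gaze at `(540, 600)`, the pixel at `(840, 600)` is about 27 degrees away and gets the coarsest rate; once the eye tracker reports a new gaze point of `(840, 600)`, the same pixel is rendered at full quality.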

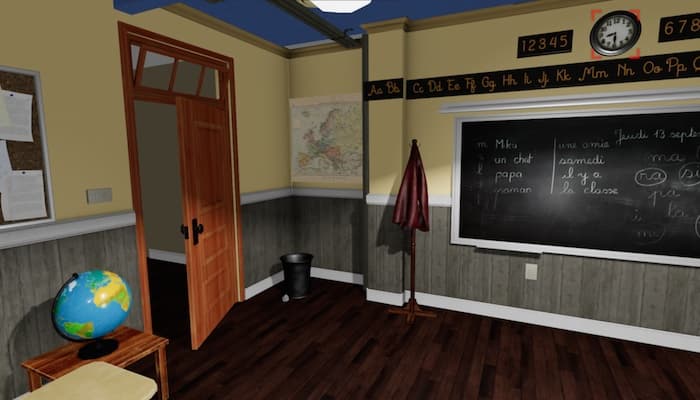

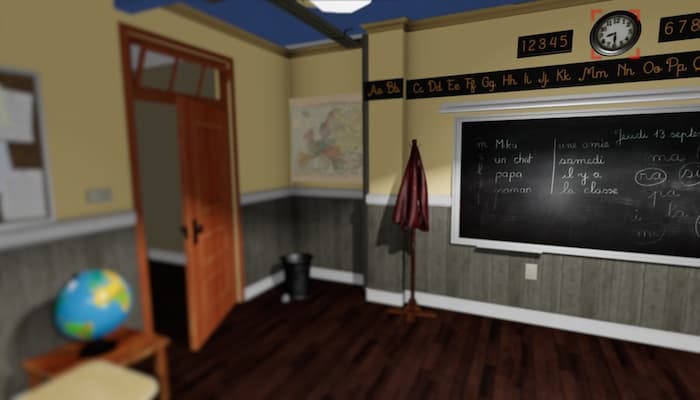

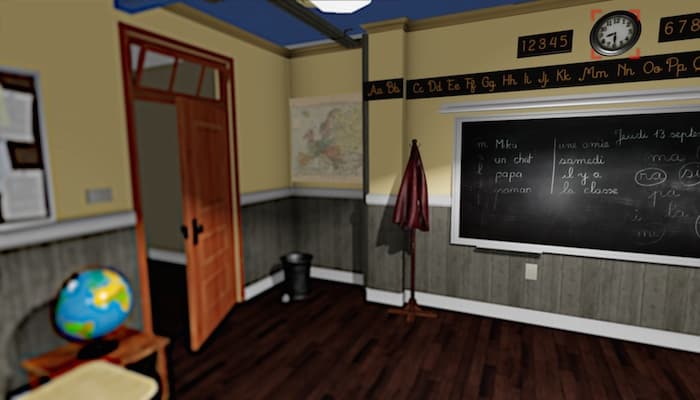

Fully drawn picture for BP, focus on the clock

Just “blurred” periphery pictures for BP, focus on the clock

“Illuminated” periphery of a picture for BP with preservation of the required level of contrast, imitation of real vision, focus on the clock

Even from these images you can tell that NVIDIA engineers are on the right track. If you focus on the clock, even on an ordinary monitor, the first and third images look almost identical at first glance. The work builds on existing eye-tracking technology from SMI (SensoMotoric Instruments), which was introduced for the Oculus Rift DK2 in November 2014.

If you think NVIDIA engineers simply blur everything that is out of focus, you are mistaken. During the work, they found that rendering the peripheral field at lower quality in the traditional way produces visual dropouts and flicker that cause discomfort. A plain blur, on the other hand, reduces contrast, which creates a sense of “tunnel vision”.
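Why a plain blur causes that loss of contrast can be shown on a one-dimensional stripe pattern. The sketch below applies a simple box blur and measures Michelson contrast, (max − min) / (max + min); both the filter and the contrast metric are assumed for illustration, since the article does not say which filter or measure NVIDIA used.

```python
def box_blur(signal, radius):
    """Circular 1D box blur: average of each sample and its neighbors."""
    n = len(signal)
    width = 2 * radius + 1
    return [sum(signal[(i + k) % n] for k in range(-radius, radius + 1)) / width
            for i in range(n)]


def michelson_contrast(signal):
    """(max - min) / (max + min) for non-negative intensities."""
    hi, lo = max(signal), min(signal)
    return (hi - lo) / (hi + lo)


if __name__ == "__main__":
    # Dark and bright stripes, two samples wide each (intensities in [0, 1]).
    stripes = [0.2, 0.2, 0.8, 0.8] * 4
    blurred = box_blur(stripes, 1)
    print("original contrast:", michelson_contrast(stripes))
    print("blurred contrast: ", michelson_contrast(blurred))
```

The stripes are still there after blurring, but their contrast drops from 0.6 to about 0.2, which is the washed-out periphery seen in the second image.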

That is why the engineers created an algorithm that takes into account the shape, color and contrast of objects, as well as their movement. As a result, the blur feels comfortable and is almost imperceptible to the user, without side effects.
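The article does not describe NVIDIA's algorithm in detail, so the following is only a toy illustration of the general idea of blurring without losing contrast, explicitly not NVIDIA's method. It blurs a 1D signal, then stretches the blurred values around their mean so the result has the same standard deviation (RMS contrast) as the input. The function name `contrast_preserving_blur` and the global rescaling are assumptions chosen for simplicity; the real system also accounts for shape, color and motion.

```python
import statistics


def box_blur(signal, radius):
    """Circular 1D box blur: average of each sample and its neighbors."""
    n = len(signal)
    width = 2 * radius + 1
    return [sum(signal[(i + k) % n] for k in range(-radius, radius + 1)) / width
            for i in range(n)]


def contrast_preserving_blur(signal, radius):
    """Blur, then restore the input's RMS contrast (toy approach).

    Deviations of the blurred signal from its mean are scaled so its
    standard deviation matches the input's; results are clamped to [0, 1].
    """
    blurred = box_blur(signal, radius)
    mean = statistics.fmean(blurred)
    target_sd = statistics.pstdev(signal)
    current_sd = statistics.pstdev(blurred)
    if current_sd == 0:
        return blurred  # flat result: there is no contrast left to stretch
    gain = target_sd / current_sd
    return [min(1.0, max(0.0, mean + (v - mean) * gain)) for v in blurred]


if __name__ == "__main__":
    stripes = [0.2, 0.2, 0.8, 0.8] * 4
    print("input RMS contrast:   ", statistics.pstdev(stripes))
    print("blurred RMS contrast: ", statistics.pstdev(box_blur(stripes, 1)))
    print("restored RMS contrast:", statistics.pstdev(contrast_preserving_blur(stripes, 1)))
```

In a real renderer such a correction would apply only to the peripheral region and would have to stay stable from frame to frame, or it would bring back the flicker described above.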

Video demonstration of the stages of NVIDIA's peripheral “blur” for VR

Source: https://habr.com/ru/post/396385/
