Using the Microsoft Kinect Xbox 360 Camera in ROS Indigo

Good afternoon, dear Habr readers!

Recent observations and our own experience have shown that connecting to the Microsoft Kinect Xbox 360 camera for use in ROS Indigo from under Ubuntu 14.04 often causes problems. In particular, when running the openni_launch ROS package, the device cannot be identified and the error “No device connected” is displayed. In this article I will describe my step-by-step method of setting up the environment for using Microsoft Kinect from ROS Indigo. Who is interested, please under the cat!

This sensor has already been written in detail in the article . In a nutshell, Microsoft Kinect is a 3D (RGB-D, that is, red, green, blue and depth) camera based on Structured light technology, which allows you to get a map of the depth of the surfaces of objects caught in the camera's field of view. The depth map can be transformed into a three-dimensional “cloud” of points, in which each point has exact X, Y and Z coordinates in space and in some cases a color in the RGB format. “Clouds” of points are used in the tasks of reconstruction of objects, building maps of the area in service robotics, object recognition and many other tasks in the field of computer vision in 3D.

')

ROS provides support for the Kinect sensor. The “cloud” of points received by the sensor can be visually shown in the rviz program.

To use the Kinect sensor in ROS there are special packages openni_launch and openni_camera.

First you need to install the OpenNI library intended for a number of RGB-D sensors (Kinect, ASUS Xtion, PrimeSense).

Install some additional packages that OpenNI requires to install:

Now directly install OpenNI 1.5.4 from the sources:

Now download the avin-KinectSensor library for the Kinect sensor from here .

Depending on the type of system (32 or 64 bits), select the appropriate installer. For a 32-bit system, perform the following steps:

For a 64 bit system, run:

Finally, complete the installation:

The last thing we need to do is install the openni_launch and openni_camera packages , which allow us to receive and work with data from OpenNI-compatible depth cameras in ROS. Installing these packages is very simple:

Now everything is ready! We check the success of the installation. Run in different terminals:

Setting the depth_registration argument: = true indicates that we want to enable OpenNI registration and receive XYZRGB camera data (depth and color).

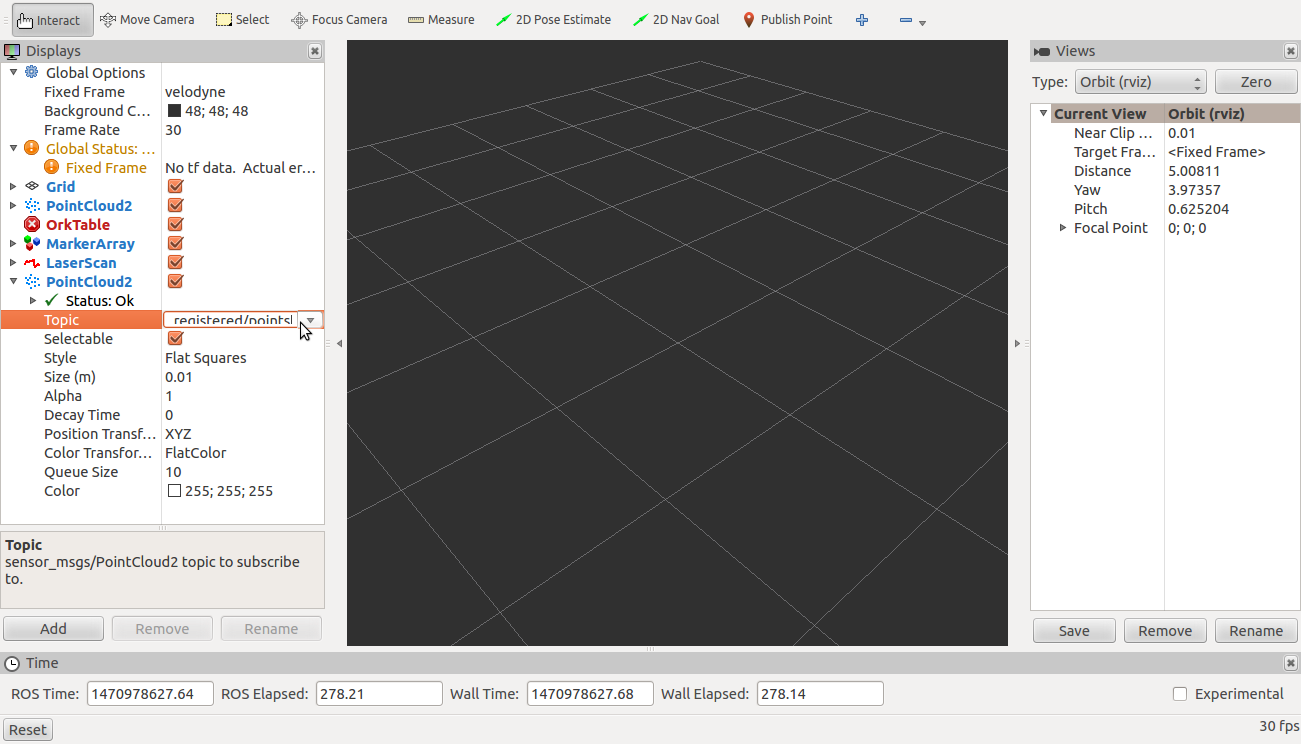

First, in rviz, expand the Global options section in the left column of the Displays and set the / camera_link value for the Fixed frame field as in the picture:

Thus, we set the necessary coordinate system for correct display of data from the Kinect camera.

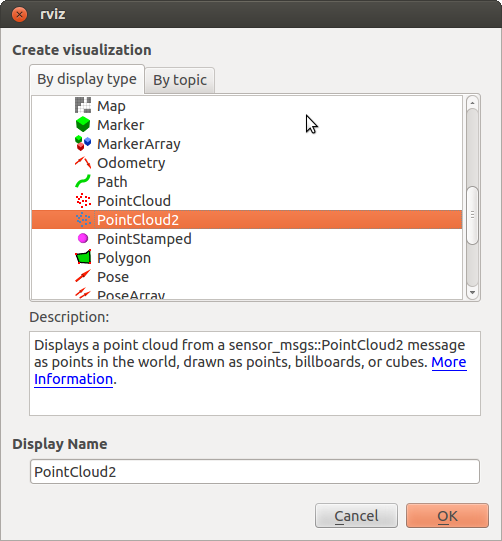

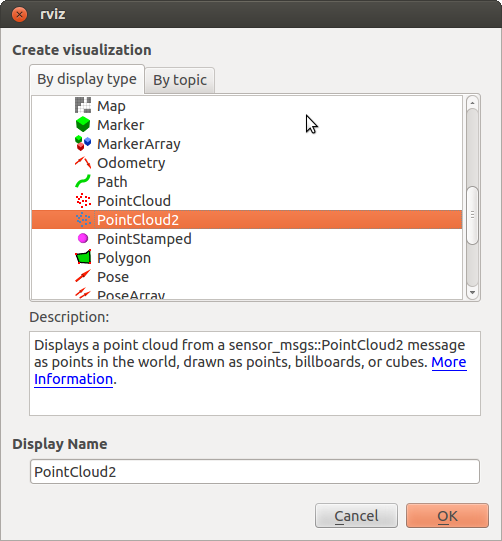

In rviz we create a new display. To do this, click the Add button and select the type of display PointCloud2 as in the picture:

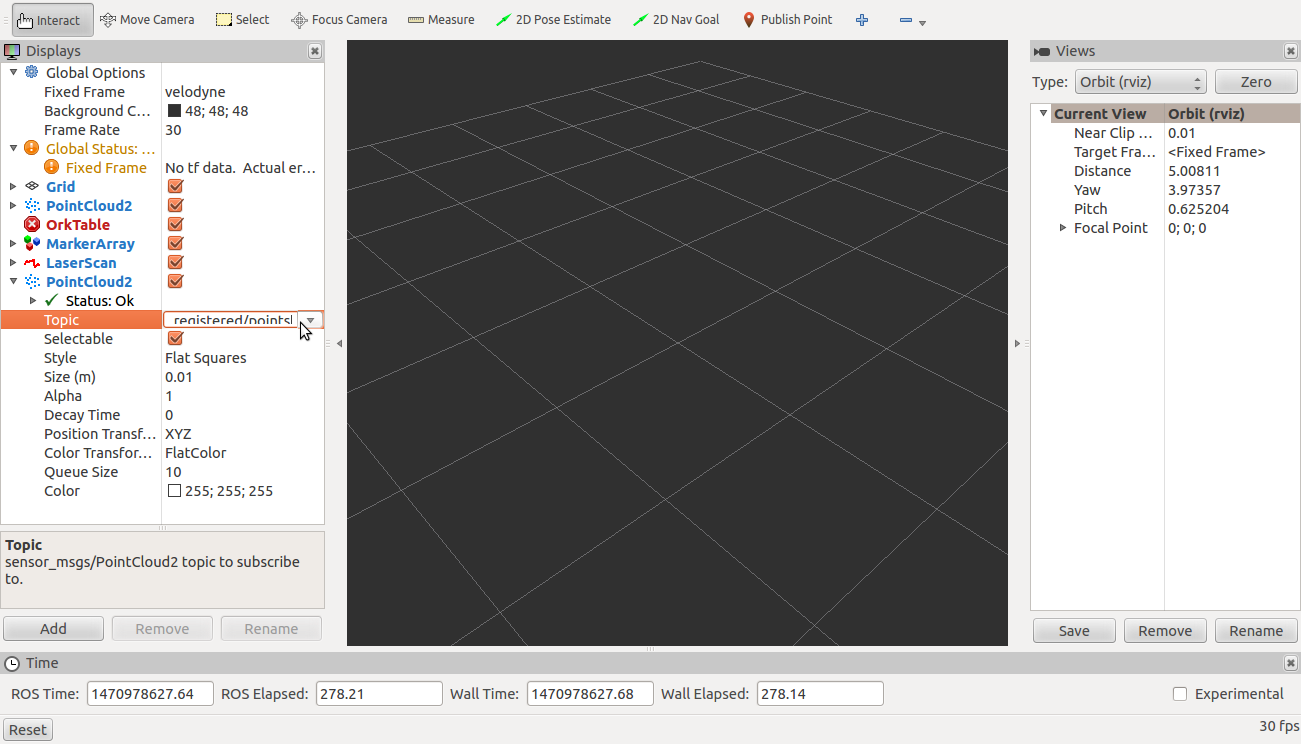

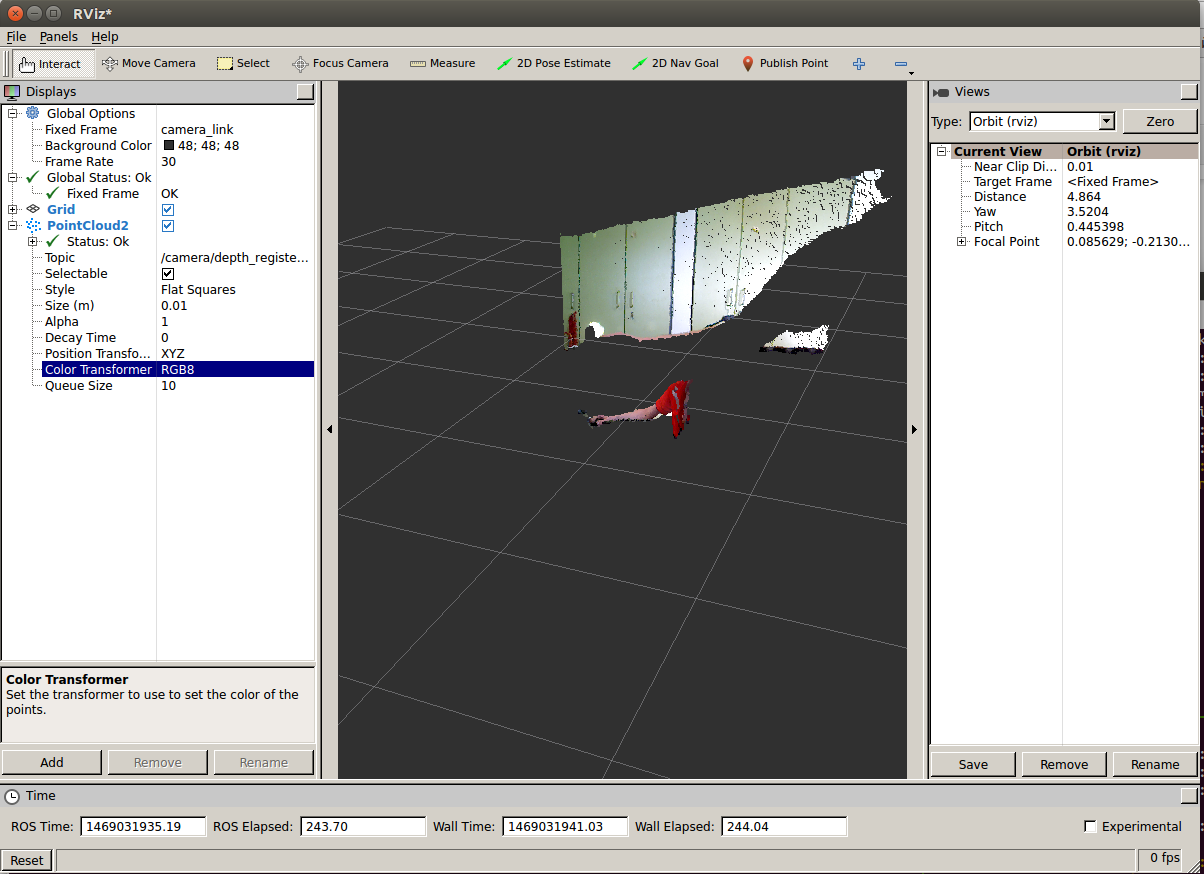

Select the topic / camera / depth_registered / points for the new display

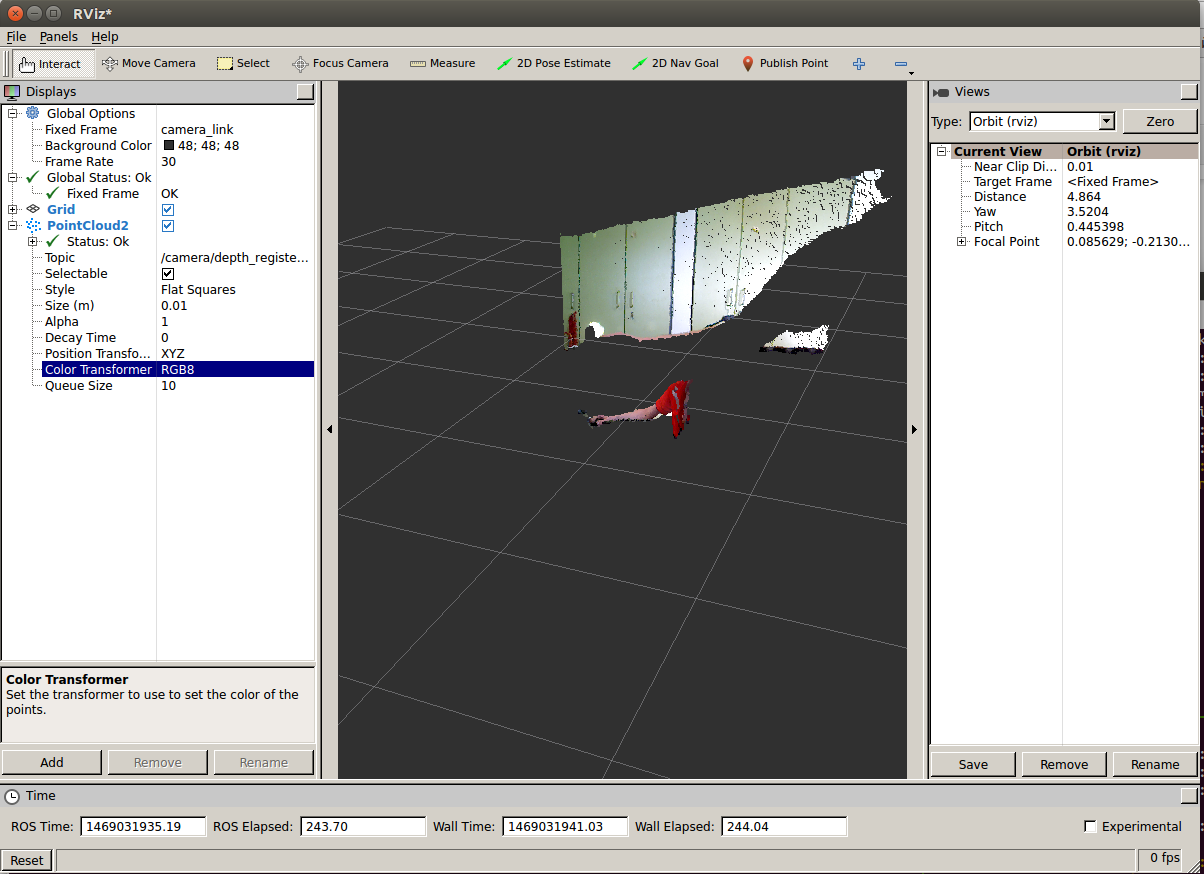

After that, select the value “RGB8” for the Color Transformer field.

Now we will see a similar picture:

The value “RGB8” allows you to display a color “cloud of points”, where each point has coordinates X, Y, Z and color RGB.

I want to draw your attention that Microsoft Kinect Xbox 360 only works with USB 2.0. Connecting the camera via USB 3.0 can lead to a device detection error, which I mentioned at the very beginning of the article.

The official ROS portal has tutorials for working with RGB-D camera data using the Point Cloud Library . These tutorials provide all the necessary information sufficient to start working with “point clouds”.

I wish you good luck in using RGBD cameras in ROS to solve your computer vision problems!

In our experience, connecting a Microsoft Kinect Xbox 360 camera to ROS Indigo under Ubuntu 14.04 often causes problems. In particular, when the openni_launch ROS package is run, the device is not detected and the error “No device connected” is displayed. In this article I describe my step-by-step method for setting up the environment to use Microsoft Kinect from ROS Indigo. If you are interested, read on!

Microsoft Kinect Camera

This sensor has already been described in detail in a separate article. In a nutshell, Microsoft Kinect is a 3D camera (RGB-D, that is, red, green, blue and depth) based on structured light technology, which produces a depth map of the surfaces of objects in the camera's field of view. The depth map can be transformed into a three-dimensional point cloud, in which each point has X, Y and Z coordinates in space and, in some cases, an RGB color. Point clouds are used for object reconstruction, map building in service robotics, object recognition and many other 3D computer vision tasks.
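The step from depth map to point cloud can be illustrated with the standard pinhole camera model: a pixel (u, v) with depth Z maps to X = (u - cx) * Z / fx and Y = (v - cy) * Z / fy. The sketch below is only an illustration, not what the OpenNI driver runs internally. The function name depth_pixel_to_point is hypothetical, and the intrinsics (fx, fy, cx, cy) are assumed nominal values for a 640x480 image rather than calibration results for any particular device.

```shell
#!/bin/sh
# ASSUMED nominal intrinsics for a 640x480 depth image (not calibrated values).
FX=525.0; FY=525.0; CX=319.5; CY=239.5

# Back-project one depth pixel into camera-frame coordinates.
# Arguments: u (column), v (row), z (depth in meters). Prints "X Y Z" in meters.
depth_pixel_to_point() {
    awk -v u="$1" -v v="$2" -v z="$3" \
        -v fx="$FX" -v fy="$FY" -v cx="$CX" -v cy="$CY" \
        'BEGIN { printf "%.3f %.3f %.3f\n", (u - cx) * z / fx, (v - cy) * z / fy, z }'
}

# A pixel at the principal point lies on the optical axis.
depth_pixel_to_point 319.5 239.5 1.0   # prints: 0.000 0.000 1.000
# A pixel 105 columns to the right, 2 m away.
depth_pixel_to_point 424.5 239.5 2.0   # prints: 0.400 0.000 2.000
```

Applying the same computation to every valid pixel of the depth map yields the point cloud; with registration enabled, the color of the matching RGB pixel is attached to each point.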

ROS supports the Kinect sensor. The point cloud received from the sensor can be visualized in rviz.

Two dedicated packages, openni_launch and openni_camera, are used to work with the Kinect sensor in ROS.

Installing the driver for the Microsoft Kinect sensor

First you need to install the OpenNI library, which supports a number of RGB-D sensors (Kinect, ASUS Xtion, PrimeSense).

Install the additional packages that OpenNI needs in order to build:

```shell
sudo apt-get install git build-essential python libusb-1.0-0-dev freeglut3-dev openjdk-7-jdk
sudo apt-get install doxygen graphviz mono-complete
```

Now build OpenNI 1.5.4 from source:

```shell
git clone https://github.com/OpenNI/OpenNI.git
cd OpenNI
git checkout Unstable-1.5.4.0
cd Platform/Linux/CreateRedist
chmod +x RedistMaker
./RedistMaker
```

Now download the avin2 SensorKinect library for the Kinect sensor and unpack the archive:

```shell
unzip avin2-SensorKinect-v0.93-5.1.2.1-0-g15f1975.zip
cd avin2-SensorKinect-15f1975/Bin
```

Depending on the type of system (32 or 64 bits), select the appropriate installer. For a 32-bit system, run:

```shell
tar -xjf SensorKinect093-Bin-Linux-x86-v5.1.2.1.tar.bz2
cd Sensor-Bin-Linux-x86-v5.1.2.1
```

For a 64-bit system, run:

```shell
tar -xjf SensorKinect093-Bin-Linux-x64-v5.1.2.1.tar.bz2
cd Sensor-Bin-Linux-x64-v5.1.2.1
```

Finally, complete the installation:

```shell
sudo ./install.sh
```

Installing the openni_* packages

The last step is to install the openni_launch and openni_camera packages, which let us receive and work with data from OpenNI-compatible depth cameras in ROS. Installing these packages is very simple:

```shell
sudo apt-get install ros-indigo-openni-camera ros-indigo-openni-launch
```

Verifying the driver installation

Now everything is ready! Let's check that the installation succeeded. Run each of the following commands in a separate terminal:

roscore roslaunch openni_launch openni.launch depth_registration:=true rosrun rviz rviz Setting the depth_registration argument: = true indicates that we want to enable OpenNI registration and receive XYZRGB camera data (depth and color).

First, in rviz, expand the Global options section in the left column of the Displays and set the / camera_link value for the Fixed frame field as in the picture:

Thus, we set the necessary coordinate system for correct display of data from the Kinect camera.

In rviz we create a new display. To do this, click the Add button and select the type of display PointCloud2 as in the picture:

Select the topic / camera / depth_registered / points for the new display
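If rviz does not offer this topic, it can help to confirm from a terminal that the driver is actually publishing it. The sketch below assumes roscore and openni.launch are already running; has_topic is a hypothetical helper name, not part of ROS. It reads a topic list on standard input, so the matching logic can be tried without a camera attached.

```shell
#!/bin/sh
# Succeeds if the exact topic name given as $1 appears as a whole line on stdin.
# The input is expected to be one topic per line, as printed by `rostopic list`.
has_topic() {
    grep -Fxq -- "$1"
}

# Live usage (requires a running ROS master and the openni driver):
#   rostopic list | has_topic /camera/depth_registered/points && echo "topic found"

# Offline demonstration with a hand-written topic list:
printf '%s\n' /camera/rgb/image_color /camera/depth_registered/points \
    | has_topic /camera/depth_registered/points && echo "topic found"
```

If the topic is missing from the live list, one thing to check is whether openni.launch was started with depth_registration:=true.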

After that, set the Color Transformer field to “RGB8”.

rviz should now show the scene as a colored point cloud. The “RGB8” value renders the cloud in color, where each point has X, Y, Z coordinates and an RGB color.

Note that the Microsoft Kinect Xbox 360 only works with USB 2.0. Connecting the camera to a USB 3.0 port can lead to the device detection error mentioned at the very beginning of the article.
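When the “No device connected” error appears, a useful first check is whether the operating system sees the Kinect on the USB bus at all. The sketch below filters lsusb output for the vendor:product IDs commonly reported for the Xbox 360 Kinect (045e:02ae camera, 045e:02b0 motor, 045e:02ad audio). These IDs are an assumption that may not hold for every hardware revision, and kinect_components is a hypothetical helper name.

```shell
#!/bin/sh
# Reads `lsusb` output on stdin and prints one line per Kinect component found.
# The USB IDs below are ASSUMED values for the Xbox 360 Kinect.
kinect_components() {
    while read -r line; do
        case "$line" in
            *"ID 045e:02ae"*) echo "camera" ;;
            *"ID 045e:02b0"*) echo "motor" ;;
            *"ID 045e:02ad"*) echo "audio" ;;
        esac
    done
}

# Live usage:
#   lsusb | kinect_components

# Offline demonstration with two sample lsusb lines:
printf '%s\n' \
    'Bus 001 Device 004: ID 045e:02b0 Microsoft Corp. Xbox NUI Motor' \
    'Bus 001 Device 006: ID 045e:02ae Microsoft Corp. Xbox NUI Camera' \
    | kinect_components
```

If the camera is not listed, the problem lies below the ROS level, and moving the sensor to a USB 2.0 port is worth trying before reinstalling any drivers.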

The official ROS portal has tutorials on working with RGB-D camera data using the Point Cloud Library. These tutorials provide enough information to start working with point clouds.

I wish you good luck in using RGB-D cameras in ROS to solve your computer vision problems!

Source: https://habr.com/ru/post/396291/
