How a regional state data center was created

"It is not a place that paints a person, but a person - a place"

We all regularly see reviews of all kinds of data processing centers (DPCs): big, small, underwater, Arctic, innovative, high-performance, and so on. But there are almost no stories about the invisible heroes who work for our benefit in the depths of state institutions, especially in the regions. So I want to share my own experience of creating a data center in the Stavropol region.

Acquaintance

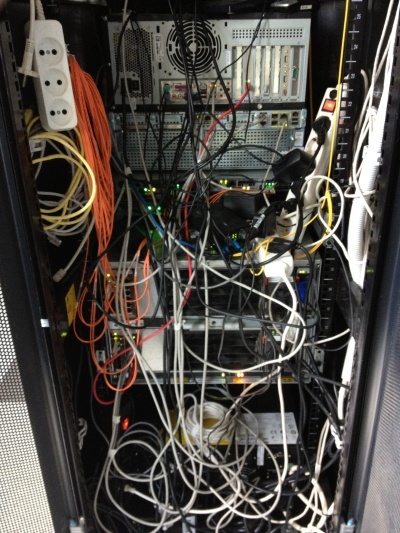

It was a warm summer day; I remember it as if it were yesterday: June 18, 2012. That day, arriving at the walls of our future data center, I saw this very picture, which, frankly, left me somewhat shocked. Meet GKU IC "Regional Center of Information Technologies" at the dawn of its existence.

This is what the only rack in our future data center looked like in June 2012.


It was quite a young organization. When I started, its main task was maintaining the electronic document management system of the public authorities (OGV).

My goal was to create a data center and an organization to serve it. All existing state information systems were to move into the data center, and all newly created ones were to be hosted there as well. At the time, electronic public services were growing rapidly and public authorities were being automated across the board.

Back then, nobody really understood what e-government was. The main thing was the conviction that "it is not the place that makes the person, but the person who makes the place." My team was small, consisting of just me, but for a start that was quite enough, especially since automating routine processes lets you avoid unnecessarily bloating the staff.

And this is how the data center looked just before I left, in July 2014.

Before joining this organization, I worked as a lead specialist in the support department of one of the major banks, and that experience proved very useful in government work. In general, I really liked the banking sector from an IT point of view, but that is a separate story. Here I also had to act, to some extent, as a "gray cardinal", performing the duties of a chief engineer, a position that did not exist on the staff.

The data center I was building had to be not just reliable but also disaster-resilient, high-performance, and cheap. I think that is why I was invited here: the first priority was to make effective use of what already existed. The integrators had only one suggestion: give us more money... That is one of the reasons I did not turn to integrators and the like.

Since virtually all resources moved into our "cloud", we had to introduce the concept of a "public private cloud": the existing terminology of "public" and "private" clouds did not quite fit the way we provided resources. From the outside it was a private cloud; from the inside it was public, but only for state bodies. This had some implications for software licensing.

Some conceptual decisions had been made before I joined the organization, and I had to accept them as given. The project was developed in close cooperation with IBM, Microsoft, and Cisco. Why these vendors? For me, it is simply how things turned out historically. Do I regret it? Not at all! Could other vendors have been used? Of course: for example, Dell, HP, or any others, as well as any combination of them.

VMware vSphere, version 5 at the time, was purchased as the virtualization platform. I think everyone will agree that there was practically no alternative, since the competitors offered nothing comparable to Fault Tolerance.

During the initial audit of the rack capacity available in the city, I found a pair of IBM BladeCenter chassis, an E and an H, populated with HS22 blades: far from the worst, solid mid-range hardware for the time. Their condition, of course, was somewhat deplorable; the lit error indicators were especially irritating.

One of the existing racks, June 2012. Note how the equipment, especially the Cisco gear, was installed "via the box".

For storage, one site had a DS3512 shelf with 2 TB disks, connected over fiber. The other site had DS3512 and DS3524 shelves.

At the backup site, the free space had been carved up in such a way that VMware would not start without manual intervention: it detected other installed copies and stopped, and only launching it with the appropriate key helped. Space was allocated on a one-LUN-per-virtual-machine basis, so when a virtual machine needed more space, its existing LUN had none to spare...

The sites were linked to each other by a narrow 1 Gbit/s data link. There was no dedicated network for virtualization or for service traffic.

After a brief introduction to and audit of the IT infrastructure (brief, because there was practically no infrastructure), I concluded that I had inherited a textbook example of how not to do things. There were no diagrams and no documentation; not everyone even knew the administrator passwords, so they had to be reset and recovered.

I resolutely set to work.

Getting started

When I arrived, this organization, so serious in its future role, had absolutely no IT infrastructure: a lone smart switch, a peer-to-peer network, shared folders on every workstation... In short, everything was exactly as you would imagine it in the regions.

So the first step was to quickly set up the organization's own infrastructure, for which we ordered everything that was available at the time. Armed with a network tester, I traced and labeled every cable. Since there had been no workstation plan when the cables were laid, some places had PCs where phones should have been, some had no cables at all, plus plenty of the other usual "charms".

Unfortunately, when the server room of the future data center was designed, no one provided a raised floor or overhead cable trays. Naturally, there was no money for either, so I had to bring things into shape on my own.

At the time, no one would let me simply shorten the cables: "What if we suddenly have to move the rack to the far corner of the room?" So I mounted a 110-type cross-connect near the ceiling and ran the cables down into the rack from it. If the rack ever had to move, the short cables could be removed from the cross-connect and replaced with longer ones.

The wall-mounted 110 cross-connect panel during installation, along with a punch-down tool for terminating the wires.

It was also decided from the start that patch cords would be color-coded. Since only blue and red cables were available, telephony got red and everything network-related got blue.

A rack before and after installation.

In the rack I found a Panasonic KX-NCP500 office PBX, equipped with 4 external (city) lines and 8 internal lines. The internal lines interested me least of all: after all, it was an IP PBX, and I gradually moved everything to VoIP.

Since I had no serious experience configuring PBXs, I had to tinker a bit. Just realizing that I needed to run our own STUN server was worth something...
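For context: SIP phones behind NAT need to learn their public address and port, and that is what a STUN server reports back to them. The article does not say which STUN implementation was deployed, so what follows is only a minimal client-side sketch of a STUN Binding request as defined in RFC 5389, using the Python standard library. The `query` helper is illustrative; 3478 is the standard STUN port, and the server name you pass in would be your own.

```python
import os
import socket
import struct

MAGIC_COOKIE = 0x2112A442  # fixed value defined by RFC 5389
BINDING_REQUEST = 0x0001
BINDING_SUCCESS = 0x0101
XOR_MAPPED_ADDRESS = 0x0020


def build_binding_request(transaction_id: bytes) -> bytes:
    """Return a 20-byte Binding Request header with no attributes."""
    if len(transaction_id) != 12:
        raise ValueError("transaction ID must be 12 bytes")
    # message type, message length (0), magic cookie, then the 96-bit transaction ID
    return struct.pack("!HHI", BINDING_REQUEST, 0, MAGIC_COOKIE) + transaction_id


def parse_xor_mapped_address(response: bytes, transaction_id: bytes) -> tuple:
    """Return (ip, port) from the IPv4 XOR-MAPPED-ADDRESS of a Binding Success."""
    msg_type, msg_len, cookie = struct.unpack("!HHI", response[:8])
    if msg_type != BINDING_SUCCESS or cookie != MAGIC_COOKIE:
        raise ValueError("not a STUN Binding Success response")
    if response[8:20] != transaction_id:
        raise ValueError("transaction ID mismatch")
    offset, end = 20, 20 + msg_len
    while offset + 4 <= end:
        attr_type, attr_len = struct.unpack("!HH", response[offset:offset + 4])
        value = response[offset + 4:offset + 4 + attr_len]
        # family byte 0x01 means IPv4
        if attr_type == XOR_MAPPED_ADDRESS and len(value) >= 8 and value[1] == 0x01:
            port = struct.unpack("!H", value[2:4])[0] ^ (MAGIC_COOKIE >> 16)
            raw_ip = struct.unpack("!I", value[4:8])[0] ^ MAGIC_COOKIE
            return socket.inet_ntoa(struct.pack("!I", raw_ip)), port
        offset += 4 + attr_len + (-attr_len % 4)  # attributes are padded to 4 bytes
    raise ValueError("no IPv4 XOR-MAPPED-ADDRESS attribute found")


def query(server: str, port: int = 3478, timeout: float = 2.0) -> tuple:
    """Send one Binding Request over UDP and return our public (ip, port)."""
    tid = os.urandom(12)
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.settimeout(timeout)
        sock.sendto(build_binding_request(tid), (server, port))
        data, _ = sock.recvfrom(2048)
    return parse_xor_mapped_address(data, tid)
```

The sketch handles only IPv4 and makes a single attempt; a real client would retransmit on timeout, as the RFC describes.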

The organization had no network of its own as such: all computers sat in one large flat intranet. That did not suit me, as someone who knows information security from practice rather than hearsay. I installed and configured a FreeBSD router at the network border and segmented the network so that all traffic between segments could be controlled. With this approach, an incident usually affects only one segment.
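The article does not list the router's actual rules. As an illustration of the default-deny idea behind this segmentation, the Python sketch below models the policy a border router could enforce: traffic flows freely inside a segment, and crosses between segments only when an explicit (source, destination, port) rule permits it. The segment names, subnets, and rules are all hypothetical; on FreeBSD itself, such a policy would be written for a firewall such as pf or ipfw, not in Python.

```python
from ipaddress import ip_address, ip_network

# Hypothetical segments; the article does not publish the real addressing plan.
SEGMENTS = {
    "servers": ip_network("10.0.10.0/24"),
    "staff": ip_network("10.0.20.0/24"),
    "voip": ip_network("10.0.30.0/24"),
}

# Hypothetical explicit permits: (source segment, destination segment, destination port).
ALLOWED = {
    ("staff", "servers", 443),   # web applications
    ("staff", "servers", 25),    # SMTP (illustrative)
    ("voip", "servers", 5060),   # SIP to the PBX
}


def segment_of(addr: str):
    """Return the name of the segment containing addr, or None if unknown."""
    ip = ip_address(addr)
    for name, net in SEGMENTS.items():
        if ip in net:
            return name
    return None


def is_allowed(src: str, dst: str, port: int) -> bool:
    """Default deny between segments; only listed flows may cross the router."""
    src_seg, dst_seg = segment_of(src), segment_of(dst)
    if src_seg is None or dst_seg is None:
        return False  # unknown addresses are dropped
    if src_seg == dst_seg:
        return True  # same segment: in this model the router is not involved
    return (src_seg, dst_seg, port) in ALLOWED
```

Because anything not listed is denied, a compromised workstation in one segment cannot reach other segments except through the few permitted ports, which is why an incident tends to stay contained.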

As for the wider network, the only thing that was clear was that it existed somewhere. I had to reconstruct the entire topology from the equipment configurations, then carefully draw and document it. Gradually the configs took on a human appearance, with descriptions and a consistent naming scheme.

Almost half a year later, I finally found the documentation for the state bodies' network. Unfortunately, 90% of it did not match reality. It was very well written, one of the few documents you could actually work from, but judging by the settings, nobody ever had.

As I surveyed each node of the network, I brought it up to date, since almost all the equipment was running very outdated software. In some places this was not strictly necessary, but in others it fixed existing problems.

The site's main page: old (left, 2012) and new (right, 2016).

We also developed a website and, in parallel, set up our own name servers, shared hosting, and a mail service. I am extremely suspicious of organizations, especially public institutions, whose employees use email addresses on public email services.

All in all, I think those first couple of months were very productive.

First stage

Initially, the data center was used for nothing but interdepartmental document workflow. Document workflow is undoubtedly one of the most important parts of public authorities' work. The complexity of paper workflows is often the source of many problems in how our state operates, and electronic workflow is the way out.

I think the most interesting question is: what actually powers e-government inside the data center?

The data center consists of several geographically distributed sites, which implements disaster recovery, and it operates 24/7/365. The sites are interconnected by a 32-fiber single-mode trunk.

The core of the data center is pairs of IBM HS22 and HS23 blades (now Lenovo) distributed across the sites. Each chassis holds 14 blades; at the initial stage, five were installed.

Each blade has two processors (E5650, if I'm not mistaken: 6 cores, 12 MB cache) and, to begin with, 192 GB of RAM. The blades have no disks: to separate compute from storage as much as possible, I decided to boot each one from an internal USB flash drive holding the VMware image, and logs are written to the shared storage. Each blade has a 2 Gbit/s uplink, which can be raised to 10 Gbit/s by installing the appropriate switch in the chassis and a network card in each blade.

Our main 32-fiber trunk.

The hypervisor is VMware vSphere Standard (version 5.5 by the time I left). I saw no point in a more advanced edition back then: the functionality on offer was ample, and whatever was missing we could write ourselves.

Later, the number of blades was increased with the somewhat more powerful IBM HS23 servers.

Power redundancy differed between sites, but every site had at least two power feeds. In addition, each rack has its own rack-mount UPS units, installed in pairs, and each device's power supplies are fed from different sources. Maybe that is overkill, but there were a couple of occasions when the rack-mount UPSs saved the day. In systems like these, there is no such thing as too much redundancy.

Cooling also differed. At the main site, we used wall-mounted industrial air conditioners with a balancing unit, keeping the temperature at 21 °C. At the other site, there were industrial floor-standing air conditioners with underfloor air supply.

IBM SVC, our storage controller.

The storage system is scalable and built on the IBM SVC controller, which gave us redundancy at the level of RAID 6 + 1, multipath redundancy, and 8 Gbit/s fiber uplinks. Any part of the data center could switch to standalone operation at any moment, whether because of an accident or for routine maintenance.
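The article does not spell out what "RAID 6 + 1" meant in this setup. Assuming it refers to RAID 6 arrays whose volumes are additionally kept as two mirrored copies (IBM SVC supports volume mirroring), usable capacity can be estimated as in the sketch below. The example layout, two 12-disk DS3512 shelves each configured as one RAID 6 array of 2 TB disks, is hypothetical.

```python
def raid6_usable_tb(disks: int, disk_tb: float) -> float:
    """Usable capacity of one RAID 6 array: two disks' worth holds parity."""
    if disks < 4:
        raise ValueError("RAID 6 needs at least 4 disks")
    return (disks - 2) * disk_tb


def mirrored_raid6_usable_tb(arrays: int, disks_per_array: int, disk_tb: float) -> float:
    """Assumed reading of "RAID 6 + 1": RAID 6 arrays mirrored in pairs.

    Every volume exists on two arrays, so usable space is half of the
    total RAID 6 capacity.
    """
    if arrays < 2 or arrays % 2:
        raise ValueError("mirroring needs an even number of arrays")
    return arrays * raid6_usable_tb(disks_per_array, disk_tb) / 2


# Hypothetical example: two 12-disk shelves, each one RAID 6 array of 2 TB disks.
raw_tb = 2 * 12 * 2
usable_tb = mirrored_raid6_usable_tb(arrays=2, disks_per_array=12, disk_tb=2)
print(raw_tb, usable_tb)
```

Under these assumptions, 48 TB of raw disk yields 20 TB of usable space: the price of surviving two disk failures in each array plus the loss of an entire array.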

In the operational data center, nearly all physical resources are virtualized: storage via IBM SVC, CPU and RAM via VMware vSphere. If you count the VLANs on the switches, the network infrastructure can be considered virtualized too.

I connected and configured almost every piece of equipment that supports remote management. At the remote sites everything is manageable, including through switched power outlets (although these appeared later, in the second stage), so any failed device without its own remote management can be rebooted by cycling its power.

The data center was built in several stages. The first stage consisted of establishing order and creating the cloud infrastructure. The initial goal was to build a virtualized storage tier based on IBM SVC.

As the plan progressed, servers previously hosted at third-party organizations began moving to our site. For example, we moved in several old IBM servers running live services; together they drew more power and produced more heat than a whole BladeCenter chassis. Naturally, their services gradually migrated into the "cloud".

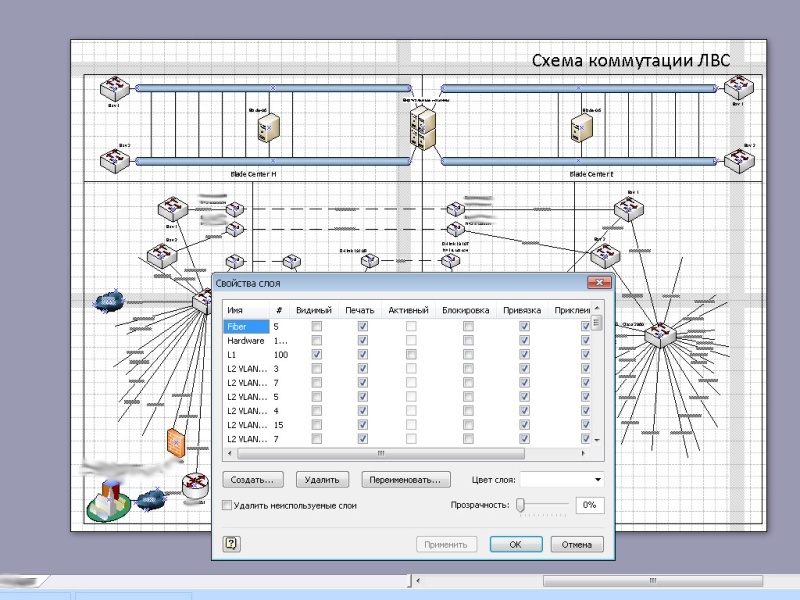

Rack layout in the editor.

First of all, the plan. By then I could already work as I saw fit: there were several completed projects behind me, and failure-free operation had earned me trust. So I first assembled the racks in the editor, debating and arguing with myself along the way.

The process of mounting equipment in a rack.

The installation followed standards, best practices, and recommendations, including those in the IBM Redbooks. At first, of course, my "let's read the documentation" was ridiculed. But after a server refused to go into place by trial and error, because for one piece of equipment the rails were too far apart and for another too close, I looked up the standard rack assembly dimensions in a Redbook, and from then on everything fit the first time.

The first stage of the data center, June 2013. In the background hangs the High Administrator's tambourine.

By then I had been working for the state at this organization for almost a year. In all that time we never used integrators or any other contractors. To be honest, a few times I did ask colleagues at IBM for help with their equipment, and together we solved the problems that came up, for which I am very grateful to them.

Already at this stage the data center had become a showcase and regularly received visitors, who came to see how IT infrastructure should work and look.

This is how IT infrastructure should not look. The photo was taken on my second day at work, 2012.

As the data center took shape, it became clear that resources had to be delivered efficiently to their consumers, the government bodies. Moreover, some of the "peculiarly" written information systems that had moved to the cloud were pushing very large amounts of data over the network.

Access exclusively over the Internet did not seem like a good idea, since it could not provide sufficient speed, and upgrading Internet connections in every state body is extremely expensive. So we decided to build our own network: in terms of both cost and security, this turned out to be far more advantageous than renting channels from, say, Rostelecom.

Why wasn't this done from the start? The answer is simple: there were no qualified in-house specialists, only external contractors, and they were keen to keep everything outsourced.

At this point I had to design and build what was essentially a service provider's infrastructure. Along the way, we had to audit many of the state bodies' networks. Of course, some state bodies had quite strong IT departments (for example, the Ministry of Finance, the Ministry of Defense, the government office, and others). But some were frankly weak, unable even to crimp a cable. Those I had to take completely under my wing, since my qualifications allowed it.

Among other things, this meant developing a standard network architecture for public authorities, a target for everyone to work toward. Standardization and uniformity are the key to efficient operations. By my preliminary estimate, each person in our organization should have been responsible for at least 100-150 managed objects.

One of the Cisco equipment deliveries.

Besides the obvious VLANs, we of course used other modern technologies that simplify administration: OSPF, VTP, PVST, MSTP, HSRP, QoS, and so on. I would have liked to set up stateful redundancy, but unfortunately the ASR hardware did not have enough resources. We never got as far as MPLS either, though it was not really needed.

As the network grew, I took over management of the public authorities' (OGV) equipment, configuring it properly along the way. While connecting administrations across the region, I had to do a fair amount of explaining and training and help colleagues in district and rural administrations.

At the first stage, the total uplink between sites was only a few gigabits, but I had already set aside a dedicated channel for vSphere traffic.

The network turned out to be highly distributed geographically, with quite a large number of remote nodes connected via L2/L3 VPN.

Of course, much could have been solved with serious money, but I managed with what we had. Often the existing equipment was being used inefficiently, so I simply found better places for it. Especially in the first year, everyone was very skeptical about the project's prospects.

But after the first year, when our organization demonstrated direct and indirect budget savings of tens of millions of rubles, attitudes changed dramatically.

One of the network diagrams. For obvious reasons, most of the nodes are hidden.

Second stage

In the second stage, we had to increase the data center's hardware capacity. This required replacing the E chassis with an H chassis and reassembling the existing racks. Disk space was expanded by adding more HDD shelves.

At the top are the IBM DS3512 hard drive shelves and a DS3524 shelf.

A few more physical servers moved in, and the number of virtual machines grew significantly.

We added backup based on an IBM TS3200 tape library.

In the foreground is the IBM TS3200 tape library.

Naturally, I started installing the new equipment with careful planning. This time we had to model the racks at both sites.

Planning the data center expansion.

The move was completed as quickly as possible. Since virtualization was fully operational by then, the move went completely unnoticed: before any equipment at one site was moved or shut down, all virtual servers were migrated to the other site, and once the work was finished, everything was moved back.
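The article does not describe how capacity was checked before evacuating a site. One simple way to confirm that the surviving site can absorb every VM is first-fit-decreasing bin packing on RAM, sketched below in Python. Only RAM is considered, the VM and host figures in the usage are hypothetical, and the live migration itself remains vSphere's job.

```python
from typing import Dict, Optional


def plan_evacuation(vms: Dict[str, int], hosts: Dict[str, int]) -> Optional[Dict[str, str]]:
    """Place VMs (name -> RAM in GB) onto hosts (name -> free RAM in GB).

    First-fit decreasing: take VMs from largest to smallest and put each on
    the first host that still has room. Returns a VM -> host mapping, or None
    if some VM cannot be placed.
    """
    free = dict(hosts)  # work on a copy so the caller's dict is untouched
    placement = {}
    for vm, ram in sorted(vms.items(), key=lambda item: item[1], reverse=True):
        for host, available in free.items():
            if available >= ram:
                free[host] = available - ram
                placement[vm] = host
                break
        else:
            return None  # no host can take this VM
    return placement
```

For example, `plan_evacuation({"db": 96, "docflow": 64, "portal": 48, "mail": 32}, {"blade-b1": 128, "blade-b2": 128})` fits everything onto two hosts, while a `None` result is the signal that a site cannot be emptied safely. First-fit decreasing is a heuristic: it can occasionally report `None` when a cleverer packing would fit.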

Of course, besides the main site, we also put the backup site in order. With a fully functioning backup system in place, it was finally possible to clean things up there too.

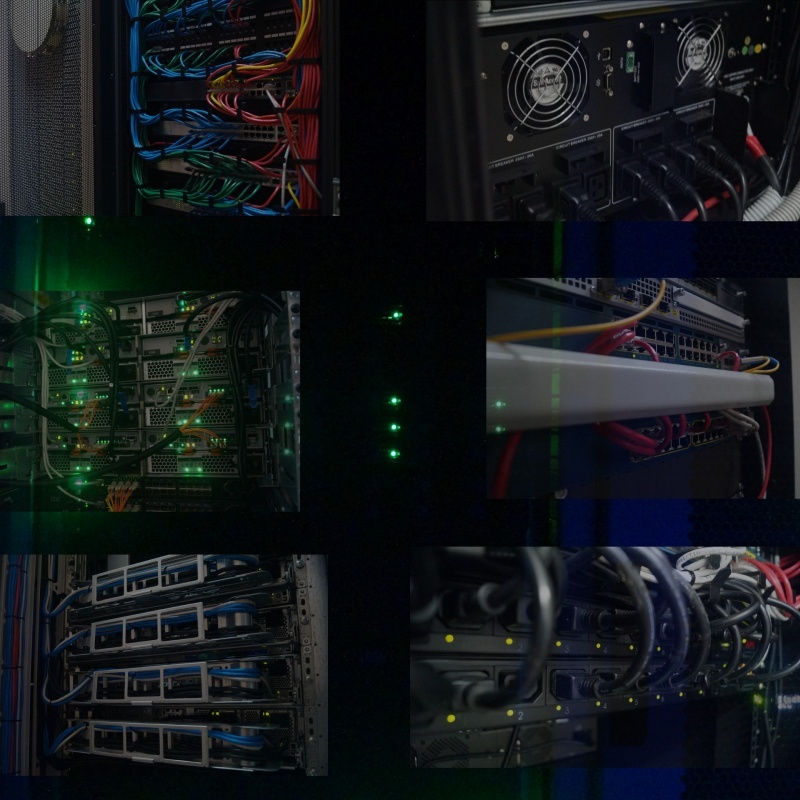

The racks after the capacity expansion, 2014.

Since by then our data center had become a showcase, I decided to pay attention to details: unused units were closed with black blanking panels, and perforated panels were installed where ventilation was needed. I even removed the mounting bolts that hold the equipment in the racks and spray-painted them black. A trifle, but a nice one.

Racks at the backup site during installation (left) and after completion (right).

To get rid of media converters lying around everywhere, we bought a pair of D-Link DMC-1000 chassis, which also provide redundant power to the media converters thanks to their dual power supplies.

Meanwhile, the network was being upgraded. I closed the data network core into a ring running at 20 Gbit/s between sites. By optimizing the existing equipment, the service network for the virtual infrastructure got a 10 Gbit/s uplink, enough bandwidth to migrate almost all the blades at once.
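A rough worked estimate shows why the jump from 1 to 10 Gbit/s mattered. Live migration has to copy each VM's memory across the service network, so a lower bound on the time is the RAM volume divided by the usable bandwidth. The sketch assumes a hypothetical 80% link efficiency and a single copy pass; real migrations also resend pages that change during the copy, so actual times are longer.

```python
def copy_seconds(ram_gb: float, link_gbit: float, efficiency: float = 0.8) -> float:
    """Lower-bound time to copy ram_gb of memory once over a link_gbit link.

    efficiency is a hypothetical share of the nominal bandwidth actually
    available to migration traffic; pages re-dirtied during the copy are ignored.
    """
    return ram_gb * 8 / (link_gbit * efficiency)  # GB -> Gbit, then divide by usable Gbit/s


# Hypothetical worst case: five blades with all 192 GB of RAM in use.
total_ram_gb = 5 * 192
for link in (1, 10):
    print(f"{link:>2} Gbit/s: {copy_seconds(total_ram_gb, link) / 60:.0f} min")
```

Under these assumptions, moving 960 GB takes about 16 minutes at 10 Gbit/s versus roughly 2.7 hours at 1 Gbit/s.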

SNR equipment turned out to be pleasantly compatible with our existing Cisco and Brocade gear. Admittedly, finding Brocade equipment in the chassis was an unpleasant surprise at first, since I had never worked with it before. Fortunately, knowing how networks work in principle let me get to grips with it quickly.

I consider neatness an important part of the work. Everything should not only work well but also look good: the more order there is, the higher the reliability. I was lucky not to be the only one with this approach, so together with a colleague we established what I believe was perfect order in our racks.

There must be order everywhere.

Meanwhile

In parallel with physically building the data center and the state bodies' network, software for e-government was being developed, and I also had the opportunity to take part, both in implementation and deployment and in shaping the overall approach. For supporting the right approach, many thanks to the leadership of the time.

I regularly had to audit databases, push developers to use indexes, catch resource-intensive queries, and optimize bottlenecks. One of the systems had no indexes at all apart from primary keys. As a result, once intensive use began, database performance degraded sharply, and I had to add the indexes myself.
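The article does not name the DBMS involved. Using SQLite from Python's standard library as a stand-in, this sketch shows the effect described above: without an index on the filtered column, the planner has to scan the whole table; once the index exists, it can seek directly to the matching rows. The `documents` table and `author_id` column are hypothetical.

```python
import sqlite3

QUERY = "SELECT title FROM documents WHERE author_id = ?"


def make_db(rows: int = 10_000) -> sqlite3.Connection:
    """Build an in-memory table that has only a primary key, no other indexes."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE documents (id INTEGER PRIMARY KEY, author_id INTEGER, title TEXT)")
    conn.executemany(
        "INSERT INTO documents (author_id, title) VALUES (?, ?)",
        ((i % 500, f"doc {i}") for i in range(rows)),
    )
    return conn


def query_plan(conn: sqlite3.Connection, sql: str, params=()) -> str:
    """Return SQLite's EXPLAIN QUERY PLAN detail strings for a statement."""
    return " | ".join(row[-1] for row in conn.execute("EXPLAIN QUERY PLAN " + sql, params))


if __name__ == "__main__":
    conn = make_db()
    print(query_plan(conn, QUERY, (42,)))  # a SCAN over the whole table
    conn.execute("CREATE INDEX idx_documents_author ON documents (author_id)")
    print(query_plan(conn, QUERY, (42,)))  # a SEARCH using idx_documents_author
```

The exact wording of the plan varies between SQLite versions (for example `SCAN documents` versus `SCAN TABLE documents`), but the switch from a full scan to an index search is the point.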

Thanks to timely intervention in the development process, all the state systems under development were made cross-platform. Wherever there was no pressing need for Windows, everything ran on Linux-family systems. As it turned out, the main obstacle to cross-platform applications was non-portable path notation: often, simply replacing one kind of slash with the other made a state system cross-platform overnight.
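A small Python illustration of the path problem: a separator baked into a string literal ties the code to one OS, while building paths with `pathlib` lets the standard library choose the separator. The function and file names are hypothetical. As a stopgap, most Windows file APIs also accept forward slashes, which is why swapping slashes was often enough.

```python
from pathlib import Path, PurePosixPath, PureWindowsPath


def hardcoded_path(*parts: str) -> str:
    """Fragile: a Windows-only backslash baked into the string."""
    return "\\".join(parts)


def portable_path(*parts: str) -> Path:
    """Portable: pathlib inserts the separator of the OS the code runs on."""
    return Path(*parts)


# On Linux the hard-coded string is one odd file name, not three path components.
print(PurePosixPath(hardcoded_path("data", "uploads", "report.pdf")).parts)
# pathlib renders the same components correctly for either OS.
print(PurePosixPath("data", "uploads", "report.pdf"))
print(PureWindowsPath("data", "uploads", "report.pdf"))
```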

Once the network covered virtually all public authorities (OGV), I was ready to start providing Internet access, traffic filtering, anti-virus protection, and protection against attacks from both inside and outside the OGV. Among other things, this was expected to bring significant budget savings through more efficient use of bandwidth.

By creating a single technical support service for the public authorities, I planned to create high-tech jobs and concentrate the core of qualified workplace support within our organization, leaving mainly technicians on site, while raising the skills of local specialists through conferences, webinars, training sessions, and so on...

The third stage of the data center's modernization was supposed to be the most interesting one for me. By the end of the second stage, we had established a dialogue with the developer of the domestic Elbrus processors and got access to their test bench, and it became clear that at least a third of the functionality of the systems in use could be moved to the domestic hardware platform! The next hardware version of the domestic processor was due out in 2015... and funds for purchasing the servers had been included in the following year's budget...

Summing up

But my dream of moving public services to a domestic hardware platform did not come true (nor did my other plans): I had to move to a job that was no less interesting but better paid. It is a pity, of course; I think I could have given the adoption of the domestic hardware platform a good push, and this was before the wave of import substitution.

By the time I left this organization, I had planned, built, deployed, or taken part in creating the following, based on our data center:

- the data center and the network of state bodies (http://cit-sk.ru/);

- the regional portal of state and municipal services (http://gosuslugi26.ru/);

- http://control26.ru/;

- http://stavregion.ru/;

- IP telephony (Asterisk);

- ViPNet;

- the mail service (@stavregion.ru);

- VDS hosting;

- the register of state services of the Stavropol Krai;

- centralized update services for WSUS and antivirus software;

- site monitoring and statistics collection;

- etc.

In total, more than 120 virtual servers were running on our 7 pairs of physical servers, using about 30-40% of CPU and RAM and about 50% of the storage. At the time, our staff numbered about 30-35 people in total, including all administrative and management staff and the call-handling service.
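A quick worked check of those figures: 120 VMs on 7 pairs (14 hosts) is roughly 8.6 VMs per host. If every VM added the same load (a simplifying assumption) and peak utilization was 40%, raising the target to a hypothetical 70% would leave room for about 210 VMs on the same hardware.

```python
def vms_per_host(vms: int, hosts: int) -> float:
    """Average consolidation ratio."""
    return vms / hosts


def capacity_at_target(vms: int, current_pct: int, target_pct: int) -> int:
    """VMs the same hardware could hold at target_pct utilization.

    Assumes every VM adds the same load, so VM count scales linearly with
    utilization; integer percentages keep the arithmetic exact.
    """
    return vms * target_pct // current_pct


print(round(vms_per_host(120, 7 * 2), 1), capacity_at_target(120, 40, 70))
```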

The efficiency, I am sure, speaks for itself.

There is much more I could tell about how almost every one of these services came to be, but that would make a rather weighty volume of memoirs.

Thanks

- First, to you, dear reader, for reading this far.

- To my wife, for her help and support.


Source: https://habr.com/ru/post/395197/
