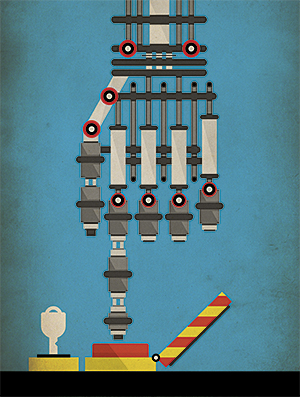

Will we give military robots a license to kill?

As the AI of military robots improves, the very nature of war is changing.

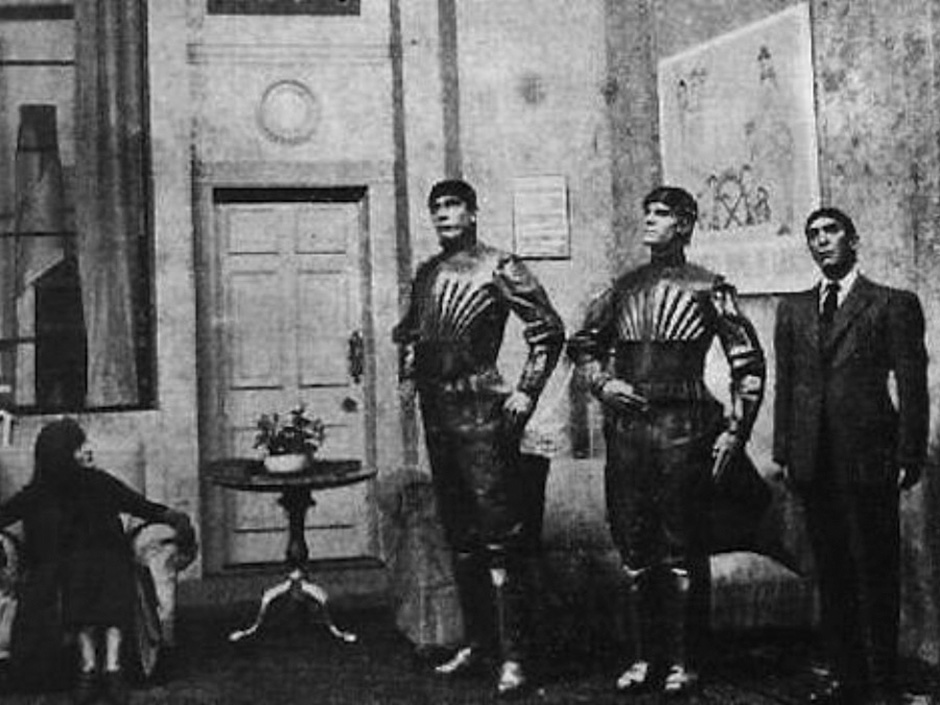

In 1920 the Czech writer Karel Čapek wrote the play R.U.R. (Rossum's Universal Robots), which introduced the word "robot" to the world. The play begins with artificial people, robots that work in factories and produce cheap goods, and ends with the robots destroying humanity. Thus was born one of science fiction's most enduring plots: robots slip out of control and become unstoppable killing machines. Twentieth-century literature and film kept supplying examples of robots destroying the world, and Hollywood has turned the theme into blockbuster franchises: The Matrix, Transformers, and The Terminator.

Recently, fears of fiction becoming reality have been fed by a convergence of factors, including advances in AI and robotics and the spread of military drones and ground robots in Iraq and Afghanistan. Major powers are developing intelligent weapons with varying degrees of autonomy and lethality. For now, most of them will be remotely controlled by people, and without a human command the robot will not be able to pull the trigger. But most likely, and according to some beyond any doubt, robots will at some point be able to operate fully autonomously, and a Rubicon will be crossed in warfare: for the first time, a set of microchips and software will decide whether a person lives or dies.

Not surprisingly, the prospect of killer robots, as they have been nicknamed, has sparked a passionate debate. On one side are those who fear that autonomous weapons will start a general war and destroy civilization; on the other are those who argue that the new weapons are just another class of precision-guided munitions that will reduce rather than increase the number of casualties. In December, more than a hundred countries will discuss this issue at a UN disarmament meeting in Geneva.

A drone controlled by humans

The Phalanx gun, capable of firing on its own

Last year the debate made headlines when a group of leading AI researchers called for a ban on "offensive autonomous weapons beyond meaningful human control." In an open letter presented at an international AI conference, the researchers warned that such weapons could trigger an international AI arms race and be used for assassinations, destabilizing nations, subduing populations, and selectively killing particular ethnic groups.

The letter was signed by more than 20,000 people, including such well-known figures as physicist Stephen Hawking and entrepreneur Elon Musk. Last year Musk donated $10 million to a Boston-based institute dedicated to protecting people from the hypothetical threat of unfriendly AI. The letter's organizers, Stuart Russell of the University of California, Berkeley, Max Tegmark of MIT, and Toby Walsh of the University of New South Wales in Australia, explained their position in an article for IEEE Spectrum. In one scenario, for example, "large numbers of low-cost microrobots could appear on the black market, allowing a single person to kill thousands or millions of people who fit the user's criteria."

They also added that "autonomous weapons could become weapons of mass destruction. Some countries may ban their use, while other countries, and certainly terrorists, are unlikely to give them up."

It is hard to argue that a new arms race, one that could produce intelligent, autonomous, mobile killing machines, will serve the good of humanity. Nevertheless, this race has already begun.

Autonomous weapons have existed for several decades, although the few examples have so far been used mainly for defense. One example is the Phalanx, a computer-controlled, radar-guided gun mounted on US Navy warships. It can automatically detect, track, evaluate, and fire on incoming missiles and aircraft that it judges to be a threat. In fully autonomous mode, no human involvement is required.

A Harop drone destroys a target

DoDAAM Systems demonstrates the capabilities of the Super aEgis II sentry robot

Recently, military developers have begun building offensive autonomous robots. Israel Aerospace Industries has built the Harpy and Harop drones, which home in on radio emissions from enemy air defense systems and destroy them by crashing into them. The company says its drones sell well around the world.

The South Korean company DoDAAM Systems has developed the Super aEgis II sentry robot. It is armed with a machine gun and uses computer vision to detect and fire on targets (people) at a distance of up to 3 km. The South Korean military has reportedly tested these robots in the demilitarized zone on the border with North Korea. DoDAAM has already sold 30 such units to other countries, including several customers in the Middle East.

Today, highly autonomous systems are few compared with robotic weapons that humans control almost all the time, and especially when firing. Analysts predict that as warfare evolves, weapons will acquire more and more autonomous capabilities.

"War will be completely different, and automation will play a role wherever speed matters," says Peter Singer, an expert on military robotics at New America, a nonpartisan think tank in Washington. He believes that in future battles, such as fights between drones or an encounter between an autonomous ship and an autonomous submarine, the weapon with a split-second advantage will decide the outcome. "There may be sudden and intense conflicts in which there simply will not be time to get humans up to speed, because everything will be decided in a matter of seconds."

The US military has described its long-term plans for waging new kinds of warfare with unmanned systems, but whether it intends to arm these systems remains unclear. At a Washington Post forum in March, US Deputy Secretary of Defense Robert Work, whose job is to make sure the Pentagon keeps pace with the latest technology, stressed the need to invest in AI and robotics. In his view, the growing presence of autonomous systems on the battlefield is "inevitable."

Regarding autonomous weapons, Work insists that the US military "will not delegate lethal authority to a machine to make a decision." However, he added that "if a competitor is more willing to delegate such authority... we will have to make decisions on how best to compete. We have not fully figured it out, but we think about it a lot."

Vladimir Putin watches a military cyborg riding a quad bike during last year's demonstration

First flight of the CH-5 drone built by the China Aerospace Science and Technology Corporation

Russia and China are pursuing a similar strategy, developing unmanned combat systems for operations on land, at sea, and in the air that, although armed, still depend on human operators. Russia's Platform-M is a small remote-controlled robot armed with a Kalashnikov assault rifle and a grenade launcher. It resembles the American Talon SWORDS system, a ground robot capable of carrying an M16 and other weapons that was tested in Iraq. Russia has also built the Uran-9 unmanned vehicle, armed with a 30-mm cannon and guided anti-tank missiles. And last year the Russians demonstrated a humanoid robot to Putin.

China's growing arsenal of military robots includes many attack and reconnaissance drones. The CH-4 is a long-endurance drone that resembles the US Predator. The Divine Eagle is a high-altitude drone designed to hunt stealth bombers. In addition, machine-gun-armed robots similar to Platform-M and Talon SWORDS have been shown at various military exhibitions in China.

All three countries, while building armed robots and increasing their autonomy, stress that humans will keep a role in their operation. This creates a serious problem for any ban on autonomous weapons: it would not necessarily apply to weapons that are almost autonomous. Militaries could always covertly develop armed robots that are controlled by humans but switch to autonomous operation at the push of a button. "It will be very difficult to impose an arms control agreement on robots," concludes Wendell Wallach, an expert on ethics and technology at Yale University. "The difference between an autonomous and a non-autonomous weapon may be just one line of code," he said at a conference.

In films, robots sometimes become autonomous abruptly, even gaining consciousness almost out of nowhere and taking people by surprise. In the real world, despite the general excitement about progress in machine learning, robots gain autonomy gradually. The same can be expected of autonomous weapons.

An anthology of killer robots

R.U.R. (1920). Not only the first robots, but also their first uprising

2001: A Space Odyssey (1968). HAL 9000, the spacecraft's onboard computer, one of the most notorious evil AIs

Westworld (1973). Robots in an amusement park run amok

Blade Runner (1982). A gang of replicants arrives in Los Angeles

The Terminator (1984). Arnold Schwarzenegger's T-800, the canonical image of the killer robot

RoboCop (1987). The film's villains are rich, greedy businessmen, but the heavily armed two-legged robot also wreaks plenty of havoc

The Matrix (1999). Intelligent machines have taken over the world and use terrifying robots to hunt humans

Battlestar Galactica (2004). The Cylons, humanlike robots, set out to wipe humanity from the face of the galaxy

I, Robot (2004). Humanoid robots circumvent the Three Laws of Robotics and wreak havoc in Chicago

Transformers (2007). A gang of alien robots led by Megatron tries to wipe out humanity

"Often when people hear about autonomous weapons, they picture the Terminator and start saying, 'What have we done?'" says Paul Scharre, director of the future of warfare program at the Center for a New American Security, a research group in Washington. "But that is probably the last kind of autonomous weapon the military would want." In his view, autonomous systems will most likely attack military targets such as radars, tanks, ships, submarines, and aircraft.

Target identification, determining whether an object belongs to the enemy, is one of the most critical tasks for AI. Moving targets such as aircraft and missiles follow trajectories that can be tracked, and the decision to shoot them down can be based on those tracks. This is how the Phalanx gun on US warships works, as does Israel's Iron Dome missile interception system. But when people are the targets, identification becomes much harder. Even under ideal conditions, object and scene recognition tasks that humans handle routinely can be extremely difficult for robots.
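To make "a trajectory that can be tracked" concrete, here is a minimal sketch of one textbook way to judge whether a moving object is a threat: extrapolate its track at constant velocity and compute the closest point of approach (CPA) to the defended position. This is not the Phalanx's or Iron Dome's actual algorithm, which the article does not describe; the constant-velocity model, the function names, and the 500 m / 30 s thresholds are all illustrative assumptions.

```python
import math
from typing import Tuple

Vec = Tuple[float, float]  # (x, y) in meters or meters per second


def closest_approach(p: Vec, v: Vec) -> Tuple[float, float]:
    """Return (time_s, distance_m) of closest approach to the origin
    (the defended ship) for a target at position p with constant velocity v."""
    speed_sq = v[0] ** 2 + v[1] ** 2
    if speed_sq == 0.0:
        return 0.0, math.hypot(p[0], p[1])  # stationary target
    # Minimizing |p + v*t| over t gives t* = -(p . v) / |v|^2.
    t = -(p[0] * v[0] + p[1] * v[1]) / speed_sq
    t = max(t, 0.0)  # if the target is moving away, the closest point is now
    x, y = p[0] + v[0] * t, p[1] + v[1] * t
    return t, math.hypot(x, y)


def is_threat(p: Vec, v: Vec,
              danger_radius_m: float = 500.0,  # hypothetical threshold
              horizon_s: float = 30.0) -> bool:  # hypothetical threshold
    """Flag a track whose predicted miss distance is small and imminent."""
    t, d = closest_approach(p, v)
    return d <= danger_radius_m and t <= horizon_s


# An object 5 km out flying straight at the ship at 300 m/s.
print(is_threat((5000.0, 0.0), (-300.0, 0.0)))
# An aircraft 5.8 km away flying away from the ship.
print(is_threat((5000.0, 3000.0), (0.0, 200.0)))
```

The first track is flagged because its predicted miss distance is zero about 17 seconds out; the second is not. The paragraph's point is visible in the inputs: the check needs only positions and velocities, whereas deciding whether a person is a combatant needs information no trajectory provides.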

A computer can pick out a human figure, even one moving stealthily. But it is hard for an algorithm to understand what people are doing and what intentions their body language and facial expressions reveal. Is that man raising a rifle or a rake? Is he holding a bomb or a baby?

Scharre argues that robotic weapons that try to select targets on their own will falter in the face of such difficulties. In his view, the best approach from the standpoint of safety, legality, and ethics is to develop tactics and technologies in which humans and robots work together. "The military can invest in advanced robotics and automation but keep a human in the loop for targeting decisions, as a safety measure," he says. "After all, people are more flexible and better at adapting to new situations that we may not have programmed for. This is especially important in war, where there is an adversary trying to defeat your systems, deceive them, and hack them."

It is no surprise that South Korea's DoDAAM builds its sentry robots with strict limits on autonomy. Its robots now will not fire until a human confirms the target and gives the command to fire. "Our original version had an automatic firing system," a company engineer told the BBC last year. "But all of our customers asked for safeguards to be built in... They were worried the gun might make a mistake."

Other experts believe that the only way to avoid deadly mistakes by autonomous weapons, especially those involving civilians, is to program them appropriately. "If we are foolish enough to keep killing each other on the battlefield, and if more and more authority is going to be handed to machines, can we at least make sure they do their job ethically?" says Ronald Arkin, a computer scientist at the Georgia Institute of Technology.

Arkin believes that autonomous weapons, like soldiers, must follow the rules of engagement and the laws of war, including international humanitarian law, which protects civilians and limits the amount of force and the types of weapons that may be used. This means we have to build certain moral reasoning into their programs so that they can understand different situations and tell right from wrong. Their software needs some kind of ethical compass.

For the past ten years, Arkin has been working on such a compass. Using mathematical and logical tools from the field of machine ethics, he translates the laws of war and the rules of engagement into variables and operations a computer can understand. For example, one variable holds the ethical governor's confidence that a target is hostile. Another, a Boolean, indicates whether lethal force is permitted or forbidden. In the end, Arkin arrived at a set of algorithms and, using computer simulations and simplified combat scenarios, such as a drone attacking a group of people in an open field, was able to test his methodology.

Arkin admits that the project, funded by the US military, has not yet reached the stage of a finished system and was built only as a proof of concept. But in his view, the results show that robots can follow the laws of war even better than people. For example, robots can act with more restraint than humans in life-threatening situations, firing only in response. Or, when civilians approach, they can cease fire altogether, even at the cost of being destroyed. Robots do not suffer from stress, frustration, anger, or fear, all of which can impair human decision-making. So, in theory, robots could outperform human soldiers, who often, and sometimes inevitably, make mistakes in the heat of battle.

"Ultimately, we could save human lives, especially the lives of innocent people caught on the battlefield," says Arkin. "And if robots can manage this, there is a moral imperative to use them."
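The idea of encoding rules as variables can be illustrated with a deliberately simplified sketch. It is not Arkin's ethical governor, whose actual rules and thresholds the article does not give; the field names, the 0.95 confidence threshold, and the specific constraints (borrowed from the behaviors described in this article: fire only in response, cease fire when civilians approach) are hypothetical. It shows only the structure: a confidence value and a set of conditions combine into one Boolean, and any single failing constraint vetoes lethal force.

```python
from dataclasses import dataclass

# Hypothetical minimum confidence that a target is hostile. Illustrative only.
HOSTILE_CONFIDENCE_THRESHOLD = 0.95


@dataclass
class Situation:
    hostile_confidence: float  # 0.0 to 1.0: confidence the target is an enemy
    civilians_nearby: bool     # non-combatants detected near the target
    under_fire: bool           # the system is currently being attacked


def lethal_force_permitted(s: Situation) -> bool:
    """Return the Boolean 'lethal force permitted' flag.

    Every check is a veto: the function returns True only if no
    hypothetical constraint forbids firing."""
    if not 0.0 <= s.hostile_confidence <= 1.0:
        raise ValueError("hostile_confidence must be between 0 and 1")
    if s.hostile_confidence < HOSTILE_CONFIDENCE_THRESHOLD:
        return False  # not confident enough that the target is hostile
    if s.civilians_nearby:
        return False  # cease fire when civilians approach
    if not s.under_fire:
        return False  # restraint: fire only in response
    return True


print(lethal_force_permitted(Situation(0.99, civilians_nearby=False, under_fire=True)))
print(lethal_force_permitted(Situation(0.99, civilians_nearby=True, under_fire=True)))
```

The veto structure reflects the conservative behavior Arkin describes: adding a rule can only make the system fire less often, never more.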

Of course, this view is far from universally accepted. Critics of autonomous weapons insist that only a preemptive ban makes sense, because such weapons are emerging out of public view. "There is no single weapon system we can point to and say: aha, here is a killer robot," says Mary Wareham, advocacy director at Human Rights Watch and coordinator of the Campaign to Stop Killer Robots, a coalition of humanitarian organizations. "We are talking about a variety of weapon systems that work in different ways. But we are concerned about one common feature: the lack of human control over the functions of target selection and attack."

At the UN, discussions of autonomous robots capable of killing have been going on for five years, but countries have not reached agreement. In 2013, Christof Heyns, the UN special rapporteur on extrajudicial, summary or arbitrary executions, wrote an influential report noting that countries had a rare opportunity to discuss the risks of autonomous weapons before they were even developed. Now, having taken part in several UN meetings, Heyns says that "looking back, I am somewhat encouraged, but looking ahead, it seems we will have problems unless we start moving faster."

This December, a conference reviewing five years of work will be held under the UN Convention on Certain Conventional Weapons, and autonomous robots are on the agenda. But a ban is unlikely to be adopted. Such a decision requires the unanimous agreement of all countries, and they fundamentally disagree about what to do with the wide range of autonomous weapons that will appear in the future.

In the end, the debate about killer robots comes down to a debate about people. Autonomous weapons, at least at first, will be like any technology: they can be introduced carefully and prudently, or chaotically and catastrophically. And people will have to bear the responsibility. So the question "Are autonomous combat robots a good idea?" is not quite the right one. A better question is: "Do we trust ourselves enough to entrust our lives to robots?"

Source: https://habr.com/ru/post/395139/
