Would you entrust your body to a robot surgeon?

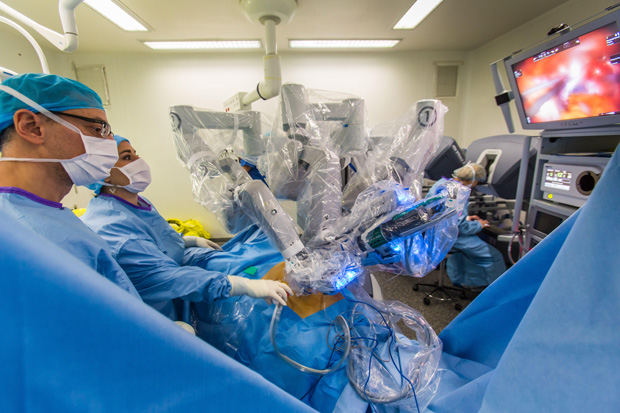

In the gleaming red cave of the patient's abdominal cavity, surgeon Michael Stifelman gently guides two robotic arms to tie a knot in the thread. With a third arm he drives a needle, stitching up a hole in the patient's kidney where a tumor used to be. Another manipulator holds the endoscope, which sends video to Stifelman's display. Each robotic arm enters the body through a tiny incision 5 millimeters wide.

Watching this intricate procedure means marveling at what a human and a robot can achieve working in tandem. Stifelman, director of the Robotic Surgery Center at New York University's Langone Medical Center, has already performed several thousand robot-assisted operations. He steers the manipulators from a control console: when he turns his wrist and pinches his fingers together, the instrument inside the body repeats the same movements, only on a much smaller scale. “The robot is one with me,” says Stifelman, as his mechanical appendages tie another knot.

But some roboticists, watching these deft movements, would see not a miracle but wasted potential. Stifelman is a trained expert with valuable skills and seasoned judgment, yet he spends his precious time stitching, the finishing work that follows the main surgery. If the robot could carry out this monotonous procedure by itself, the surgeon would be freed for more important things.

Today's robots augment the surgeon's abilities: they filter out hand tremors and allow movements that even the best doctor could not make in laparoscopy, with its long and delicate instruments (nicknamed “chopsticks”). Yet the robot remains just a more sophisticated tool under direct human control. Dennis Fowler, executive vice president of the robotic surgery company Titan Medical, believes that medicine would be better served if robots became autonomous, making their own decisions and carrying out assigned tasks independently. “This technological improvement should add reliability and reduce the number of errors that occur because of people,” says Fowler, who worked as a surgeon for 32 years before moving into the industry.

Giving robots such a promotion is not a very distant prospect. Most of the necessary technologies are under active development in academic and industrial laboratories. Working for now with rubber models of people, experimental robots sew up and clean wounds and cut out tumors. Some contests between them and humans have already shown that robots work more accurately and more efficiently. Last month, a robotic system at a Washington hospital demonstrated this, stitching up real tissue taken from a pig's intestines. The researchers compared the work of the autonomous bot with that of a surgeon and found that the bot's stitches were more evenly spaced and closed the incision more tightly.

Although these systems are nowhere near ready for use on humans, they may represent the future of surgery. The topic is a touchy one, since it implies that surgeons could lose work. But the operating room is like the assembly line: if automation improves the results, you won't stop it.

Hutan Ashrafian, a bariatric surgeon and lecturer at Imperial College London, studies the outcomes of robotic surgery and often writes about the potential of AI in health care. “I think about it all the time,” he admits. He believes that the advent of robotic surgeons is inevitable, though he chooses his words carefully. In the foreseeable future, Ashrafian expects robotic surgeons to perform simple tasks as instructed by a human surgeon. “Our goal is to improve the results of operations. If using a robot leads to saving lives and reducing risks, then we will be obliged to use these devices.”

Ashrafian also looks further ahead: in his words, it is quite possible that medicine will see next-generation robots that make their own decisions with the help of AI. Such machines would be able not only to perform routine tasks but also to carry out entire operations. That seems unlikely today, Ashrafian concedes, but the path of technical innovation can lead us there. “This is a step-by-step process, and each of the steps is not that big,” he says. “But a surgeon from 1960 would not have recognized anything in my current operating room. And in 50 years, in my opinion, the world of surgery will be different.”

Robotic surgeons already make decisions and act independently more often than you might imagine. During vision correction, an automated system cuts a flap in the cornea and fires a series of laser pulses to reshape the inner layer. In knee replacements, robots cut bone with more precision than human surgeons. In expensive hair transplant clinics, a robot identifies healthy hair follicles on the head, harvests them, and prepares the bald area for implants by making small holes in the scalp in a set pattern, sparing the doctor many hours of routine work.

Soft tissue surgery in the chest, abdomen, and pelvic area is still a problem. Human anatomy varies from person to person, so an autonomous robot will need a very good understanding of soft internal tissues and winding blood vessels. Moreover, the patient's internal organs can shift during an operation, so the robot will have to adjust its plan constantly.

It must also behave reliably in critical situations. The challenge was on display at the New York University surgery center when Stifelman released an arterial clamp that had blocked the blood flow to the kidney during tumor removal. “Now we need to make sure that we are not bleeding,” he says, steering the endoscope around the organ. Most of the sutures look good, but suddenly a red fountain appears on the screen. “Wow, have you seen? Let's get another thread,” he says to the assistant. Having stopped the bleeding with a quick stitch, Stifelman can complete the operation.

For him, this is part of a day's work, but how would a robotic surgeon cope with such an unexpected situation? Its computer vision system would first have to recognize the fountain of blood as a serious problem. Then its decision-making software would have to work out how to repair the breach. Next, the instruments, including a needle and thread, would go into action. Finally, an assessment program would evaluate the result and determine whether further action is needed. Getting a robot to perform each of these steps perfectly (sensing, deciding, acting, and evaluating) is a big and difficult engineering task.
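The four steps described above (sensing, deciding, acting, evaluating) can be sketched as a simple control loop. This is only an illustration on a simulated surgical field, not the software of any real system: `Observation`, `SimulatedField`, `control_loop`, and the `max_attempts` limit are all hypothetical names and assumptions introduced here.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Observation:
    """What a hypothetical vision system reports about the surgical field."""
    bleeding_detected: bool
    location_mm: Tuple[float, float]


class SimulatedField:
    """Toy stand-in for the patient: bleeding stops after N stitches."""

    def __init__(self, stitches_needed: int) -> None:
        self.remaining = stitches_needed

    def sense(self) -> Observation:
        return Observation(self.remaining > 0, (12.0, 34.0))

    def decide(self, obs: Observation) -> str:
        return f"stitch at {obs.location_mm}"

    def act(self, plan: str) -> None:
        self.remaining -= 1  # each stitch closes part of the breach

    def evaluate(self) -> bool:
        return self.remaining == 0


def control_loop(field: SimulatedField, max_attempts: int = 3) -> List[str]:
    """Run sense -> decide -> act -> evaluate until the repair is verified.

    If the repair cannot be verified within max_attempts, the loop hands
    control back to the human surgeon (an assumed safety policy).
    """
    log: List[str] = []
    for attempt in range(1, max_attempts + 1):
        obs = field.sense()
        if not obs.bleeding_detected:
            log.append("no bleeding: done")
            return log
        plan = field.decide(obs)
        field.act(plan)
        log.append(f"attempt {attempt}: {plan}")
        if field.evaluate():
            log.append("repair verified")
            return log
    log.append("escalate to human surgeon")
    return log


if __name__ == "__main__":
    for line in control_loop(SimulatedField(stitches_needed=2)):
        print(line)
```

The hard part in practice is everything this toy hides: each of the four methods stands in for a perception, planning, or control problem that is still the subject of research.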

Stifelman, who now works at Hackensack University Medical Center in New Jersey, performed his operations at New York University with a da Vinci robot. The machine, made by Intuitive Surgical, costs up to $2.5 million and is the only robotic system approved in the US for soft tissue surgery. Da Vinci dominates the market, with 3,600 of the devices already operating around the world. But its path to success has not always been smooth. Patients have sued over problems on the operating table, and one study claimed that such incidents were not always reported. Some surgeons question whether robotic surgery offers real advantages over laparoscopic operations, citing conflicting research on outcomes in various cases. Despite this controversy, many hospitals have adopted Intuitive's technology, and many patients seek it out.

Da Vinci is completely controlled by the surgeon: its plastic and metal manipulators stay motionless until the doctor takes hold of the controls on the console. Intuitive wants to keep it that way for now, explains Simon DiMaio, who heads research and development for the company's advanced systems. But, he adds, robotics experts are already bringing closer a future in which surgeons will operate with “an increasing level of assistance and guidance from the computer.”

DiMaio compares research in this area with the early development of self-driving cars. “The first steps are recognizing markings, obstacles, cars and pedestrians,” he notes. Then engineers built cars that help drivers stay aware of their surroundings: a car that knows where neighboring vehicles are can, for example, warn the driver against an ill-advised lane change. For robotic surgeons to offer the same kind of feedback, such as warning a surgeon whose instruments have strayed from the typical path, they need to become much smarter. Fortunately, many machines are already in training.

The robot surgeon in the corner of a laboratory at the University of California at Berkeley cannot yet tie knots, but it stitches well. Working on simulated flesh, one manipulator pushes a curved yellow needle through the edges of the “wound.” The second pulls the needle out of the “flesh” to tighten the thread. No human hands guide them, and no human brain computes their path. The autonomous robot then passes the needle back, and the cycle starts again.

While the robot keeps working, Ken Goldberg dashes around the lab, looking like a professor from the Muppets. Goldberg, head of the Berkeley laboratory for automation and engineering research and a professor in four fields, including electrical engineering and art, has a reputation for getting amazing results from robots. On the laboratory wall hangs a portrait painted by one of his early robots, his face and torso rendered in awkward blue and red strokes.

So far, the “flesh” the robot sews is just pink rubber. But the technology is already quite real. In 2012, Intuitive began providing used da Vinci systems to university researchers around the world. So once Goldberg trains his da Vinci to perform operations independently, the same algorithms could in theory control real systems during operations on live patients. “We are still driving around the test site,” says Goldberg, “but one day we will go on the road.” He believes that the simplest operations will be automated within the next 10 years.

To perform autonomous stitching, Goldberg's da Vinci calculates the optimal entry and exit points for each stitch and the needle's trajectory, tracking its movement with sensors and cameras. The needle is painted bright yellow so that the computer can recognize it more easily. Still, the task remains difficult. According to the published results, the robot completed the four-stitch procedure only 50% of the time; usually the second manipulator failed to grab the needle or tangled it in the thread.

The professor stresses that even if robots learn to perform simple operations well, he still considers it necessary for human surgeons to be present as supervisors. He envisions “autonomy under supervision.” “The surgeon is still responsible for the operation,” he says, “but the low-level aspects of the procedure are performed by the robot.” If robots do the monotonous work with accuracy and consistent quality (“compare the work of a sewing machine with a hand-sewn seam”), combining machine and human could create a super-surgeon.

Soon, according to Goldberg, robots will reach the quality that hospitals require, because they are beginning to learn on their own. In a recent learning-by-observation experiment, da Vinci recorded data from eight surgeons of varying skill levels as each made four stitches with the manipulators. The learning algorithm extracted visual and kinematic data and divided the operation into steps (needle placement, pushing the needle through, and others) to be performed in sequence. In this way, da Vinci could in principle learn any surgical procedure.

Goldberg is confident that learning by observation is the only effective approach. “We think that machine learning is the most interesting topic now,” he says, “because the creation of algorithms does not scale up from bottom to top.” Of course, there are a great many tasks, and the complex ones will require processing data from thousands of operations. But there is no shortage of data: each year, surgeons perform 500,000 operations using da Vinci. What if they shared the data from all those operations (while preserving patient privacy) so that an AI could learn from them? Each time a surgeon used a robotic assistant to successfully stitch up a kidney, for example, the AI could refine its understanding of the procedure. “The system could extract data, improve algorithms, correct the problem,” says Goldberg. “We would all get smarter.”

Automation in the operating room does not have to involve a needle, a scalpel, or anything else sharp and dangerous. Two companies that are bringing robotic surgeons to market have developed cameras that move automatically, giving the surgeon a convenient view of the operation as if reading his mind. Today, surgeons using the da Vinci machine still have to stop to move the camera or ask an assistant to do it.

TransEnterix, based in Morrisville, hopes to compete with da Vinci with its Alf-X system, already available in Europe. The system has eye tracking built into the control console and uses it to steer the endoscope, a thin fiber-optic camera inserted into the patient's body. As the surgeon looks at the image on the screen, Alf-X moves the camera so that whatever interests the surgeon stays in the center of the screen. “The eyes of the surgeon are becoming a computer mouse,” says Anthony Fernando, director of technology for TransEnterix.
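The idea of a camera that keeps the surgeon's point of interest centered can be sketched in a few lines. This is not how Alf-X is implemented, which the article does not describe; it is a minimal illustration that assumes a simple proportional controller, and the function name `camera_step`, the `gain`, and the `dead_zone` are hypothetical choices.

```python
from typing import Tuple


def camera_step(
    gaze_xy: Tuple[float, float],
    frame_size: Tuple[int, int],
    gain: float = 0.2,
    dead_zone: float = 0.05,
) -> Tuple[float, float]:
    """Return a (pan, tilt) command that nudges the gaze point toward center.

    gaze_xy    -- where the surgeon is looking, in screen pixels
    frame_size -- (width, height) of the displayed image in pixels
    gain       -- fraction of the offset corrected per step (assumed value)
    dead_zone  -- offsets smaller than this (as a fraction of the frame)
                  are ignored so the camera does not jitter
    """
    width, height = frame_size
    # Offset of the gaze point from the screen center, in the range [-0.5, 0.5].
    error_x = gaze_xy[0] / width - 0.5
    error_y = gaze_xy[1] / height - 0.5
    if abs(error_x) < dead_zone and abs(error_y) < dead_zone:
        return (0.0, 0.0)  # already close enough to center: hold still
    return (gain * error_x, gain * error_y)


if __name__ == "__main__":
    # Surgeon looks at the right edge of a 1920x1080 image: pan right.
    print(camera_step((1920, 540), (1920, 1080)))
```

The dead zone matters for the same reason it does with a computer mouse: eyes are never perfectly still, and a camera that chased every flicker would be unusable.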

The Sport Surgical System from Toronto-based Titan Medical takes a different approach to automating the view. It inserts small cameras into the body cavity, and they rotate and move according to the position of the surgeon's instruments. This kind of automation is a convenient first step toward robotic surgeons, says Fowler, the Titan Medical executive. And regulators will most likely not object to it. Fowler is sure that when the company applies for approval of its technology in Europe this year, and in the US next year, the camera system will not raise any questions. “But if automation means that the computer controls the tool that makes the cut, then such a system will require additional testing,” he says.

Intuitive is bracing for challenges from major rivals. Medtronic, one of the world's largest manufacturers of medical devices, is developing a robotic surgery system that it has not yet said anything about. Last year Google teamed up with Johnson & Johnson to launch Verb Surgical, a company promising “advanced robot capabilities,” according to a press release. On the strength of such activity, analysts predict a boom in the market. A recent report by WinterGreen Research says that the global market for abdominal surgery robots (da Vinci and its rivals) will grow from $2.2 billion in 2014 to $10.5 billion by 2021.
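To put the forecast in perspective, the two figures can be converted into an implied annual growth rate. The sketch below assumes steady compound growth over the seven years from 2014 to 2021, which is a simplification of this calculation and not something the report itself is quoted as saying; `implied_annual_growth` is a hypothetical helper name.

```python
def implied_annual_growth(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate that turns start_value into end_value."""
    return (end_value / start_value) ** (1 / years) - 1


if __name__ == "__main__":
    # Figures from the forecast, in billions of dollars.
    rate = implied_annual_growth(2.2, 10.5, 2021 - 2014)
    print(f"Implied growth: about {rate:.0%} per year")
```

Under that assumption the market would have to grow by roughly a quarter every year to reach the projected size.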

If the forecast holds and robotic surgeons become commonplace in operating rooms, we will naturally trust them with more and more complex tasks. And if they show that they can be relied on, the role of human surgeons may change a great deal. Someday surgeons may meet with patients and plan a course of treatment, and then simply watch as robots carry out their commands.

Hutan Ashrafian, the bariatric surgeon, says that the professional community will readily accept these changes. The history of medicine shows that surgeons “always enthusiastically improve their effectiveness,” he says, adding that they are ready to adopt any useful tool. “No one operates with a scalpel anymore,” Ashrafian says wryly. If autonomous robots learn to perform surgical tasks well enough, the same principle will apply. In pursuit of excellence, surgeons may one day even let surgery slip out of their own hands.

Source: https://habr.com/ru/post/394805/
