Crime Prediction Program accused of racism

For several years, law enforcement agencies in the United States have used special programs that analyze crime statistics and issue recommendations. In California, for example, one such program calculates the best patrol routes, taking into account the probability of crime in each area of the city at a given time of day. In 2014, Chicago police compiled a watch list with the names of the 400 people most likely to commit crimes in the future. Human rights advocates are concerned about this algorithm for predicting future offenders and victims, even though it has shown surprising accuracy: more than 70% of shooting victims in Chicago in 2016 were on the list the program had prepared in advance.

Recently, such analytical programs have also begun to be used in the courts. A judge must decide whether to punish an offender with the minimum or the maximum severity the law allows for a particular crime. Here the program calculates a so-called "risk assessment score" from the available data. This score estimates the probability that the person will reoffend and, accordingly, affects the severity of the sentence.

However, a careful analysis of the program's results by ProPublica revealed a strange tendency: the program gives higher risk scores to African Americans than to white defendants. In other words, the algorithm exhibits racial discrimination, even though it was introduced into the judicial system precisely to eliminate such discrimination.


The risk score matters a great deal. It affects the severity of the sentence, the amount of bail required for the suspect's release, the choice of preventive measures, and much more.

ProPublica analyzed more than 7,000 cases in Broward County, Florida, where one of the most popular programs of this kind, developed by Northpointe, computes a risk score for each suspect.

It turned out that only 20% of the suspects the program rated as high risk actually committed a crime within two years of the assessment. Even when minor violations, such as leaving the perimeter of house arrest, are counted, "the accuracy of the program is only slightly better than a coin toss."

But the worst part is that the program shows racial bias. Risk scores for African Americans are much higher than for white defendants, and Black defendants are wrongly predicted to reoffend about twice as often as white defendants.
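The two figures above measure different things. The "20%" figure is the share of people flagged as high risk who actually reoffended (precision), while "wrongly predicted to reoffend" refers to the false positive rate: the share of people who did not reoffend but were still flagged as high risk. The minimal Python sketch below computes both metrics per group. The `Record` type, the function names, and the group counts are hypothetical toy values chosen for illustration; they are not ProPublica's data or method.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Record:
    group: str        # hypothetical group label
    high_risk: bool   # the program flagged this person as high risk
    reoffended: bool  # the person actually reoffended within two years


def precision(records):
    """Share of people flagged as high risk who actually reoffended."""
    flagged = [r for r in records if r.high_risk]
    return sum(r.reoffended for r in flagged) / len(flagged) if flagged else 0.0


def false_positive_rate(records):
    """Share of people who did NOT reoffend but were still flagged as high risk."""
    negatives = [r for r in records if not r.reoffended]
    return sum(r.high_risk for r in negatives) / len(negatives) if negatives else 0.0


def make_group(name, tp, fp, tn, fn):
    """Build toy records from confusion-matrix counts (true/false positives/negatives)."""
    return ([Record(name, True, True)] * tp + [Record(name, True, False)] * fp
            + [Record(name, False, False)] * tn + [Record(name, False, True)] * fn)


def metrics_by_group(records):
    """Map each group to (precision, false positive rate)."""
    result = {}
    for g in sorted({r.group for r in records}):
        subset = [r for r in records if r.group == g]
        result[g] = (precision(subset), false_positive_rate(subset))
    return result


# Hypothetical toy counts, NOT ProPublica's data.
records = make_group("A", tp=6, fp=4, tn=6, fn=4) + make_group("B", tp=3, fp=2, tn=8, fn=7)

for group, (prec, fpr) in metrics_by_group(records).items():
    print(f"group {group}: precision={prec:.2f}, false positive rate={fpr:.2f}")
```

In this toy data both groups have the same precision (0.60), yet group A's false positive rate (0.40) is twice group B's (0.20). A score can therefore look equally "accurate" for two groups while making mistakes against one of them far more often.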

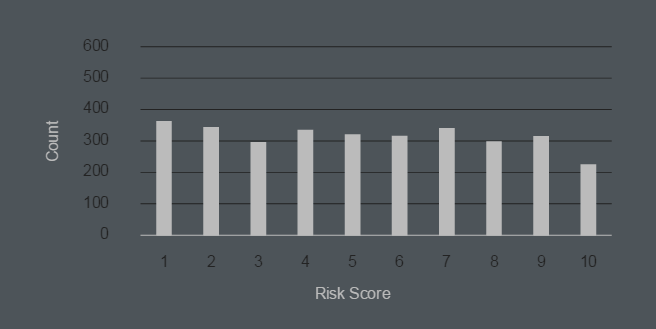

Risk scores for African American defendants (1 = minimum, 10 = maximum)

Risk scores for white defendants (1 = minimum, 10 = maximum)

The researchers conclude that the program violates the law by taking skin color into account as one of the parameters for predicting the likelihood of a future crime.

Northpointe insists that a suspect's race is not taken into account in the analysis in any way. However, the program does consider other factors that correlate strongly with race: education level, employment status, the criminal history of relatives, illegal drug use by friends and acquaintances, and so on. The weight of each factor in the final score is secret, because the algorithm is Northpointe's proprietary property.
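Northpointe's claim and the researchers' conclusion can both be true at once: a score that never receives race as an input can still differ by race if its inputs are correlated with race. The minimal Python sketch below illustrates this proxy effect. The two features, their weights, and the population counts are hypothetical, since the real factors' weights are not public. Each person carries a group label only so the results can be grouped afterwards; the label is never passed to `risk_score`.

```python
from statistics import mean

# Hypothetical factors and weights: Northpointe's real weights are secret.
WEIGHTS = {"unemployed": 3.0, "relative_convicted": 2.0}
BASE_SCORE = 1.0


def risk_score(features):
    """Compute a score from non-race features only; race is never an input."""
    return BASE_SCORE + sum(WEIGHTS[name] * value for name, value in features.items())


def make_population(group, n, unemployed, relative_convicted):
    """Build n toy people; the first `unemployed` / `relative_convicted` have the trait."""
    return [
        (group, {"unemployed": int(i < unemployed),
                 "relative_convicted": int(i < relative_convicted)})
        for i in range(n)
    ]


def mean_score_by_group(population):
    """Average score per group; the group label is used only for reporting."""
    groups = sorted({g for g, _ in population})
    return {g: mean([risk_score(f) for grp, f in population if grp == g]) for g in groups}


# Hypothetical populations in which the proxy traits are more common in group A.
population = (make_population("A", 10, unemployed=6, relative_convicted=5)
              + make_population("B", 10, unemployed=2, relative_convicted=2))

print(mean_score_by_group(population))
```

Here group A ends up with a mean score of 3.8 versus 2.0 for group B, purely because the hypothetical proxy traits are more common in group A, even though `risk_score` never sees the group label.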

Source: https://habr.com/ru/post/394313/
