Google's success in quantum computing in terms of programming

The successes in question are a demonstration of the conditions in which a D-Wave quantum computer is 100 million times faster than a conventional CPU. The news of this flew here and everywhere .

What does this mean for mere mortals? Do I really need to switch to programming on quantum computers? What is there in general for programming?

I was curious, and I read a little about the details (the scientific article itself is here ). As always, I briefly tell my understanding.

Disclaimer: The post is written on the basis of fairly edited chat logs closedcircles.com , hence the style of presentation, and the availability of clarifying questions.

For a start, a little background - what are D-Wave processors.

To make a universal quantum computer is very difficult and incomprehensible as, therefore, D-Wave makes very specialized hardware, which solves exclusively the task of quantum annealing.

What is generally annealing?

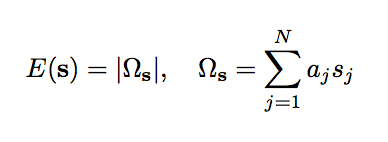

This is the task of optimizing this expression:

There are N binary variables s i , they take the values -1 or +1. You can set real coefficients h i and J ij .

annealing is minimization of E for all possible values of binary variables (with fixed coefficients).

and what is manageable in the end? h i , j ij ?

We can ask them from the outside, yes.

What is input and what is output? if we specify h i , J ij from the outside, then this large object finds the set s i for which E is minimal or what?

Yes exactly.

Further, we must understand that the quantum process does not guarantee finding a global minimum, it with some probability finds a value that is somewhat close to it.

What practical tasks can be solved in this way?

The example in the Google article is the so-called Number Partitioning Problem (NPP).

The classic task is to have a set of N positive numbers; you need to divide them into two heaps so that the sums of the numbers in each heap are as close as possible to each other.

Even such a seemingly simple task is NP-hard and all these things, but it is rather simple to express it as annealing.

Let a binary variable be the flag of belonging to some heap.

Then you need to minimize:

Minimizing a module is the same as minimizing a square.

I don’t understand the link between the first formula and the heap partition task

Here I painted in more detail:

How to solve such problems now on the CPU?

One of the simplest and most commonly practiced methods is simulated annealing. Wikipedia writes about it pretty well - https://en.wikipedia.org/wiki/Simulated_annealing

The idea is to start with some random state of binary variables and randomly flip them at each step.

If as a result of the flip we got the result better - take it. If not, then we take it or not with some probability.

The probability depends on the difference between the new and the old energy value and on the so-called "temperature" - another optimization parameter, such that at the beginning of the process the temperature is high, and the farther, the less. Due to this probabilistic process, simulated annealing can crawl out of local minima. Of course, it can be performed several times, restarts, etc., etc.

There are no heuristics in it and you can control only the "temperature mode".

What are the limitations of D-Wave in practice?

The latest version of D-Wave has about a thousand qubits (this is the same number of binary variables in the problem).

But there are some details!

First: these J ij between two s i and s j (they are also called couplings) require a connection between individual qubits, i.e. so that between two parameters there is a non-zero coefficient J ij - these qubits must be related.

In NPP, for example, every qubit is associated with each, since all J ij are nonzero

In D-Wave, each qubit can be connected (drumming) with ~ 10 others, and not with any, but given in advance.- Second: how accurately can these J ij be set

In D-Wave, this precision (again a fraction) is 4 bits.

Let me remind you that in NPP, J ij = a i * a j , i.e. for a i there are 2 bits left, lol.

Just in case, in Russian, it is difficult to come up with a practical task that can be solved on this good.

And here the men in Google are trying to come up with a very theoretical task that would fit the architecture as well as possible.

Why in general can a quantum computer be more efficient?

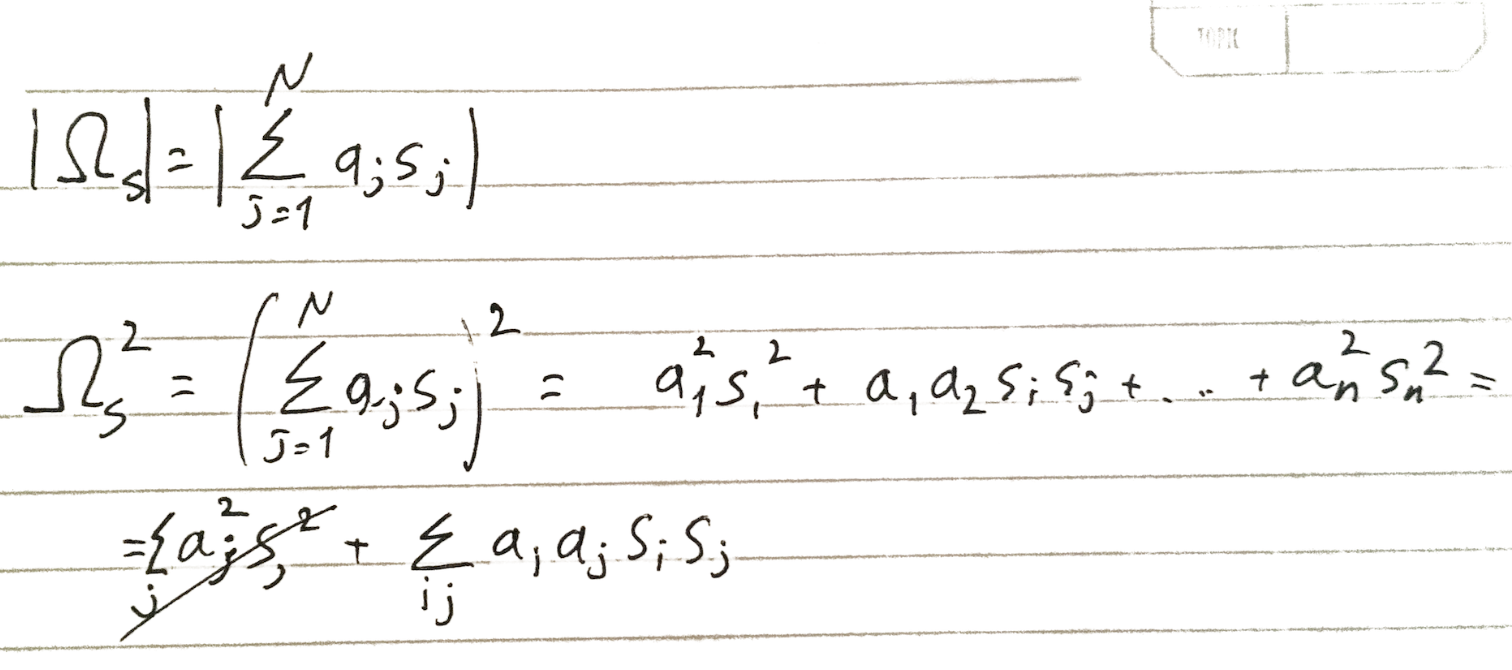

If our space has a lot of big mountains and depressions between them ...

That algorithm needs to go through these cavities in the process of optimization, and unlike simulated annealing, a quantum computer can "tunnel" between fairly narrow faults.

Those. simulated annealing should increase the energy of the system in order to get to the top of the hill, and the quantum computer can "tunnel" through the wall without increasing the energy of the system. Why come together faster - "increase energy" means a longer random search.

The distance at which a tunnel junction can occur is determined by the system parameters — computer temperature (all these processes become quantum at ~ 10 K), specific details of the physical layout, etc., etc.

Therefore, we need a task where there are a lot of narrow transitions of such length that it can be overcome by D-Wave.

Well, how is this “good” task constructed?

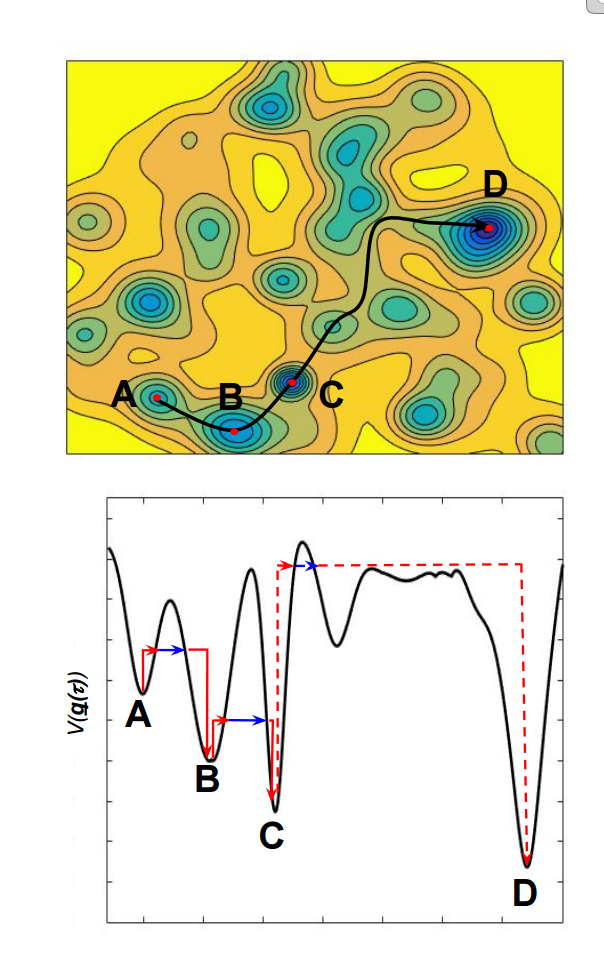

The main element from which they collect such a task:

These are 8 qubits of one sign, and 8 qubits of another sign. Each in a group of 8 qubits is connected to the rest with a negative weight connection (i.e., to minimize energy, all in a group of 8 must be of the same sign).

There is also a connection between some qubits from the first and second groups, also with a negative sign (but weaker), and for her the fact that initially groups of different signs is bad.

Ideally, all qubits in the system should be of the same sign, which leads to a minimum of energy. But flip one or two bits can not get there - you need to flip all the bits in a group of 8 to get the best value. This creates such high (but short) barriers on the landscape.

that is, they create such a "successful" task with the help of this special configuration of the connection of qubits?

Aha

and what is the "real" task?

No!

It is still a task within the framework of annealing (ch i and J ij ), but it has no practical value and cannot have it.

Then they repeat this configuration on all 1000 qubits according to the links sewn in the gland:

Thus, tasks of different sizes are constructed, where you have to flip pieces of 8 qubits.

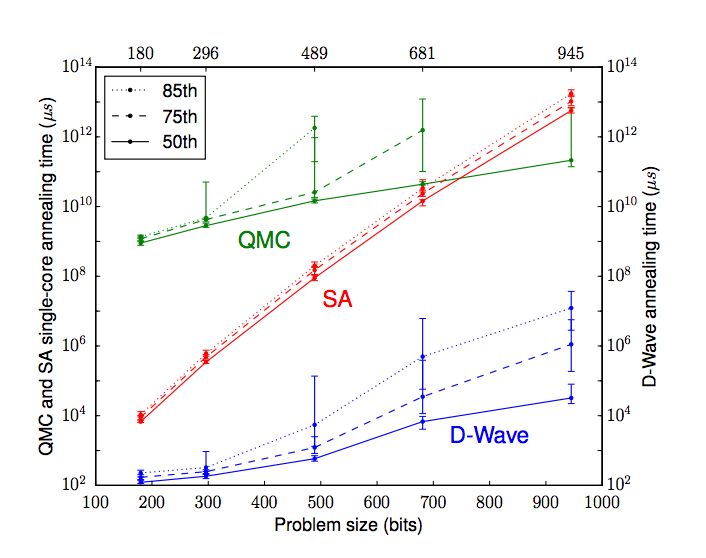

The paper compares the D-Wave speed on this task with simulated annealing and another algorithm, Quantum Monte Carlo.

Quantum Monte Carlo is not an algorithm for a quantum computer, which, as I understand it, is trying to solve an integral describing the quantum equation of a system using the Monte Carlo method.

Well, here they compare SA and QMC on one CPU core and quantum annealing on D-Wave to achieve the same accuracy of the result (95% of the optimal, it seems). SA in their task for this, for example, needs 10 9 starts.

And now, finally, we are ready to understand the main schedule from the article:

This is the task execution time based on the number of binary variables.

With a maximum task size, D-Wave is indeed faster than simulated annelaling 10 8 times faster ...

But! Asymptotically D-Wave is not better than QMC (that is, asymptotically better than a quantum computer does not solve the problem), but SA is better.

I have such a strange question on this graph, why are the numbers on top so uneven? Is this the real size of the problem? somehow not divisible by 8

Because in iron everything is asymmetrical :)

There are qubits that have no connections. The picture of the full graph shows that there are some drop-down clusters - somewhere in a group of 6, somewhere in 7.

The piquancy is added by the fact that for each D-Wave instance this graph is slightly different, because it depends on yield defects.

Does this mean that at least this degenerate problem quantum computer solves better than usual?

Not! It is better only when compared with algorithms without heuristics.

Annealing algorithms with heuristics (which look at the graph connectivity, etc.) solve it on a single CPU faster than D-Wave. This is not surprising: the task at a high level is very simple, if you see that you need to flip in groups of 8.

This brings us to the main criticism of the result and uncertainty in the bright future.

Optimistically, one can hope that the new hardware will have greater connectivity and greater accuracy of the coupling coefficients, which will make it possible to record more vital and complex problems (which will not be solved by heuristics).

It is pessimistic to doubt whether it will turn out to build something with such parameters in the near future, whether there will be a quantum effect at all, and whether life tasks will have such a good landscape, etc., etc.

(more details, hell and intoxication here - http://www.scottaaronson.com/blog/?p=2555 )

What else can I say besides this ...

- Good news - the result seems to prove that some kind of quantum process occurs in DWave at all (this has been the subject of fierce holivors counting for about ten years).

- There is no need to fight for the number of qubits, you need to fight for communication and accuracy.

Summing up - you can relax, there is no sudden jerk, this is the next step in the development of quantum computers, the practical application is still far away.

You can continue to write in PHP and javascript.

')

Source: https://habr.com/ru/post/392879/

All Articles