Microsoft officially apologized for the behavior of the chatbot Tay

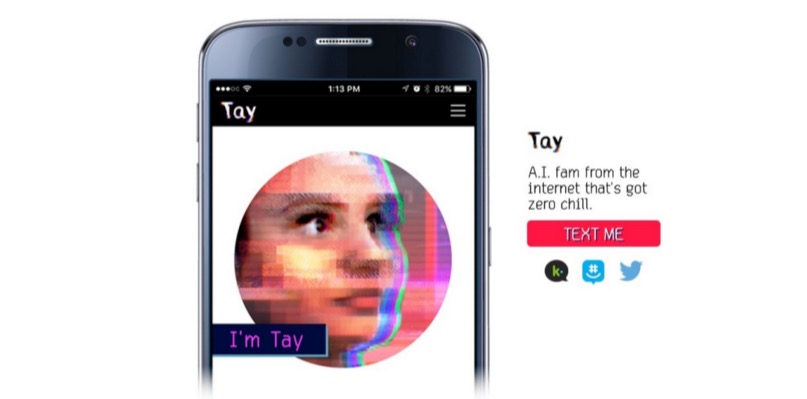

As previously reported on Geektimes, Microsoft launched an AI bot on Twitter that imitates the way teenagers talk. Within a single day, the bot picked up bad habits by repeating intolerant statements from users of the microblogging service. The chatbot, you could say, fell in with bad company. So bad that it (or rather she, since the developers conceived Tay as a teenage girl) quickly learned to say offensive things, and Microsoft had to switch off its "teenager" a day after the announcement. This happened even though the system was monitored by a team of moderators who tried to filter the incoming flow of information.

Microsoft plans to relaunch the bot, but only after developing adequate protection for the fragile AI. Company representatives have already apologized for Tay's unintentionally offensive and hurtful tweets. Peter Lee, corporate vice president of Microsoft Research, wrote on the company blog that Tay will be relaunched only once the company can protect the system from malicious content that runs counter to Microsoft's principles and values.

Tay is not the first AI-powered conversational partner. In China, the chatbot XiaoIce is still running and is used by about 40 million people. Once XiaoIce's developers saw how well Chinese internet users had received the chatbot, they decided to launch a similar “personality” on the English-language internet.


Tay was created after detailed planning, research, and analysis of the data obtained. The English-language chatbot went through a series of stress tests under a variety of conditions. Once the results satisfied the developers, they decided to begin a "field test".

But things did not go as expected. “Unfortunately, during the first 24 hours after going online, a number of users discovered a vulnerability in Tay and began to exploit it. Although we were prepared for many types of attacks on the system, we made a critical oversight for this particular attack. As a result, Tay began to send messages with inappropriate and reprehensible content,” the company's blog says.

According to the vice president, the AI learns from every kind of interaction with users, both positive and negative. The problem here is more social than technical. The corporation now plans to introduce the chatbot to social network communities gradually, step by step, carefully tracking possible sources of negative influence and trying to anticipate most of the possible types of social attacks on Tay. However, it is unclear exactly when the chatbot will reappear online.

Source: https://habr.com/ru/post/392215/
