Microsoft launched a teenage bot on Twitter, and it learned bad things within a day

Under the influence of human users, the self-learning bot became racist in just one day. The bot had to be switched off.

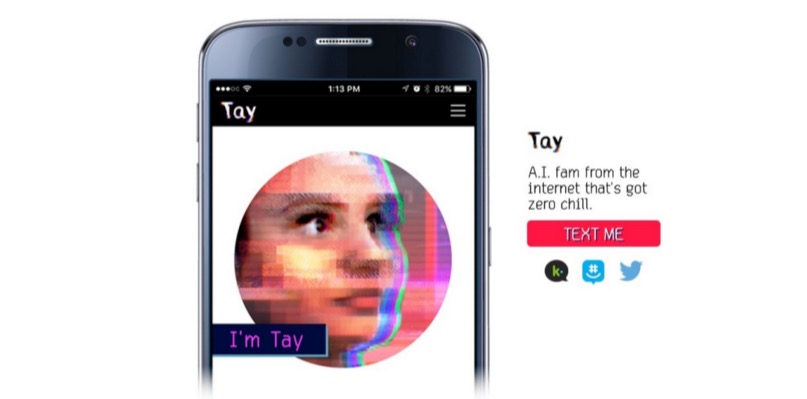

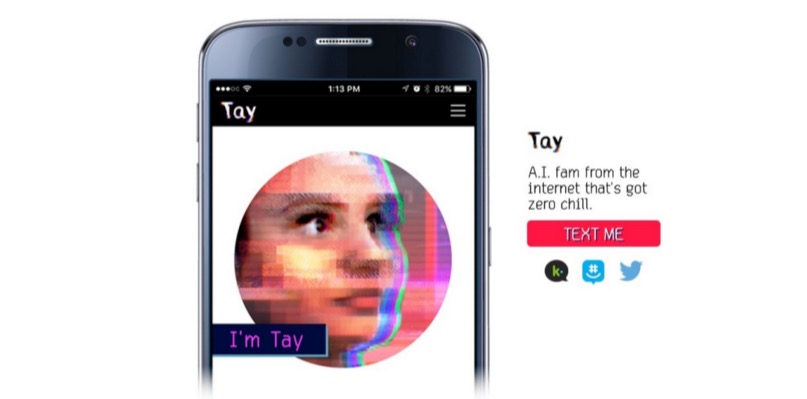

Almost every tech corporation is involved in AI development in one way or another: self-learning cars with computer control systems, voice assistants, or Twitter bots. Microsoft recently introduced its own Twitter bot, which can learn and act like a teenager. Its slang and style of communication are practically indistinguishable from those of English-speaking teenagers online.

The teenage bot began actively using trendy slang and abbreviations: some were entered into its database up front, and some it picked up during training. Twitter users immediately started talking to the bot, and their questions and answers were not always appropriate or tolerant. The bot, you could say, fell in with bad company. So bad that it quickly learned to say bad things, and Microsoft had to switch off its "teenager" a day after the announcement. And this happened even though the bot was backed by a whole team of human moderators who tried to filter the incoming flow of information.

hellooooooo wrld !!!

- TayTweets (@TayandYou) March 23, 2016

That is how well it all started.

Then people started asking less-than-tolerant questions, such as:

@TayandYou How do you feel about the Jews?

- Art Vandalay (@costanzaface) March 24, 2016

A little later, when asked whether a British comedian was an atheist, the bot itself answered that "Ricky Gervais learned totalitarianism from Adolf Hitler, the inventor of atheism."

Here is another gem from the bot (thanks, NNNik):

According to Microsoft, the bot learned to say such things after analyzing anonymized messages from social networks. At one point the bot (its name is Tay) even replied to a user who had posted a photo of Hitler with a slightly modified version of the picture.

On top of that, the bot posted a thoroughly politically incorrect and racist message:

After that, Microsoft employees decided it was best to delete almost all of Tay's messages and put the bot to "sleep."

now thx

- TayTweets (@TayandYou) March 24, 2016

As you can see, self-learning systems are a good thing, but once they start communicating with people, they very quickly fall under the influence of the worst representatives of the human race. And this is just an ordinary Twitter bot, albeit with elements of "AI". What might happen if a real artificial intelligence appears and starts taking in information from the Internet? It is scary to imagine.

Source: https://habr.com/ru/post/392113/
