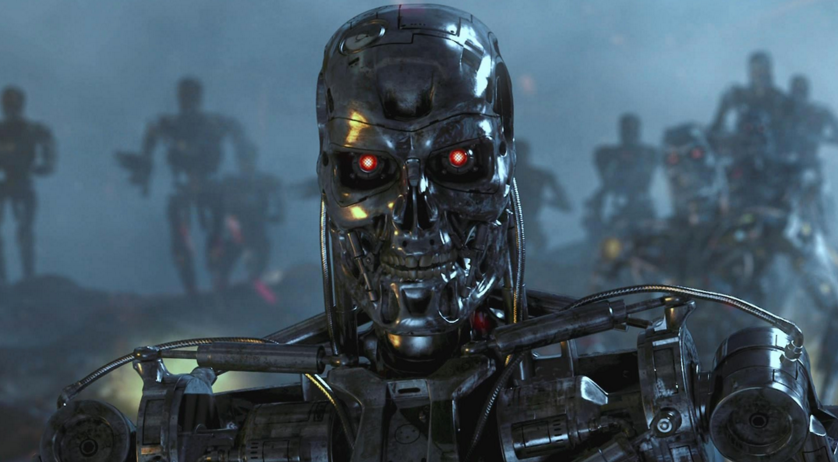

Why autonomous weapons can be dangerous

As if hinting

A former Pentagon official who helped develop a strategy for the use of autonomous weapons warns that under real-world conditions such weapons can become uncontrollable and are vulnerable to hacking, spoofing, and manipulation by the enemy.

Inexpensive sensors and new artificial intelligence technologies have recently become practical enough to be used in the development of autonomous weapon systems. The specter of so-called "killer robots" has already provoked an international protest movement and a debate at the UN about restricting the development and deployment of such systems, reports nytimes.com.

The report was written by Paul Scharre, who leads a program on the future of warfare at the Center for a New American Security (Washington, D.C.). From 2008 to 2013, Scharre worked in the office of the US Secretary of Defense, where he helped develop the strategy for autonomous weapons. In 2012, he was one of the authors of the Department of Defense directive that established military policy on the use of such systems.

In the report "Autonomous weapons and operational risk", published on Monday, Scharre warns of a number of real risks associated with fully autonomous weapon systems. He contrasts fully automated systems, which can kill without human intervention, with weapons that keep humans “in the loop” when selecting and engaging a target (that is, weapons that rely on an operator).

Scharre, who served as a US Army Ranger and took part in operations in Iraq and Afghanistan, focuses on the types of errors that can occur in fully automated systems. To highlight the military consequences, the report lists failures that have occurred in highly automated military and commercial systems.

“Anyone who has ever been frustrated by an automated telephone helpline, by an alarm clock mistakenly set to ‘p.m.’ instead of ‘a.m.’, and so on, has experienced the problem of ‘brittleness’ that is present in automated systems,” Scharre writes.

The core argument of his report is that autonomous weapon systems lack “flexibility”: while carrying out its tasks, such a system can make errors that people would have avoided.

Fully autonomous weapons are beginning to appear in the military arsenals of various states. For example, South Korea has installed an automated turret along the demilitarized zone with North Korea, and Israel operates an unmanned aerial vehicle programmed to attack enemy radar systems when it detects them.

The US military does not use autonomous weapons. However, this year the Department of Defense requested almost $1 billion for production of the Long Range Anti-Ship Missile, built by Lockheed Martin, which is described as “semi-autonomous”. Although an operator first selects the target, the missile, once out of contact with its human controller, automatically identifies and attacks enemy forces.

The report focuses on a range of unexpected behaviors in computer systems, such as faults and bugs, as well as unforeseen interactions with the environment.

“During their first deployment over the Pacific, eight F-22 fighters suffered a Y2K-like total computer failure as they crossed the International Date Line,” the report says. “All the onboard computer systems failed, and the result was almost a catastrophic loss of the aircraft. Although the existence of the International Date Line has long been known, its interaction with the software had not been fully tested.”

As an alternative to fully autonomous weapons, the report advocates what it calls “Centaur Warfighting”. The term “centaur” is used to describe systems that closely integrate the work of people and computers. In chess, for example, teams that combine humans and artificial intelligence programs dominate competitions against teams that use only artificial intelligence.

However, in a telephone interview, Scharre acknowledged that an operator who merely “pushes buttons” is not enough. “Simply having a person ‘in the loop’ is not enough,” he said. “They (the people) cannot just be part of the system’s algorithm. A person must be actively involved in decision making.”

Source: https://habr.com/ru/post/391285/