The revolution in artificial intelligence. Part Two: Immortality or the destruction of life on earth

Translator's note: This is a translation (original article) of the first half of the second article; a translation of the first article is also available. The second article turned out to be too long at about 15k words, so I split it into two parts of about 8k words each, and this is the first of them. The original was written for a wide audience, so many of its terms may be imprecise or not scientific at all. In translating, I tried to keep the spontaneous spirit and humor of the original. Unfortunately, that did not always work. The translator does not agree with everything written in this article, but I have not added factual corrections or my own opinion, not even as notes or comments. The text may contain mistakes and typos; please report them by private message and I will try to fix them as quickly as possible. All links in the text are copied from the original article and lead to English-language resources.


We are facing what is probably one of the most difficult tasks humankind has ever faced. We don't even know how much time we have to solve it, yet the future of our species depends on it. - Nick Bostrom

Welcome to the second part of the article “How is this even possible? I don't even understand why everybody around is talking so much about it.”

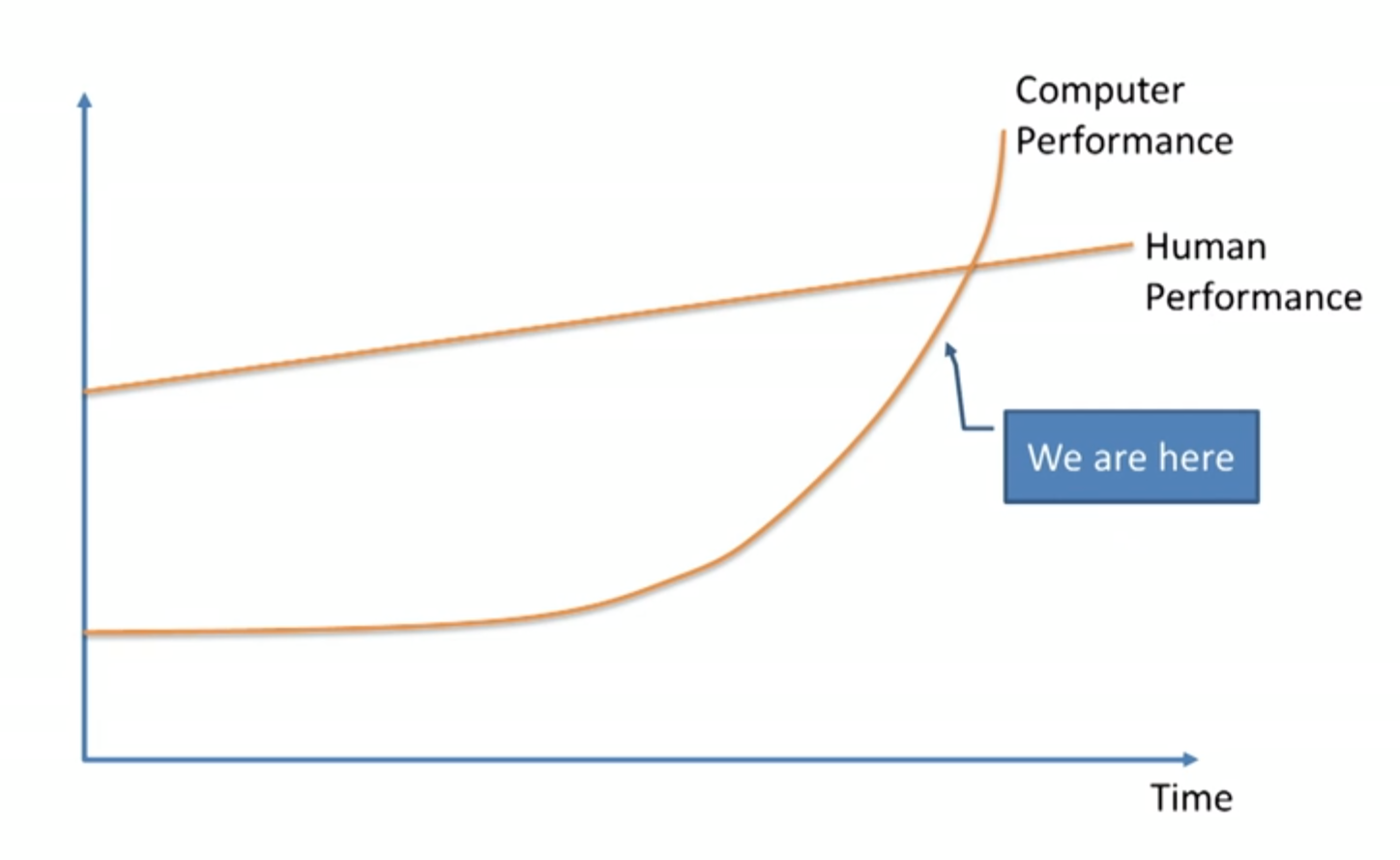

The first part of the article was fairly harmless. We discussed narrow AI, that is, AI that specializes in a single narrow area such as choosing the best route or playing chess, and the fields in which it is already used successfully. We also discussed why it is so hard to get from narrow AI to a strong AI (human-level AI, able to do everything a person can do, and just as well), and why the exponential growth of technology may mean that strong AI is much closer than we think. At the end of the first part I may have scared you by saying that the following picture of AI development probably awaits us in the near future:

The shocking fact is that an artificial Supermind that exceeds human capabilities in every area could be created within our lifetimes. What do you think about that?

Before proceeding, I want to remind you what the Supermind is. There is a huge difference between a fast artificial Supermind and a high-quality artificial Supermind. When we imagine a very smart computer, we often picture something about as intelligent as a person but able to perform far more operations per unit of time. That is, in 5 minutes it could come up with something that would take a person a decade. That is impressive, but the main difference between the artificial Supermind and the human mind will be one of quality. After all, a human is smarter than a chimpanzee not because a human thinks faster, but because the human brain has a more complex structure. Our brain, unlike a chimpanzee's, is capable of long-term planning, abstract thinking, and complex language. Even if we sped up a chimpanzee's brain, it still would not become as smart as a human. Such an “accelerated” chimpanzee could not assemble an Ikea cabinet even in ten years, although a person needs only a couple of hours. And a chimpanzee will never be able to understand some human thoughts, even if it has until the heat death of the universe. It is not just that a chimpanzee cannot do what a person can do; its brain is simply not capable of grasping some ideas. For example, a chimpanzee can get to know a person and can learn what a skyscraper is, but it can never grasp that the skyscraper was built by people. To a chimpanzee, all big things are made by nature, and that's that. So a chimpanzee not only cannot build a skyscraper, it cannot even realize that anyone is able to build one. And all this comes from a small difference in the quality of intelligence.

The difference in quality of mind between a human and a chimpanzee is in fact tiny. In another article I illustrated the differences in the quality of intelligence among animals with a ladder.

To understand the difference in quality between human intelligence and the artificial Supermind, imagine that the Supermind stands only a couple of steps above us on this ladder. Such an AI would be only a little smarter than us, and even so it would have the same superiority over us that we have over a chimpanzee. Just as a chimpanzee cannot grasp the very possibility of building a skyscraper, we would be unable to understand things that are obvious to such a Supermind, no matter how hard it tried to explain them to us. And all this would happen when the AI is only slightly smarter than a person. When the AI reaches the top rung of our ladder, it will be to us what we are to an ant. Even if it spent years explaining only a fraction of what it knows, we would still be unable to understand it.

But the Supermind we are talking about stands much higher on this ladder. In an intelligence explosion, the AI's intelligence grows faster the smarter it gets. Climbing one step from the level of a chimpanzee will probably take years, but later, after it enters the “green zone” of the ladder, each next step will take hours instead of years. By the time the AI is 10 steps above the human level, it will probably be jumping 4 steps per second. So we need to be ready for the fact that, some time after the news about the first human-level AI, we will be sharing the Earth with a being that stands far above us on that ladder.

And maybe a million steps higher.

And since we have just established that we cannot understand the capabilities of an AI that is only a couple of steps above us, I believe it is impossible to predict what an artificial Supermind will do, and equally impossible to predict what its existence will mean for us. Anyone who claims otherwise simply does not understand what a Supermind is.

Evolution built the biological brain gradually, over hundreds of millions of years, and in that sense, if humanity creates an artificial Supermind, it will have far outdone evolution. Or perhaps this is itself how evolution works: biological intelligence develops until it becomes capable of creating an artificial Supermind, and that event completely changes the rules of the game and determines the future of all living things.

Later we will discuss why scientists believe that reaching the level at which the rules of the game completely change is only a matter of time. It will be quite interesting.

So, where does that leave us?

Nobody in the world, and certainly not me, can predict what will happen when we reach the point at which the rules of the game change. But Nick Bostrom, the well-known Oxford philosopher and popularizer of AI, divides the possible consequences of such an event into two broad categories:

First, if you look back into history, you can see that all species go through the same cycle: they appear, live for a while and then inevitably disappear.

“All species eventually go extinct” has been the most reliable rule in the history of life, about as reliable as “All people die sooner or later.” To date, 99.9% of all species that have ever existed on Earth have completed this cycle. And it seems quite obvious that once a species has begun its cycle, it is only a matter of time before another species, a natural disaster, or an asteroid ends it. Bostrom says that extinction pulls all species toward it like a magnet, and no species ever comes back.

And although many scientists acknowledge that an artificial Supermind would have enough power to destroy humanity, they still hope that AI can be used for good and that it will let us “pull ourselves to the other pole of the magnet,” that is, to the immortality of our species. Nick Bostrom believes that there are in fact two “poles,” but that no species before us was ever developed enough to pull itself toward the second one (immortality). If we manage to do this, we will be able to avoid extinction, and our species will exist forever, even though every species before us was pulled toward extinction.

If Bostrom and his colleagues are right, and everything I have read on this topic so far supports their view, then there are two pieces of interesting news:

Creating an artificial Supermind will allow a biological species for the first time in history to get into the zone of immortality.

Creating an artificial Supermind will have tremendous consequences that will push us to one or the other pole of the “magnet”.

That is, as soon as we reach the technological singularity, a new world will be created; the only question is whether there will be a place for humanity in it. The two most important questions for humanity today are “When will we reach this point?” and “What will happen to humanity when we reach it?”

No one in the world knows the answers. Plenty of very smart people have spent decades trying to find them, and this article is about what they have come up with.

Let's start with the first question: “When will we reach the point at which the rules of the game change?” or “How much time do we have before an artificial Supermind appears?”

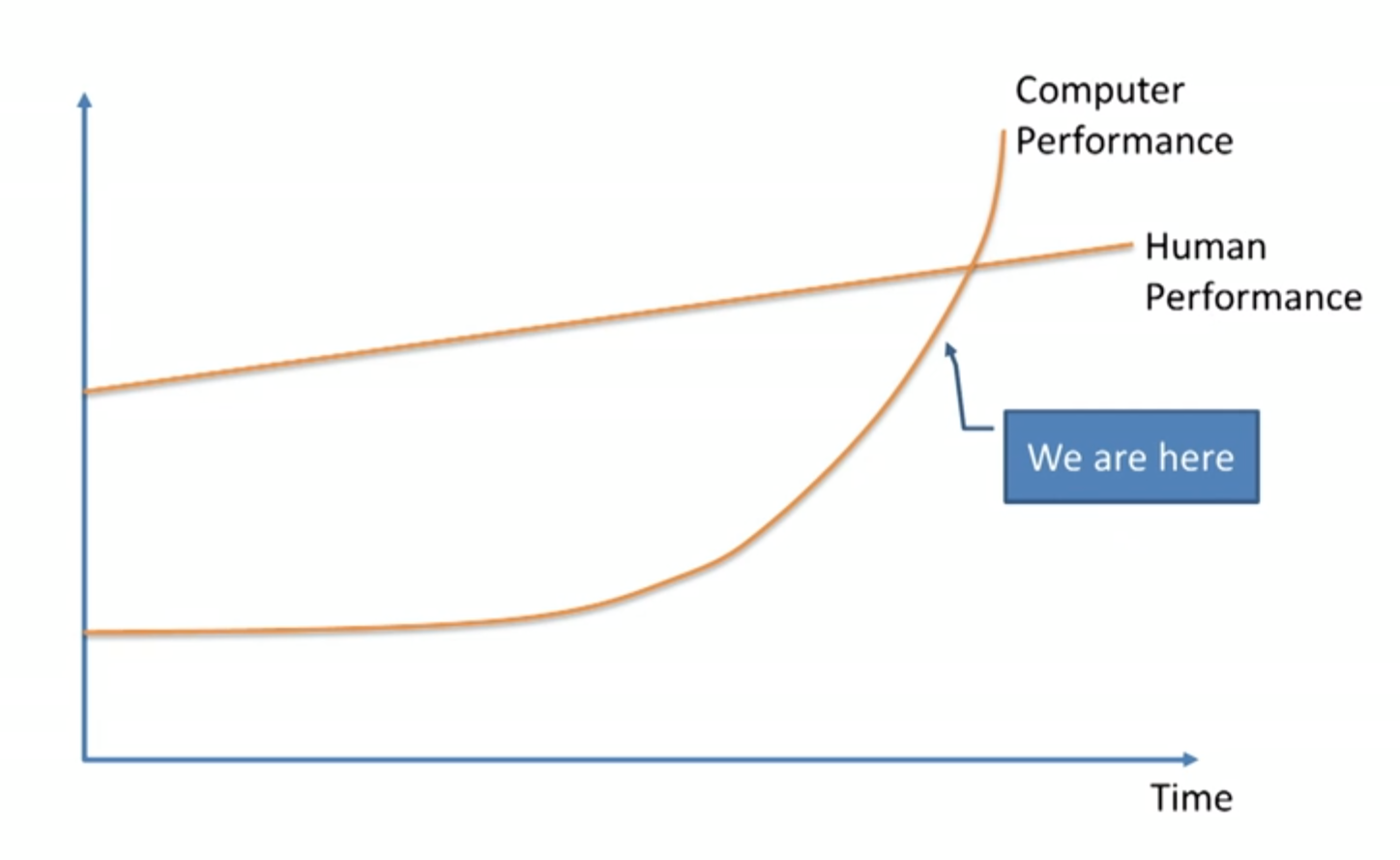

Unsurprisingly, expert opinions differ here, and the question is hotly debated among experts. Many people, such as professor Vernor Vinge, scientist Ben Goertzel, Sun Microsystems co-founder Bill Joy, and the renowned inventor and futurist Ray Kurzweil, agree with machine learning expert Jeremy Howard, who showed this graph during a TED Talk:

He argues that machine learning is now growing exponentially, and that what looks to us like slow progress today will turn into an “explosion” a little later. Others, such as Microsoft co-founder Paul Allen, psychologist Gary Marcus, New York University scientist Ernest Davis, and entrepreneur Mitch Kapor, say that people like Ray Kurzweil greatly underestimate how hard it is to develop such technologies, and that we are therefore not so close to the technological singularity.

Kurzweil's supporters reply that the only thing being underestimated is the power of exponential growth. As an example, they point to how many people in 1985 underestimated the Internet, saying that it would not have a tangible impact on our lives any time soon.

To which those who side with Paul Allen can answer that the difficulty of developing new technologies also grows exponentially, that each new breakthrough costs much more than the last, and that this cancels out the exponential growth of technology. This argument could go on forever.

Still others, including Nick Bostrom, side with neither of the opposing groups and hold that A) humanity may someday reach a technological singularity, but B) this is not certain and may take a long time.

A fourth group, represented for example by the philosopher Hubert Dreyfus, believes that all three groups described above are naive in their ideas about the singularity and that an artificial Supermind is unlikely ever to be created.

So, what do we get if we put all these opinions together?

In 2013, Bostrom surveyed hundreds of AI experts, asking each the same question: “When do you think human-level AI will be created?” He asked respondents to name an optimistic date (by which they estimate the probability of a strong AI at 10%), a realistic date (by which they estimate it at 50%), and a pessimistic date (by which they estimate it at 90%), and got the following result:

Median optimistic forecast (10% probability): 2022

Median realistic forecast (50% probability): 2040

Median pessimistic forecast (90% probability): 2075

Thus, the median respondent thinks it more likely than not that a strong AI will be created within 25 years. And the median 90% answer of 2075 means that the median respondent is almost certain a strong AI will be created within the lifetime of today's teenagers.

Another study was recently conducted by James Barrat at the annual conference on strong AI organized by Ben Goertzel. He asked the participants when they thought a strong AI would be created: by 2030, by 2050, by 2100, after 2100, or never. He got the following result:

By 2030: 42% of respondents

By 2050: 25%

By 2100: 20%

after 2100: 10%

Never: 2%

The result is about the same as Nick Bostrom's. In Barrat's survey, more than two thirds of respondents believe that a strong AI will be created by 2050, and a little under half believe that it will be created within 15 years. Interestingly, only 2% of respondents do not believe a strong AI will ever be created.
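
The “more than two thirds by 2050” figure comes from adding up the answers rather than from any single row. A minimal sketch of that addition, using only the percentages listed above (the variable and function names are illustrative, not part of Barrat's survey):

```python
# Shares of respondents per answer, in percent, as listed in the text.
barrat_survey = {
    "by 2030": 42,
    "by 2050": 25,
    "by 2100": 20,
    "after 2100": 10,
    "never": 2,
}


def cumulative_shares(shares):
    """Return the running total of the shares, in the order given."""
    running = 0
    totals = {}
    for answer, share in shares.items():
        running += share
        totals[answer] = running
    return totals


cumulative = cumulative_shares(barrat_survey)
print(cumulative["by 2030"])  # 42, a little under half
print(cumulative["by 2050"])  # 67, just over two thirds
```

The listed shares add up to 99 rather than 100, presumably because of rounding in the reported figures.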

But is the creation of a strong AI the moment that changes the rules of the game? The creation of an artificial Supermind surely is. So when, according to the experts, will we create that Supermind?

Bostrom also asked the experts how likely it is that an artificial Supermind will appear A) within two years of the creation of a strong AI (an almost instantaneous jump) and B) within 30 years. He got the following result:

The median answer gave the almost instantaneous transition from a strong AI to an artificial Supermind a probability of only 10%, and a transition within 30 years a probability of 75%.

This survey does not tell us at what point the experts would put the probability of reaching the singularity at 50%. For our purposes, a rough estimate of 20 years for the transition will do. So the median expert opinion tells us that the artificial Supermind will be created, and the singularity reached, by 2060: a strong AI by 2040, plus another 20 years to develop an artificial Supermind.

Of course, all the statistics above are speculative and reflect only the averaged opinion of the expert community. Still, they are interesting, because they show the prevailing view among the people who are moving this field forward with their own hands: that by 2060, only 45 years from now, it is quite possible that an artificial Supermind will be created and the world we live in will change completely.
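
The dates in this section are simple sums and differences. As a rough sketch, assuming the article is written in 2015 (an inference from the “45 years” to 2060, not something the text states directly) and using the article's own rough 20-year guess for the transition:

```python
# Figures quoted in the text.
MEDIAN_STRONG_AI_YEAR = 2040    # median 50% forecast for strong AI
ASSUMED_TRANSITION_YEARS = 20   # the article's rough guess, not survey data
ARTICLE_YEAR = 2015             # assumption: inferred from "45 years" to 2060


def supermind_year(strong_ai_year: int, transition_years: int) -> int:
    """Year by which the Supermind would appear under the rough estimate."""
    return strong_ai_year + transition_years


singularity_year = supermind_year(MEDIAN_STRONG_AI_YEAR, ASSUMED_TRANSITION_YEARS)
years_until_strong_ai = MEDIAN_STRONG_AI_YEAR - ARTICLE_YEAR
years_until_supermind = singularity_year - ARTICLE_YEAR

print(singularity_year, years_until_strong_ai, years_until_supermind)
# prints: 2060 25 45
```

Changing the assumed transition time shifts the singularity date one for one, which is why the 20-year guess matters as much as the survey median.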

Let us now consider the second part of the question: when we reach the singularity, toward which “pole of the magnet” will we be pulled?

The Supermind will certainly have tremendous power, so one question is very important for us: who or what will control this power, and what will motivate them?

The answer to this question will determine whether the Supermind is the greatest of all discoveries or the most terrible. Or perhaps neither.

Of course, the expert community has long been debating this topic heatedly. Here is an interesting survey conducted by Nick Bostrom: 52% of respondents believe that the consequences of creating a strong AI will be good or very good, and 31% believe that they will be bad or very bad. 17% of respondents chose the neutral option. In other words, AI experts believe that a strong AI is more likely to be a beneficial invention. I think that if the survey had asked about the consequences of creating an artificial Supermind, there would have been far fewer neutral answers.

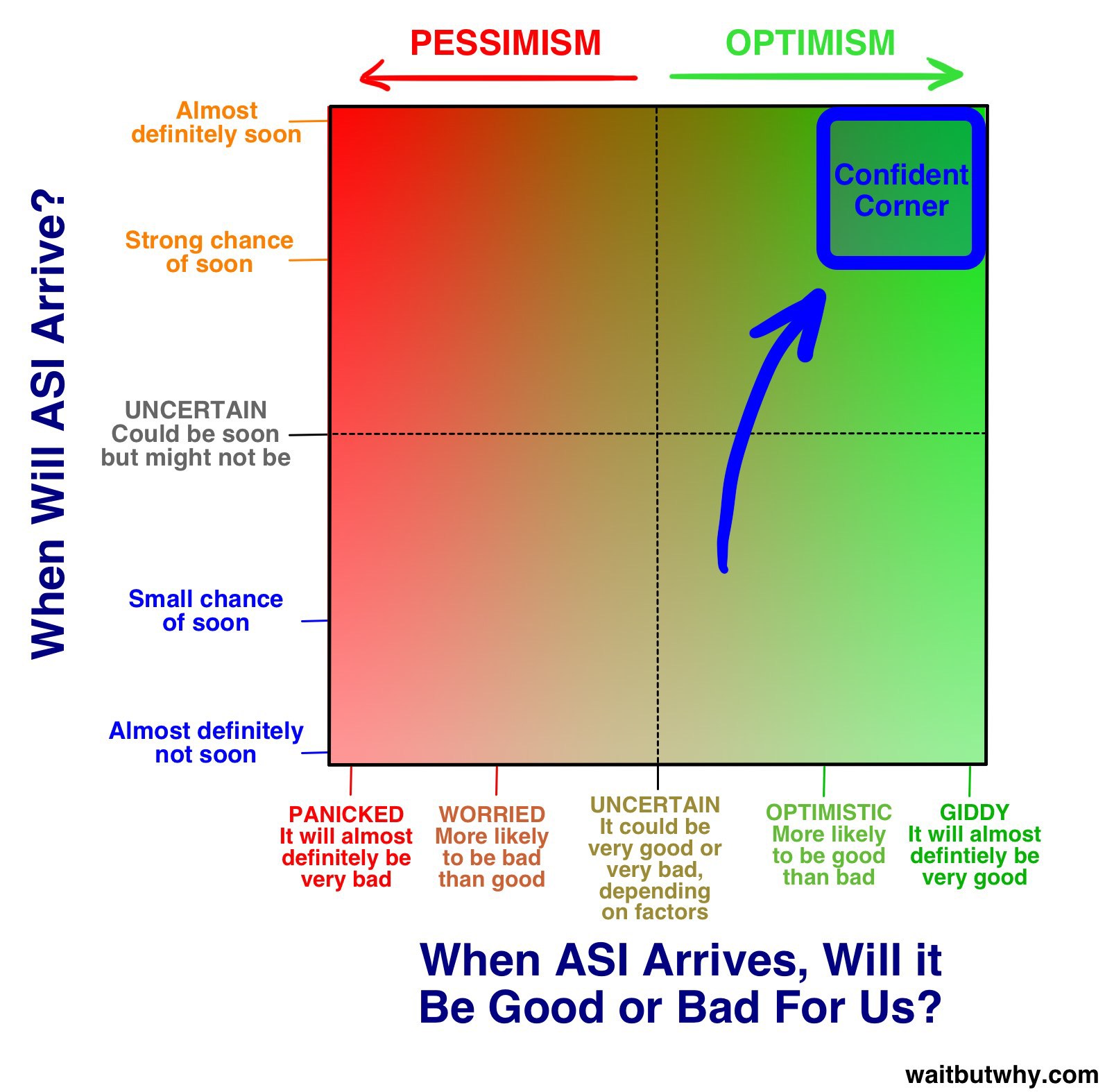

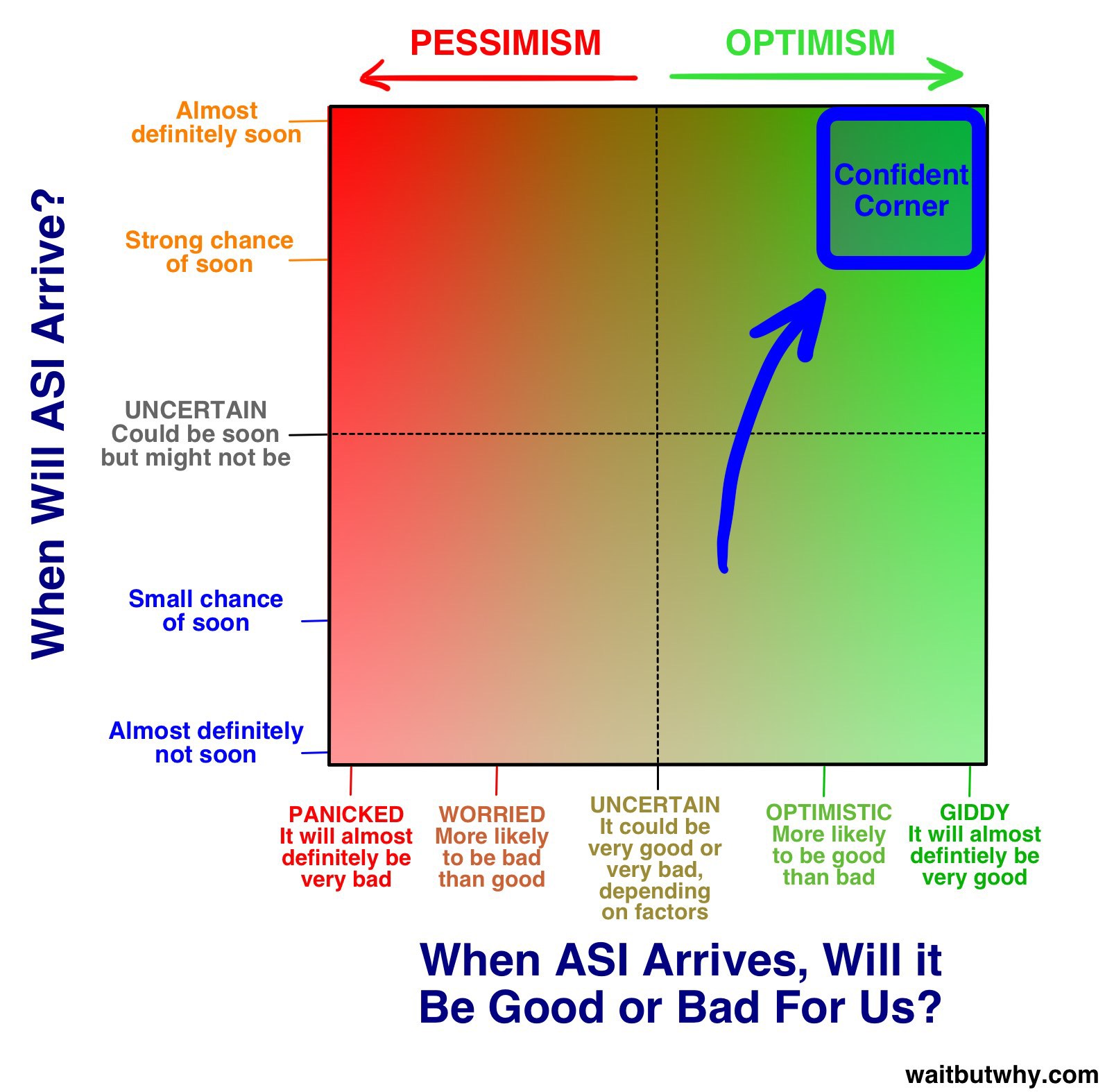

Before we seriously discuss the likely consequences, both bad and good, I suggest looking at the diagram, which combines “when will this happen” and “will the result be good or bad” according to AI experts.

Its vertical axis shows time, and its horizontal axis shows optimism about the creation of the Supermind.

I plotted the experts' opinions on timing and consequences on this chart, which gave me several groups according to their forecasts. We will talk about the main group later. Before I began to explore the topic of AI, I did not think it was so relevant today. I think most people do not take these conversations very seriously either, and there are several reasons for this:

As I said in the first part of the article, science fiction has created an unrealistic image of AI in the public mind, so no one takes it seriously. James Barrat compared this to how people would react if the Ministry of Health issued a warning that in 10 years we will face a serious epidemic of vampirism that threatens humanity.

Because of cognitive biases inherent in humans, it is hard for us to believe that something is real until we see evidence. I'm sure that computer scientists in 1988 were already discussing how seriously the Internet would affect people's lives, but people did not believe that the Internet could really have such a strong influence. The reason is that computers in 1988 could not do the interesting things modern ones can, and a person looking at their computer thought, “Can this thing change my life? Really?” People's imagination was limited by their experience, which did not let them picture what computers were really capable of. AI is underestimated today in exactly the same way. We hear from all sides that it will be the next great invention, but our imagination is limited by our experience, which does not let us picture what AI will be capable of and how much of our lives it will change. Experts are fighting these cognitive biases, trying to show us what a strong AI will be capable of.

And even if you did believe it, how much time per day do you spend thinking about the fact that you will not get to see most of humanity's future history? Not very much, I think, even though this matters far more to you than most of what you do think about. This happens because our brain is programmed to concentrate on the small problems we face every day, even while unimaginably large long-term processes unfold around us.

One of the goals of my articles on the Supermind is to get you to think about the coming AI revolution and to help you settle on one of the experts' points of view.

During my research, I found a dozen different opinions on the topic of AI, but I soon noticed that even within the core group of experts there is a division into subgroups, and more than three quarters of the experts fall into the following two subgroups:

We will talk in more detail about both groups and start from the top right.

Why the future may be better than our wildest dreams

While getting to know the world of AI, I was surprised at how many experts belong to the group in the upper right corner. Let's call it the optimistic group.

The people in the optimistic group are overflowing with excitement about the wonderful future that will arrive the moment the Supermind is created. They are sure that this is where we are headed. For them, the future is all of our wildest dreams come true.

What sets them apart from other AI experts is not just their great optimism but their complete confidence that the creation of an artificial Supermind will bring us only good. Their critics say that this confidence has simply blinded them to the problems we may face. The optimists retort that it is foolish to dwell on scenarios that predict the end of the world, because on balance technology does more good than harm. In this article we will look at both points of view so that you can form your own opinion. Just try to accept that what you are reading about could really happen. If you showed a person from a hunter-gatherer society our world of abundance, home comfort, and technology, it would seem to them an impossible, invented, magical world. You need enough imagination to understand that the coming change may be just as huge.

Nick Bostrom describes three possible ways to apply the artificial Supermind:

As an oracle that can answer almost any question put to it. For example: “How can we create a more efficient internal combustion engine?” A primitive example of such an oracle is Google.

As a genie that carries out the tasks assigned to it, for example “Build a new, more efficient internal combustion engine,” and after finishing a task waits for its next command.

As a sovereign that is given broad powers and can decide for itself how best to solve a problem, for example: “Invent a faster, cheaper, and safer way to transport people for personal use.”

For the artificial Supermind, these questions, which are incredibly difficult for us, will be comparable to the request “My pen fell off the table, help me,” which any person can handle simply by picking the pen up from the floor and putting it back on the table.

Eliezer Yudkowsky, a member of the anxious group on our chart, described this situation well:

“There are no difficult problems, only problems that are difficult for a certain level of intelligence. Even a small step up in intelligence moves some problems from ‘incredibly difficult’ to ‘obvious,’ and if you climb enough steps, the solutions to all of our problems become obvious.”

Of all the enthusiastic scientists, inventors, and businesspeople in the optimistic group, we will choose one as our guide to the world of bright ideas about the future.

That guide will be Ray Kurzweil. He leaves few people indifferent: most either believe him or call him a charlatan. But there are also those who take a middle view. For example, Douglas Hofstadter describes the ideas in Kurzweil's book like this: “It's as if you took a lot of good food and mixed it in a blender with dog excrement, so that you can no longer tell what is good and what is bad.”

But whether or not you like Kurzweil's ideas, you have to admit that he is impressive. He began inventing as a child and later invented quite a few very useful things: he created the world's first flatbed scanner, the first scanner to convert text into synthesized speech (allowing blind people to read ordinary text), the famous Kurzweil synthesizer (the world's first electric piano), and the first commercial speech recognizer. He is also the author of five bestsellers. He is known as a bold forecaster, and his predictions often come true, including one made in the late 80s, when he predicted that by the year 2000 the Internet would already have a serious impact on our lives. The Wall Street Journal called Kurzweil a “restless genius,” Forbes a “thinking machine,” and Inc. Magazine “Edison's rightful heir.” Even Bill Gates weighed in, calling Ray “the most suitable person to predict the consequences of creating AI.” In 2012, Kurzweil received an offer from Larry Page to become a technical director at Google. In 2011, Ray co-founded Singularity University, which is hosted at NASA and partly sponsored by Google. Not bad for one person, you must agree.

Why are we so interested in his biography? Because when you learn what kind of future Ray thinks awaits us, he sounds crazy. The most surprising thing is that he is not crazy: he is a very intelligent and educated man, not someone living in a world of his own invention. We may suppose that he is mistaken in his predictions, but he is certainly no fool. And I am glad that Kurzweil's predictions have so often come true, because I really want to live in the future as he sees it. I don't think I'm alone. There are quite a few such believers in the singularity; Peter Diamandis and Ben Goertzel, for example, also call themselves singularitarians. In their opinion, this is what awaits us in the coming century:

Time

Kurzweil believes that a strong AI will be created by 2029, and that by 2045 we will not only have an artificial Supermind but will already be living in the world of the technological singularity. Until recently, his predictions about the pace of AI development seemed too optimistic, to say the least. Now, given the rapid progress of narrow AI over the last 15 years, reality appears to be unfolding in line with Kurzweil's predictions. Nevertheless, his predictions are still more optimistic than the median response in Nick Bostrom's survey (a strong AI by 2040 and an artificial Supermind by 2060).

For the year 2045 to be in line with Kurzweil's predictions, three technological revolutions must take place: the biotechnological, the nanotechnological, and, most important, the AI revolution.

Before we continue the conversation about AI, I suggest a short detour into nanotechnology.

Nanotechnology sidebar

Nanotechnology is any manipulation of objects between 1 and 100 nanometers in size. A nanometer is a billionth of a meter. This range covers viruses (100 nm), DNA (10 nm wide), and objects as small as large molecules such as hemoglobin (5 nm) or medium-sized molecules such as glucose (1 nm). If and when we succeed at nanotechnology, the next step will be to manipulate individual atoms, which are only one order of magnitude smaller (~0.1 nm).

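To get a feel for this scale, take the International Space Station, which orbits at a height of about 431 km. A giant tall enough to reach it would be about 250,000 times bigger than a person. Scale the 1-100 nm nanotechnology range up by the same factor of 250,000 and you get 0.25 mm to 2.5 cm. So for us, nanotechnology is like such a giant trying to assemble intricate objects out of parts that size, and manipulating individual atoms would mean handling parts a small fraction of a millimeter across.

The idea of manipulating matter atom by atom goes back to the physicist Richard Feynman, who argued in 1959 that the principles of physics do not rule it out.

Nanotechnology became a serious field in 1986, when Eric Drexler laid its foundations in his book “Engines of Creation: The Coming Era of Nanotechnology.” For more current ideas, Drexler points readers to his later book, Radical Abundance.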

Self-replication, in which nanobots build copies of themselves, seems like a good method only as long as an accident does not destroy life on Earth. The same exponential growth that lets you quickly create trillions of nanobots is what makes self-replication so frightening. No system is immune to failure, and if the replication system fails and does not stop the process once the required number of nanobots is reached, they will keep “multiplying.” Such nanobots could use any carbon-containing material to build new nanobots, and all life on Earth is carbon-based. Living matter on Earth contains approximately 10^45 carbon atoms, and a nanobot would consist of approximately 10^6 carbon atoms, so 10^39 nanobots would consume all life on Earth. That takes only 130 replication cycles, since 2^130 is roughly 10^39. Oceans of nanobots (this is the “gray goo”) would flood the Earth. Scientists believe a nanobot would need about 100 seconds per replication cycle, which means the nanobots could destroy all life on Earth within 3.6 hours.
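
The gray goo numbers can be checked with a few lines of arithmetic. The sketch below uses only the figures from the text and assumes, for illustration, that the process starts from a single nanobot and that the population exactly doubles in every replication cycle:

```python
# Figures quoted in the text.
CARBON_ATOMS_IN_LIFE = 10**45   # carbon atoms in living matter on Earth
ATOMS_PER_NANOBOT = 10**6       # carbon atoms needed to build one nanobot
SECONDS_PER_CYCLE = 100         # estimated time for one replication cycle


def gray_goo_estimate(carbon_atoms, atoms_per_nanobot, seconds_per_cycle):
    """Return (nanobots needed, doubling cycles, hours) for runaway replication.

    Assumes one starting nanobot and a population that doubles each cycle.
    """
    nanobots_needed = carbon_atoms // atoms_per_nanobot
    # Smallest n such that 2**n >= nanobots_needed.
    cycles = (nanobots_needed - 1).bit_length()
    hours = cycles * seconds_per_cycle / 3600
    return nanobots_needed, cycles, hours


nanobots, cycles, hours = gray_goo_estimate(
    CARBON_ATOMS_IN_LIFE, ATOMS_PER_NANOBOT, SECONDS_PER_CYCLE
)
print(nanobots == 10**39, cycles, round(hours, 1))
# prints: True 130 3.6
```

Because the count doubles every cycle, the result is not very sensitive to the inputs: a thousand times more carbon would add only about 10 more cycles, or roughly 17 minutes.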

Or take another possible scenario. What if nanobots fall into the hands of terrorists who manage to reprogram them, for example so that the nanobots spread quietly across the Earth for weeks and then, at the chosen moment, begin uncontrolled “reproduction” and destroy life on Earth before we have any chance to respond?

Such apocalyptic scenarios have been actively discussed in recent years, but the likelihood of events unfolding this way is most likely greatly exaggerated. Eric Drexler, who coined the term “gray goo,” sent me a message about such horror stories: “People love horror films, which is why zombies are so popular. Such stories themselves blow your mind.”

Once we master nanotechnology, we will be able to create a huge variety of things: devices, clothes, food, artificial blood cells, robots that destroy viruses and cancer cells, that is, almost anything. In a world that has mastered nanotechnology, the cost of a material no longer depends on how scarce it is in nature or how hard it is to purify; what matters is the complexity of its atomic structure. In such a world, a diamond could be cheaper than an eraser.

But we have yet to master nanotechnology, and so far it is not clear whether we are overestimating or underestimating how hard the necessary technologies will be to create. Nevertheless, we are already on the verge of using nanotechnology on a massive scale. Kurzweil believes that the nanotechnology revolution will occur by 2020. Governments are already aware of the potential of nanotechnology and are investing billions in its development (the United States, the European Union, and Japan have already invested more than $5 billion).

Just imagine a Supermind in control of an army of nanobots. Nanotechnology is something we came up with ourselves and are now actively developing. But remember that all our abilities are nothing compared to those of the Supermind. It may come up with something far more complex that we will not even be able to understand. So when we think about the question “Will the AI revolution be good or bad for us?”, we have to accept that it is almost impossible to overestimate the scale of the changes AI will bring to our lives. If the reasoning that follows seems too unrealistic to you, just remember that the Supermind can do things we cannot even imagine, things that will simply be beyond our comprehension, and we cannot even remotely predict what they may be.

What AI can do for us

A Supermind armed with the latest technology, which it will of course invent itself, will be able to solve any problem humanity faces. Global warming? The Supermind can cut carbon dioxide emissions by offering us other, more efficient ways to generate electricity that do not require fossil fuels. Then it will propose a way to remove the carbon dioxide that has already accumulated in the air. Cancer and other diseases? They will not be a problem for the AI either; it can bring about a breakthrough in medicine. Hunger? Our Supermind will be able to build any kind of food using an army of nanobots, and it will be identical to real food at the molecular level, that is, it will in fact be real. Nanobots can turn a pile of garbage into a steak or any other food, which need not even look like the real thing (just imagine a giant cube-shaped apple), and the food will then be delivered by the latest means of transport. Of course, the Earth will become a paradise for animals, whose exploitation by humans will also come to an end. The Supermind may also be able to save endangered species and even bring back extinct ones from preserved DNA. It will solve our economic problems and the problems of international trade with the same ease, and even the hardest questions of philosophy and ethics will be settled by the Supermind.

But there is one more thing within the Supermind's power, something so tantalizing that it has completely changed my outlook:

The Supermind can overcome death.

A few months ago I wrote about the envy I felt toward more advanced civilizations that might already have conquered death. I did not know then that I would later write an article that made me believe I might live to see the moment when humanity finally defeats death. I think I can convince you of this too.

Evolution has no reason to extend the lifespan of living beings. It is enough for an individual to live long enough to produce offspring and raise them to the age at which they can find their own food and defend themselves. So mutations that significantly prolonged life brought no advantage in natural selection. As a result, we are what W. B. Yeats described as “a soul fastened to a dying animal.”

Since everyone has always died sooner or later, we are convinced that death is inevitable. We think of aging like the passage of time: the clock keeps ticking and there is no way to stop it. But that is not so. Richard Feynman once wrote:

One of the most amazing things in biology is that nothing in it points to the necessity of death. If we want, for example, to build a perpetual motion machine, there is a mass of laws saying that either this is impossible or the laws of physics are wrong. But in biology there are no laws that speak of the inevitability of death. This makes me think that it is only a matter of time before biologists discover the cause of death and cure humanity of this terrible disease.

In fact, aging is not tied to time: time may keep moving while aging is stopped. Aging is a process of gradual physical wear. A car also wears out gradually, but can its aging be stopped? If you replace parts as they break down, the car can run forever. The human body is no different in this respect, just a little more complicated.

Kurzweil talks about smart, wi-fi-connected nanobots that travel through the bloodstream, doing various jobs to keep the body in order and gradually replacing damaged cells. If this process is perfected (or replaced by a better method that the Supermind comes up with), it will not only keep the body healthy but also reverse aging. After all, the difference between a 60-year-old body and a 30-year-old body comes down to a set of physical parameters that nanobots could change. A Supermind could build a device that resets age: a 60-year-old walks in, and out walks someone with the body and skin of a 30-year-old. Even a brain suffering from serious disease could be renewed this way; the Supermind would surely figure out how to do it without damaging the person's personality and memories. A 90-year-old suffering from dementia could enter the device and come out renewed, ready to continue a career or even start a new one. It sounds too fantastic, but the body is just a collection of atoms, and the Supermind would surely be able to manipulate atoms and atomic structures with ease, so this is not so far-fetched.

Kurzweil also believes that artificial materials will be integrated into the biological body more and more over time. First, organs will be replaced with more efficient artificial counterparts that last longer than biological ones. Then we could begin redesigning the body to better suit our needs: red blood cells could be replaced by perfected nanobots that take over their function but propel themselves, which would let us do away with the heart entirely. We could even reach the brain and enhance its computational capacity so much that a person could think billions of times faster than now. The brain could also have direct access to external devices, letting people confer over the Internet without a single word, exchanging thoughts directly.

Human capabilities will be virtually limitless. People have separated sex from its original function, turning it into entertainment as well. Kurzweil believes the same fate awaits food. Nanobots will deliver the necessary nutrients to the cells and remove waste products and other unneeded substances from the body. Robert Freitas has already designed artificial blood cells that could let a person run at full speed without taking a single breath, so you can imagine what a Supermind could come up with to improve our physical abilities. Virtual reality will enter a new stage of development, since nanobots will be able to intercept the signals of our nervous system and replace the incoming information, creating entirely new sensations, sounds and smells.

Kurzweil believes that, in the end, humanity will completely replace biological organs with artificial ones. We will then look at biological materials as terribly primitive and find it hard to believe that humans were once made of them. We will read about that terribly dangerous, primitive era when disease, accidents and aging could easily kill a person against their will. We will live in a time when artificial and biological intelligence are merged. This is how, according to Kurzweil, we will conquer biology and become indestructible and eternal; this is his vision of being “pulled to the other pole of the magnet.” And he is convinced that it will happen soon.

You will not be surprised that Kurzweil's ideas draw serious criticism. His prediction of a technological singularity by 2045 is ridiculed by many and has earned labels such as “the rapture of the nerds” and “intelligent design for the IQ 140 people.” Many question his overly optimistic forecast of the pace of progress, his level of understanding of the human body and brain, and his application of Moore's law, which normally describes advances in hardware, to a wide range of other things, including software. For every expert who believes Kurzweil is right, there are three who believe he is wrong.

But the most surprising thing is that even the experts who disagree with Kurzweil do not dispute that everything he describes is possible. I expected his critics to say something like “This is simply impossible,” but instead they say something quite different: “Yes, all of this can happen if we make a safe transition to an artificial Supermind, but that transition is the biggest challenge.” Bostrom, one of the most prominent experts in the field of AI, says:

It is difficult even to imagine a problem that the Supermind could not solve, or at least help us solve. Disease, poverty, environmental problems, unnecessary suffering of every kind: solving all of these will not be difficult for a Supermind that has access to nanotechnology. The Supermind could also make our lives unlimited, either by stopping aging with nanomedicine or by offering us the option of moving into an artificial body and uploading our consciousness into a computer. A Supermind could easily create mechanisms to enhance our thinking abilities. It would help us create a wonderful world in which we could devote our lives to enjoyable things: personal growth or whatever else matters to each of us.

This is a quote from someone who does not belong to the optimistic group. And this is what you keep seeing when you read the experts who criticize Kurzweil's ideas: they nevertheless agree that everything he predicts is feasible, provided we create a safe artificial Supermind. I like Kurzweil's ideas precisely because they describe the bright side of creating a Supermind, whose benefits will be ours if the Supermind turns out to be benevolent.

The most serious criticism I have heard of the optimistic group is that they badly underestimate the dangers a Supermind poses. In his famous book The Singularity Is Near, Kurzweil devotes only about 20 pages out of 700 to the dangers that may await us. As I have already said, our fate once a Supermind appears depends entirely on who controls it and what goals it pursues. Kurzweil answers both questions as follows: “Creating an artificial Supermind takes many combined efforts, so it will be tied into the infrastructure of our civilization. It will therefore be closely connected with humanity, which means it will reflect our values.”

But if the answer is that simple, why are so many of the smartest people so worried about the prospect of an artificial Supermind arriving soon? Why does Stephen Hawking say that the development of an artificial Supermind “could spell the end of the human race,” why does Bill Gates say he does not understand why some people are not concerned, and why does Elon Musk fear that we are “summoning the demon” with our own hands? Why do many experts call the artificial Supermind the gravest threat facing humanity? All these people, and other members of the less optimistic part of the expert community, disagree with Kurzweil's overly optimistic view. They are very, very concerned about the coming AI revolution and are not inclined to overestimate the chances that the Supermind will be good to us. Their attention is fixed on the dark side of technological development; they fear the future and are not sure we can survive in it at all.


The shocking fact is that an artificial Supermind that surpasses human abilities in every area may be created within our lifetime. What do you think about that?

Before going further, I want to remind you what a Supermind is. There is a huge difference between a fast artificial Supermind and a high-quality one. When we imagine a very smart computer, we often picture something as intelligent as a person but able to perform far more operations per unit of time, so that it comes up in five minutes with what would take a person a decade. That is impressive, but the main difference between an artificial Supermind and the human mind will be one of quality. A human is smarter than a chimpanzee not because a human thinks faster, but because the human brain has a more complex structure. Our brain, unlike the chimpanzee's, is capable of long-term planning, abstract thinking and complex linguistic interaction. Even if we sped up a chimpanzee's brain, it would not become as smart as a human. Such an “accelerated” chimpanzee could not assemble an Ikea cabinet even in ten years, though a person needs only a couple of hours. And a chimpanzee will never be able to grasp some human thoughts, even if it had until the heat death of the universe. It is not merely that the chimpanzee cannot do what a human can; its brain is simply incapable of understanding some ideas. For example, a chimpanzee can get to know a human and learn what a skyscraper is, but it can never realize that skyscrapers are built by humans. To a chimpanzee, everything that big is a part of nature, period. So not only can a chimpanzee not build a skyscraper, it cannot even conceive that anyone could build one. And all this comes from a small difference in the quality of intelligence.

In the grand scheme of things, the difference in quality of mind between a human and a chimpanzee is tiny. In an earlier article I illustrated the differences in the quality of animal intelligence with a ladder:

To understand the difference between the quality of human intelligence and that of an artificial Supermind, imagine that the Supermind is only a couple of rungs above us on this ladder. Such an AI would be only slightly smarter than us, and yet it would have the same superiority over us that we have over a chimpanzee. Just as a chimpanzee cannot grasp that a skyscraper can be built, we would be unable to understand things that are obvious to such a Supermind, no matter how it tried to explain them to us. And all this when the AI is only slightly smarter than a human. When the AI reaches the top rung of our ladder, it will be to us what we are to an ant. Even if it spent years explaining just a fraction of what it knows, we still could not understand it.

But the Supermind we are talking about stands much higher on this ladder. In an intelligence explosion, the AI's intelligence grows faster the smarter it gets. Climbing one rung up from the chimpanzee level may take years, but once the AI enters the “green zone” of the ladder, each further rung will take hours instead of years. By the time the AI's mind is 10 rungs above the human level, it may be jumping 4 rungs per second. So we need to be prepared for the possibility that, some time after the news of the first human-level AI, we will be sharing the Earth with something that sits on the ladder here:

And maybe a million steps higher.

And since we have just established that it is impossible to understand the capabilities of an AI only a couple of rungs above us, I believe it is impossible to predict what an artificial Supermind will do, or what its existence will mean for humanity. Anyone who claims otherwise simply does not understand what a Supermind is.

Evolution built the biological brain gradually, over hundreds of millions of years; in that sense, if humanity creates an artificial Supermind, it will have far outdone evolution. Or perhaps this is how evolution works: biological intelligence develops until it becomes capable of creating an artificial Supermind, and that event completely changes the rules of the game and determines the future of all living things:

Later we will discuss why scientists believe that reaching the level at which the rules of the game completely change is only a matter of time. It will be quite interesting.

So where does that leave us?

Nobody in the world, and certainly not me, can predict what will happen when we reach the level of changing the rules of the game. But Nick Bostrom, the famous philosopher from Oxford and popularizer of AI, divides the possible consequences of such an event into two broad categories:

First, if you look back into history, you can see that all species go through the same cycle: they appear, live for a while and then inevitably disappear.

“All species eventually go extinct” has been the most reliable rule in the history of life, about as reliable as “All people die sooner or later.” To date, 99.9% of all species that have ever existed on Earth have completed this cycle. And it seems quite clear that once a species begins its cycle, it is only a matter of time before another species, a natural disaster, or an asteroid ends it. Bostrom describes extinction as a “magnet” that attracts all species, and no species ever comes back from it.

And although many scientists acknowledge that an artificial Supermind would have enough power to destroy humanity, they still hope that AI can be used for good and will let us “pull ourselves to the other pole of the magnet,” that is, to the immortality of our species. Nick Bostrom believes there are in fact two “poles,” but no species before us was advanced enough to pull itself to the second one (immortality). If we manage to do so, we will avoid extinction and our species will exist forever, even though every species before us has been pulled toward extinction.

If Bostrom and his colleagues are right, and everything I have read on the topic so far suggests they are, we have two interesting pieces of news:

Creating an artificial Supermind will, for the first time in history, allow a biological species to reach the zone of immortality.

Creating an artificial Supermind will have tremendous consequences that will push us to one or the other pole of the “magnet”.

That is, as soon as we reach the technological singularity, a new world will be created; the only question is whether there will be a place for humanity in it. The two most important questions for modern humanity are “When will we reach this level?” and “What will happen to humanity when we reach it?”

No one in the world knows the answers. Plenty of very smart people have spent decades trying to find them, and this article is about what they have concluded.

Let's start with the first question: “When will we reach the level of changing the rules of the game?” Or “How much time do we have before the appearance of an artificial Supermind?”

It is not surprising that expert opinions differ here; the question is hotly debated among specialists. Many, such as Professor Vernor Vinge, scientist Ben Goertzel, Sun Microsystems co-founder Bill Joy, and the renowned inventor and futurist Ray Kurzweil, agree with machine learning expert Jeremy Howard, who showed this graph during a TED Talk:

He argues that machine learning is now growing exponentially, and that what looks like slow progress today will turn into an “explosion” a little later. Others, such as Microsoft co-founder Paul Allen, psychologist Gary Marcus, New York University scientist Ernest Davis, and entrepreneur Mitch Kapor, say that people like Ray Kurzweil greatly underestimate the difficulty of developing such technologies, and that we are therefore not so close to the technological singularity.

Kurzweil's supporters reply that the only thing being underestimated is the power of exponential growth. As an example, they point to how, in 1985, many people underestimated the Internet, saying it would not have a tangible effect on our lives any time soon.

To which Paul Allen's camp can answer that the difficulty of developing new technologies also grows exponentially: each new breakthrough costs much more than the last, which cancels out the exponential growth of technological progress. This argument can go on forever.

A third group, which includes Nick Bostrom, sides with neither camp and holds that A) humanity may well reach the technological singularity someday, but B) this is not certain and may take a long time.

A fourth group, which includes the philosopher Hubert Dreyfus, considers all three groups above naive in their speculation about the singularity and believes that an artificial Supermind is unlikely ever to be created.

So, what do we get if we put all these opinions together?

In 2013, Bostrom surveyed hundreds of AI experts, asking each the same question: “When do you think human-level AI will be created?” Respondents were asked to name an optimistic date (by which they put the probability of a strong AI at 10%), a realistic date (50% probability) and a pessimistic date (90% probability). The results were:

Median optimistic forecast (10% probability): 2022

Median realistic forecast (50% probability): 2040

Median pessimistic forecast (90% probability): 2075

Thus, the median respondent thinks it more likely than not that a strong AI will be created within 25 years. The median 90% answer of 2075 means that the median respondent is almost certain a strong AI will be created within the lifetime of today's teenagers.

Another study was recently conducted by James Barrat at the annual conference on strong AI organized by Ben Goertzel. Barrat asked the conference participants when they thought a strong AI would be created: by 2030, by 2050, by 2100, after 2100, or never. The results were:

2030: 42% of respondents

2050: 25%

2100: 20%

after 2100: 10%

Never: 2%

The result is about the same as Bostrom's. In Barrat's survey, more than two thirds of respondents expect a strong AI by 2050, and a little under half (42%) expect it within 15 years. Interestingly, only 2% of respondents do not believe a strong AI will ever be created.
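The "more than two thirds by 2050" figure is simply a running total of the quoted shares. A minimal sketch of that arithmetic is below; the dictionary keys and the function name are illustrative, and the percentages are the ones quoted above (they sum to 99, presumably because of rounding).

```python
# Shares of respondents per answer in Barrat's survey, in percent,
# exactly as quoted in the text above.
barrat_survey = {
    "by 2030": 42,
    "by 2050": 25,
    "by 2100": 20,
    "after 2100": 10,
    "never": 2,
}


def cumulative_shares(shares):
    """Return running totals of the shares, in the order the answers are listed."""
    total = 0
    running = {}
    for answer, share in shares.items():
        total += share
        running[answer] = total
    return running


cumulative = cumulative_shares(barrat_survey)
for answer, share in cumulative.items():
    print(f"{answer}: {share}% of respondents (cumulative)")
```

The cumulative value for "by 2050" is the share of participants who expect a strong AI by mid-century.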

But is the creation of a strong AI the moment that changes the rules? The creation of an artificial Supermind certainly will be such a moment. So when, according to the experts, will we create it?

Bostrom also asked the experts how soon after a strong AI an artificial Supermind would be created: A) within two years of creating a strong AI (an almost instantaneous jump), or B) within 30 years. The results were:

The median respondent put the probability of an almost instantaneous transition from a strong AI to an artificial Supermind (within two years) at only 10%, and the probability of a transition within 30 years at 75%.

This survey does not tell us at what point the experts would put the probability of the transition at 50%. For our purposes, a rough estimate of 20 years will do. The median expert opinion thus tells us that an artificial Supermind will be created, and the singularity reached, by around 2060: a strong AI by 2040, plus another 20 years for the development of an artificial Supermind.

Of course, all the statistics above are speculative and reflect only the median opinion of the expert community. Still, they are telling: the people who are advancing this field with their own hands consider it quite possible that by 2060, only 45 years from now, an artificial Supermind will be created and the world we live in will change completely.
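The timeline above is plain addition, and it can be written out as a small sketch. Two values here are assumptions rather than survey results: the 20-year transition is the article's own rough guess, and the writing year of 2015 is only inferred from the phrases "within 25 years" and "45 years". The variable names are illustrative.

```python
# Median answers quoted from Bostrom's survey: the year by which a strong AI
# is expected with the given probability.
median_forecast = {0.10: 2022, 0.50: 2040, 0.90: 2075}

# Assumptions, not survey results:
WRITING_YEAR = 2015            # inferred from "within 25 years" and "45 years"
ASSUMED_TRANSITION_YEARS = 20  # the article's rough guess, strong AI -> Supermind

strong_ai_year = median_forecast[0.50]
supermind_year = strong_ai_year + ASSUMED_TRANSITION_YEARS

print(f"Strong AI (median 50% answer): {strong_ai_year}, "
      f"{strong_ai_year - WRITING_YEAR} years after {WRITING_YEAR}")
print(f"Supermind (rough estimate): {supermind_year}, "
      f"{supermind_year - WRITING_YEAR} years after {WRITING_YEAR}")
```

Changing `ASSUMED_TRANSITION_YEARS` shows how strongly the 2060 figure depends on that single guess.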

Let us now turn to the second part of the question: when we reach the singularity, toward which “pole of the magnet” will we be pulled?

The Supermind will certainly have tremendous power, and the crucial question for us is: who or what will control that power, and what will their motivation be?

The answer to this question will determine whether the Supermind is the greatest of all inventions, the most terrible, or something in between.

Of course, the expert community has long been in heated debate on this topic. Bostrom's survey also asked about it: on average, respondents put the probability that the consequences of creating a strong AI will be good or very good at 52%, and the probability that they will be bad or very bad at 31%. The neutral option received 17%. In other words, experts in the field of AI lean toward the view that a strong AI would be a beneficial invention. I think that if the survey had asked about the consequences of creating an artificial Supermind, the neutral share would have been much smaller.

Before we seriously discuss the likely consequences, good and bad, I suggest looking at the diagram below. It combines “when will this happen” and “will the result be good or bad” according to the opinions of experts in the field of AI:

The vertical axis shows time, and the horizontal axis shows optimism about the creation of the Supermind.

I plotted the experts' opinions on timing and consequences on this chart, which produced several groups according to their forecasts. We will talk about the main group later. Before I began exploring the topic of AI, I did not think it was so relevant today. I think most people do not take these conversations very seriously either, and there are several reasons for this:

As I said in the first part of the article, science fiction has created an unrealistic image of AI in the public mind, so no one takes it seriously. James Barrat compared this to how people would react if the Ministry of Health issued a warning that in 10 years we would face a serious epidemic of vampirism threatening humanity.

Because of cognitive biases inherent in humans, it is hard for us to believe something is real until we see evidence. I am sure that computer scientists in 1988 were already discussing how seriously the Internet would affect people's lives, but people did not believe the Internet could really have such a strong influence. The reason is that computers in 1988 could not do the interesting things modern ones can, and a person looking at their computer thought, “Can this thing change my life? Really?” People's imagination was limited by their experience, which did not let them picture what computers were really capable of. AI is underestimated in exactly the same way today. We hear from all sides that it will be the next great invention, but our imagination is limited by our experience, which keeps us from picturing what AI will be capable of and how much of our lives it will change. Experts are fighting these cognitive biases, trying to show us what a strong AI will be capable of.

And even if we did believe it: how much time a day do you spend thinking about the fact that you will not be there to see most of humanity's future? Not much, I think, even though it is far more significant than anything else on your mind today. That is because our brains are wired to focus on the small problems we face every day, no matter how unimaginable the long-term processes unfolding around us.

One of the goals of my articles on the Supermind is to get you thinking about the coming AI revolution and to help you settle on one of the expert points of view.

During my research I found a dozen different opinions on AI, but I soon noticed that even within the main group of experts there is a split into subgroups, and that more than three quarters of the experts fall into the following two subgroups:

We will talk about both groups in more detail, starting with the one at the top right.

Why the future may be better than our wildest dreams

As I got to know the world of AI, I was surprised by how many experts belong to the group in the upper right corner; let's call it the optimistic group.

People in the optimistic group are brimming with excitement about the wonderful future that will arrive once the Supermind is created. They are sure that this is where we are headed. For them, the future is our wildest dreams come true.

What sets them apart from other AI experts is not just their optimism but their complete confidence that the creation of an artificial Supermind will bring us only good. Their critics say that this confidence has blinded them to the problems we may face. The optimists retort that it is foolish to dwell on doomsday scenarios when, on balance, technology does us more good than harm. In this article we will consider both points of view so that you can form your own opinion. Just try to accept that what you are reading could really happen. If you showed a person from a hunter-gatherer society our world of abundance, home comfort and technology, it would seem to them an impossible, made-up world of magic. You need enough imagination to understand that the coming change could be just as huge.

Nick Bostrom describes three possible ways to apply the artificial Supermind:

As an oracle that can answer almost any question put to it. For example: “How do we create a more efficient internal combustion engine?” A primitive example of such an oracle is Google.

As a genie that carries out the tasks it is given, for example “Build a new, more efficient internal combustion engine,” and then waits for its next command.

As a sovereign that is given broad powers and can decide for itself how best to solve a problem, for example “Invent a faster, cheaper and safer way to transport people for personal use.”

These questions, which are incredibly difficult for us, would be to an artificial Supermind like the request “My pen fell off the table, help me,” which anyone can handle simply by picking the pen up from the floor and putting it back on the table.

Eliezer Yudkowsky, a member of the anxious group on our chart above, described this situation well:

“There are no hard problems, only problems that are hard for a certain level of intelligence. Even a small step up in intelligence moves some problems from “incredibly difficult” to “obvious,” and a large enough number of steps makes the solutions to all our problems obvious.”

Of all the enthusiastic scientists, inventors and businessmen representing an optimistic group, we will choose one as our guide to the world of bright ideas about the future.

That guide will be Ray Kurzweil. He leaves few people indifferent: most either believe him or call him a charlatan. But some fall in between. Douglas Hofstadter, for example, describes the ideas in Kurzweil's books this way: “It's as if you took a lot of good food and some dog excrement and blended it all up so that you can't possibly figure out what's good or bad.”

But whether or not you like Kurzweil's ideas, you have to admit that his record is impressive. He began inventing as a child and went on to create quite a few very useful things: the world's first flatbed scanner, the first scanner that converted text into synthesized speech (allowing blind people to read ordinary text), the well-known Kurzweil synthesizer (the first true electric piano), and the first commercial speech recognizer. He is also the author of five bestsellers. He is known for his bold predictions, which often come true, including one made in the late 1980s that by the year 2000 the Internet would have a serious impact on our lives. The Wall Street Journal called Kurzweil a “restless genius,” Forbes “the ultimate thinking machine,” and Inc. magazine “Edison's rightful heir.” Even Bill Gates weighed in, calling Ray “the best person I know at predicting the future of artificial intelligence.” In 2012, Larry Page invited Kurzweil to become Director of Engineering at Google. In 2011, Ray co-founded Singularity University, which is hosted at NASA and partly sponsored by Google. Not bad for one person, you must agree.

Why are we so interested in his biography? Because when you first hear Ray's opinion about the future that awaits us, he sounds crazy. The most surprising thing is that he is not: he is a very intelligent and well-educated man, not someone living in a world of his own invention. You may think he is wrong about the future, but he is certainly no fool. And the fact that Kurzweil's predictions have come true so often makes me happy, because I really want to live in the future as he sees it. I think I'm not alone. There are quite a few such believers in the singularity; Peter Diamandis and Ben Goertzel, for example, also count themselves as singularitarians. In their opinion, this is what awaits us in the coming century:

Time

Kurzweil believes that a strong AI will be created by 2029 and that by 2045 we will not only have an artificial Supermind but will already be living in the new world of the technological singularity. Until recently, his predictions about the pace of AI development seemed far too optimistic, to put it mildly. But the rapid progress of weak AI over the last 15 years suggests that reality is so far unfolding in line with Kurzweil's predictions. Even so, his predictions remain more optimistic than the median response in the survey conducted by Nick Bostrom (a strong AI by 2040 and an artificial Supermind by 2060).

For Kurzweil's prediction for 2045 to come true, three technological revolutions must take place: the biotechnological, the nanotechnological, and, most important, the AI revolution.

Before we continue the conversation about AI, I suggest a short excursion into nanotechnology.

Nanotechnological sidebar

Nanotechnology is the name for any manipulation of objects between 1 and 100 nanometers in size. A nanometer is a billionth of a meter. That range covers viruses (100 nm across), DNA (10 nm wide), and objects as small as large molecules such as hemoglobin (5 nm) or medium-sized molecules such as glucose (1 nm). If and when we master nanotechnology, the next step will be manipulating individual atoms, which are only one order of magnitude smaller (~0.1 nm).

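To get a feel for this scale, imagine the following: the International Space Station orbits about 431 km above the Earth, and if people were giants so tall that their heads reached the ISS, they would be about 250,000 times bigger than they are now. Enlarge the 1-100 nm range by the same factor of 250,000 and you get 0.25 mm to 2.5 cm. So nanotechnology is like a giant as tall as the ISS trying to build intricate objects out of parts between the size of a grain of sand and an eyeball. To reach the next level and manipulate individual atoms, the giant would have to handle objects about 1/400 of a centimeter across, so small that an ordinary person would need a microscope to see them.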
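The scaling in this comparison is plain multiplication, so it is easy to check. The sketch below is only an illustration: the factor of 250,000 and the 1-100 nm range come from the text, the ~0.1 nm atom size comes from the previous paragraph, and the function name `scaled_mm` is made up for this example.

```python
# Enlargement factor from the sidebar: a giant whose head reaches the ISS.
SCALE = 250_000

# Unit conversion: 1 millimetre = 1,000,000 nanometres.
NM_PER_MM = 1_000_000


def scaled_mm(size_nm: float, scale: int = SCALE) -> float:
    """Size in millimetres of an object of `size_nm` nanometres after enlargement."""
    return size_nm * scale / NM_PER_MM


if __name__ == "__main__":
    examples = [
        ("lower end of the nanotech range", 1),
        ("upper end of the nanotech range", 100),
        ("single atom (approximate)", 0.1),
    ]
    for label, size_nm in examples:
        print(f"{label}: {size_nm} nm -> {scaled_mm(size_nm):.3f} mm")
```

The two ends of the range come out at 0.25 mm and 25 mm (2.5 cm), and a single atom at about 0.025 mm, which is the same as 1/400 of a centimeter.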

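The idea was first raised by Richard Feynman in 1959: “The principles of physics, as far as I can see, do not speak against the possibility of maneuvering things atom by atom. It would be, in principle, possible … for a physicist to synthesize any chemical substance that the chemist writes down … How? Put the atoms down where the chemist says, and so you make the substance.” It is as simple as that. If you can work out how to move individual molecules or atoms around, you can make literally anything.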

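Nanotechnology first became a serious field in 1986, when the engineer Eric Drexler laid its foundations in his book «Engines of Creation: The Coming Era of Nanotechnology». Drexler himself suggests that anyone who wants to learn about the most current ideas in nanotechnology should read his 2013 book, Radical Abundance.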

Self-replication, in which a few nanobots build copies of themselves, seems like a good method only until an accident destroys all life on Earth. The same exponential growth that makes it possible to produce trillions of nanobots quickly is what makes self-replication so frightening. No system is immune to failure, and if the replication system malfunctions and does not stop once the required number of nanobots is reached, they will go on “multiplying”. Such nanobots would be able to use any carbon-containing material to build new nanobots, and all life on Earth is carbon-based. The Earth's biomass contains roughly 10^45 carbon atoms, and a nanobot would consist of roughly 10^6 carbon atoms, so 10^39 nanobots would consume all life on Earth. That takes only about 130 replication cycles, since 2^130 is roughly 10^39. Oceans of nanobots (the so-called “gray goo”, or gray mass) would flood the Earth. Scientists believe a nanobot could complete a replication cycle in about 100 seconds, which means all life on Earth could be destroyed within 3.6 hours.
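The arithmetic behind these figures can be checked with a short calculation. This is a minimal sketch, assuming the process starts from a single nanobot and that the population exactly doubles every cycle; the constants are the rough estimates quoted above, and the function names are made up for this example.

```python
# Rough estimates quoted in the text.
CARBON_ATOMS_IN_BIOMASS = 10**45
CARBON_ATOMS_PER_NANOBOT = 10**6
SECONDS_PER_CYCLE = 100


def nanobots_needed(biomass_atoms: int, atoms_per_bot: int) -> int:
    """Number of nanobots that would use up all the carbon in the biomass."""
    return biomass_atoms // atoms_per_bot


def doubling_cycles(target: int) -> int:
    """Smallest n such that 2**n >= target, starting from one nanobot (assumption)."""
    return (target - 1).bit_length()


def hours_to_consume(cycles: int, seconds_per_cycle: int) -> float:
    """Total time in hours for the given number of replication cycles."""
    return cycles * seconds_per_cycle / 3600


if __name__ == "__main__":
    bots = nanobots_needed(CARBON_ATOMS_IN_BIOMASS, CARBON_ATOMS_PER_NANOBOT)
    cycles = doubling_cycles(bots)
    hours = hours_to_consume(cycles, SECONDS_PER_CYCLE)
    print(f"nanobots needed: 10^{len(str(bots)) - 1}")
    print(f"replication cycles: {cycles}")
    print(f"time: {hours:.1f} hours")
```

Under these assumptions the script gives 130 cycles and about 3.6 hours, matching the numbers in the text. Integer arithmetic is used throughout so that the huge powers of ten are not rounded.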

Or consider another scenario. What if nanobots fell into the hands of terrorists who managed to reprogram them, so that the nanobots spread quietly across the Earth for weeks and then, at a chosen moment, began uncontrolled “reproduction” and destroyed life on Earth before we could even respond?

Such apocalyptic scenarios have been actively discussed in recent years, but the likelihood of things turning out this way is most probably greatly exaggerated. Eric Drexler, who coined the term “gray goo” (the gray mass), sent me a message on the subject of such horror stories: “People love scare stories, and this one belongs with the zombies. The idea itself eats brains.”

Once we master nanotechnology, we will be able to make a huge variety of things: devices, clothing, food, artificial blood cells, robots that destroy viruses and cancer cells, almost anything at all. In a world that has mastered nanotechnology, the cost of a material no longer depends on how scarce it is in nature or how hard it is to refine; the determining factor is the complexity of its atomic structure. In such a world, a diamond might be cheaper than a pencil eraser.

But we have not mastered nanotechnology yet. And it is still unclear whether we are overestimating or underestimating how hard the necessary technology will be to build. Nevertheless, we already seem to be on the verge of using nanotechnology on a massive scale. Kurzweil believes the nanotechnology revolution will arrive by the 2020s. Governments are aware of the potential of nanotechnology and are investing billions in its development (the United States, the European Union and Japan have together already invested more than $5 billion).

Just imagine a Supermind in control of an army of nanobots. Nanotechnology is something we came up with ourselves and are now actively developing. But remember that all our abilities are nothing compared with those of a Supermind. It may come up with something far more complex, something we may not even be able to understand. So when we think about the question “Will the AI revolution be good or bad for us?”, we must understand that it is almost impossible to overestimate the scale of the changes AI will bring to our lives. If the reasoning that follows seems too unrealistic to you, just remember that a Supermind can do things we cannot even imagine, things that will simply be beyond our comprehension, and we cannot even remotely predict what they might be.

What AI can do for us

A Supermind, armed with the latest technology, which it will of course invent itself, will be able to solve any problem that humanity faces. Global warming? The Supermind can first cut carbon dioxide emissions by offering us better ways to generate energy that do not require fossil fuels, and then propose a way to remove the carbon dioxide that has already accumulated in the air. Cancer and other diseases? They will not be a problem for the AI either; it can make a breakthrough in medicine. Hunger? The Supermind will be able to assemble any kind of food with an army of nanobots, and that food will be identical to the real thing at the molecular level, which means it will in fact be real. Nanobots can turn a pile of garbage into a steak or any other food, which need not have the shape of ordinary food (just imagine a huge cube-shaped apple), and the food will then be distributed using the latest transport. The Earth will, of course, become a paradise for animals, whose exploitation by humans will come to an end. The Supermind will also be able to save endangered species and even bring back extinct ones from preserved DNA. It will solve our economic problems and the problems of international trade with the same ease, and even the hardest questions of philosophy and ethics will be within its reach.

But there is one more thing within the Supermind's power, something so tantalizing that it completely changed my outlook: the Supermind can overcome death.

A few months ago I wrote about the envy I felt toward more advanced civilizations that might already have conquered death. I did not know then that I would later write an article that made me believe I might live to see the moment when humanity finally defeats death. I think I can convince you of this too.

Evolution has no reason to extend the lives of living beings. It is enough for an individual to live just long enough to produce offspring and raise them to the age at which they can find their own food and defend themselves. So mutations that significantly extended life brought no advantage in natural selection. As a result, we are, in the words of W. B. Yeats, “a soul fastened to a dying animal.”

Since everyone before us has died sooner or later, we are convinced that death is inevitable. We think of aging like the passage of time: the clock keeps ticking and there is no way to stop it. But that is not so. Richard Feynman once wrote:

It is one of the most remarkable things that in all of the biological sciences there is no clue as to the necessity of death. If you say we want to make perpetual motion, we have discovered enough laws as we studied physics to see that it is either absolutely impossible or else the laws are wrong. But there is nothing in biology yet found that indicates the inevitability of death. This suggests to me that it is not at all inevitable and that it is only a matter of time before the biologists discover what it is that is causing us the trouble and that this terrible universal disease or temporariness of the human's body will be cured.

In fact, aging is not tied to time: time can keep moving while aging is stopped. Aging is a process of gradual physical wear. A car also wears out gradually, but is its aging inevitable? If you replace parts as they fail, the car can run forever. And in this respect the human body is no different from a car, only a little more complicated.

Kurzweil talks about smart nanobots, connected to one another over Wi-Fi, that travel through the bloodstream doing all kinds of maintenance work on the body and gradually replacing damaged cells. If this process (or another method the Supermind comes up with) is perfected, it will not only keep the body healthy but also make it possible to reverse aging. After all, the difference between a 60-year-old body and a 30-year-old body comes down to a set of physical characteristics that nanobots could change. A Supermind could build a machine that resets age: a 60-year-old walks in, and a person with the skin of a 30-year-old walks out. Even a brain suffering from serious disease could be refreshed this way; the Supermind would surely figure out how to do it without damaging the person's personality and memories. A 90-year-old suffering from dementia could step into this machine and come out renewed, ready to continue a career or even start a new one. It sounds too fantastic, but the body is just a collection of atoms, and a Supermind would surely be able to manipulate any atoms and atomic structures with ease, so it is not such a fantasy.

Kurzweil also believes that artificial materials will gradually be integrated into the biological body. At first, organs will be replaced with more efficient artificial counterparts that last longer than biological ones. Then we can begin redesigning the body to suit our needs: red blood cells can be replaced with perfected nanobots that do the same job but propel themselves, which would let us do without a heart altogether. We can even get to the brain and enhance its computational abilities so much that a person will be able to think billions of times faster than now. The brain will also have direct access to external devices, which will let people hold conferences over the Internet without saying a word, exchanging thoughts directly.

Human capabilities will be practically limitless. People have already separated sex from its original function, turning it into entertainment as well. Kurzweil believes the same fate awaits food. Nanobots will deliver the necessary nutrients to cells and remove waste products and other unwanted substances from the body. Robert Freitas has already designed artificial blood cells that could allow a person to run at full speed without taking a single breath, so you can imagine what a Supermind could come up with to improve our physical abilities. Virtual reality will take on a new meaning, since nanobots will be able to intercept the signals of our nervous system and replace the incoming information, creating entirely new sensations, sounds and smells.

Kurzweil believes that in the end humanity will replace its biological organs with artificial ones entirely. We will then look back on biological material as terribly primitive and find it hard to believe that humans were once made of it. We will read about that dangerous, primitive era when diseases, accidents and aging could kill a person against their will. We will live in a time when artificial and biological intelligence have merged. This is how, according to Kurzweil, we will conquer biology and become eternal and immortal; this is the “other pole of the magnet” that he believes we will be drawn to. And he is convinced it will happen soon.

You will certainly not be surprised that Kurzweil's ideas meet with serious criticism. His prediction of a technological singularity by 2045 is ridiculed by many and has earned labels such as “the rapture of the nerds” and “intelligent design for 140 IQ people”. Many criticize his overly optimistic predictions about the pace of progress, his level of understanding of the human body and brain, and his application of Moore's law, which normally describes advances in hardware, to a wide range of other things, including software. For every expert who believes Kurzweil is right, there are three who believe he is wrong.