Predictions of the future in the film "2001: A Space Odyssey": 50 years later

Throughout this article, links from the film frames lead to videos of the corresponding scenes.

A glimpse of the future

It was 1968. I was 8 years old. The "space race" was in full swing. For the first time, a space probe had recently landed on the surface of another planet (Venus [this was the Soviet automatic interplanetary station Venera-3 / translator's note]). I eagerly studied everything connected with space.

Then, on April 3, 1968 (May 15 in Britain), the film "2001: A Space Odyssey" was released, and I really wanted to see it. So in the early summer of 1968, I went to the cinema for the first time in my life. I was taken to a matinee, and I was practically the only person in the hall. To this day I remember sitting in a plush seat, waiting impatiently for the curtain to rise and the film to begin.

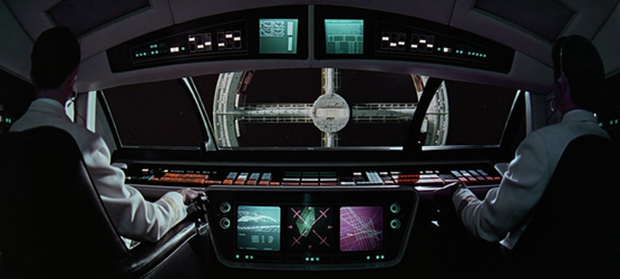

The film began with an impressive alien sunrise. But what came next? These were not space scenes. These were landscapes with animals. I was confused, and a little bored. But just as I was starting to worry, a bone flew into the air and turned into a spacecraft, and soon a waltz was playing and a large, rotating space station appeared on the screen.


The next two hours made a huge impression on me. It was not the spacecraft themselves (by then I had already seen plenty of them in books, and had even drawn my own versions). And at that time I was not particularly interested in the aliens. What was new and exciting for me was the whole atmosphere of a world full of technology, and the idea of what might be possible, with all those bright screens busily doing their work and computers that seemed to control everything.

It would be another year before I saw a real computer for the first time. But those two hours in 1968 spent watching “2001” defined a vision of what the computational future could be, one that I carried with me for many years.

I think it was during the film's intermission that the refreshment seller, perhaps touched by the sight of a lone child watching the film so intently, gave me a “movie program” about the film. Fifty years later I still have that program, complete with a food stain and the faded handwriting of an eight-year-old who recorded (with misspellings) where and when I saw the movie:

What really happened

A lot has happened over the past 50 years, especially in technology, and it is fascinating for me to watch “2001” again and compare its predictions with what actually happened. Of course, some of what was built in the last 50 years was built by people like me, who were influenced to a greater or lesser degree by “2001”.

When Wolfram|Alpha launched in 2009, showing some features similar to HAL, we included a small reference to “2001” in our error message (needless to say, one memorable piece of early feedback was the question: “How did you know that my name is Dave?”):

One of the obvious unfulfilled predictions of “2001” was routine space travel in comfortable surroundings. But, as with many of the film's other predictions, it does not feel as though this one was wrong; it is just that 50 years later we have not got there yet.

What about the computers in the movie? They have lots of flat screens, much like today's. One obvious difference, though, is that the film has a separate physical display for each functional area; the idea of windows, dynamically changing regions of a screen, had not yet emerged.

Another difference is how the computers are controlled. Yes, you can talk to HAL. But otherwise there are lots and lots of mechanical buttons. To be fair, cockpits today still have plenty of buttons, but the display is now the central element. And, yes, there are no touchscreens or mice in the movie. (Both had actually been invented a few years before the film was made, but neither was widely known.)

There are also no keyboards to be seen (and in the high-tech, computer-filled spacecraft headed for Jupiter, the astronauts write with pens on paper clipboards; it was prescient, though, that no slide rules or tapes appear on screen, although one shot briefly shows a printout that looks awfully like a punched card). Of course, computers did have keyboards in the 1960s. But at that time very few people could type, and there was no reason to think that would change. (Personally, I like using tools, and even in 1968 I regularly used a typewriter, though I did not know any other child who did. My hands at the time were not big or strong enough to do much more than press the keys with one finger. That experience came in handy decades later with the arrival of smartphones.)

What about the content of the screens? That may have been my favorite part of the whole movie. The displays were so graphical, and they conveyed so much information so quickly. I had seen plenty of diagrams in books, and had enthusiastically drawn many of my own. But in 1968 it was amazing to imagine a computer generating information and presenting it graphically so quickly.

Of course, there was television then (although color only arrived in Britain in 1968, and I had seen only black and white). But a television did not generate images; it just showed what the camera saw. There were oscilloscopes too, but they showed only a single dot tracing a line across the screen. So the computer screens in “2001” were, at least for me, something completely new.

At the time, it did not seem strange that the film contained so many printed instructions (for using the picture telephone, the zero-gravity toilet, or the hibernation modules). Today such instructions would be shown on a screen. But when “2001” was made, the idea of word processing, and of displaying text to be read on a screen, was still a few years away, probably not least because people then thought of computers as machines for calculation, and there did not seem to be anything to calculate about text.

In “2001,” a great many things are shown on the screens. And although the idea of dynamically movable windows is not used, individual screens that have nothing to show switch to a kind of “icon” mode, displaying codes in large letters such as NAV, ATM, FLX, VEH, or GDE.

When the displays are active, they sometimes show tables or numbers, and sometimes lightly animated versions of all sorts of textbook-style diagrams. Some show 1980s-style three-dimensional wireframe graphics (“what is the position of the spacecraft?”, and so on), perhaps modeled on analog aircraft control systems.

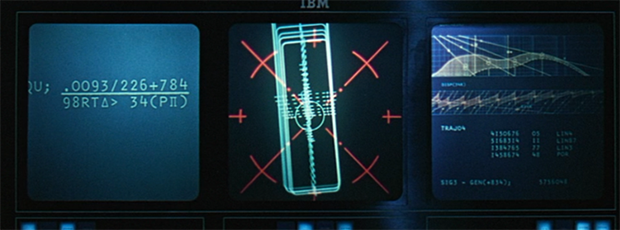

But often there is something else, and sometimes it fills the whole screen: something that looks like code, or a mixture of code and mathematics.

It is usually in a fairly “modern-looking” sans-serif font (actually the Manifold font from IBM Selectric electric typewriters). Everything is in capital letters. The asterisks, parentheses, and names like TRAJ04 resemble early Fortran code (except that the abundance of semicolons is more like IBM's PL/I language). There are also superscripts and decimals.

Studying these images now is rather like trying to decipher an alien language. What did the filmmakers mean by them? Some fragments make sense to me. But many look random and meaningless: formulas with no meaning, full of useless numbers written to high precision. Given the care that went into making “2001”, this seems to be one of its few shortcomings, although perhaps “2001” started the long and unfortunate tradition of showing meaningless code in movies. (A recent counterexample is the code for analyzing the alien language in the movie “Arrival”, written by my son Christopher; it is real Wolfram Language code that actually produces the visualizations shown.)

But does it make sense to show code on real displays at all, as we see in “2001”? After all, the astronauts are not supposed to be building the spacecraft; they are only operating it. For most of the history of computing, code was something that people wrote and computers read. But one of my goals in creating the Wolfram Language was to make a true computational communication language, high-level enough that not only computers but also people can read it.

Granted, some of the procedures carried out by the spacecraft could be described in words. But one of the goals of the Wolfram Language is to express a procedure in a form that matches human computational thinking. So, yes, on the first real crewed spacecraft to Jupiter it will make sense to display code, although it will not look quite like the code in “2001”.

Accidents of history

Over the years I have watched “2001” several times, although not in 2001 itself (that year was devoted to finishing my major work, “A New Kind of Science”). But several things in the film “2001” very obviously do not match the real year 2001, and not just the very different state of space travel.

One of the most obvious is that the hairstyles, clothes, and general formality look wrong. Of course, these would have been very hard to predict. But one could perhaps have guessed (given the hippie movement and so on) that clothing styles would become less formal. Still, I clearly remember that in 1968 one was expected to dress up even to fly on an airplane.

Another thing that looks wrong today is that nobody in the film has a personal computer. Of course, in 1968 there were only a few hundred computers in the whole world, each weighing a significant fraction of a ton, and nobody imagined that people would one day have personal computers they could carry around [in Clarke's novel, published after the film, handheld computers do appear / translator's note].

As it happens, in 1968 I had recently been given a small plastic mechanical computer (a Digi-Comp I), which I could (with great difficulty) coax into performing three-bit binary operations. But I think it is safe to say that I had no idea how this could scale up to the computers in “2001”. And when I saw “2001”, I imagined that to get access to technology like that shown in the film, I would need to get a job at somewhere like NASA when I grew up.

What I certainly could not have foreseen, and I am not sure anyone could, is that consumer electronics would become so small and so cheap, and that access to computers and computation would become so widespread.

There is a scene in the film in which the astronauts try to track down a fault in an electronic component. Lots of beautiful engineering-style displays are shown. But they are all based on printed circuit boards with discrete components. There are no integrated circuits or microprocessors, which is not surprising, since in 1968 these had basically not been invented yet. (Correctly, though, no vacuum tubes appear. The prop that stands in for the equipment in the film was a gyroscope.)

It is interesting to notice all the small features of technology that the film did not predict. For example, when commemorative photographs are taken in front of the monolith on the Moon, the photographer keeps working the camera after each shot, presumably to wind the film on. The idea of digital cameras that capture pictures electronically had not yet appeared.

In the history of technology, some developments seem inevitable, even if they sometimes take decades to arrive. Videophones are an example. Early versions appeared in the 1930s. In the 1970s and 80s there were attempts to commercialize them. But even in the 1990s they remained exotic, although I remember that in 1993, with some effort, I managed to rent a pair of them, and they even worked over ordinary telephone lines.

The space station in “2001” has a Picturephone, complete with an AT&T logo, though it is the old Bell System logo, which really does look like a bell. As it happens, when “2001” was being made, AT&T really did have a project called Picturephone in development.

Of course, the Picturephone in “2001” is not a cell phone or a mobile device. It is a built-in kiosk: a pay Picturephone. In actual history, cell phones took off before video chat was commercialized, so payphone technology and video chat never crossed paths.

It is also interesting that in the film the Picturephone is operated with push buttons, laid out exactly as they are today (though without * and #). Push-button phones did exist in 1968, although they were not yet widespread. And, of course, given how our technology turned out, I do not think anyone today has had to press mechanical buttons to make a video call.

A long list of instructions is printed for the Picturephone, but in practice, just as today, using it seems quite simple. In 1968, even direct long-distance dialing (without an operator) was still fairly new, and was not yet possible to every country in the world.

To use the Picturephone in "2001" you have to insert a credit card. Credit cards had already existed for some time by 1968, although they were not very common. The idea of reading a credit card automatically (for example, with a magnetic stripe) was developed in 1960, although it did not become widespread until the 1980s. (I remember that in Britain in the mid-1970s, my first ATM card was just a piece of plastic with holes in it, like a punched card, which was not the most secure arrangement imaginable.)

At the end of the Picturephone call, the film shows the cost: $1.70. Adjusted for inflation, that is about $12 today. By the standards of modern mobile phones, or of video chat over the Internet, that is very expensive. But for a modern satellite phone it is not far off, even for an audio call. (Today's handheld satellite phones do not support the data rates needed for video calls, and in-flight networks on airplanes can barely cope with them.)
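The inflation adjustment can be checked with a few lines of arithmetic. The sketch below assumes approximate annual-average US CPI-U index values for 1968 and 2018; those index figures and the function name are assumptions of this example rather than anything stated in the article, and a different index or end year would shift the result somewhat.

```python
# Rough inflation adjustment of the Picturephone call price shown in the film.
# ASSUMPTION: approximate annual-average US CPI-U values (1982-84 = 100);
# they are illustrative inputs, not figures taken from the article.
CPI_1968 = 34.8
CPI_2018 = 251.1


def adjust_for_inflation(amount: float, cpi_then: float, cpi_now: float) -> float:
    """Scale a historical dollar amount by the ratio of two price-index values."""
    return amount * cpi_now / cpi_then


call_cost_1968 = 1.70
call_cost_2018 = adjust_for_inflation(call_cost_1968, CPI_1968, CPI_2018)
print(f"${call_cost_1968:.2f} in 1968 is roughly ${call_cost_2018:.2f} in 2018 dollars")
```

With these assumed index values the result lands close to the "about $12" quoted above.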

On the space shuttle (or perhaps space plane), the cabin looks very much like that of a modern airliner, which is not surprising, since planes like the Boeing 737 already existed in 1968. But one correctly predicted modern touch is the televisions in the seat backs, controlled, of course, by rows of buttons (and showing programs that were futuristic for the 1960s, such as a televised women's judo match).

An interesting fact about “2001” is that almost every major scene (except those involving HAL) shows food being consumed. But how would food be served in 2001? It was assumed that, like everything else, this would be automated, so the film shows a whole array of elaborate food dispensers. As it turns out, food delivery today still relies heavily on human labor.

In the part of the film about the journey to Jupiter there are “hibernation capsules” with people hibernating inside. Above the capsules are displays of vital signs that look very much like modern medical monitors. In a sense this was not a difficult prediction, since even in 1968 there were already oscilloscope-like EEG displays.

Of course, in real life nobody has yet figured out how to put people into hibernation. That this, like cryonic freezing, should be possible has been predicted for something on the order of a hundred years. I believe that, like cloning and gene editing, it will arrive with the invention of a few clever tricks.

In “2001,” when one of the characters arrives at the space station, he goes through immigration control (called “Documentation”), perhaps imagined as some kind of extension of the 1967 “Outer Space Treaty”. Notably, in the film the clearance process is automated and uses biometrics, specifically voice identification. The US emblem is identical to the one used on passports, but, as was typical before the 1980s, the system asks for “surname” and “Christian name” [rather than, presumably, “last name” and “first name” / translator's note].

Primitive voice recognition systems already existed even in the 1950s (“what digit is this?”), and the idea of identifying a speaker by voice was well known. What was not at all obvious was that serious voice systems would need computing power that only became available toward the end of the 2000s.

Only in the last few years have automatic biometric immigration control systems begun to appear at airports, and they use faces and sometimes fingerprints rather than voice (yes, a system in which many people are speaking at once in different booths would probably not work well).

In the film, the kiosk has buttons for different languages: English, Dutch, Russian, French, Italian, Japanese. It would have been very hard to predict a more appropriate list for 2001.

And although 1968 was in the middle of the Cold War, the film correctly portrays international use of the space station, although, as in Antarctica today, it shows separate Moon bases for different countries. Of course, the Soviet Union is still present in the film. But the fact that the Berlin Wall would fall 21 years after 1968 is not the kind of thing that has ever seemed predictable in human history.

The film shows the logos of many companies. The space shuttle proudly carries the Pan Am brand. In at least one scene, its instrument panel bears the name “IBM”. (Another IBM logo is visible during the spacewalk near Jupiter.) The space station has two hotels: a Hilton and a Howard Johnson. There is also a Whirlpool dispenser of frozen meals (in the galley of the ship heading to the Moon). And there is an AT&T (Bell System) Picturephone, an Aeroflot bag, and BBC news (the channel is called BBC 12, although in reality the expansion over the past 50 years has only gone from BBC 2 to BBC 4).

Obviously, many companies have come and gone over the past 50 years, but it is interesting how many of those shown in the film still exist, at least in some form. Many of the logos have not even changed, with the exceptions of AT&T and the BBC, as well as the IBM logo, to which stripes were added in 1972.

It is also interesting to look at the fonts used in the film. Some of them seem dated today, while others (such as the title font) look completely modern. Oddly, at times over the past 50 years some of those “modern” fonts have themselves looked old. But that, I suppose, is the nature of fashion. It is worth remembering that even the serif fonts of ancient Roman stone inscriptions can still look perfectly modern.

Another change since 1968 is in how people talk and which words they use. The change is especially noticeable in the technical dialogue. “We are running cross-checking routines to determine the reliability of this conclusion” sounds fine for the 1960s, but not for today. There is mention of the risk of “social disorientation” without “adequate preparation and conditioning”, which betrays a behaviorist view of psychology that, at the very least, would not be phrased that way today.

It is rather charming to hear a character say that when they “phone” the Moon base, all they get is “a recording which repeats that the phone lines are temporarily out of order”. We might still say something similar about landlines on Earth today, but for a Moon base one would expect some automatic system to detect that the network is down, rather than a person placing a call and listening to a recorded message.

Of course, if a character in the film had said that they “couldn't ping their servers” or were “seeing 100% packet loss”, it would have been completely incomprehensible to audiences of the 1960s; those concepts of the digital world had not yet been invented (although their elements existed).

What about HAL?

The most notable and memorable character in “2001” is surely the HAL 9000 computer, described (exactly as one might describe it today) as “the latest in machine intelligence”. HAL talks, reads lips, plays chess, recognizes faces in sketches, comments on artwork, does psychological evaluations, reads sensors and cameras throughout the ship, predicts electronics failures, and, importantly for the plot, displays human-like emotional responses.

It may seem surprising that all these AI-like capabilities were predicted in the 1960s. But at the time nobody yet thought that AI would be hard to create; it was widely assumed that before long computers would be able to do almost everything people could, only better, faster, and on a larger scale.

But by 1970 it was already clear that things would not be so simple, and before long the whole field of AI had acquired a bad reputation, with the idea of creating something like HAL coming to seem even more fantastical than digging up alien artifacts on the Moon.

In the film, HAL's birthday is given as January 12, 1992 (although in the book version of the story it is 1997). In 1997, in Urbana, Illinois, HAL's fictional birthplace (and, as it happens, the city where my company is headquartered), I took part in a celebration of HAL's fictional birthday. People talked about all sorts of technologies relevant to HAL. But what struck me most was how far expectations had fallen. Almost nobody even wanted to mention “general-purpose AI” (probably for fear of being thought crazy); instead, people focused on very narrow problems, solved with specialized programs.

In the 1960s I read a lot of popular science (and a little science fiction), and my default assumption was that HAL-class AIs would certainly exist someday. I even remember that in 1972, when I had to give a talk to my whole school, I chose a topic about the ethics of AI. I am afraid that talk would seem naive and misguided to me today (probably partly due to the influence of “2001”). But I was only 12. Today I find it interesting that even then AI seemed an important subject to me.

For the rest of the 1970s I concentrated on physics (which, unlike AI, was flourishing at the time). AI never quite left my mind, though, for example when I wanted to understand how brains might or might not be related to statistical physics or to the formation of complex structures. AI regained its importance for me in 1981, when I had launched my first computer language, SMP, and seen how well it handled mathematical and scientific computation. I began to wonder what it would take to be able to compute (and know) everything.

My immediate assumption was that this would require brain-like capabilities, and therefore general-purpose AI. But having just lived through great advances in physics, I was not much daunted by that. I even had a fairly definite plan. SMP, like the Wolfram Language today, was based on the idea of defining transformations to apply whenever an expression matches a particular pattern. I had always viewed this as a rough idealization of certain forms of human thinking. And I thought that general-purpose AI might simply require a way to match approximately rather than exactly (for example, “this picture shows an elephant, even though its pixels are not exactly the same as in the sample”).
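The idea of "transformations applied when an expression matches a pattern" can be sketched in a few lines. The toy rewriter below is only an illustration of exact pattern matching; the tuple representation, the trailing-underscore convention for pattern variables, and the function names are all assumptions of this example and do not reflect how SMP or the Wolfram Language are actually implemented.

```python
# Toy pattern-based rewriting. Expressions are nested tuples such as
# ("plus", "x", 0); pattern variables are strings ending in "_".
# Illustrative sketch only, not the internals of SMP or the Wolfram Language.

def match(pattern, expr, bindings=None):
    """Return a dict of variable bindings if expr fits pattern, else None."""
    bindings = dict(bindings or {})
    if isinstance(pattern, str) and pattern.endswith("_"):
        name = pattern[:-1]
        if name in bindings and bindings[name] != expr:
            return None  # a repeated variable must match the same subexpression
        bindings[name] = expr
        return bindings
    if isinstance(pattern, tuple) and isinstance(expr, tuple):
        if len(pattern) != len(expr):
            return None
        for p, e in zip(pattern, expr):
            bindings = match(p, e, bindings)
            if bindings is None:
                return None
        return bindings
    return bindings if pattern == expr else None


def substitute(template, bindings):
    """Fill the pattern variables in template with their bound values."""
    if isinstance(template, str) and template.endswith("_"):
        return bindings[template[:-1]]
    if isinstance(template, tuple):
        return tuple(substitute(t, bindings) for t in template)
    return template


def rewrite(expr, rules):
    """Rewrite subexpressions first, then apply rules at the top until none fits."""
    if isinstance(expr, tuple):
        expr = tuple(rewrite(e, rules) for e in expr)
    for pattern, replacement in rules:
        bindings = match(pattern, expr)
        if bindings is not None:
            return rewrite(substitute(replacement, bindings), rules)
    return expr


RULES = [
    (("plus", "a_", 0), "a_"),    # a + 0 -> a
    (("times", "a_", 1), "a_"),   # a * 1 -> a
    (("times", "a_", 0), 0),      # a * 0 -> 0
]

# (x * 1) + (y * 0) simplifies step by step down to x
print(rewrite(("plus", ("times", "x", 1), ("times", "y", 0)), RULES))
```

Every match here is exact; the approximate matching described in the paragraph above (the elephant whose pixels differ from the sample) is precisely what this kind of scheme does not provide.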

To that end I tried various schemes, one of which was neural networks. But I could not manage to formulate experiments simple enough for there to be even a clear definition of success. By simplifying neural networks and a couple of other kinds of systems, I ended up with cellular automata, which quickly led me to several discoveries that launched me on a long journey of exploring the computational universe of simple programs, and I set aside approximate pattern matching and the problem of AI.
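For readers who have not met cellular automata, here is a minimal sketch of a one-dimensional, two-state, nearest-neighbor automaton. The choice of rule 30, the grid width, the wrap-around edges, and the function names are assumptions made for this illustration; the article does not single out a particular rule at this point.

```python
# Elementary cellular automaton: each cell is 0 or 1, and its next value depends
# only on itself and its two neighbors. The rule number (0-255) encodes the
# outcome for each of the 8 possible neighborhoods.

def step(cells, rule):
    """Compute the next row of cells (edges wrap around)."""
    n = len(cells)
    out = []
    for i in range(n):
        left, center, right = cells[(i - 1) % n], cells[i], cells[(i + 1) % n]
        index = (left << 2) | (center << 1) | right  # neighborhood as a 3-bit number
        out.append((rule >> index) & 1)              # look up that bit of the rule
    return out


def run(rule, width=31, steps=15):
    """Evolve from a single 1 in the middle and return every row."""
    cells = [0] * width
    cells[width // 2] = 1
    history = [cells]
    for _ in range(steps):
        cells = step(cells, rule)
        history.append(cells)
    return history


for row in run(30):
    print("".join("#" if c else "." for c in row))
```

The whole program is a lookup in an 8-bit table, yet with rule 30 the printed triangle quickly becomes irregular, which is the kind of behavior that makes such simple programs interesting to explore.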

By the time of HAL's fictional birthday in 1997, I was in the middle of an intense 10-year process of studying the computational universe and writing “A New Kind of Science”, and it was only out of great respect for “2001” that I agreed to take a day off from my seclusion to talk about HAL.

And as it happened, just three weeks earlier there had been news of the successful cloning of Dolly the sheep.

And, as I pointed out, just as with general-purpose AI, people had been discussing mammalian cloning for years; it was considered impossible, and almost nobody worked on it, until the success with Dolly. I was not sure what kind of discovery or idea would lead to progress in AI. But I was sure that it would happen.

Meanwhile, from my study of the computational universe I had formulated the “Principle of Computational Equivalence”, which has important things to say about AI. At some level, it says that there is no magic line separating intelligence from mere computation.

Encouraged by this, and armed with the Wolfram Language, I began to think again about my goal of solving the problem of computational knowledge. It was not easy, but after many years of work, in 2009 the result was Wolfram|Alpha: a general computational knowledge system that knows a lot about the world. And particularly after it was integrated into systems with voice input and output, such as Siri, it began in many ways to resemble HAL.

But HAL in the movie had more capabilities. Naturally, he had specific knowledge about the spacecraft he controlled, much like the custom Enterprise Wolfram|Alpha systems now running in many large corporations. But he had other capabilities too, such as visual recognition.

As computer science developed, such things had turned into tasks that “computers simply cannot do”. To be fair, there had been good practical progress on things like text and face recognition. But it was not general. And then, in 2012, a neural network unexpectedly turned out to perform very well at standard image recognition tasks.

It was a strange situation. Neural networks had been discussed since the 1940s, and enthusiasm for them had risen and fallen repeatedly over the decades. But suddenly, just a few years ago, they really started working. And many “HAL-like” tasks that had previously seemed out of reach suddenly became achievable.

“2001” mentions the idea that HAL was not “programmed” but somehow “trained”. HAL even mentions at one point that he had a (human) teacher. Perhaps the gap between HAL's creation in 1992 and his deployment in 2001 was meant to correspond to a human-like period of learning. In the book, Arthur C. Clarke probably changed the birth year to 1997 because he decided that nobody would be using a 9-year-old computer.

But the most important thing that has made modern machine learning systems work is that they do not train at human speed. They are fed millions or billions of examples all at once, and then they burn processor time, repeatedly adjusting themselves to fit the examples better and better. One can imagine that an “active learning” machine might find the examples it needs in an environment resembling a classroom, but that is not how the most important successes in modern machine learning have been achieved.
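The "feed it examples, then burn processor time improving the fit" loop can be shown at miniature scale. The sketch below fits a straight line to a handful of examples by gradient descent; the data, the learning rate, the step count, and the names are invented for this illustration, and real systems differ enormously in scale and architecture.

```python
# Fit y = w*x + b to example pairs by gradient descent on mean squared error.
# The examples, learning rate, and step count are made up for illustration.

examples = [(x, 2.0 * x + 1.0) for x in range(-5, 6)]  # drawn from y = 2x + 1


def loss(w, b):
    """Mean squared error of the line over all examples."""
    return sum((w * x + b - y) ** 2 for x, y in examples) / len(examples)


def train(steps=2000, learning_rate=0.01):
    w, b = 0.0, 0.0  # start knowing nothing
    for _ in range(steps):
        # Gradient of the mean squared error with respect to w and b
        grad_w = sum(2 * (w * x + b - y) * x for x, y in examples) / len(examples)
        grad_b = sum(2 * (w * x + b - y) for x, y in examples) / len(examples)
        w -= learning_rate * grad_w  # nudge the parameters downhill
        b -= learning_rate * grad_b
    return w, b


w, b = train()
print(f"w = {w:.3f}, b = {b:.3f}, loss = {loss(w, b):.6f}")
```

Nothing in the loop resembles a teacher explaining anything: the parameters simply drift toward whatever reduces the error on the examples, which is the contrast with human-speed learning drawn above.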

So can machines now do what HAL does in the movie? Flying a spacecraft presumably involves many tasks, but most of the HAL tasks actually shown in the movie seem essentially human. And most of them are well suited to modern machine learning, with more and more of them being successfully implemented every month.

But what about stitching all these tasks together to create a “complete HAL”?

One can imagine a giant neural network that is “trained on every aspect of life”. But this does not seem like the right approach. After all, if we are doing celestial mechanics to work out the trajectory of a spacecraft, we do not need to do it by looking for matching examples; we can do it by actual calculation, using the achievements of mathematics.
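As a small illustration of "actual calculation" in celestial mechanics, the sketch below estimates the one-way travel time of an idealized Hohmann transfer from Earth's orbit to Jupiter's, using Kepler's third law in solar units (period in years equals semi-major axis in AU raised to the power 3/2). The circular coplanar orbits, the 5.2 AU figure for Jupiter, and the function names are simplifying assumptions of this example; it says nothing about the trajectory flown in the film.

```python
# Idealized Hohmann transfer time between two circular, coplanar solar orbits.
# ASSUMPTIONS: circular orbits, Jupiter at about 5.2 AU, no planetary gravity.

EARTH_ORBIT_AU = 1.0
JUPITER_ORBIT_AU = 5.2


def orbital_period_years(semi_major_axis_au: float) -> float:
    """Kepler's third law for orbits around the Sun: T^2 = a^3 (years, AU)."""
    return semi_major_axis_au ** 1.5


def hohmann_transfer_years(r1_au: float, r2_au: float) -> float:
    """Half the period of the ellipse that touches both circular orbits."""
    transfer_axis = (r1_au + r2_au) / 2
    return orbital_period_years(transfer_axis) / 2


trip = hohmann_transfer_years(EARTH_ORBIT_AU, JUPITER_ORBIT_AU)
print(f"Idealized Earth-to-Jupiter transfer: about {trip:.2f} years")
```

No examples were searched and nothing was trained; one formula gives the answer directly, which is the point of the paragraph above.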

We need our HAL to know a lot about a lot of things, and to be able to compute a lot of things, including things that involve human-like understanding and judgment.

In the book version of “2001”, the name HAL stood for “Heuristically programmed ALgorithmic computer”. Arthur C. Clarke explained how it worked as follows: “it can work according to an already prepared program, or search for better solutions, so that you get the best possible result.”

And in a sense this is a pretty good description of what I have been doing for 30 years with the Wolfram Language. The “already prepared programs” are an attempt to capture all the systematic knowledge about computation and about the world that our civilization has accumulated.

But there is also the concept of searching for new programs. And the science I have done has led me to spend a lot of time searching for programs in the computational universe of all possible programs. We have found useful programs this way many times, although the process is not as systematic as one would like.

In recent years the Wolfram Language has also incorporated modern machine learning, which is likewise a search for programs, though in a restricted domain, defined for example by the weights of a neural network, and set up so that results can be improved incrementally.

Could we build HAL today with the Wolfram Language? I think we could get close. It seems well within reach to talk to HAL in natural language about all sorts of relevant things, and to have it use knowledge-based computation to control and analyze everything about the spacecraft (including, for example, simulating its components).

The “computer as conversational partner” side is less developed, not least because it is unclear what the objective of such a thing would be. But I hope that in the next few years, in part to support applications like computational smart contracts (and, yes, it would have been good to have one set up for HAL), a general-purpose platform for such things will emerge.

Incapable of error

Do computers make mistakes? When the first electronic computers were built in the 1940s and 50s, hardware reliability was the big problem. Did the electrical signals do what they were supposed to, or were they disrupted, say, because a moth (“bug”) had flown into the computer?

By the time mainframes were developed in the 1960s, similar equipment problems were already under control. And in a sense, one could say (and marketing did) that computers were “absolutely reliable.”

HAL echoes this in "2001": “The 9000 series is the most reliable computer ever made. No 9000 computer has ever made a mistake or distorted information. We are all, by any practical definition of the words, foolproof and incapable of error.”

From a modern point of view, such statements seem absurd. Everyone knows that computer systems, or more precisely software systems, inevitably have bugs. But in 1968 bugs were not really understood.

After all, computers were supposed to be perfect logical machines. And so, the reasoning went, they must work perfectly. If something went wrong, it must be, as HAL says, “attributable to human error”. Or, in other words, if people were smart and careful enough, computers would always “do the right thing”.

When Alan Turing wrote his theoretical paper in 1936 to show that universal computers were possible, he in effect wrote a program for his proposed universal Turing machine. And even that very first program (just a page long) had bugs.

But, one might say, if you try hard enough, surely you can get rid of all the mistakes. Here is the problem: to do that, you have to foresee every aspect of what the program could do. But in a sense, if you were able to do that, you would hardly need the program in the first place.

And in general, any program that does something non-trivial will most likely show what I call computational irreducibility: there is no systematic shortcut for working out what it does. To find out what it does, you have no choice but to run it and see. Sometimes this is even a desirable property, for example if you are creating a cryptocurrency whose mining is supposed to require irreducible computation.

And if a computation shows no irreducibility, that is a sign that it is not being done as efficiently as it could be.
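To make "run it and see" concrete, here is a minimal sketch in Python. It assumes the elementary cellular automaton known as Rule 30 as the example program; that choice, and the helper name `rule30_center_column`, are illustrative assumptions rather than something taken from the text above. The update rule is a single line, yet the only practical way the sketch obtains the value of the center cell at a given step is to compute every step before it.

```python
def rule30_center_column(steps):
    """Return the center-cell value of Rule 30 at steps 0 .. steps-1.

    The automaton starts from a single black cell. The row is made wide
    enough that the growing pattern never touches the edges.
    """
    width = 2 * steps + 3
    center = width // 2
    cells = [0] * width
    cells[center] = 1

    column = []
    for _ in range(steps):
        column.append(cells[center])
        # Rule 30: new cell = left XOR (center OR right); edge cells stay 0.
        cells = (
            [0]
            + [cells[i - 1] ^ (cells[i] | cells[i + 1]) for i in range(1, width - 1)]
            + [0]
        )
    return column


if __name__ == "__main__":
    # Print the first 32 center-cell values; they were obtained by running
    # all 32 steps explicitly, not by a shortcut formula.
    print("".join(str(bit) for bit in rule30_center_column(32)))
```

The sketch does not prove irreducibility; it only shows a case where direct simulation is the method actually used.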

And what is a bug? It can be defined as a program doing something that one does not want. Suppose we want the pattern in the picture on the left, created by a very simple program, never to die out. The point is that there may be no way to answer the question of whether it dies out, short of waiting an infinitely long time. In other words, finding out whether a program has a bug, in the sense of doing something one does not want, can be infinitely hard.

And we know, of course, that bugs are not just a theoretical problem; they exist in all large-scale practical software. And unless HAL only does things so simple that we can foresee every aspect of them, it is almost inevitable that HAL will have bugs.

But perhaps HAL could be given some general guidelines, such as “treat people well”, or other potential principles of AI ethics. Here is the problem, though: any precise specification will inevitably have unintended consequences. One could say that “there will be bugs in the specification”, but the point is that they are unavoidable. Given computational irreducibility, there is no finite specification that can avoid every conceivable "unintended consequence."

Or, in terms of “2001,” HAL will inevitably be able to demonstrate unexpected behavior. These are simply the consequences of having a system capable of complex calculations. This helps HAL to "show creativity" and "take the initiative." But it also means that the behavior of HAL cannot be predicted completely.

The theoretical foundations for this were known as early as the 1950s, or even earlier. But it took experience with real, complex computer systems in the 1970s and 80s to develop an intuition about bugs. And it took my studies of the computational universe in the 80s and 90s to make clear how ubiquitous computational irreducibility is, and how much it affects any broad specification.

How did they guess?

It is interesting to study what the creators of “2001” got wrong about the future, but it is impressive how much they got right. How did they do it? Stanley Kubrick and Arthur C. Clarke (and their scientific consultant Fred Ordway III) enlisted the help of a fairly large group of the top technology companies of the time. Although the credits say nothing about it, they received a surprising amount of detailed information about these companies' plans and aspirations, along with some concept designs made specifically for the film as a kind of product placement .

In the very first space scene, for example, one can see many different spacecraft, which are based on the concepts of companies such as Boeing, Grumman, General Dynamics, and NASA. There are no manufacturer logos in the film, and NASA is not mentioned; instead, different ships are marked with flags of different countries.

Where did the idea of a smart computer come from? I don't think it had an external source. I think at that time the idea was simply "in the air." My friend Marvin Minsky , who was one of the pioneers of AI in the 60s, visited the set of "2001" during filming. But Kubrick apparently did not ask him any questions about AI; instead, he was interested in computer graphics, the naturalness of computer voices, and robotics. Marvin claims that he suggested the configuration of the manipulator arms used on the pods of the spacecraft traveling to Jupiter.

What about the details of HAL? Where did they come from? It turns out they came from IBM.

IBM at that time was the largest computer company, and, conveniently, its headquarters was in New York, where Kubrick and Clarke were working. IBM, then as now, was always working on advanced concepts that it could demonstrate. It worked on voice recognition. It worked on image recognition. It worked on computer chess. In fact, it worked on almost all of the capabilities of HAL shown in "2001". Many of them even appear in the film “The Information Machine”, which was made for the 1964 World's Fair in New York (interestingly, that film uses a dynamic multi-window style of presentation that was not adopted for HAL).

In 1964, IBM proudly introduced its System/360 mainframe computers. And all the rhetoric associated with HAL and its infallible operation could have been taken from the promotional materials for the 360. And, naturally, HAL was physically large, like a mainframe computer (large enough for a person to go inside it). But HAL had one feature that was decidedly un-IBM. At the time, IBM carefully avoided claiming that computers could be smart; it only emphasized that computers would do what people told them.

Ironically, IBM's internal slogan for its employees was "Think." It was only in the 1980s that IBM began to talk about smart computers. For example, when my friend Greg Chaitin was advising the head of IBM's research division in 1980, he was told that it was company policy not to pursue AI research, because IBM did not want its customers to fear that AI would replace them.

An interesting letter from 1966 has surfaced recently. In it, Kubrick asks one of his producers (Roger Caras, who later became famous on TV): “Does IBM know that one of the main themes of the story is a psychotic computer?” Kubrick is worried that the company will feel it has been deceived. The producer replies that IBM is "a technical consultant for computers," and says that IBM will not object as long as it is "not associated with a hardware failure."

But was HAL supposed to be an IBM computer? The IBM logo appears a couple of times in the film, but not on HAL. Instead of a logo, the computer carries a nameplate:

Interestingly, the blue color is characteristic of IBM. It is also amusing that if you replace each letter of HAL with the next letter of the alphabet, you get IBM. Arthur C. Clarke always said that this was a coincidence, and perhaps it is. But I suspect that at some point the letters on the blue nameplate were supposed to read “IBM”.
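The letter shift is easy to check for yourself. Here is a small Python sketch; the function name `shift_letters` is just an illustrative choice, and it assumes the input consists only of uppercase A-Z.

```python
def shift_letters(word, offset=1):
    """Shift each uppercase letter of `word` by `offset` places, wrapping Z to A."""
    base = ord("A")
    return "".join(chr((ord(ch) - base + offset) % 26 + base) for ch in word)


if __name__ == "__main__":
    print(shift_letters("HAL"))      # one step forward in the alphabet
    print(shift_letters("IBM", -1))  # and one step back again
```

Shifting "HAL" forward by one gives "IBM", and shifting "IBM" back by one gives "HAL".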

Like some other companies, IBM was very fond of naming its products with numbers. It is interesting to look at which numbers it used. In the 1960s, there were plenty of 3- and 4-digit numbers starting with 3 and 7, including the 7000 series, and so on. Interestingly, there was not a single series starting with 9: the IBM 9000 did not exist. Until the 1990s, IBM did not have a single product with a name starting with 9. I suspect that was because of HAL.

By the way, the intermediary between IBM and the film crew was the head of marketing, S. S. Hollister. In a 1964 interview, the New York Times asked him why IBM, unlike its competitors, advertised so widely, even though only a very small fraction of corporate executives make decisions about buying computers . He replied that IBM's advertising was “designed to affect the articulators, the 8 to 10 million people who influence opinion on all levels of the country's life” (today they would be called “opinion makers”).

He added: “It is important that important people understand what a computer is and what it can do.” In a sense, this is exactly what HAL did, although not in the way Hollister could have expected.

Predicting the future

Well, now we know, at least over a span of 50 years, what happened to the predictions from "2001", and how science fiction did (or did not) turn into science fact. What does this tell us about the predictions we can make today?

According to my observations, everything is divided into three main categories. First, there are things that people have been talking about for many years, and which will eventually appear - although it is unknown exactly when. Secondly, there are surprises that no one expected, although sometimes, with hindsight, they may seem obvious. Thirdly, there are things that people talk about, but which potentially cannot be realized in our Universe due to the laws of physics.

An example of what people have been talking about for a long time, and what will surely happen someday, is routine space travel. When “2001” came out, no human had yet left Earth orbit. And by the following year people had already been to the Moon. The film made the seemingly reasonable assumption that by 2001 people would be flying to the Moon routinely and would even be able to get to Jupiter.

In fact, this did not happen. But it could have happened if it had been treated as a priority. There simply was no particular motivation for it. Yes, of course, space exploration has always been more popular than, say, ocean exploration. But it did not seem important enough to pour the necessary resources into it.

Will it ever happen? I think it definitely will. But in 5 years or in 50? It is very hard to predict, although, judging by what is happening now, I would say that the answer lies somewhere in between.

People have been talking about space flight for over a hundred years . They have been talking about what is now called AI for even longer. And, yes, from time to time it has been argued that some feature of human intelligence is so special that AI will never capture it. But it seems to me that by now it is clear that AI is moving inexorably toward reproducing every feature of what we call intelligence.

A more mundane example of what might be called the “inevitable development of technology” is videophones. As soon as we had telephones and televisions, the appearance of videophones was inevitable. And, yes, there were prototypes back in the 1960s. But for reasons related to the power and cost of computers and telecommunications, videophone technology did not become widespread for another couple of decades after that. Still, it was essentially inevitable that it would.

In science fiction, ever since the invention of radio, it has been common to imagine that in the future everyone would be able to communicate with everyone else instantly by radio. And, yes, it took a good half century. But in the end we got cell phones. And, in time, smartphones that can serve as magic maps, magic mirrors and much more.

An example of something that is still at an early stage of development is virtual reality. I remember trying early VR systems back in the 1980s. But they did not catch on then. I think that they inevitably will. Perhaps this will require video with a quality comparable to human vision (audio quality has been at a decent level for a couple of decades). And whether it is VR or augmented reality that ends up becoming widespread is unclear. But something like it will definitely happen, although it is not clear exactly when.

One can give endless examples. People have been talking about self-driving cars since the 1960s. And in the end they appeared. People have been talking about flying cars for even longer. Perhaps helicopters could have gone in this direction, but because of the specifics of control and reliability, that did not happen. Maybe modern drones will solve this problem. But, again, in the end flying cars will appear. It is just not clear exactly when.

Similarly, in the end, there will be robots everywhere. I have been hearing that this “will happen soon” for the last 50 years, and progress has been terribly slow. But, it seems to me, as soon as someone figures out how to do "general-purpose robotics", in the way that we do general-purpose computing, everything will speed up greatly.

There is one more theme that has been very visible over the past 50 years: what once required building special-purpose devices can now be achieved by programming a general-purpose device. In other words, instead of relying on the structure of physical devices, capabilities are achieved through computation.

Where will all this lead? Essentially, to everything being programmable, right down to the atomic scale. That is, instead of building special-purpose computers, it will be possible to build everything “out of computers”. It seems to me that this is inevitable, although the topic has not yet been much discussed, or even explored in science fiction.

Returning to more mundane examples, let us recall other things that will probably become possible someday. These include drilling through the Earth's crust to the mantle, and cities under the ocean (both themes have been used in science fiction, and in "2001" you can even see an advertisement for a Pan Am underwater hotel). But whether anyone will decide that these things are worth pursuing is still unclear. Bringing back dinosaurs? A way to obtain a good enough approximation of their DNA will surely be found. How long the necessary biotechnology will take, I do not know, but in the end we will be able to have a live stegosaurus.

Perhaps one of the oldest ideas in "science fiction" is the idea of immortality. And human lifespans are indeed increasing. But will there come a point after which people can be immortal for all practical purposes? I am sure that there will. Whether the path will be mostly biological, or mostly digital, or some combination using molecular technologies, I do not know. And I am not sure what exactly it will all mean, given the inevitable presence of an infinite number of possible bugs (today's "diseases"). But I consider it certain that in the end the old idea of immortality will be realized. Interestingly, Kubrick, an enthusiast of such things as cryonics, said in an interview in 1968 that, in his opinion, one of the things that would happen by 2001 would be “the elimination of old age”.

What are examples of things that will not happen? Without knowing the fundamental theory of physics, we cannot be sure of much. And even with such a theory, given computational irreducibility, it will be quite difficult to work out all the consequences for any particular question. But two good candidates for ideas that will never be realized are miniaturization in the style of “ Honey, I Shrunk the Kids ” and faster-than-light travel.

At least, these ideas are unlikely to be realized in the form in which they are depicted in science fiction. But it is still possible that something functionally equivalent will happen. It may well become possible to “scan an object” at the atomic level, then “reinterpret” it, and construct a very good approximation of it at a much smaller size.

What about traveling faster than light? Maybe someday it will be possible to deform space-time so as to move faster. Or to use quantum mechanics for this. All of this, however, assumes that such things take place in our physical reality.

But imagine that in the future everyone is “uploaded” into some kind of digital system, so that people experience a kind of virtual physics. Of course, the hardware on which it all runs will still be subject to the limits imposed by the speed of light. But from the point of view of virtual experience, there will be no such restrictions. And in such a setup, one can imagine another theme beloved by science fiction writers: time travel (despite the many philosophical issues associated with it).

Okay, what about surprises? In today's world, compared with the world of 50 years ago, one can find several. Computers are far more common than almost anyone expected. And there are things like the web and social networks, which no one imagined (although with hindsight they may seem “obvious”).

There is another surprise, whose consequences are much less well understood, but with which I have personally been very closely involved: the discovery of just how much complexity and depth there is in the computational universe.

Surprises, almost by definition, occur when understanding what is possible, or what is meaningful, requires a change in thinking, some kind of “paradigm shift”. In hindsight, it often seems that such changes in thinking just happen, say in one person's mind, almost by accident. But in fact there is almost always a gradual accumulation of understanding, which then suddenly allows a person to see something new.

In this sense, it is interesting to consider the plot of "2001". The first part of the film shows an alien artifact, the black monolith, appearing in the world of our ape ancestors and starting the process that leads to modern civilization. Perhaps the monolith is supposed to transmit the most important ideas to the apes by telepathy.

But I like a different interpretation. No ape 4 million years ago had ever seen a perfectly black monolith of exact geometric shape. But as soon as they saw it, they realized that something was possible that they had never imagined. As a result, their worldview changed forever. And, a bit like the emergence of modern science as a result of Galileo observing the moons of Jupiter, this is what enabled them to begin building what became modern civilization.

Aliens

When I first saw “2001” fifty years ago, nobody knew whether there was life on Mars. People did not expect any large animals to live there. But the presence of lichens or microorganisms seemed more likely than their absence.

With the advent of radio telescopes and the beginning of space flight, it seemed likely that evidence of extraterrestrial intelligence would be found quickly. But in general, people did not seem particularly excited or worried about this. Yes, of course, one can recall the radio show based on H. G. Wells's novel “The War of the Worlds”, which was taken for a report of a real alien invasion of New Jersey. But some 20 years after the end of the Second World War, people were much more worried about the ongoing Cold War , and the seemingly real possibility of the whole world being consumed by the fire of nuclear war.

The inspiration for “2001” was Arthur C. Clarke’s nice 1951 story “ The Sentinel ”, about a mysterious pyramid found on the Moon, left there before life appeared on Earth. People break it open using nuclear weapons, but find that they cannot understand its contents. Kubrick and Clarke were worried that even before the release of “2001,” their story would be overshadowed by the actual detection of extraterrestrial intelligence (they even looked into buying insurance against this possibility).

But “2001” became the first serious film to explore what a first encounter with an alien intelligence might be like. As I recently discussed at length , the question of whether or not something was “produced by intelligence” is a very difficult philosophical problem. But in the modern world we have a good heuristic: geometrically simple things (with straight edges, circles, etc.) are most likely artifacts. Of course, in a sense, it is a little humbling that nature, without any visible effort, creates things that look much more complex than what we make, even with all our engineering skill. And this situation, as I have argued elsewhere, will definitely change as we take more advantage of the computational universe. But for now, the “if it’s geometrically simple, it’s probably an artifact” approach works well.

And in “2001” we see this approach at work when a perfectly rectangular black monolith appears on the Earth of 4 million years ago. Visually, it is quite obvious that this thing does not belong there, and that it was probably made on purpose.

Later in the film, another monolith is found on the Moon. It is noticed because of the Tycho Magnetic Anomaly (TMA-1), probably named after the South Atlantic Anomaly, which is associated with the Earth’s radiation belts and was discovered in 1958. The magnetic anomaly could have been natural (“magnetic ore”, as one of the characters says). But the excavation reveals a perfectly rectangular monolith, whose only plausible origin is extraterrestrial intelligence.

As I have written before, it is rather difficult to recognize the work of an intelligence that has no historical or cultural ties to ours. And almost inevitably, such an alien intelligence will in many respects be inexplicable to us. An interesting question is what happens if an alien intelligence has already somehow become woven into our ancient history, as shown in “2001”.

At first Kubrick and Clarke assumed that they would have to show the aliens at some point in the film. And they worried about things like the number of legs. But in the end, Kubrick decided that the only alien with the degree of mystery and impact on the viewer that he wanted would be one that no one ever sees.

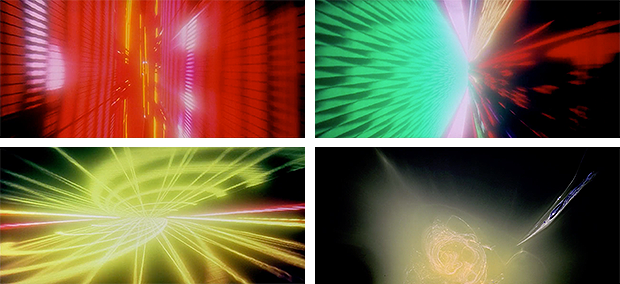

Therefore, in the last 17% of the film, after Dave Bowman passes through the stargate near Jupiter, we see something that was probably meant to be inexplicable, but aesthetically interesting. Is this the natural world of another part of the Universe, or are these artifacts created by an advanced civilization?

We see regular geometric structures that look like artifacts to us. We see smoother or more organic forms that do not. In several shots there are seven strange flashing octahedrons. I am sure that I did not notice them when I first watched “2001” fifty years ago. But by 1997, when I studied the film for HAL’s birthday, I had been thinking about the origin of complexity and the difference between natural and artificial systems for several years, which is why I noticed the octahedrons right away. And, yes, I spent a lot of time studying the laserdisc version of "2001" that I had then.

I did not know what the octahedrons were supposed to mean. Because of their periodic flashing, I first took them for some kind of cosmic beacons. But I was told that they were supposedly the aliens themselves, in a sort of small cameo . There was a version of the script in which the octahedrons eventually take part in a ticker-tape parade in New York, but it seems to me that they work better as a cameo.

When Kubrick was interviewed about “2001,” he offered an interesting theory about aliens: “They may have progressed from biological species, which are fragile shells for the mind at best, into immortal machine entities - and then, over innumerable eons, they could emerge from the chrysalis of matter transformed into beings of pure energy and spirit. Their potentialities would be limitless and their intelligence ungraspable by humans.”

It is interesting to see Kubrick playing with the idea that mind and intelligence do not have to have physical form. Of course, HAL is already a kind of non-physical mind. But in the 1960s, when the idea of software was only just emerging, there was still no clear notion that computation could be something valuable in and of itself, independent of the particular hardware that implements it.

The possibility of universal computation emerged as a mathematical idea in the 1930s. But did it have physical consequences? In the 1980s, I started talking about such things as computational irreducibility, and about the deep connections between universal computation and physics. But back in the 1950s, people were looking for more direct consequences of universal computation. One of the notable ideas was “universal constructors”, which would somehow be able to construct anything, just as universal computers can compute anything.

In 1952, in an attempt to "mathematize" biology, John von Neumann wrote a book about "self-reproducing automata", in which he described something like an extremely complicated two-dimensional cellular automaton with a configuration that reproduces itself. And, sure enough, in 1953 it turned out that the design of biological organisms is determined by digital information encoded in DNA.

But in a sense, von Neumann's work was based on the wrong intuition. He assumed (as I did, until I saw evidence to the contrary) that something with as sophisticated a function as self-reproduction must itself be very complex.

But, as I discovered many years later by experimenting in the computational universe of simple programs, complex behavior is not the prerogative of complex programs: even simple systems (such as cellular automata) with the simplest rules one can imagine are capable of it. And, of course, it is quite possible to come up with a system with very simple rules that is capable of self-reproduction. As a result, self-reproduction turns out not to be such a remarkable feature after all (think of code on a computer copying itself, etc.).
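As one illustration of a very simple rule that copies patterns, here is a Python sketch using the elementary cellular automaton Rule 90, in which each cell becomes the XOR of its two neighbors. The choice of Rule 90, the seed pattern, and the names below are assumptions made for this example, not something specified in the text. Because the rule is additive, after 2^k steps any seed narrower than 2^(k+1) cells reappears as two separate copies, shifted 2^k cells to the left and to the right.

```python
def rule90_step(cells):
    """One step of Rule 90: each cell becomes the XOR of its two neighbors.

    Cells beyond either end of the list are treated as 0.
    """
    n = len(cells)
    return [
        (cells[i - 1] if i > 0 else 0) ^ (cells[i + 1] if i < n - 1 else 0)
        for i in range(n)
    ]


def run(cells, steps):
    """Apply Rule 90 `steps` times and return the final row."""
    for _ in range(steps):
        cells = rule90_step(cells)
    return cells


# Hypothetical seed pattern placed in the middle of an otherwise empty row.
SEED = [1, 1, 0, 1]
START = 18
WIDTH = 40
SHIFT = 8  # a power of two, and larger than half the seed width

initial = [0] * WIDTH
initial[START:START + len(SEED)] = SEED
final = run(initial, SHIFT)

left_copy = final[START - SHIFT:START - SHIFT + len(SEED)]
right_copy = final[START + SHIFT:START + SHIFT + len(SEED)]

if __name__ == "__main__":
    print("seed      :", SEED)
    print("left copy :", left_copy)
    print("right copy:", right_copy)
```

This is pattern replication in a deliberately minimal setting; von Neumann's construction was aiming at something far more general.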

But in the 1950s, von Neumann and his followers did not know this. And, given the interest in space-related topics, the idea of self-replicating machines inevitably turned into the idea of self-replicating space probes (as well as lunar factories, etc.).

I'm not sure whether all these ideas had come together by the time “2001” was made, but by the time of the sequel, "2010", Clarke had decided that the black monoliths were self-replicating machines. And in a scene reminiscent of the modern idea that an AI given the task of making paper clips might turn everything (including people) into paper clips, the black monoliths turn the whole planet Jupiter into a giant collection of black monoliths.

What are the aliens trying to do in "2001"? I think Kubrick understood that it would be quite difficult to relate their motivations to anything human. Why, for example, does Dave Bowman end up in a Louis XV-style hotel suite? It is probably the most timeless setting in the entire film (except for the fact that, in keeping with 1960s habits, the suite has a bath but no shower ).

Interestingly, "2001" contains both artificial and alien intelligence. And it is notable that, 50 years after its release, we are becoming more and more comfortable with the idea of AI, and yet we still believe that we have seen no evidence of alien intelligence.

I think the big problem in thinking about alien intelligence is defining what we mean by intelligence. It is all too easy for us humans to adopt a pre-Copernican view that our intelligence and capabilities are something fundamentally special, just as the Earth was once thought to be the center of the universe.

But my principle of computational equivalence suggests that in fact we will never be able to identify anything fundamentally special about our intelligence; what is special about it is its particular history and connections. Does the weather “have a mind of its own”? By the principle of computational equivalence, I do not think that there is any fundamental difference between the computations that occur in nature and the computations that occur in our brains.

Similarly, looking into space, it is easy to see examples of complex calculations. We, of course, do not consider the complex processes going on in the pulsar magnetosphere as “alien minds”, we simply consider them to be something natural. In the past, we could say that no matter how difficult the process is, it somehow turns out to be fundamentally simpler than human intelligence. But if we take into account the principle of computational equivalence, this is incorrect.

So why don't we consider a pulsar magnetosphere an example of a “mind”? Because we do not recognize in it anything resembling our history or the details of our behavior. As a result, we have no way of connecting what it does with goals that a person could understand.

The computational universe of all possible programs is full of complex calculations that do not coincide with any of the existing human goals. But trying to develop an AI, we actually sift through this computational universe in search of programs that do what we need.

However, in this computational universe, there are an infinite number of "possible AI". And those that we choose not to use yet are not inferior to others; we just don’t see how to combine them with what we want.

AI is the first example of alien intelligence that we are seeing (yes, there are also animals, but it is easier to connect with AI). We are still at an early stage of developing broad intuition about AI. But the more we understand what AI can be, and how it relates to everything else in the computational universe, the clearer it will become to us what forms intelligence can take.

Will we find extraterrestrial intelligence? In many ways, I think we already have. It is all around us, throughout the universe, doing all sorts of complex computations.

Will there be such a bright moment, as in “2001”, when we discover an extraterrestrial intelligence, sufficiently coinciding with ours, so that we can recognize the perfect black monoliths made by it - even if we can’t understand their purpose? Now I suspect that instead of seeing something that we immediately recognize, we will gradually generalize our concept of intelligence, until we can attribute it not only to ourselves and AI, but to other things in the Universe.

When I saw “2001” for the first time, I don’t think I even worked out how old I would be in 2001. I was always thinking about what the future might be like, but I did not imagine myself living in it. When I was 8, in 1968, I was most interested in space, and I made many carefully assembled booklets, with typed text and neatly drawn diagrams. I kept detailed records of every space probe that was launched, and tried to come up with designs for spacecraft of my own.

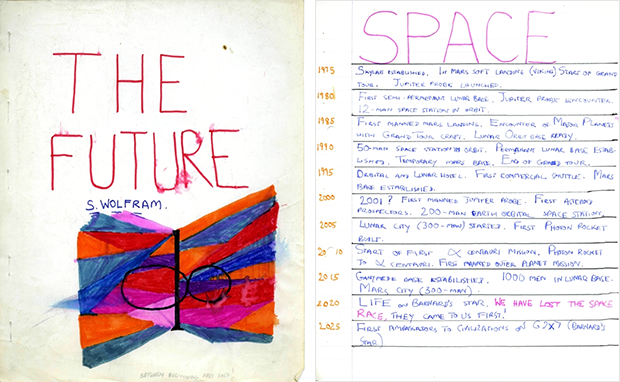

What made me do it? Like much of what I have done later in life, I did it simply because I was interested. I never showed these booklets to anyone, and I never wondered what anyone else might think of them. And for almost 50 years I have kept them. But looking through them now, I found one unique example of something related to my interests that I made for school: a booklet with the charming title “The Future”, written when I was 9 or 10, containing a page of predictions about future space exploration that I now find terribly embarrassing (complete with a reference to "2001"):

Fortunately, I did not wait to find out how wrong these predictions were, and within a couple of years my interest in space had turned into an interest in more fundamental fields: first physics, and then computation and the study of the computational universe. When I first started using computers in 1972, it was all paper tape and teleprinters, infinitely far from the flickering screens of “2001”.

But I have been lucky enough to live through the whole period in which the computer technology of “2001” went from fiction to something close to fact. And even luckier to have made a small contribution to it.

I often say that, under a certain influence from “2001”, my favorite aspiration is to create “alien artifacts”: things that are recognizable once they exist, but whose existence or possibility nobody expected. I like to think that one example is Wolfram|Alpha, and another is what the Wolfram Language has become. And so, in a sense, are my attempts to study the computational universe.

I never talked to Stanley Kubrick. But I did talk with Arthur C. Clarke, in particular around the publication of my big book “A New Kind of Science”. (I like to think the book is big in content, and it is definitely big in size: 1280 pages, almost 3 kg.) Arthur C. Clarke asked me to send him a copy before publication, which I did, and on March 1, 2002, I received an email from him saying: “The overstressed postman is hobbling away from my door ... Don't switch ...”

Then, three days later, I received another letter: “Well, I looked through almost all the pages, and am still in shock. I don’t understand how you could do it even with the help of computers.” Wow! I had actually managed to make something that seemed like an alien artifact to Arthur C. Clarke!

He offered me a quote for the cover: “Stephen’s main work is probably the book of the decade, if not the century. It is so comprehensive that we probably should call it ‘A New Kind of Universe’, and even those who are scrolling through 1,200 pages of (very sensible) text will be able to enjoy computer illustrations. My friend HAL is very sorry that he did not invent them first.” In the end, Steve Jobs talked me out of putting quotes on the cover, saying: “Isaac Newton did not have quotes on the cover; why do you need them?”

It's hard for me to believe that 50 years have passed since I first saw “2001”. Not all the details of the movie have (yet) become reality. But what matters to me is that it offered a vision of what might be possible, and an idea of how different the future could be. That helped set my life on the path of trying to shape the future by every means available to me: not just waiting for aliens to deliver monoliths to us, but trying to create an “alien artifact” myself.

Source: https://habr.com/ru/post/374527/
