Overview of SLAM algorithms for depth cameras in ROS

Good afternoon, dear readers! In the last article, I already wrote about the rtabmap SLAM algorithm in the context of visual odometry methods. In this article I will talk about this SLAM algorithm in more detail, and also provide an overview of another well-known SLAM algorithm designed for depth cameras - RGBDSLAM. Anyone interested, please under the cat.

About the project can be read in detail on the official page .

I described the rtabmap installation procedure in detail in a previous article . For example, on a Ubuntu 14.04 system, the installation will look like this:

')

However, on Raspberry Pi 3 with the Kinetic ROS installed, this method will not work, since the rtabmap build is disabled in the Kinetic release for ARM due to a problem with libpcl-dev (for more information about the problem, read here ). Therefore, we compile it from source by following the instructions on the rtabmap page:

If swap is not available on the Raspberry Pi, then virtual memory may not be enough at compile time:

You need to add the swap memory according to the instructions from here and re-run the compilation.

The compilation should take about an hour. After this, perform the installation:

Install the rtabmap_ros package in our catkin working directory:

When running catkin_make, an error may occur due to the lack of the image_transportConfig.cmake file. In this case, you need to compile the image_transport package in the working directory catkin_ws:

In order to avoid errors with loading of dynamic libraries when starting the rtabmap_ros nodes, it is recommended to add the following line to ~ / .bashrc:

Run rtabmap:

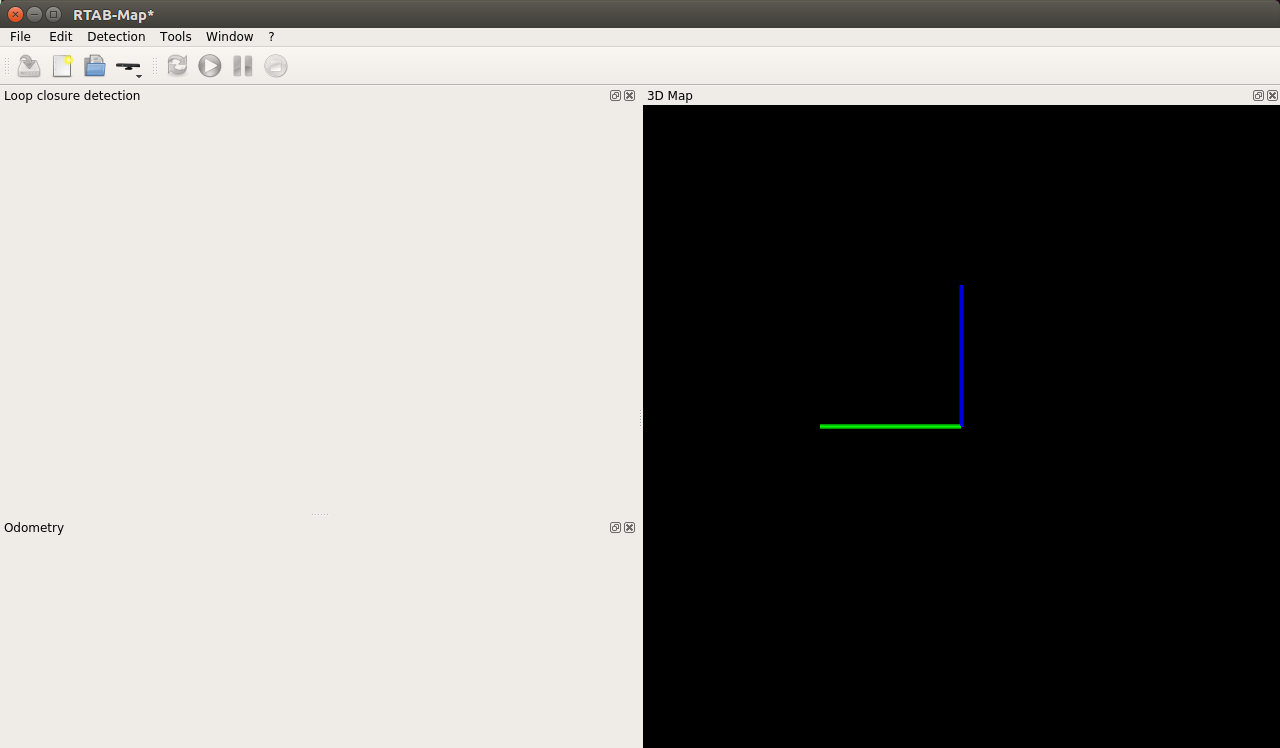

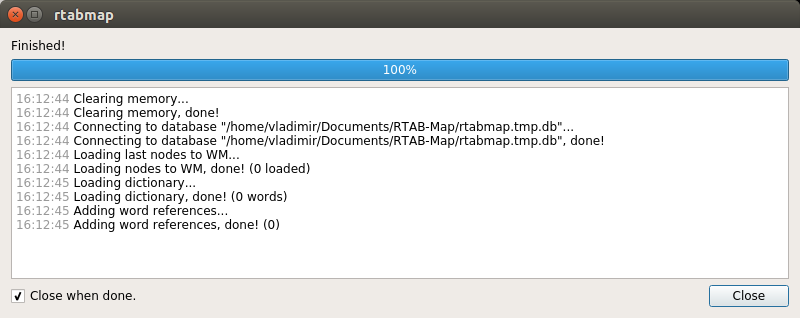

Create a new database: File → New database:

For the Kinect camera, the default driver is OpenNI-PCL:

Let's start the map building procedure by clicking on the “Start” button:

rtabmap can also be used with rviz:

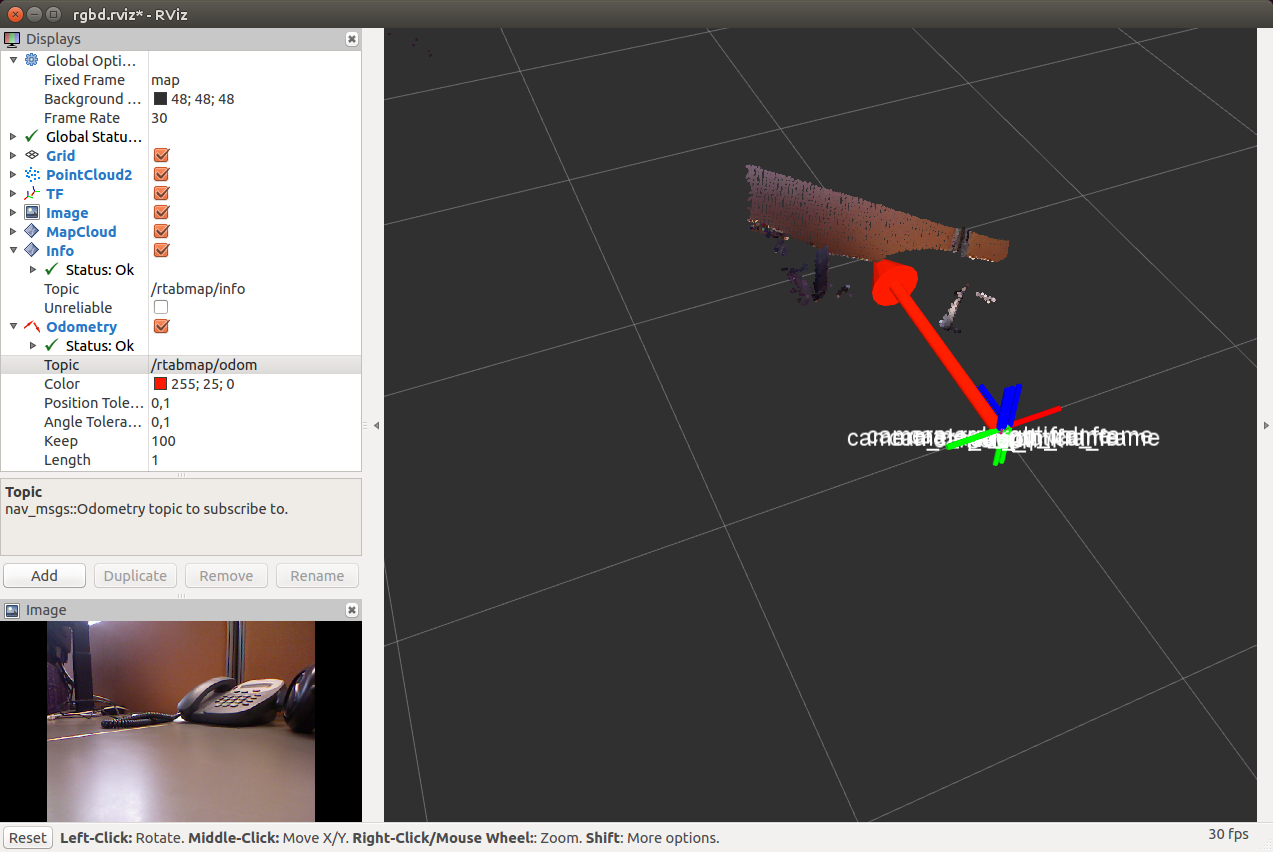

The rviz window opens:

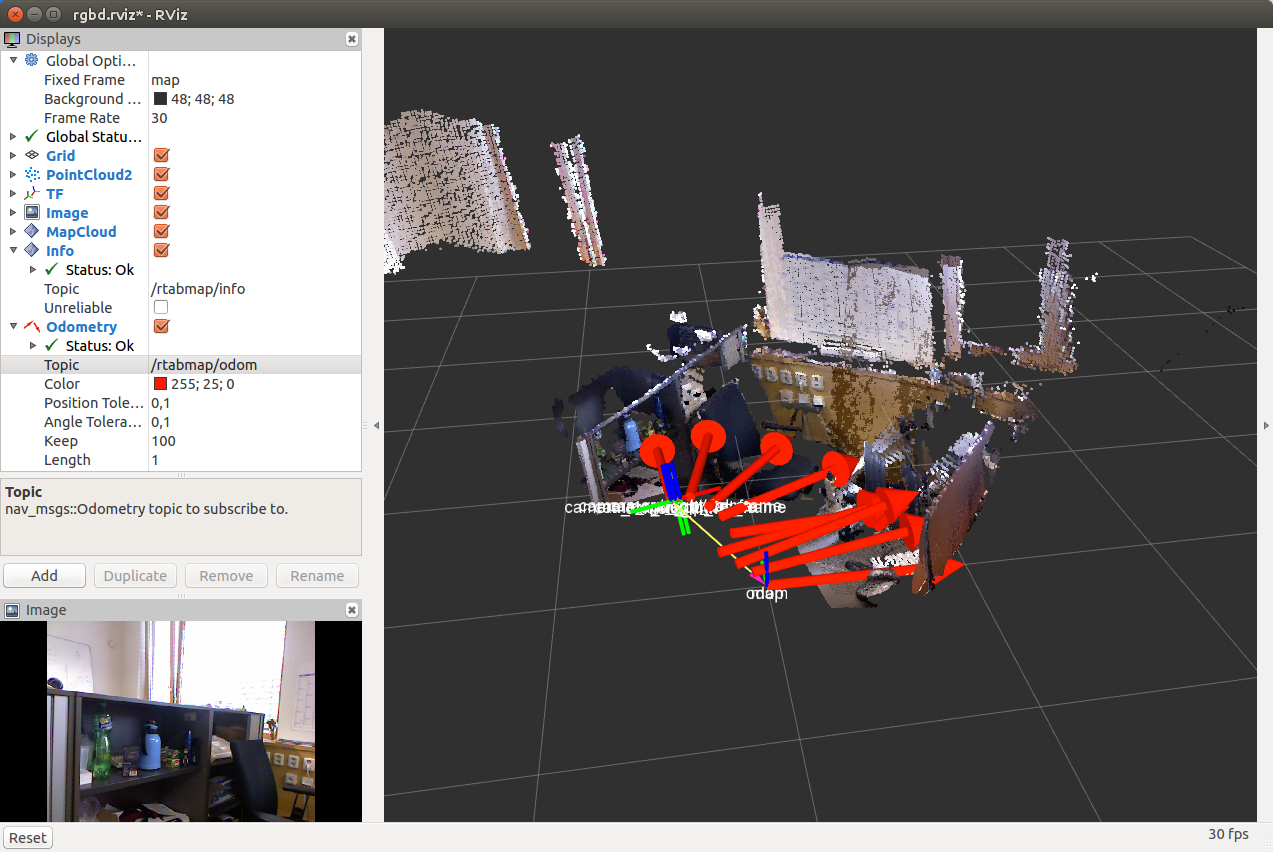

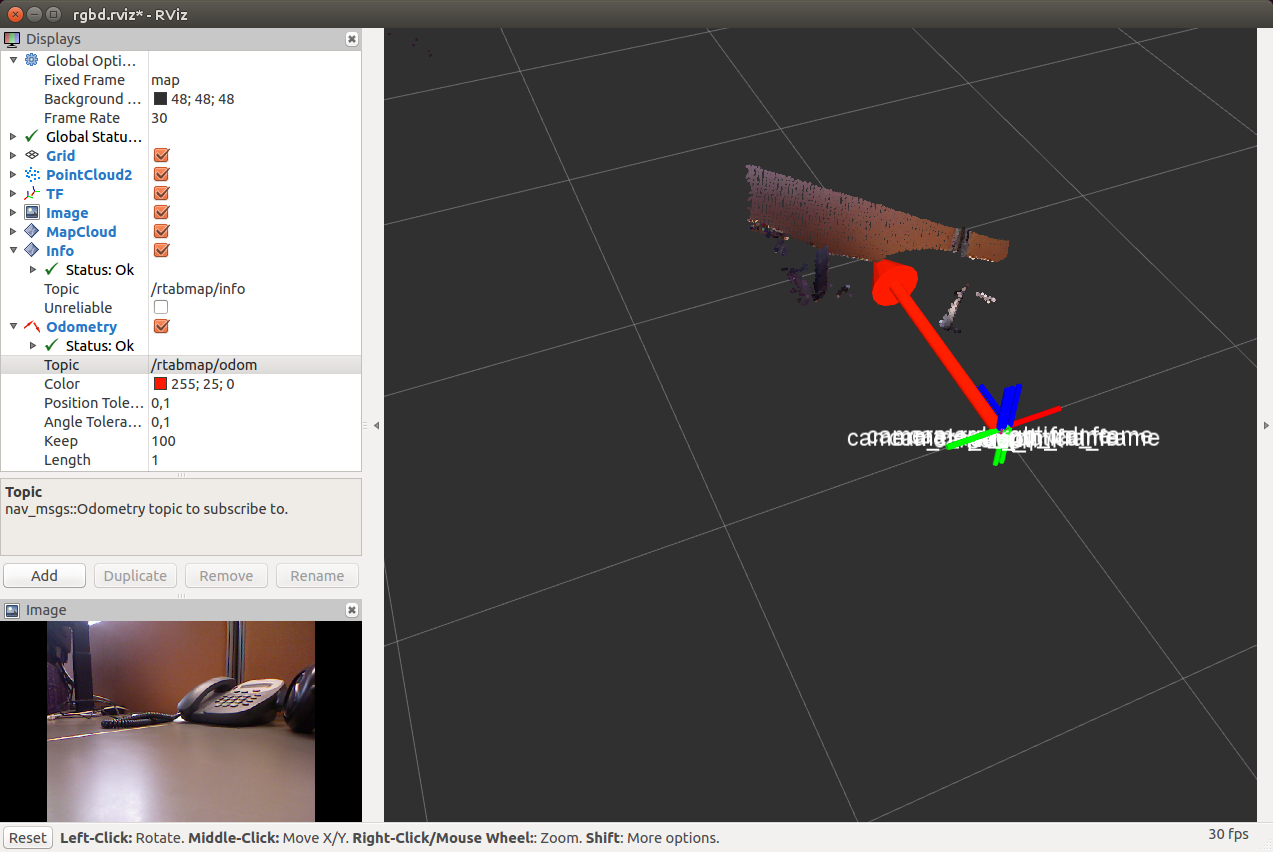

Add an Odometry display and select the “/ rtabmap / odom” topic. After some camera movement in space, we get a similar picture in rviz:

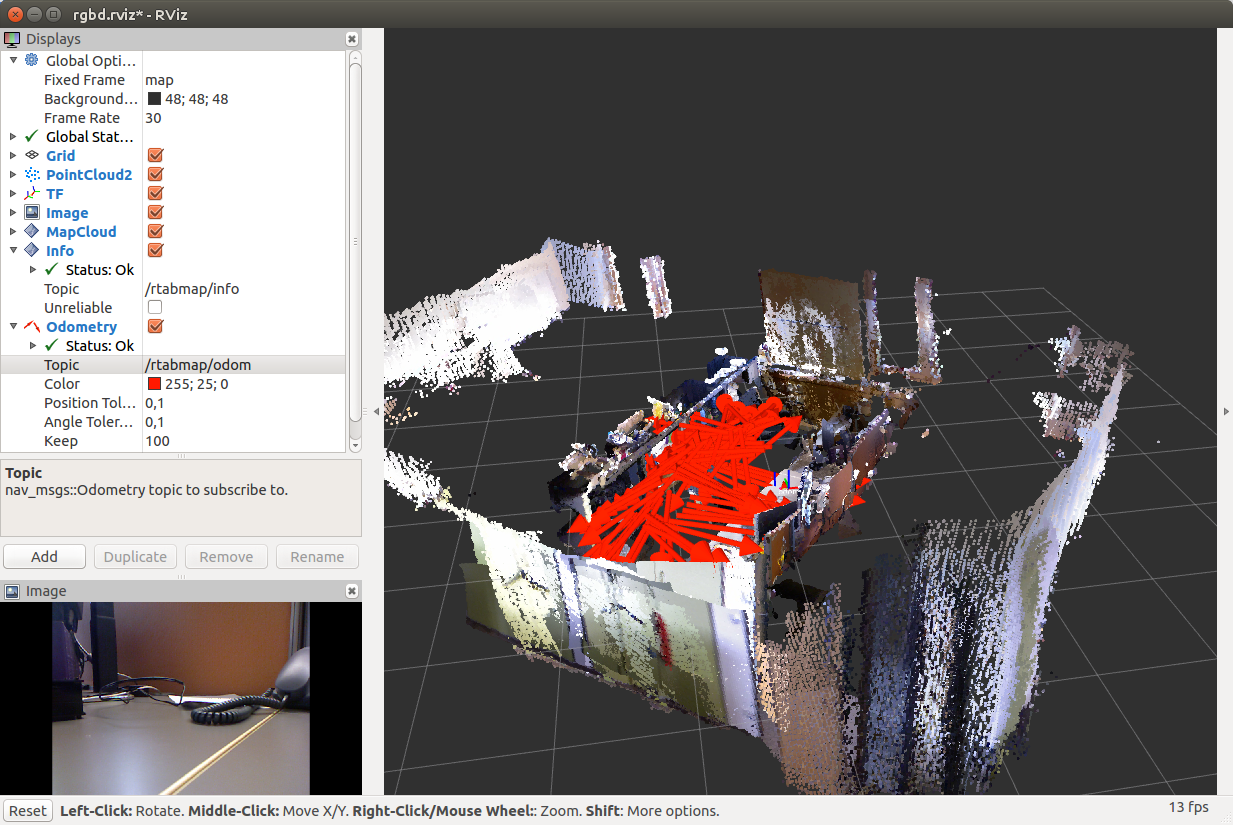

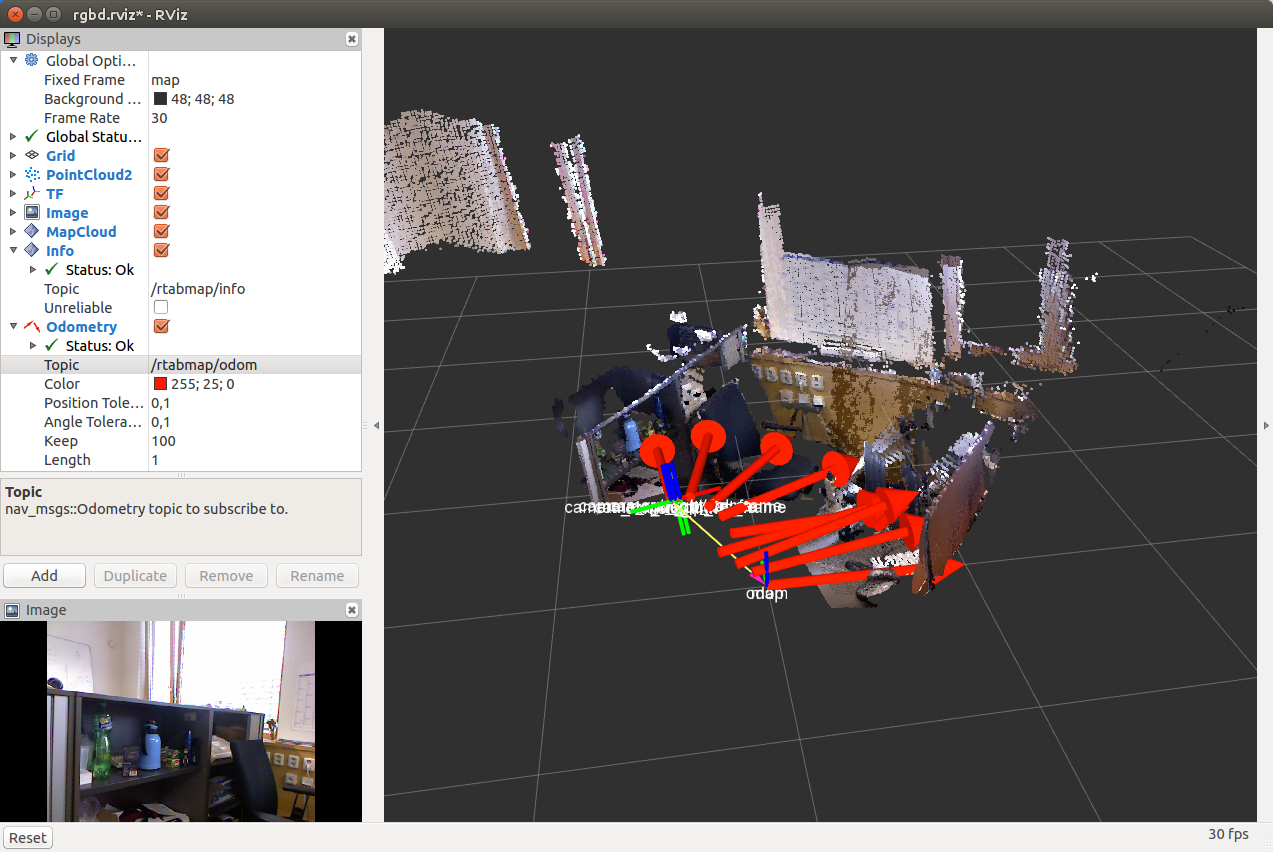

After we complete the room with the camera, we get:

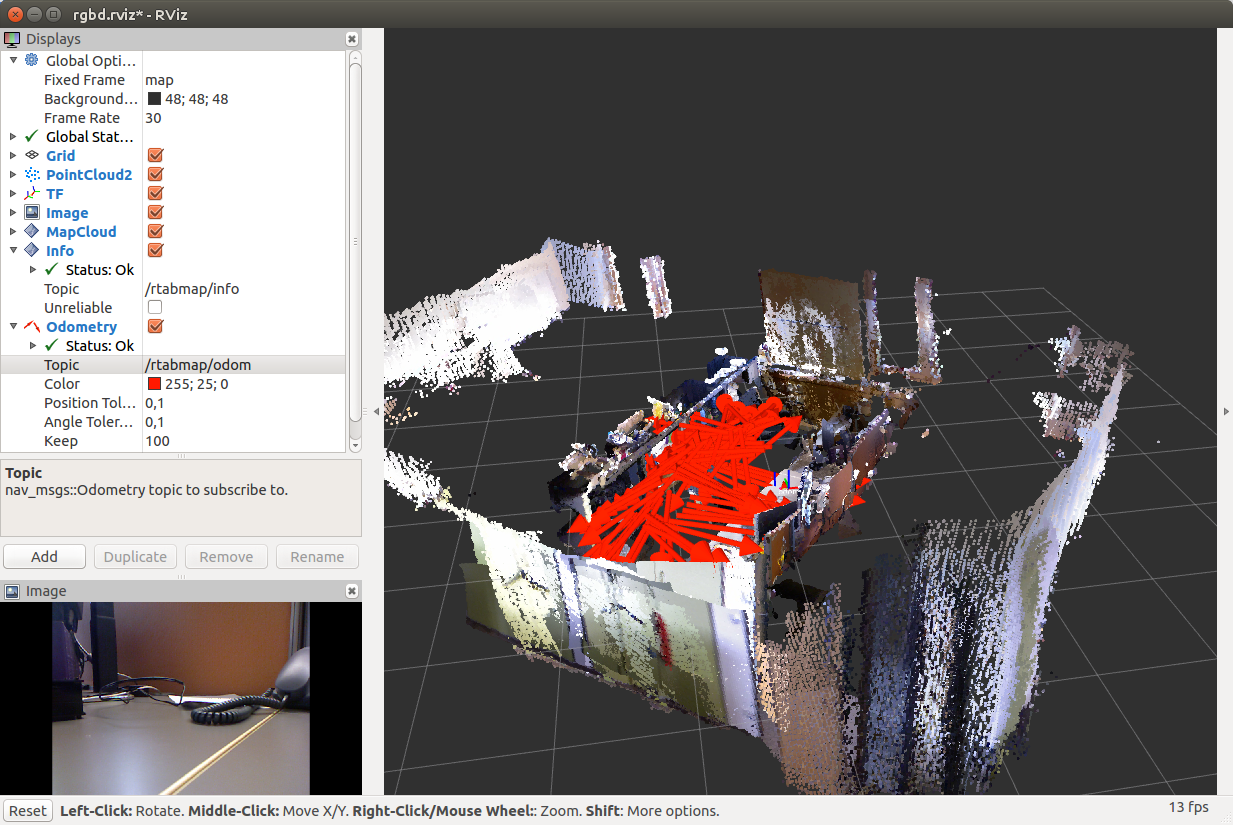

If we move around the room slowly, smoothly moving the camera without sudden movements, then we can get a fairly accurate map:

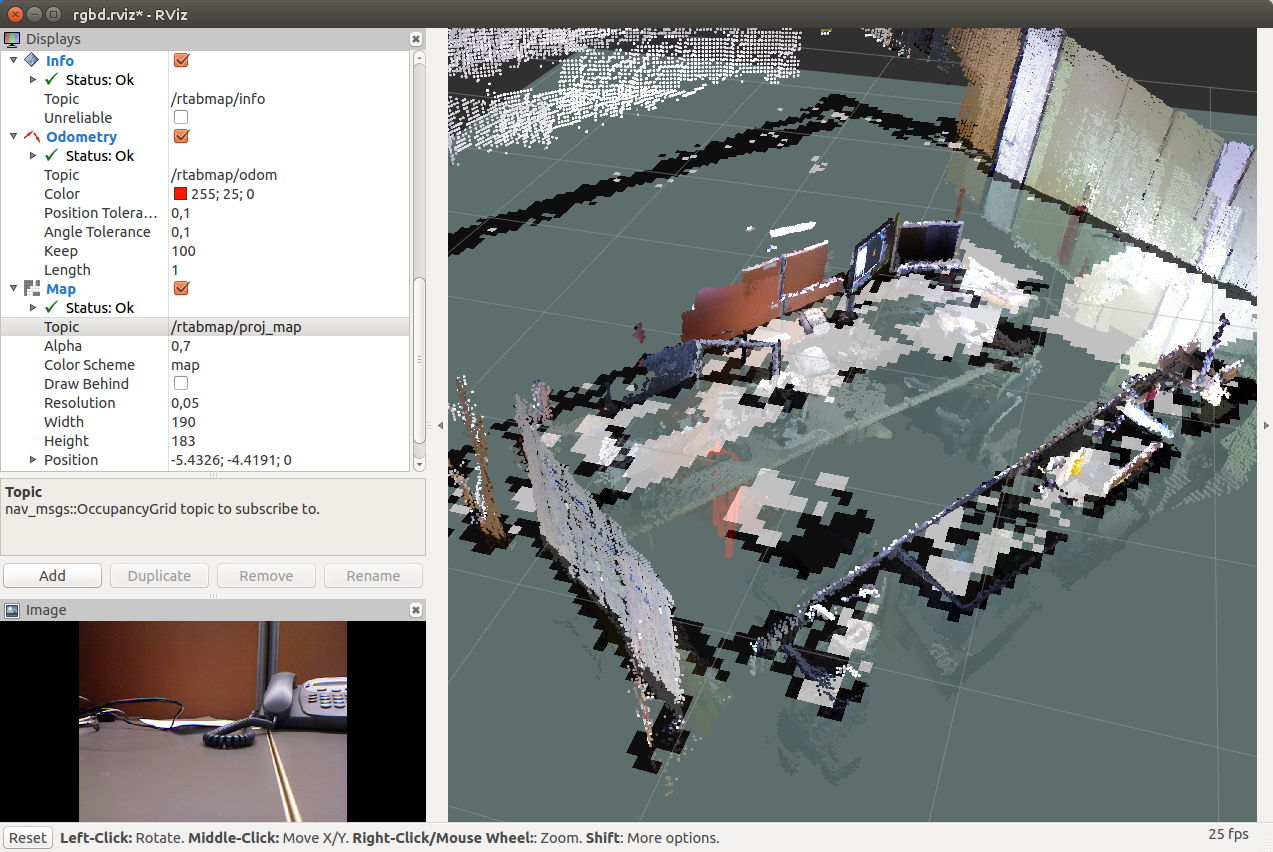

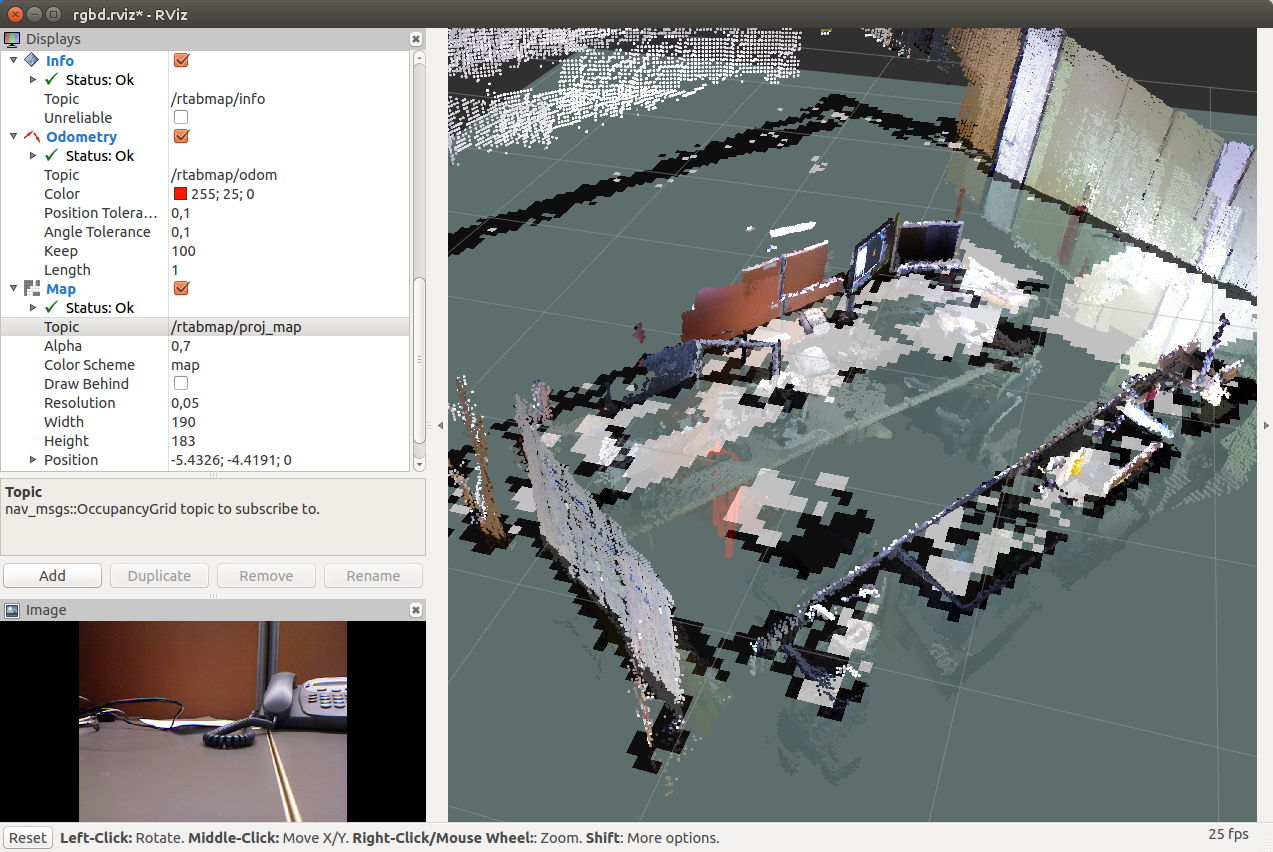

We can add an OccupancyGrid type Map display and select the “/ rtabmap / proj_map” topic to visualize the flat map (as if we were using gmapping):

When you close the rviz window, the database and map are automatically saved to disk (the path is displayed in a string in the terminal).

Run rtabmap:

The window already familiar to us will open. Create a database: File -> New database:

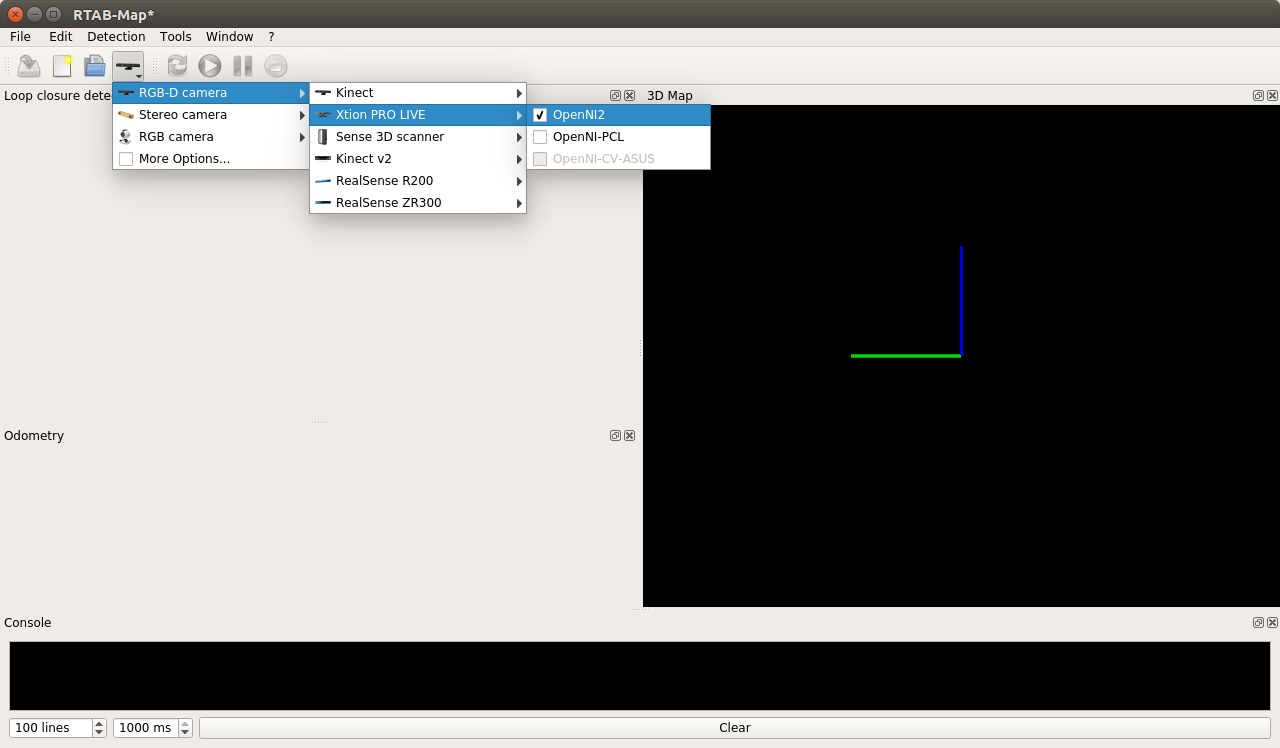

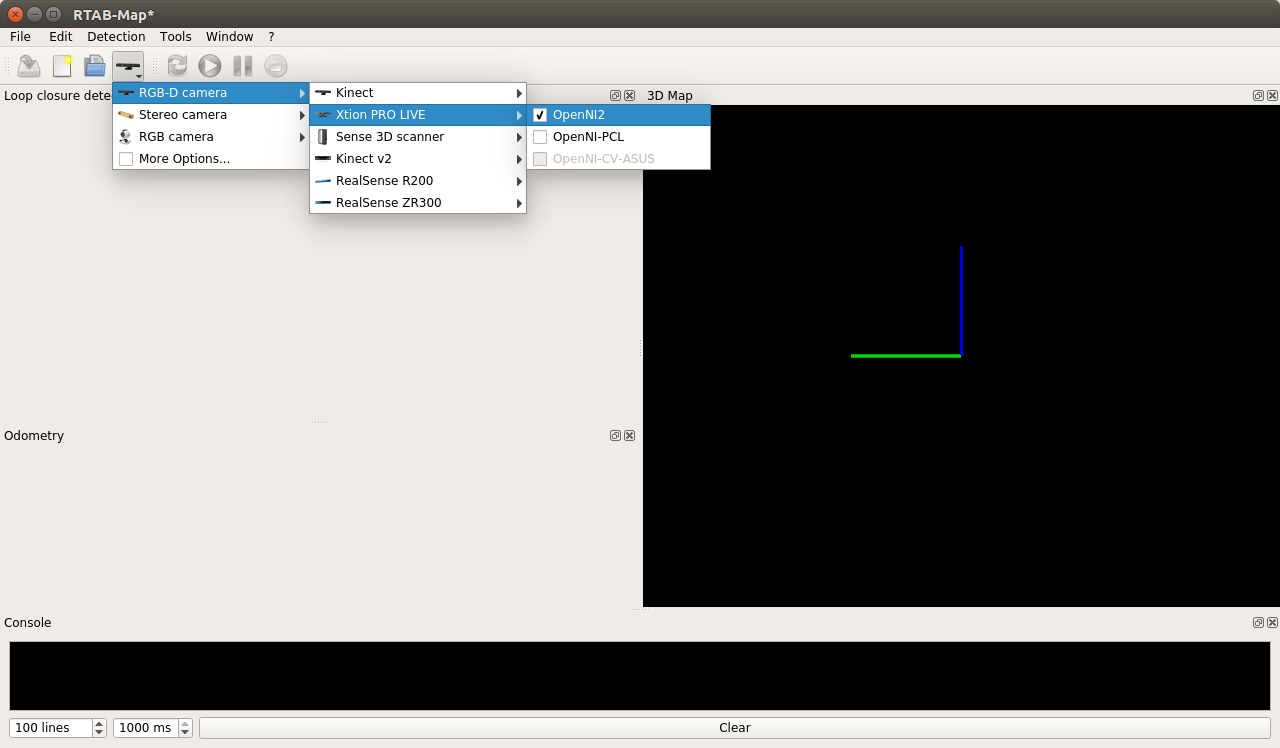

Select the OpenNI2 driver for the Xtion Pro Live camera:

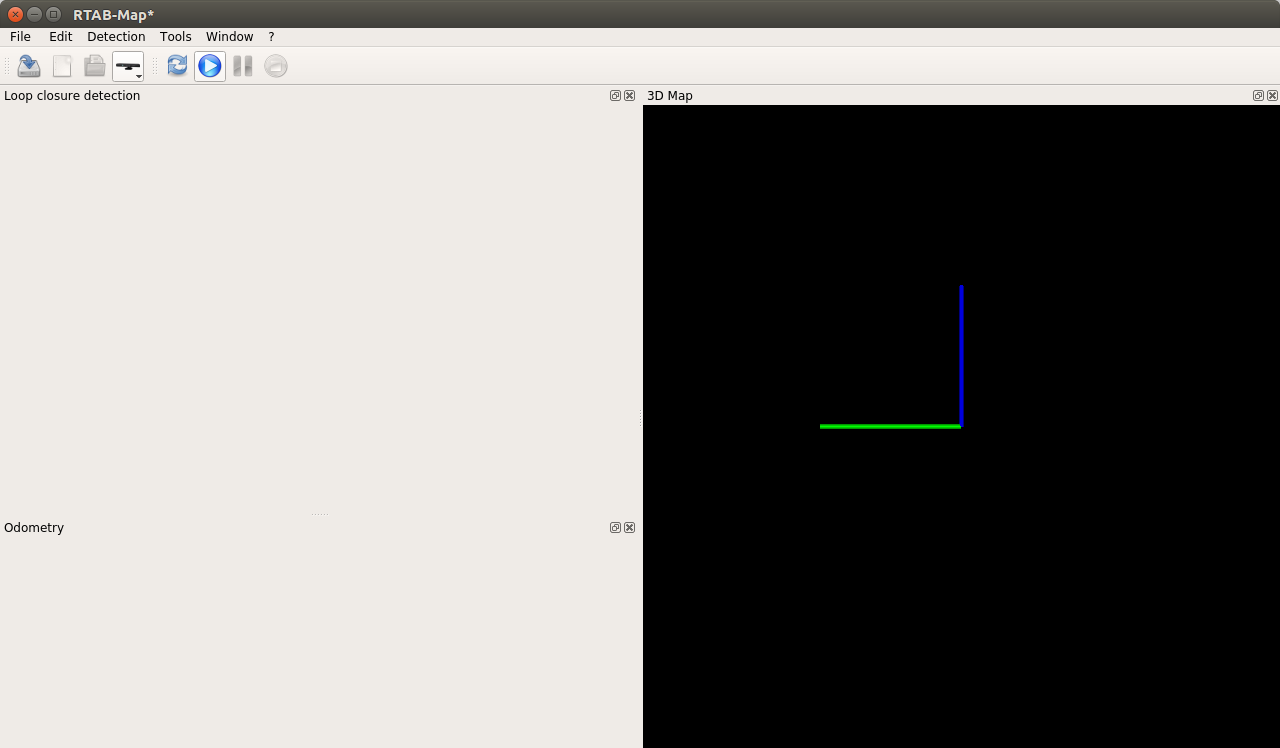

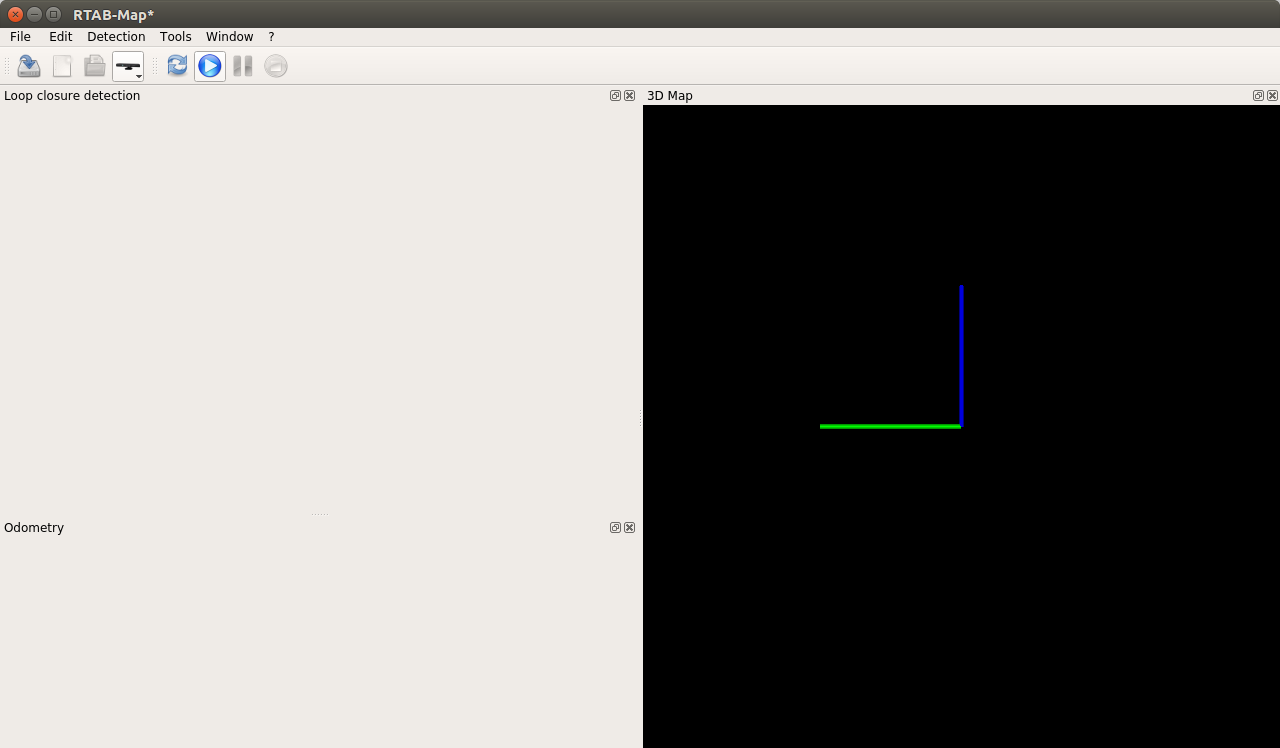

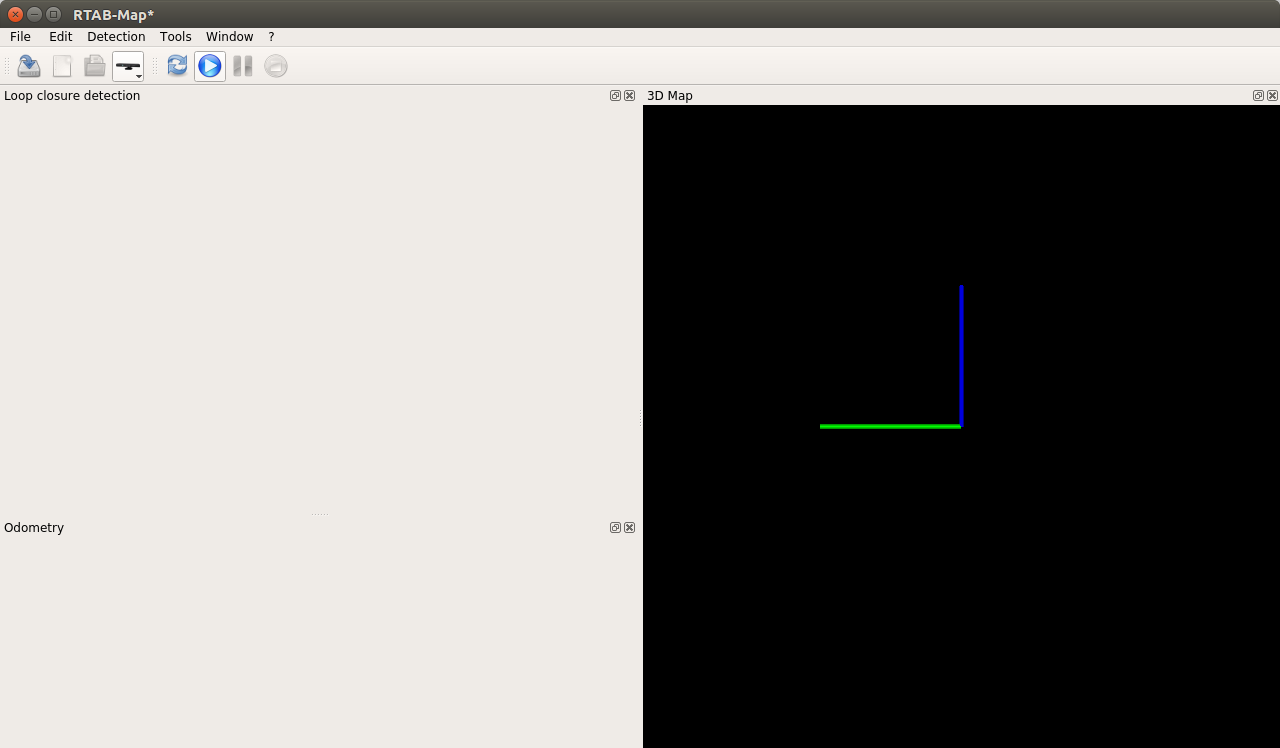

Start the procedure of building a map with the Start button:

We get:

After some time in the process of moving with the camera:

On Raspberry Pi, the rtabmap process turned out to be quite resource-intensive (I used 250 - 300% CPU). Sometimes the window darkened, once rtabmap fell with a Segmentation fault error.

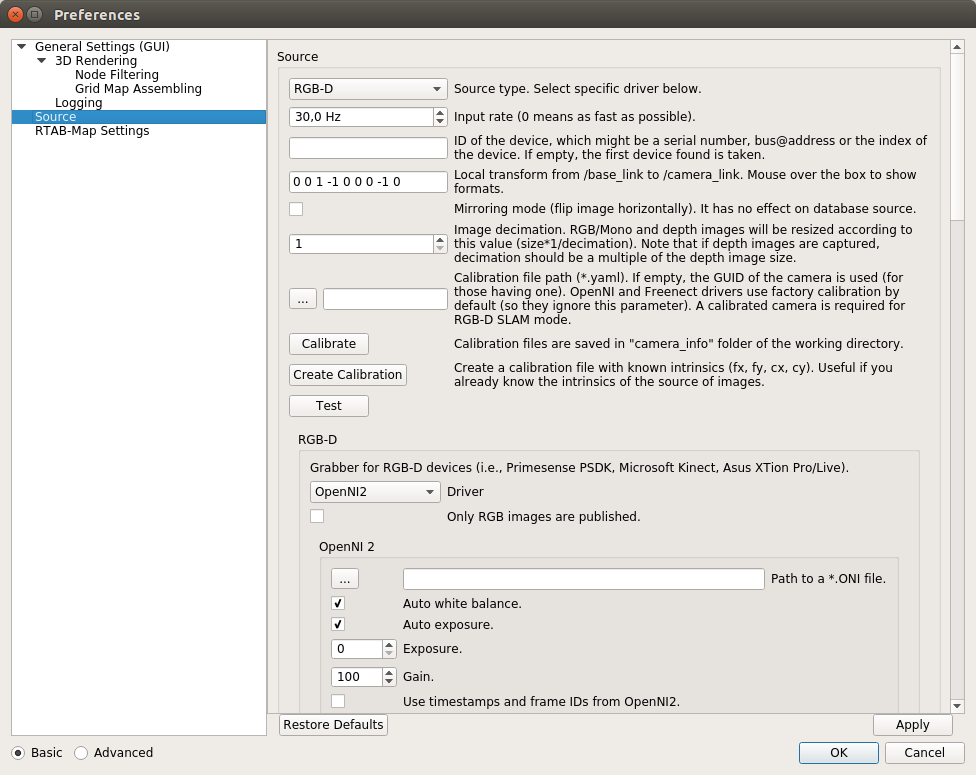

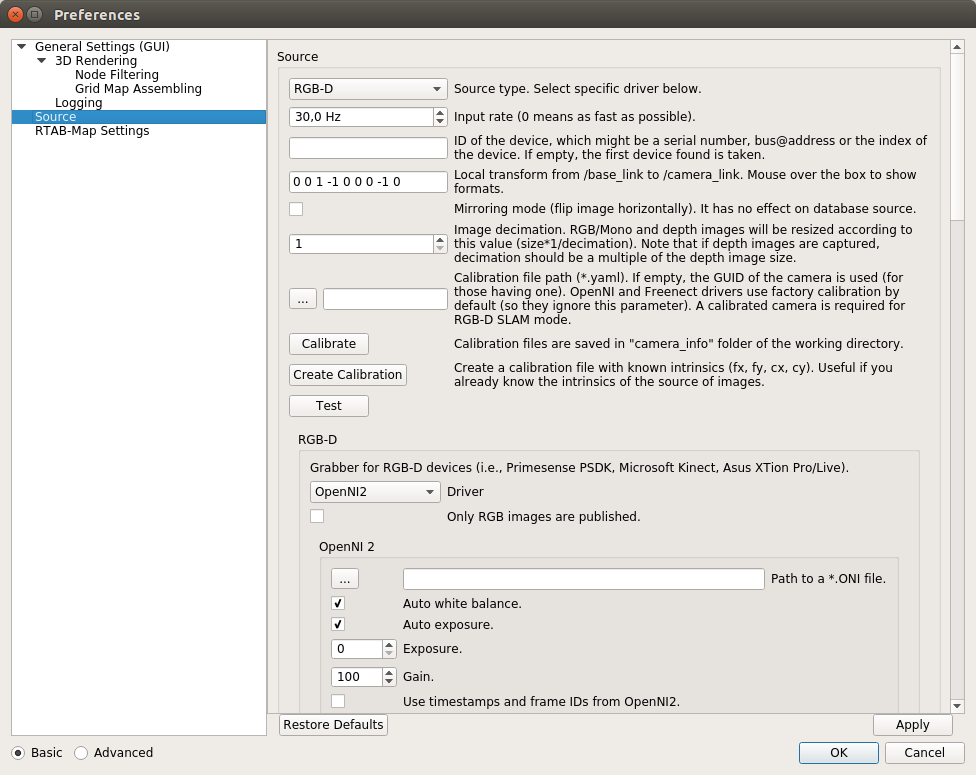

When using the default settings, rtabmap works extremely slowly on the Raspberry Pi, the frame rate is very low. To work effectively, you need to adjust the input frame rate. To do this, open Window → Preferences → General settings (GUI) in the top menu and press the “Load settings ...” button. Set the value of 30 Hz for “input rate”:

About setting parameters in rtabmap you can read more here .

Now the process of building a map is much faster:

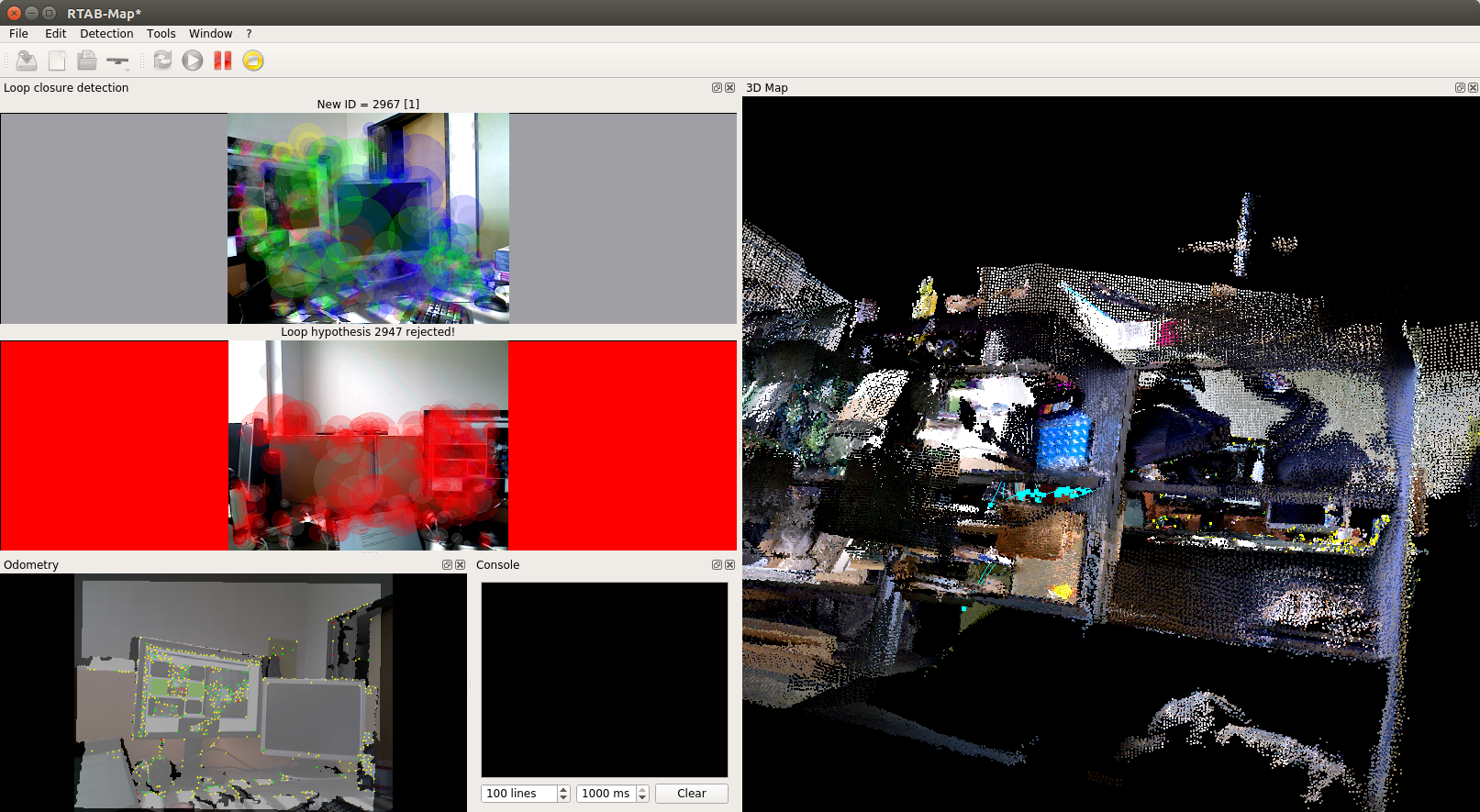

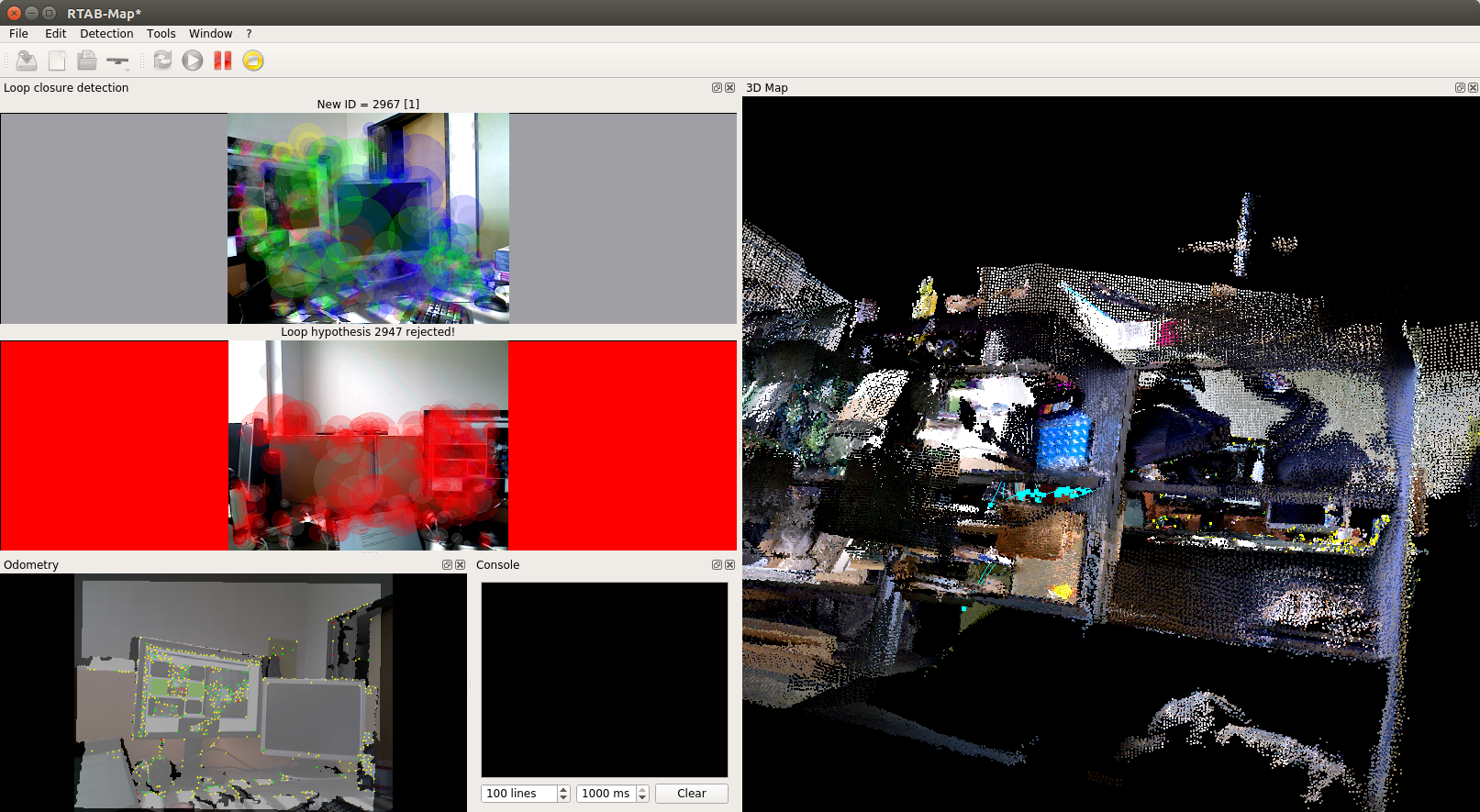

The video shows how odometry data is lost once (there is a red background around the found Loop closure detection candidate) and I reset the odometry via Detection -> Reset odometry. Loss of odometry is often associated with an insufficient number of features found (for example, poorly textured surfaces) and too fast camera movement. Our map is completely cleared and everything starts anew.

The map is almost ready:

Our task here is to obtain a successful loop closure detection. In case of successful detection of cycles, the candidate is highlighted with a green background.

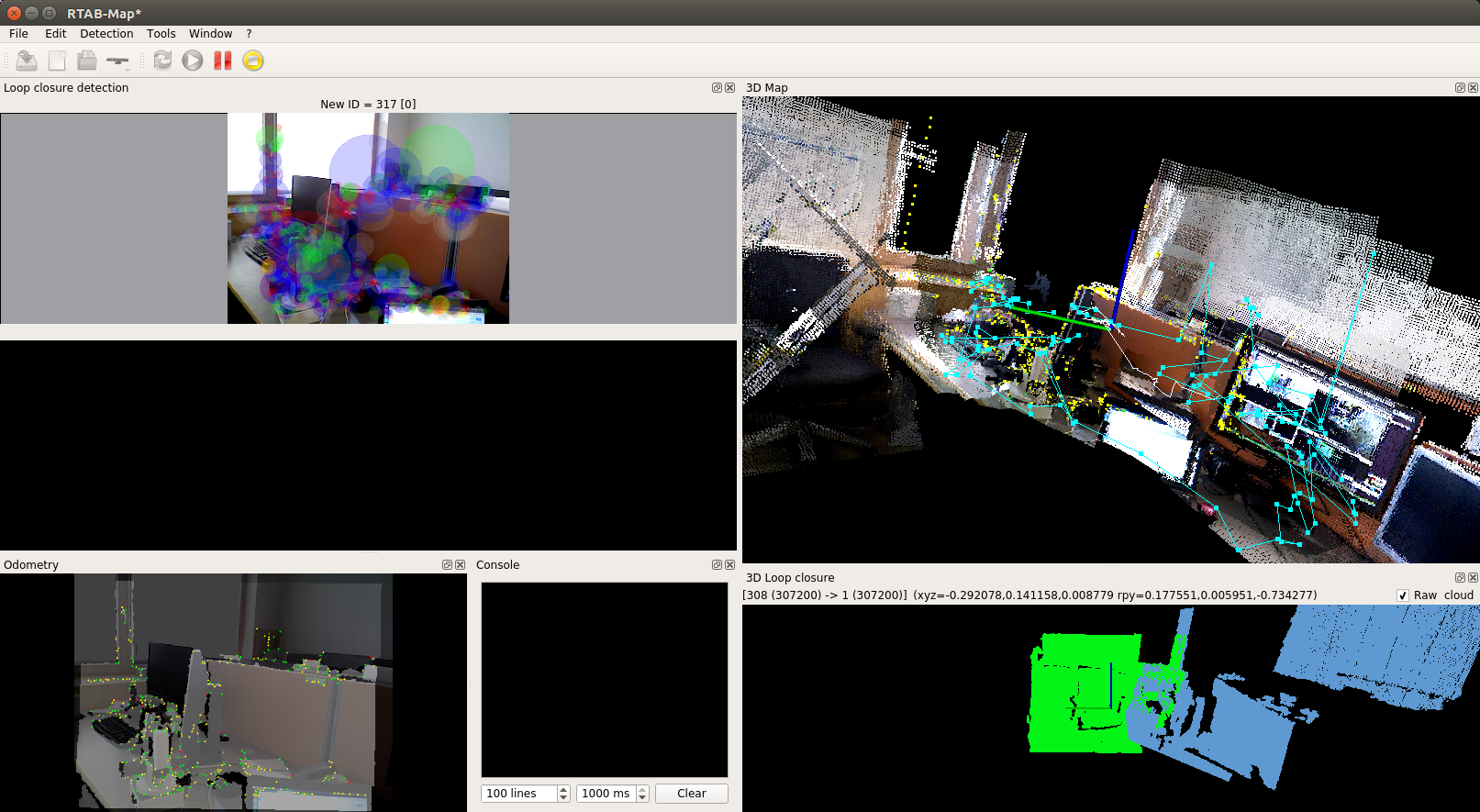

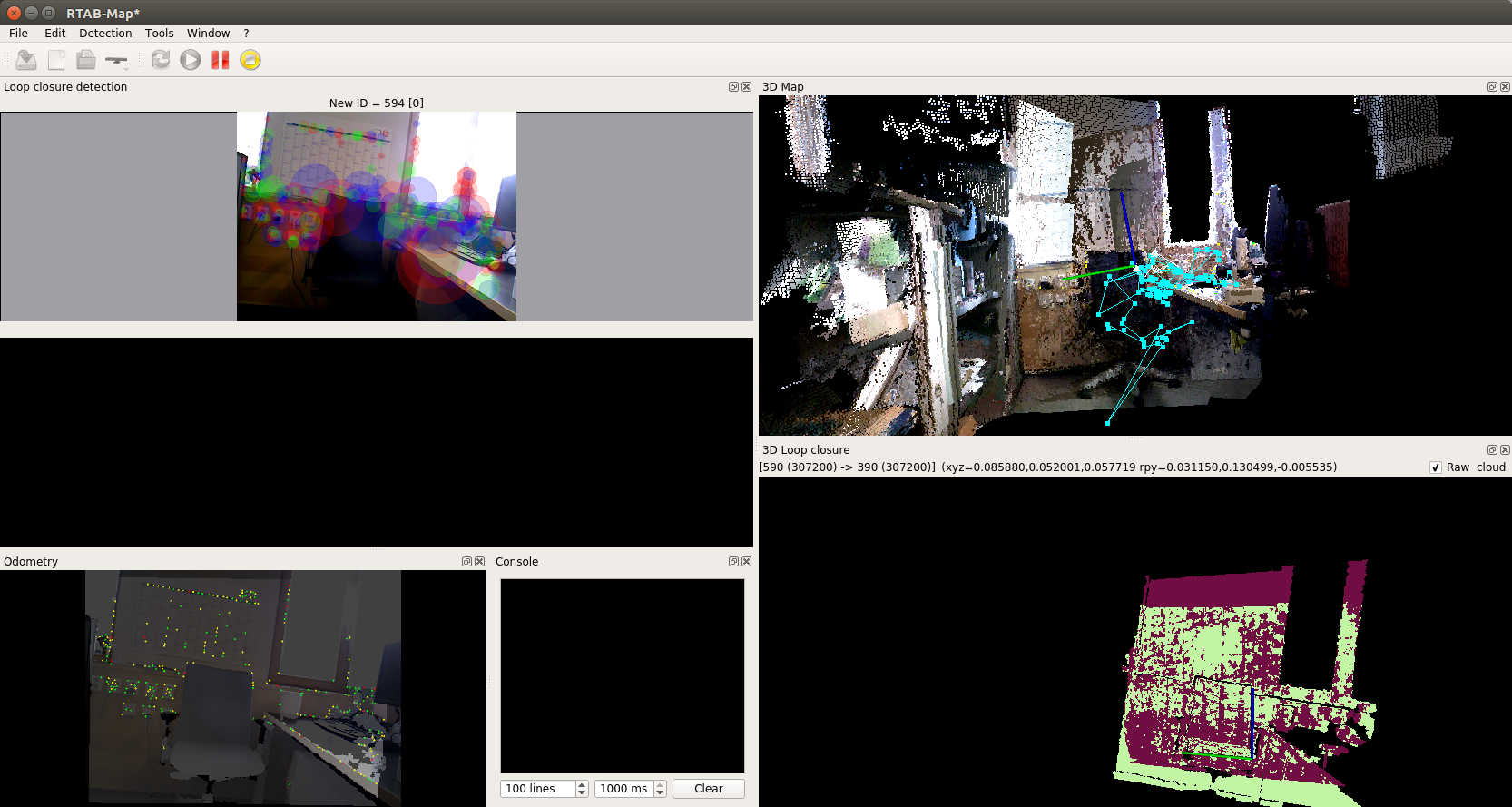

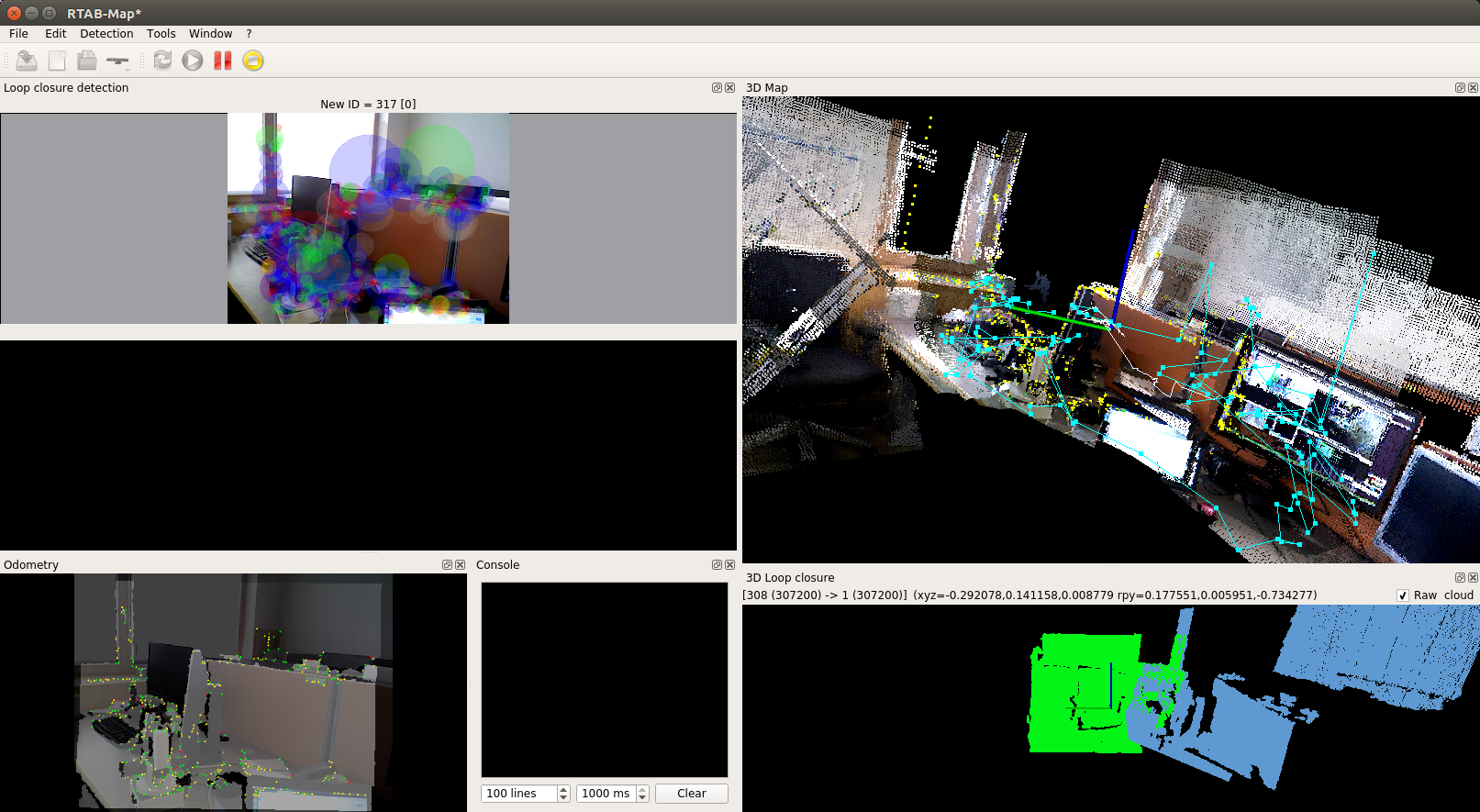

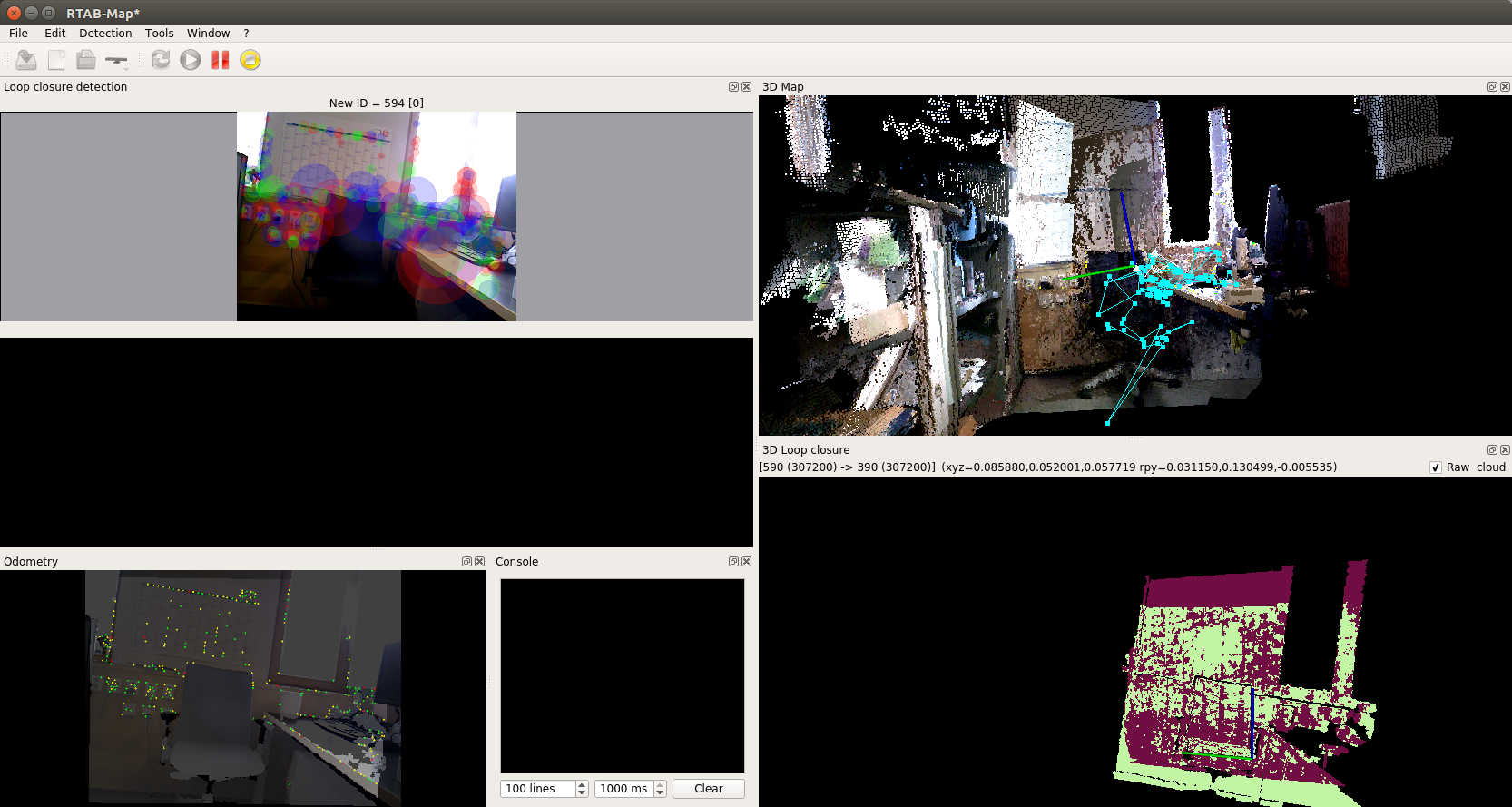

You can also show the result of loop detection in the “3D loop closure” panel. To do this, in the top menu, select: Window → Show view → 3D Loop closure. Using the buttons with numbers from 1 to 5, we can change the format of the representation of point clouds (arbitrary colors, color gradient along the axes (axis oriented colors) or RGB):

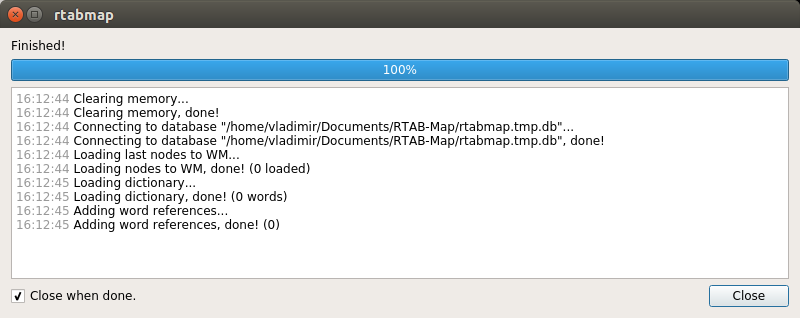

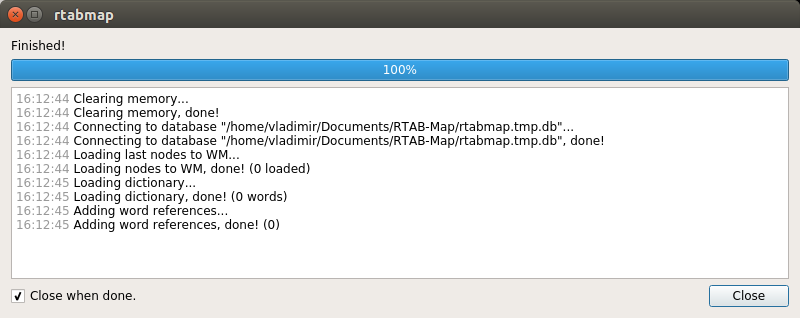

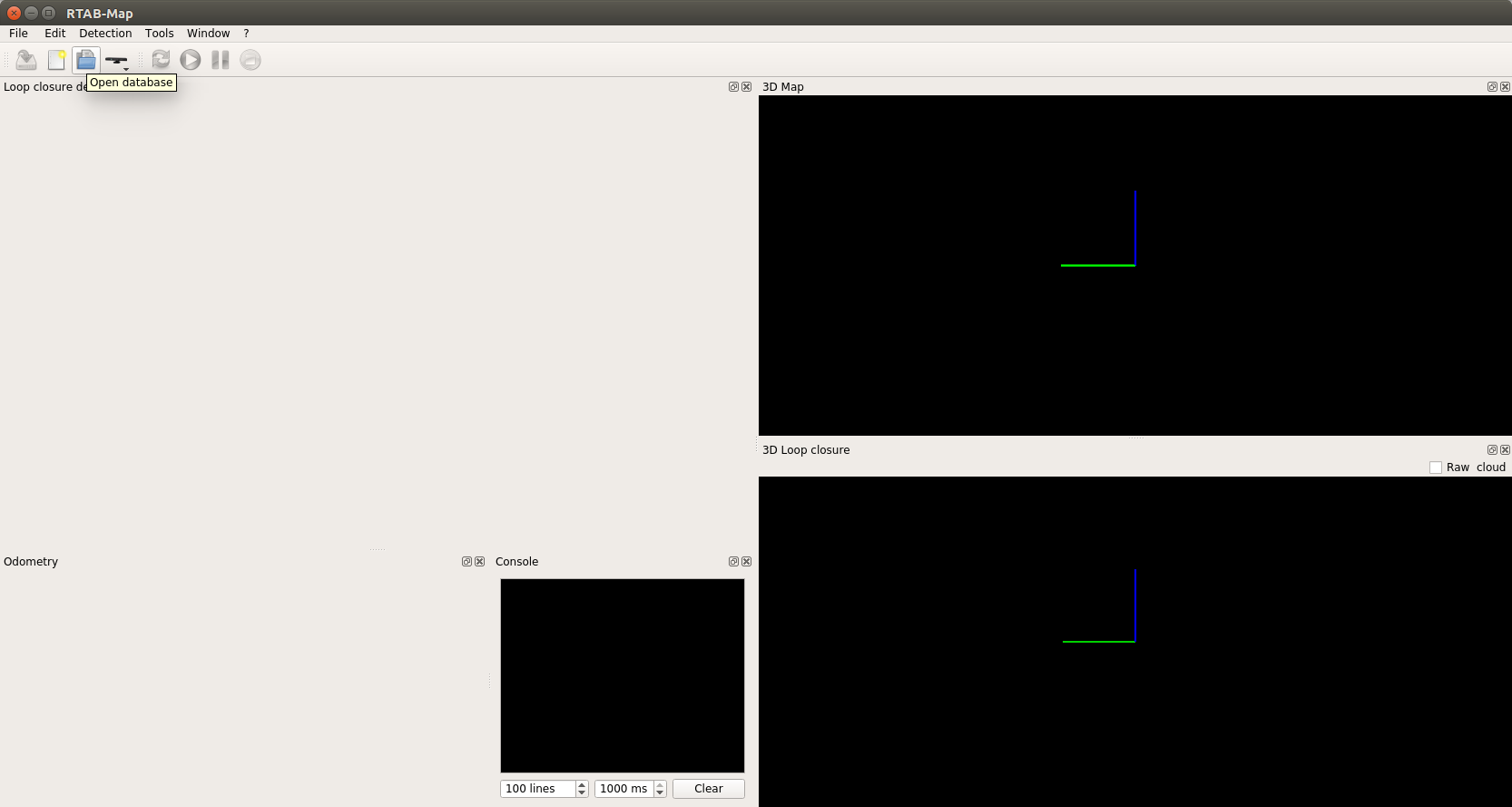

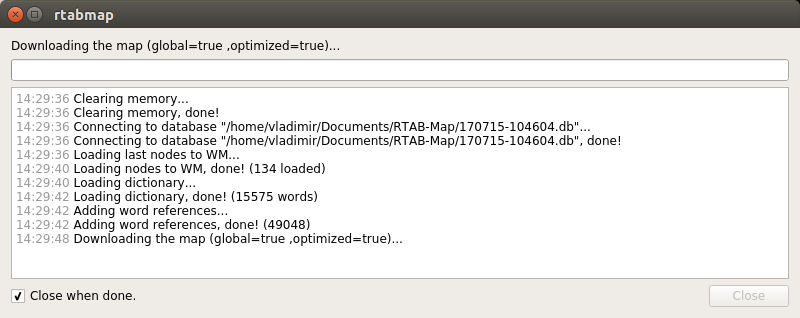

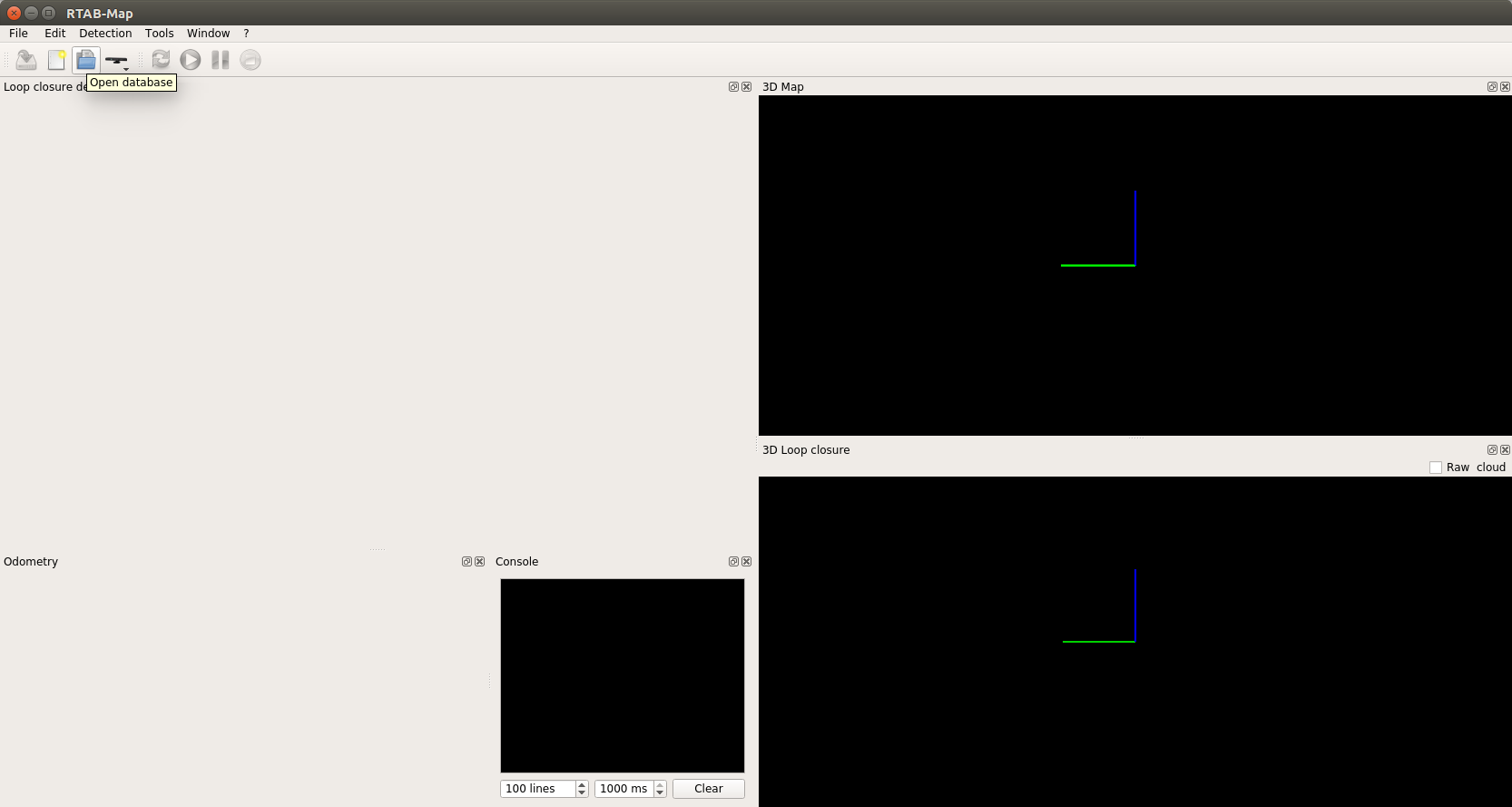

We can press the Pause button to pause and click the Stop button to complete the process. We can also exit the program and save our progress in the database. When closing, the program will show a pop-up window with a suggestion to save the changes. In the future, we can resume the process by running the program and selecting an existing database.

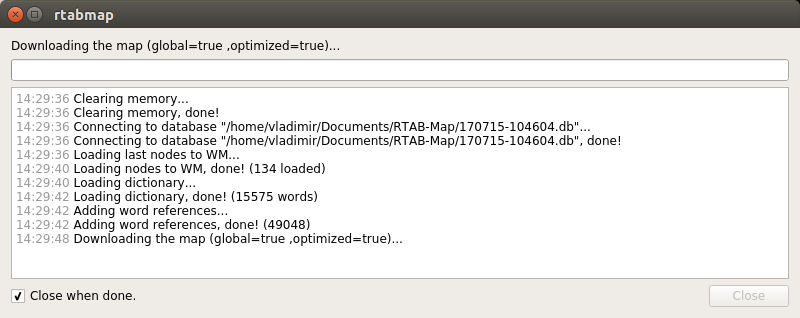

We will be offered to download the map for the database.

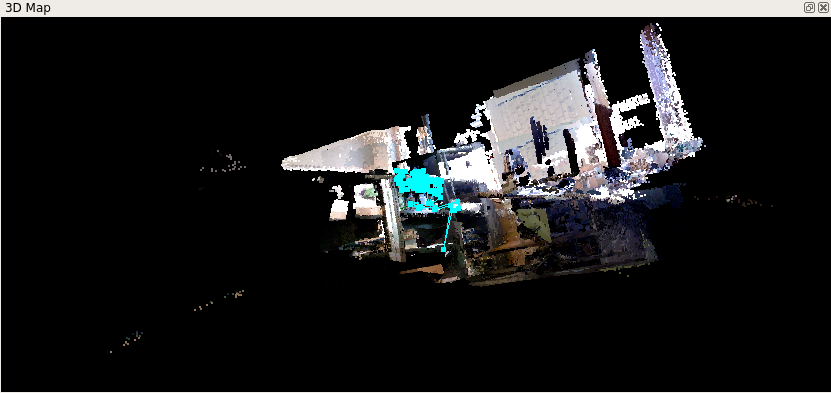

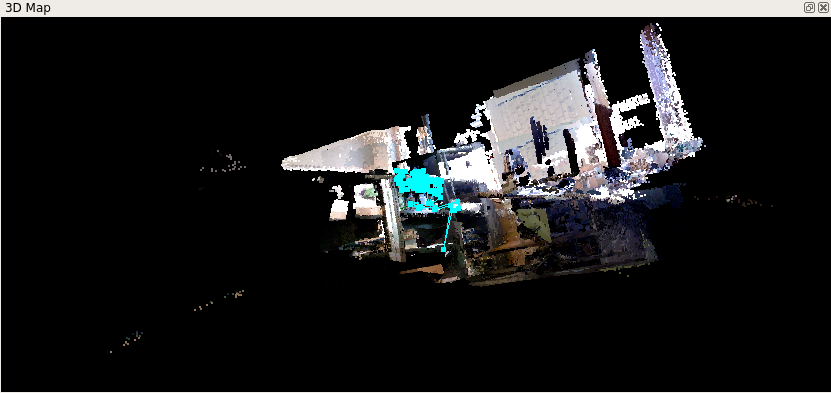

I got this card (I rented part of the room):

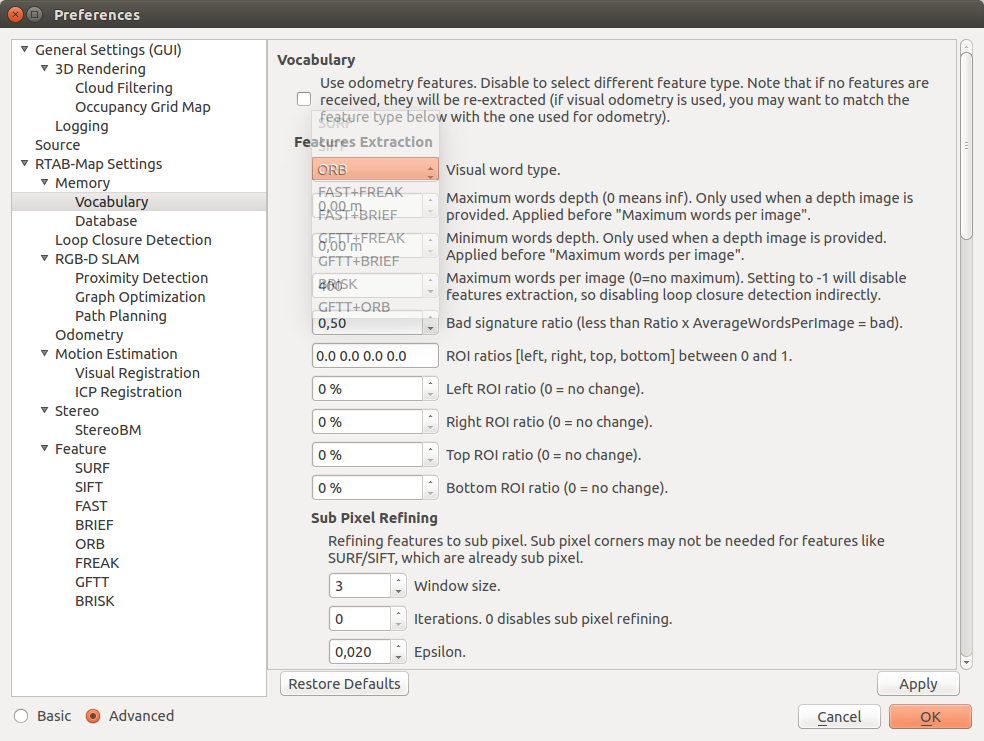

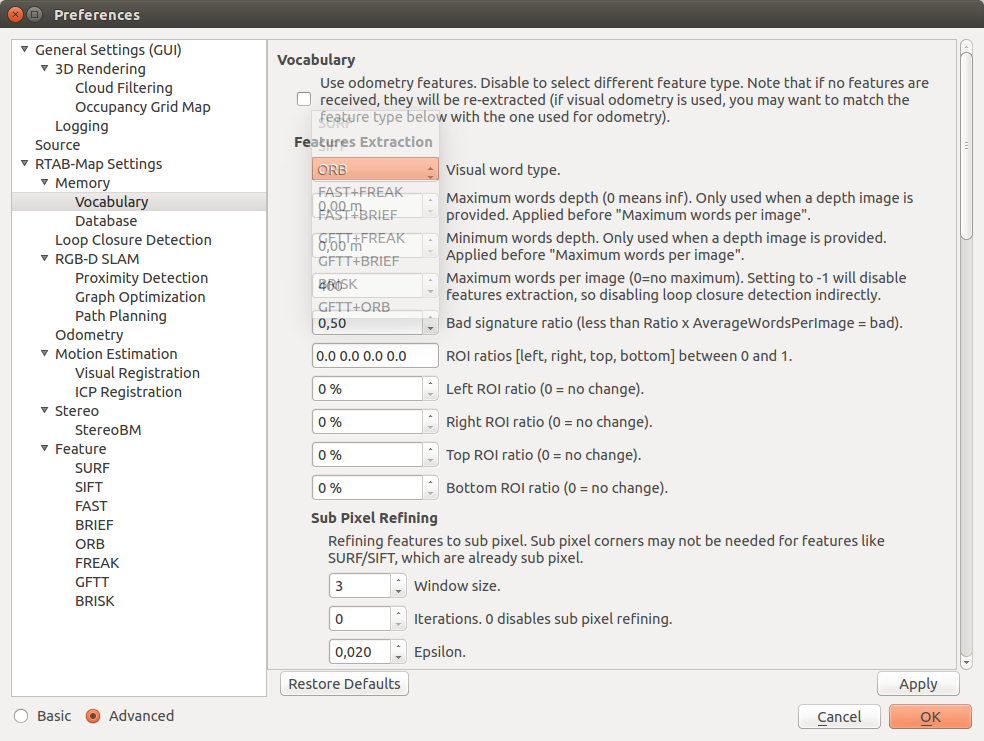

In the rtabmap settings (in the top menu Window → Preferences), you can select the odometry calculation algorithm (used descriptor of visual features). To do this, in the settings, select: RTAB-Map Settings → Memory → Vocabulary near the Feature selection item and select an algorithm from the Visual word type drop-down list:

In my experiments, odometry with the GFTT + BRIEF visual dictionary works best, BRISK showed the worst result (it was not possible to get loop detection at all). When using GFTT + BRIEF, the cycle detection was obtained immediately after a complete rotation with the camera around the room. The ORB algorithm is used by default and gives not very good results.

rtabmap supports a fairly wide range of cameras (RGB-D cameras, Intel RealSense, ASUS Xtion, Kinect v1 and v2, as well as stereo cameras Bumblebee2 and ZED camera) and several possible scenarios for building a map using various camera, lidar and IMU sensor combinations (tutorials can be see here ).

You can read about RGBDSLAM in detail on the official ROS page and on the github page .

Install RGBDSLAM from source:

Install the libg2o library:

where ros_version is the ROS version (hydro, indigo or kinetic).

Compile RGBDSLAM in the catkin working directory:

I was not able to install RGBDSLAM on the Raspberry Pi due to a problem with Qt, so here I consider the operation of the algorithm only on ROS Indigo with the Microsoft Kinect camera.

Run rosmaster:

RGBDSLAMv2 can be run in two ways. First, using the launch file:

Alternatively, openni_launch and the RGBDSLAMv2 node:

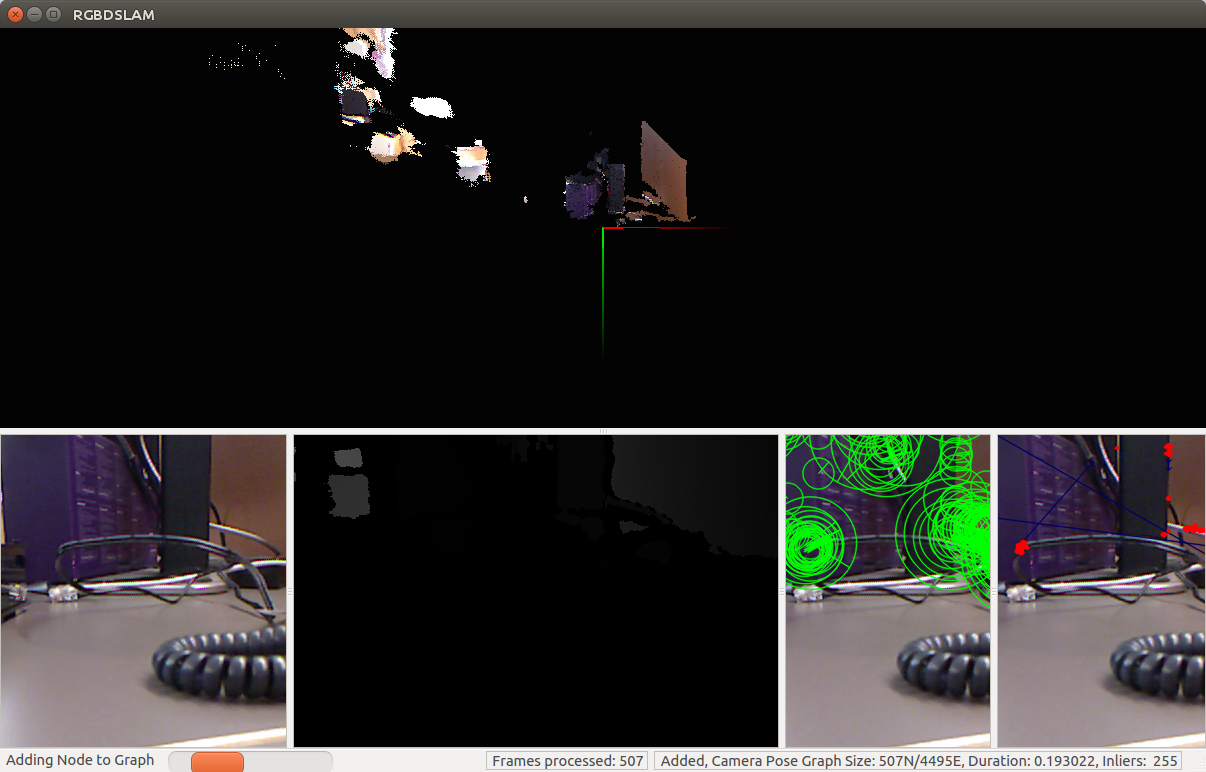

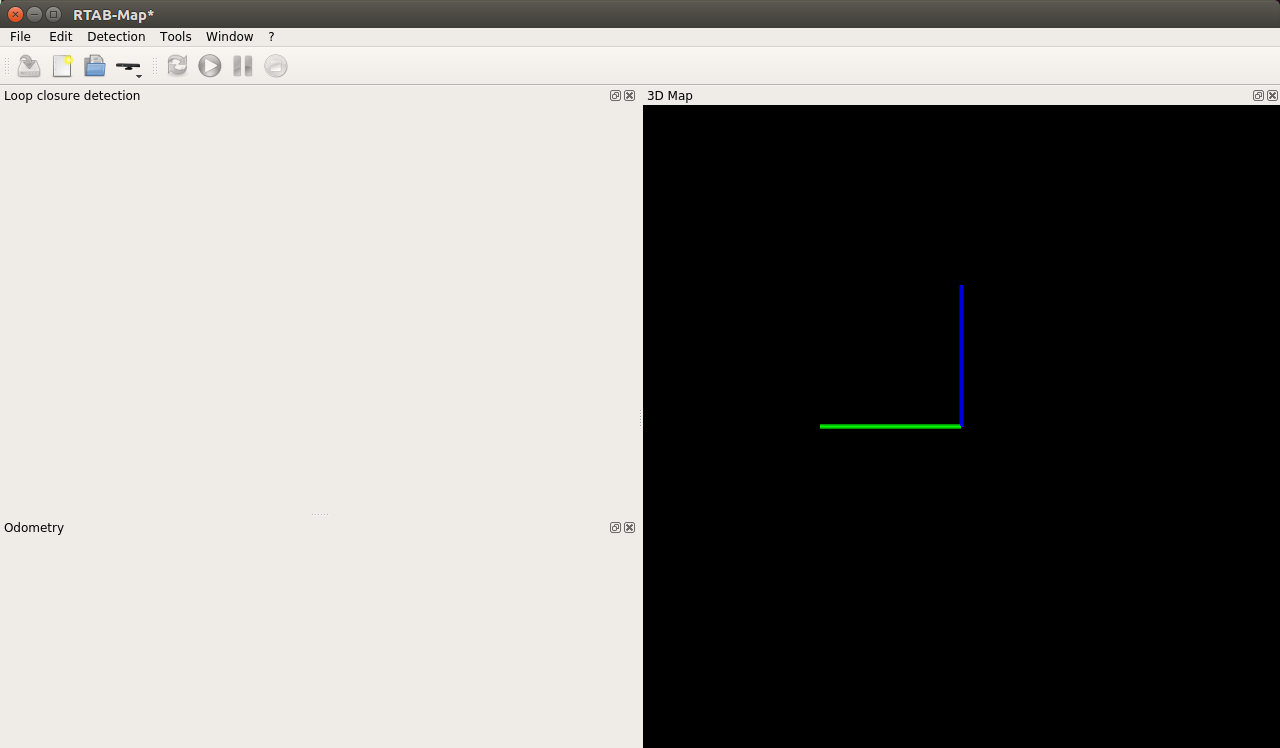

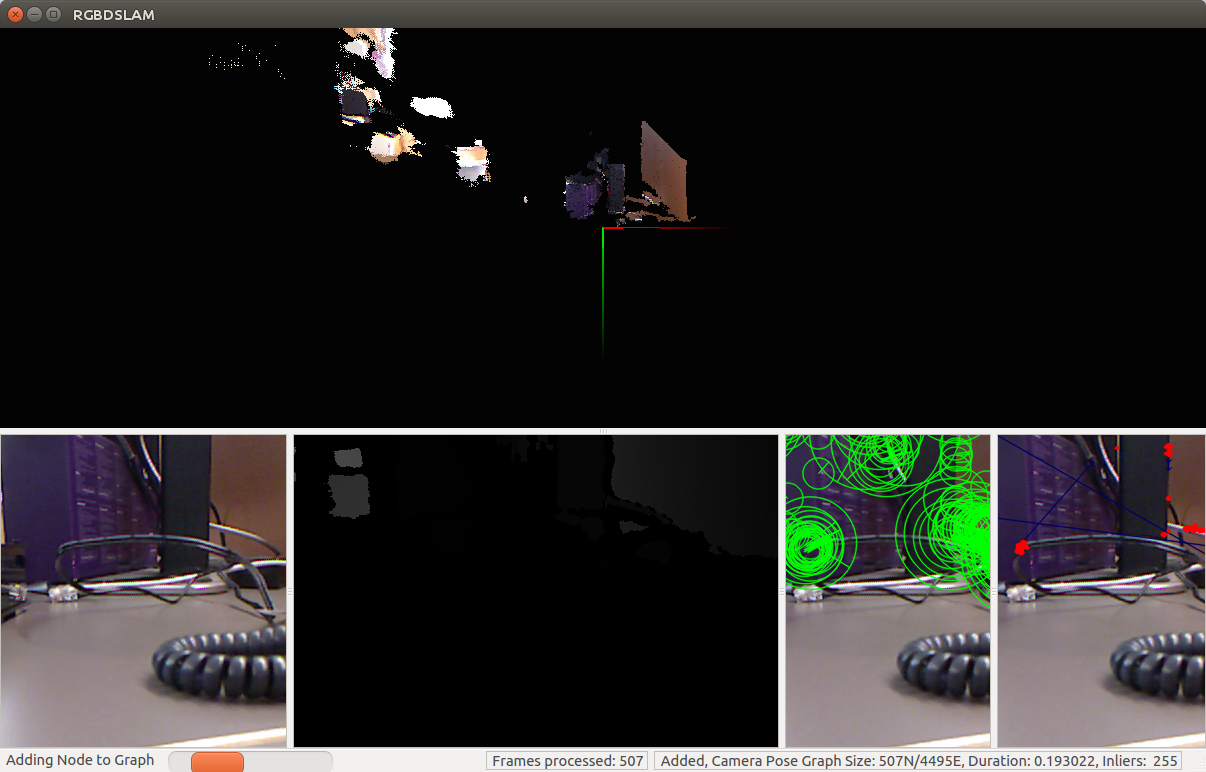

We will see a similar screen:

The construction of the map will begin immediately after the launch of the program. RGBDSLAM is quite resource-intensive, my top command showed 155% of the processor load for the rgbdslam process.

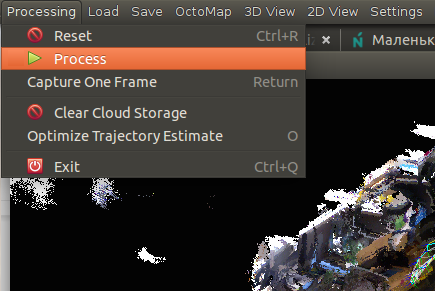

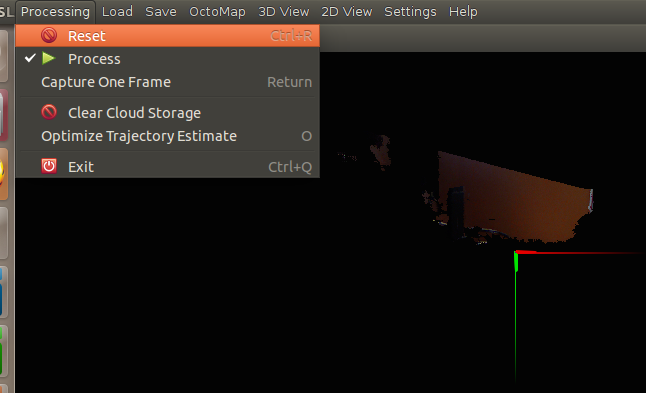

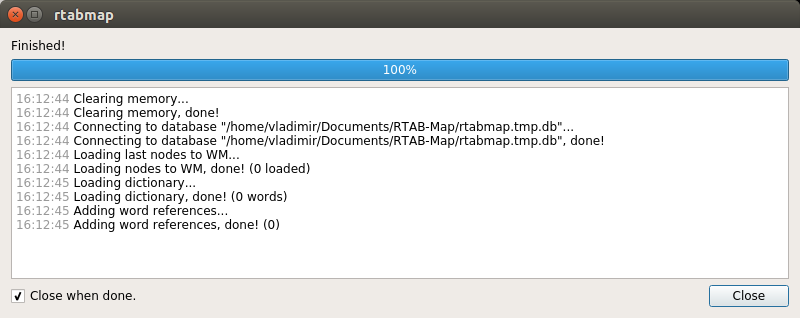

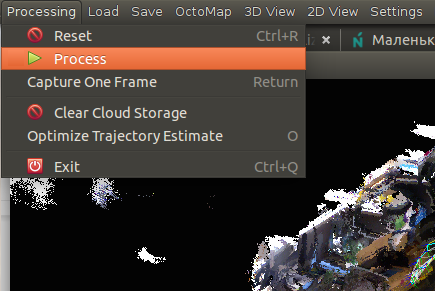

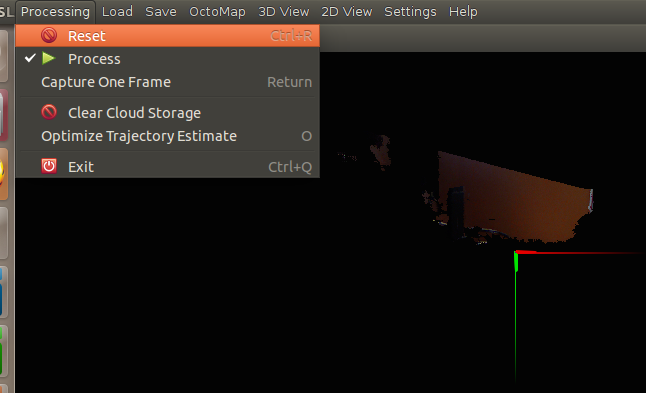

When the construction of the map is completed, it is necessary to stop processing the stream from the camera by unchecking the Processing checkbox in the Processing menu:

Map construction completed:

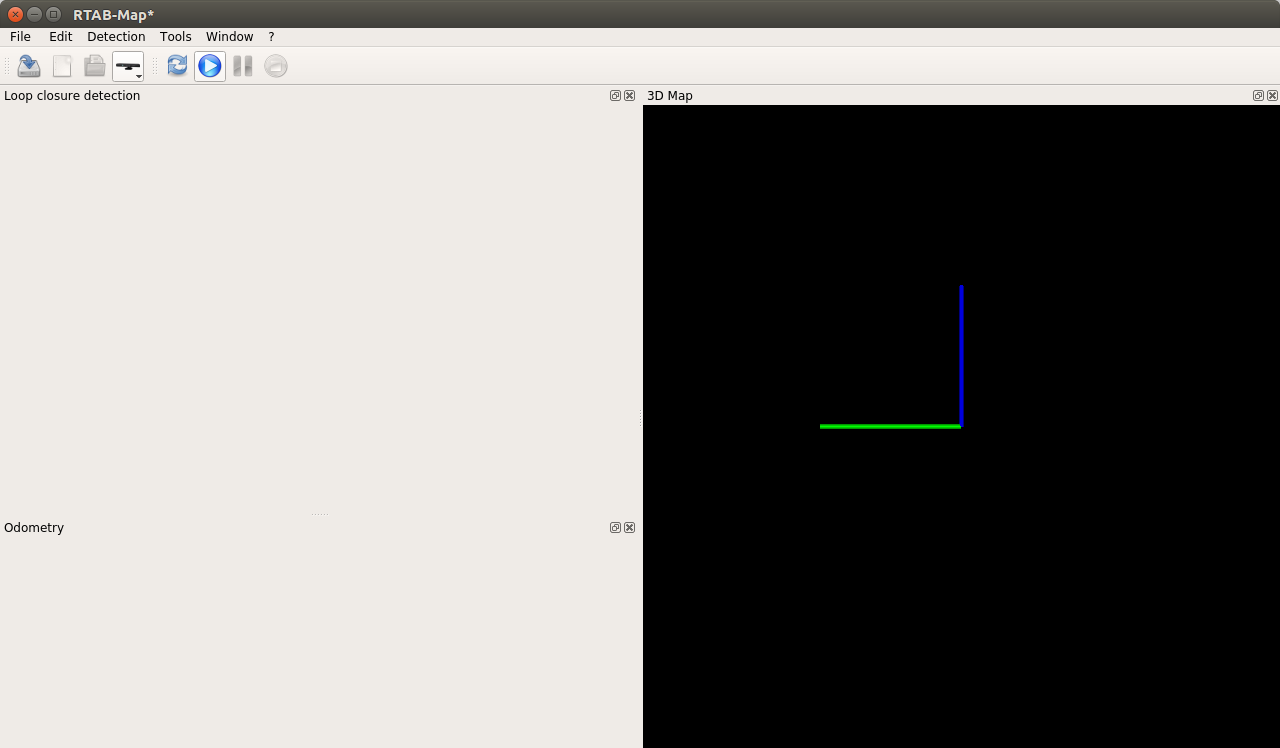

We can save the map. You can also reset the current progress in the map building procedure by selecting Reset from the Processing menu:

Detailed instructions for using RGBDSLAM can be found on the official page .

Experiments have shown that these SLAM algorithms are quite suitable for use in ROS-based robotic projects with Microsoft Kinect RGBD cameras and result in a good map of the area. Unfortunately, not all methods work on ARM platforms (at least on the Raspberry Pi). The advantage of the rtabmap tool is the ability to flexibly adjust various parameters at will.

Thus, we considered the two most well-known SLAM algorithms for RGBD cameras, which have their own implementation in ROS. Those interested can get acquainted with the various scenarios of using these algorithms on the official pages (unfortunately, only in English) and apply them in their projects. I wish everyone good luck in the experiments and will be happy to answer any of your questions in the comments. See you again!

rtabmap

About the project can be read in detail on the official page .

I described the rtabmap installation procedure in detail in a previous article . For example, on a Ubuntu 14.04 system, the installation will look like this:

')

sudo apt-get install ros-indigo-rtabmap ros-indigo-rtabmap-ros However, on Raspberry Pi 3 with the Kinetic ROS installed, this method will not work, since the rtabmap build is disabled in the Kinetic release for ARM due to a problem with libpcl-dev (for more information about the problem, read here ). Therefore, we compile it from source by following the instructions on the rtabmap page:

source /opt/ros/kinetic/setup.bash cd ~ git clone https://github.com/introlab/rtabmap.git rtabmap cd rtabmap/build cmake .. [<---double dots included] make If swap is not available on the Raspberry Pi, then virtual memory may not be enough at compile time:

virtual memory exhausted: Cannot allocate memory You need to add the swap memory according to the instructions from here and re-run the compilation.

The compilation should take about an hour. After this, perform the installation:

sudo make install Install the rtabmap_ros package in our catkin working directory:

cd ~/catkin_ws git clone https://github.com/introlab/rtabmap_ros.git src/rtabmap_ros catkin_make -j1 source devel/setup.bash When running catkin_make, an error may occur due to the lack of the image_transportConfig.cmake file. In this case, you need to compile the image_transport package in the working directory catkin_ws:

cd src git clone https://github.com/ros-perception/image_common.git cd ~/catkin_ws catkin_make -j1 source devel/setup.bash In order to avoid errors with loading of dynamic libraries when starting the rtabmap_ros nodes, it is recommended to add the following line to ~ / .bashrc:

echo 'export LD_LIBRARY_PATH=$LD_LIBRARY_PATH:/usr/local/lib' >> ~/.bashrc Using rtabmap to build a map

Run rtabmap:

rtabmap

Create a new database: File → New database:

For the Kinect camera, the default driver is OpenNI-PCL:

Let's start the map building procedure by clicking on the “Start” button:

rtabmap can also be used with rviz:

roslaunch openni_launch openni.launch depth_registration:=true roslaunch rtabmap_ros rtabmap.launch rtabmapviz:=false rviz:=false rtabmap_args:="--delete_db_on_start" The rviz window opens:

Add an Odometry display and select the “/ rtabmap / odom” topic. After some camera movement in space, we get a similar picture in rviz:

After we complete the room with the camera, we get:

If we move around the room slowly, smoothly moving the camera without sudden movements, then we can get a fairly accurate map:

We can add an OccupancyGrid type Map display and select the “/ rtabmap / proj_map” topic to visualize the flat map (as if we were using gmapping):

When you close the rviz window, the database and map are automatically saved to disk (the path is displayed in a string in the terminal).

Using rtabmap on Raspberry Pi 3 with ASUS Xtion Pro Live

Run rtabmap:

rtabmap The window already familiar to us will open. Create a database: File -> New database:

Select the OpenNI2 driver for the Xtion Pro Live camera:

Start the procedure of building a map with the Start button:

We get:

After some time in the process of moving with the camera:

On Raspberry Pi, the rtabmap process turned out to be quite resource-intensive (I used 250 - 300% CPU). Sometimes the window darkened, once rtabmap fell with a Segmentation fault error.

When using the default settings, rtabmap works extremely slowly on the Raspberry Pi, the frame rate is very low. To work effectively, you need to adjust the input frame rate. To do this, open Window → Preferences → General settings (GUI) in the top menu and press the “Load settings ...” button. Set the value of 30 Hz for “input rate”:

About setting parameters in rtabmap you can read more here .

Now the process of building a map is much faster:

The video shows how odometry data is lost once (there is a red background around the found Loop closure detection candidate) and I reset the odometry via Detection -> Reset odometry. Loss of odometry is often associated with an insufficient number of features found (for example, poorly textured surfaces) and too fast camera movement. Our map is completely cleared and everything starts anew.

The map is almost ready:

Our task here is to obtain a successful loop closure detection. In case of successful detection of cycles, the candidate is highlighted with a green background.

You can also show the result of loop detection in the “3D loop closure” panel. To do this, in the top menu, select: Window → Show view → 3D Loop closure. Using the buttons with numbers from 1 to 5, we can change the format of the representation of point clouds (arbitrary colors, color gradient along the axes (axis oriented colors) or RGB):

We can press the Pause button to pause and click the Stop button to complete the process. We can also exit the program and save our progress in the database. When closing, the program will show a pop-up window with a suggestion to save the changes. In the future, we can resume the process by running the program and selecting an existing database.

We will be offered to download the map for the database.

I got this card (I rented part of the room):

In the rtabmap settings (in the top menu Window → Preferences), you can select the odometry calculation algorithm (used descriptor of visual features). To do this, in the settings, select: RTAB-Map Settings → Memory → Vocabulary near the Feature selection item and select an algorithm from the Visual word type drop-down list:

In my experiments, odometry with the GFTT + BRIEF visual dictionary works best, BRISK showed the worst result (it was not possible to get loop detection at all). When using GFTT + BRIEF, the cycle detection was obtained immediately after a complete rotation with the camera around the room. The ORB algorithm is used by default and gives not very good results.

rtabmap supports a fairly wide range of cameras (RGB-D cameras, Intel RealSense, ASUS Xtion, Kinect v1 and v2, as well as stereo cameras Bumblebee2 and ZED camera) and several possible scenarios for building a map using various camera, lidar and IMU sensor combinations (tutorials can be see here ).

RGBDSLAM

Installing RGBDSLAMv2

You can read about RGBDSLAM in detail on the official ROS page and on the github page .

Install RGBDSLAM from source:

cd ~/catkin_ws/src git clone https://github.com/felixendres/rgbdslam_v2.git Install the libg2o library:

sudo apt-get install ros-<ros_version>-libg2o where ros_version is the ROS version (hydro, indigo or kinetic).

Compile RGBDSLAM in the catkin working directory:

cd ~/catkin_ws catkin_make source devel/setup.bash rosdep install rgbdslam I was not able to install RGBDSLAM on the Raspberry Pi due to a problem with Qt, so here I consider the operation of the algorithm only on ROS Indigo with the Microsoft Kinect camera.

Using RGBDSLAMv2

Run rosmaster:

roscore RGBDSLAMv2 can be run in two ways. First, using the launch file:

roslaunch rgbdslam openni+rgbdslam.launch Alternatively, openni_launch and the RGBDSLAMv2 node:

roslaunch openni_launch openni.launch roslaunch rgbdslam rgbdslam.launch We will see a similar screen:

The construction of the map will begin immediately after the launch of the program. RGBDSLAM is quite resource-intensive, my top command showed 155% of the processor load for the rgbdslam process.

When the construction of the map is completed, it is necessary to stop processing the stream from the camera by unchecking the Processing checkbox in the Processing menu:

Map construction completed:

We can save the map. You can also reset the current progress in the map building procedure by selecting Reset from the Processing menu:

Detailed instructions for using RGBDSLAM can be found on the official page .

Experiments have shown that these SLAM algorithms are quite suitable for use in ROS-based robotic projects with Microsoft Kinect RGBD cameras and result in a good map of the area. Unfortunately, not all methods work on ARM platforms (at least on the Raspberry Pi). The advantage of the rtabmap tool is the ability to flexibly adjust various parameters at will.

Thus, we considered the two most well-known SLAM algorithms for RGBD cameras, which have their own implementation in ROS. Those interested can get acquainted with the various scenarios of using these algorithms on the official pages (unfortunately, only in English) and apply them in their projects. I wish everyone good luck in the experiments and will be happy to answer any of your questions in the comments. See you again!

Source: https://habr.com/ru/post/373707/

All Articles