Raspberry Pi3 vs DragonBoard. We respond to criticism

Author: Nikolai Khabarov, Embedded Expert DataArt, Evangelist for Smart Home Technologies.

The test results presented in the article on comparing the performance of the Raspberry Pi3 and DragonBoard boards when working with applications in Python raised doubts among some colleagues.

In particular, the following comments appeared under the material:

')

“... I did benchmarks between 32-bit ARMs, between 64-bit and between Intel x86_64 and all the numbers were comparable. at least between 32 bit and 64 bit ARMs, the difference was tens of percent, and not many times. well, or are you just different purely --cpu-max-prime indicated ".

"Amazing results usually mean an experimental error."

“There is a suspicion that there is some kind of error in the CPU test. I personally tested different ARMs with sysbench, but there wasn’t a close 25 times difference. In principle, good ARM media in the CPU test can be several times more efficient than the BCM2837, but not as much as 25 times. I suspect that the test for pi was made in one thread, and for DragonBoard in 4 threads (4 cores). ”

This is the cpu test from the sysbench test suite. The answer to these assumptions was so voluminous that I decided to publish it in a separate post, at the same time explaining why in some tasks the difference can be so enormous.

To begin with, the commands with all the arguments for the test were listed in the table of the original article. Of course, there is no argument for --cpu-max-prime or other arguments forcing you to use multiple processor cores. In terms of the 10-20% difference, perhaps, there was a test of the overall system performance, which on real applications (not always, of course, but most likely) just shows 10-20% of the difference between 32-bit and 64 -bit modes of the same processor.

In principle, it is possible to read how mathematical operations are implemented with a bit depth of a higher word width, for example, here . It makes no sense to rewrite algorithms. Say, multiplication will take about 4 times more CPU cycles (three multiplications + addition operations). Naturally, this value may vary from processor to processor and depending on compiler optimization. For example, for an ordinary x86 processor there may be no difference, since with the advent of the MMX instruction set, it became possible to use 64-bit registers and 64-bit calculations on 32-bit processor. And with the advent of SSE, 128-bit registers appeared. If the program is compiled using such instructions, then it can be performed even faster than 32-bit calculations, a difference of 10-20% or even more can be observed in the other direction, since the same MMX instruction set can perform several operations at the same time.

But it’s still a synthetic test that explicitly uses 64-bit numbers (source codes are available here ), and since the package is taken from the official repository, it’s not a fact that all the possible optimizations were included when building the package (all because of compatibility with other ARM processors). For example, ARM processors since v6 support SIMD, which, like MMX / SSE on x86, can work with 64-bit and 128-bit arithmetic. We did not set out to squeeze as many “parrots” out of the tests as possible, we are interested in the real state of affairs when installing applications out of the box, since we don’t want to reinstall half of the operating system.

Still do not believe that even "out of the box" speed on the same processor can not differ tenfold depending on the processor mode?

Well, let's take the same DragonBoard.

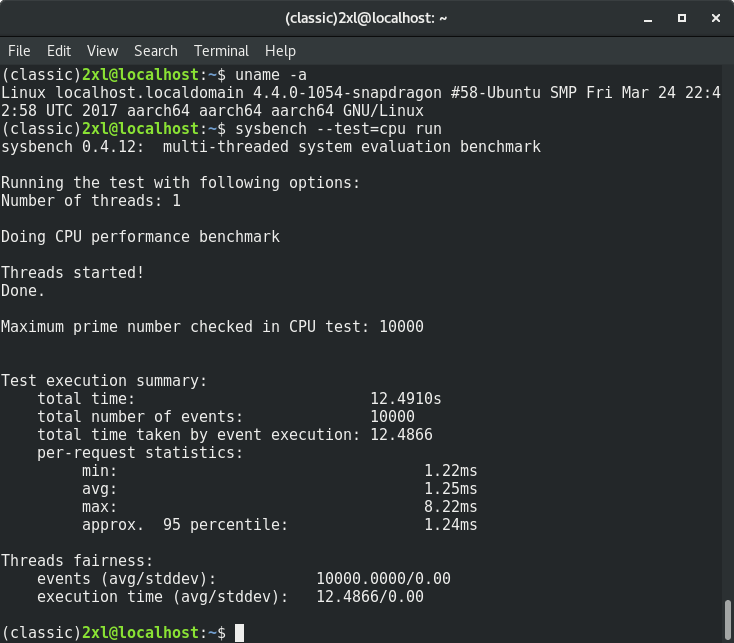

sysbench --test=cpu run This time with screenshots:

12.4910 seconds. Ok, now on the same board:

sudo dpkg --add-architecture armhf sudo apt update sudo apt install sysbench:armhf With these teams, we installed the 32-bit version of the sysbench package on the same DragonBoard board.

And again:

sysbench --test=cpu run And here is a screenshot of it (apt install output is visible at the top):

156.4920 seconds. The difference is more than 10 times. Since we are talking about such cases, let's see in more detail why. Let's write just such a simple program in C:

#include <stdint.h> #include <stdio.h> int main(int argc, char **argv) { volatile uint64_t a = 0x123; volatile uint64_t b = 0x456; volatile uint64_t c = a * b; printf("%lu\n", c); return 0; } We use the volatile keyword so that the compiler does not count everything in advance, namely, it would assign variables and honestly multiply two arbitrary 64-bit numbers. Let's build a program for both architectures:

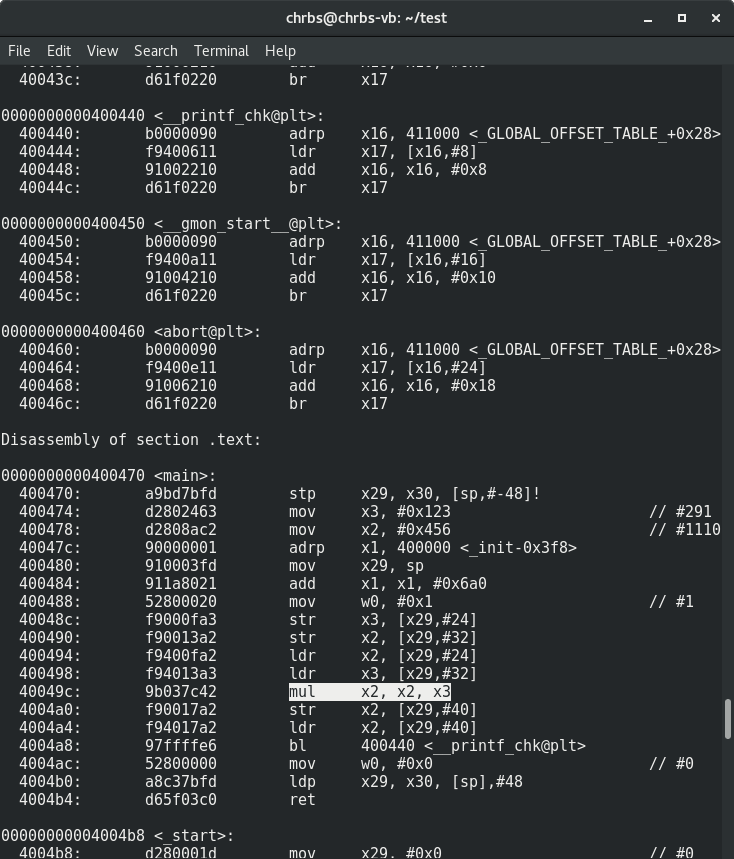

arm-linux-gnueabihf-gcc -O2 -g main.c -o main-armhf aarch64-linux-gnu-gcc -O2 -g main.c -o main-arm64 And now let's look at the arm64 disassembler:

$ aarch64-linux-gnu-objdump -d main-arm64

The instruction mul is quite predictably used. And now for armhf:

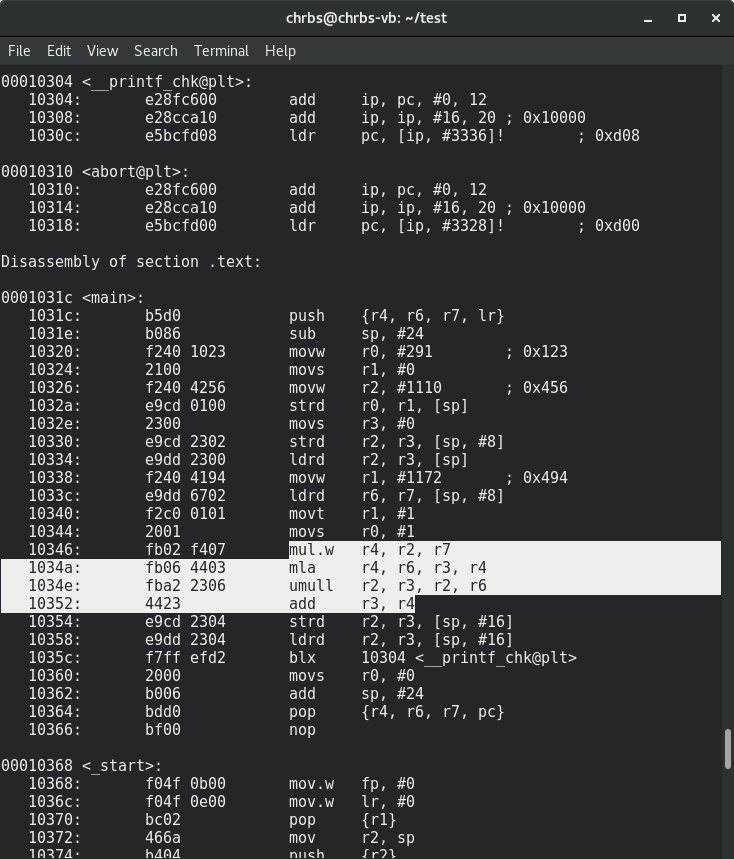

$ arm-linux-gnueabihf-objdump -d main-armhf

As you can see, the compiler has applied one of the methods of long arithmetic. And as a result, we are witnessing a whole footwoman that uses quite heavyweight instructions like mul, mla, umull. Hence the multiple performance differences.

Yes, you can still try to compile, including some set of instructions, but then we may lose compatibility with some processor. Again, again, we were interested in the real speed of the entire board with real binary packages. We hope this rationale, why such a difference was obtained on a particular cpu test, is enough. And you will not be confused by such gaps in some tests and, possibly, some application programs.

Source: https://habr.com/ru/post/373643/

All Articles