AlphaGo vs. Ke Jie: assessments by professional Go players

In March 2016, one of the strongest human Go players lost to a computer system for the first time while playing without a handicap. Until then, a win with a four-stone handicap was considered the best result a program had achieved, and play on equal terms still seemed far off, perhaps a decade away. Then AlphaGo, a system from the British company DeepMind, suddenly appeared on the scene and beat Lee Sedol, one of the most famous players of recent years, 4:1.

A year ago, the South Korean 9-dan professional lost to the computer system of a Google division, and in the eyes of many Go joined the category of games in which machines play stronger than the best humans. After that, AlphaGo almost disappeared from public view. In April of this year DeepMind made an announcement: AlphaGo would play Ke Jie, the player at the top of the ratings. He had announced his intention to play against the AI last summer, but the exact date of the match was set only this year. DeepMind also promised that the program would play against five masters at once.

The games took place on the scheduled days, and their result showed conclusively that AlphaGo's level is significantly above human. The fourth game of the Lee Sedol vs. AlphaGo match will probably remain the last human victory over this AI: once the games were over, the developers announced that the system was retiring from Go.


We discussed the level of this version of the program with two professional players, as well as the future of the relationship between humans and computer Go systems.

In the photo: five Go masters, almost ready to admit defeat, look perplexed. Their opponent, AlphaGo, has begun to play lazily, as if anticipating victory.

Why does an American company need an Asian board game?

Google proudly demonstrates the achievements of its British unit DeepMind. The search giant bought the company in 2014 for half a billion US dollars. DeepMind Technologies Limited focuses on artificial intelligence: its specialists use machine learning to create algorithms that, for example, master video games. The work is not limited to Atari 2600 arcade classics: DeepMind is also trying to apply its systems to the analysis of medical data.

However, the entire Google business is built on smart systems to one degree or another. In 2016, 88% of the revenue of the Alphabet holding came from advertising: Google AdWords, video ads on YouTube, and other tools. Contextual advertising depends on correctly guessing the user's interests, so the Internet giant collects and analyzes huge amounts of user data. Many of its projects contain elements of AI: the voice assistant in Android and the Home speaker, the camera algorithms of the latest Pixel smartphone, neural machine translation in Google Translate, the machine vision of Waymo's self-driving cars. Even a smartphone keyboard can claim to be “smart.”

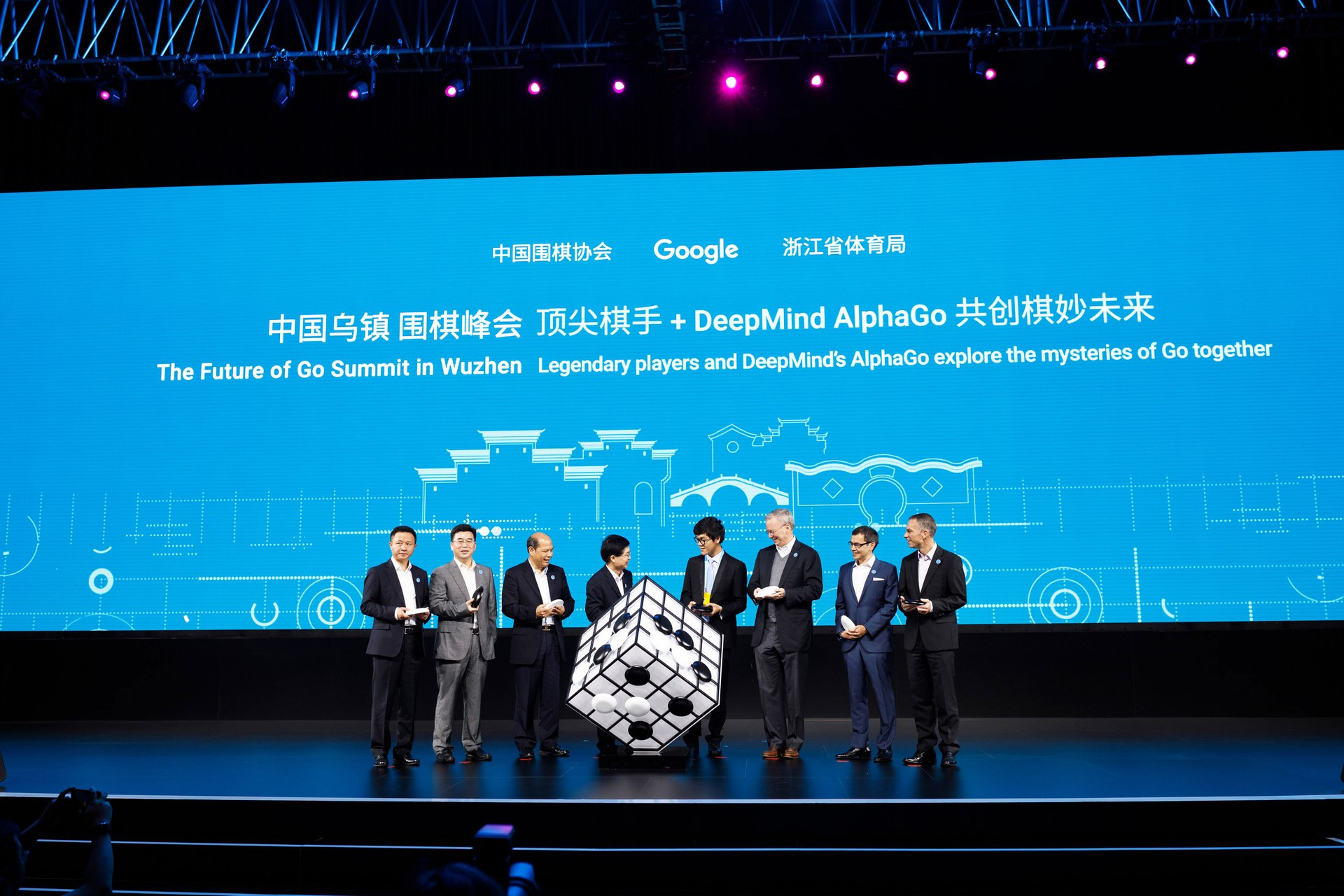

AlphaGo can be called a demonstration of the power of the AI initiatives inside the search giant. It is not surprising that the matches are staged so prominently. Last time the games took place at the Four Seasons Hotel in Seoul. This time Google, together with the Chinese Weiqi Association and the local sports bureau, organized the Future of Go Summit in the town of Wuzhen in Zhejiang Province. This is fairly significant cooperation with the Chinese authorities, given that Google services are blocked in mainland China. The prize money went up as well: Lee Sedol could have won a million dollars, while Ke Jie was promised one and a half million.

Noah Sheldon, Wired

Training

20 years ago, Kasparov lost a match to the chess computer Deep Blue for the first time. A decade later, the superiority of computer algorithms over humans in chess no longer raised questions. Why had computers not conquered the Asian game sooner?

Outwardly, Go is the simpler game: two players place stones of two colors, each trying to fence off more territory than the opponent. But on the standard 19 × 19 board, the number of possible stone positions is a googol (10^100) times larger than the number of positions of pieces on a chessboard. That alone rules out brute-force search.
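The scale of that gap can be checked with a few lines of arithmetic. The sketch below is only an illustration: `3 ** 361` is a simple upper bound that also counts illegal positions, and the chess figure `CHESS_POSITIONS_ESTIMATE` is an assumed, commonly quoted order of magnitude, not a number taken from the article.

```python
BOARD_POINTS = 19 * 19  # 361 intersections on the standard board

# Each point is empty, black, or white, so 3**361 is an upper bound on
# board configurations (it also counts positions that are not legal).
go_upper_bound = 3 ** BOARD_POINTS

# Assumption: a rough order of magnitude for the number of chess positions.
CHESS_POSITIONS_ESTIMATE = 10 ** 47
GOOGOL = 10 ** 100


def order_of_magnitude(n: int) -> int:
    """Return e such that 10**e <= n < 10**(e + 1) for a positive integer n."""
    return len(str(n)) - 1


print(order_of_magnitude(go_upper_bound))
print(go_upper_bound > GOOGOL * CHESS_POSITIONS_ESTIMATE)
```

Under these assumptions the Go bound stays larger even after the chess estimate is multiplied by a googol, which is why exhaustive search is not an option.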

There are more possible moves in Go than in chess, and soon after the start of the game almost all of the 361 points on the board have to be considered. The opening moves, the fuseki, quickly lead into original positions. In chess, pieces leave the board; in Go, stones are added. Many of the algorithms typical of chess engines cannot be applied. Before AlphaGo, the best systems used expert databases of good moves and evaluated moves with tree search or the Monte Carlo method, but even they could beat only amateurs, not professionals.

DeepMind improved on the existing approach. Policy and value neural networks were added to the Monte Carlo method; they were trained on 160,000 games from the KGS server and then on games the system played against itself. The resulting product was first compared with other computer systems and then with a human: three-time European champion Fan Hui played one of the early versions of AlphaGo. The human lost all five games.
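One way to picture how a policy network and a value estimate can steer Monte Carlo tree search is the move-selection step. The following is a minimal sketch, loosely following the selection rule described in DeepMind's 2016 Nature paper; the `Edge` class, the `select_move` function, the `c_puct` default, and the toy numbers are illustrative assumptions, not AlphaGo's actual code.

```python
import math
from dataclasses import dataclass


@dataclass
class Edge:
    """Search statistics for one candidate move (hypothetical structure)."""
    prior: float            # probability the policy network assigns to the move
    visits: int = 0         # simulations that have passed through the move
    value_sum: float = 0.0  # sum of position evaluations backed up so far

    def mean_value(self) -> float:
        return self.value_sum / self.visits if self.visits else 0.0


def select_move(edges: dict[str, Edge], c_puct: float = 1.0) -> str:
    """Pick the move with the best mean value plus prior-weighted exploration bonus."""
    total_visits = sum(edge.visits for edge in edges.values())

    def score(edge: Edge) -> float:
        # The +1 under the square root is an assumption of this sketch, so that
        # priors still rank moves before any simulation has been run.
        bonus = c_puct * edge.prior * math.sqrt(total_visits + 1) / (1 + edge.visits)
        return edge.mean_value() + bonus

    return max(edges, key=lambda move: score(edges[move]))


# Toy numbers, not taken from any real game.
edges = {
    "D4": Edge(prior=0.6, visits=10, value_sum=5.0),
    "Q16": Edge(prior=0.3, visits=1, value_sum=0.6),
    "K10": Edge(prior=0.1),
}
print(select_move(edges))
```

With these toy numbers the rarely visited `Q16` is selected: the bonus shrinks as a move accumulates visits, so the search keeps trying promising but under-explored moves instead of committing early to the one with the highest prior.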

And this was only the beginning of testing the AI against humans. The level of Go in Europe is lower than in Asia, the birthplace of the game. So only after its victory over the famous Korean Lee Sedol could AlphaGo be recognized as a system that plays better than humans.

In some ways, the Future of Go Summit was reminiscent of the events of 20 years ago. IBM pitted a specialized chess computer against a human, and Google showed off not only the power of its software but also a specialized machine learning module, the Tensor Processing Unit (TPU).

Convolutional neural networks are, as a rule, computed on graphics accelerators: video cards connected via PCIe and originally designed for computing and rendering graphics in games. The architecture of video cards, with hundreds or thousands of cores on a chip, suits highly parallel tasks, at which CPUs do worse. Both AMD and Nvidia produce specialized professional “number crunchers,” and Intel has caught up with its Xeon Phi line of coprocessors.

One of the versions of TPU.

Google claims that it has created an application-specific integrated circuit (ASIC) that makes much more powerful neural network architectures possible. According to the company, in machine learning tasks the TPU can outperform traditional video accelerators and central processors by up to 70 times, and by up to 196 times in computations per watt.

In March of last year, an entire computing cluster played against Lee Sedol. Before and during the match, the media repeated that it consisted of 1,920 processor cores and 280 video accelerators. There was, of course, no way to verify this: AlphaGo ran in the Google cloud, and optical fiber was laid to the Four Seasons Hotel for the connection. Only in May 2016 did Google admit that it had been using TPUs in its data centers for more than a year. The secret sauce of the AlphaGo Lee version turned out to be 50 TPU boards.

The servers that beat the Korean 9-dan master.

Against Ke Jie, a single machine with just one TPU module was fielded. Did that make things any easier for Ke?

First game

Reuters / Stringer, Quartz

Recorded broadcast in English

Recorded broadcast with comments in Russian (Ilya Shikshin and Ksenia Lifanova)

Game moves

After December 29, 2016, an unexpectedly strong player appeared on the Korean server Tygem and the Chinese server Fox. He called himself Magister or Magist, then changed his nickname to Master and kept crushing opponents. The 9-dan professional Gu Li offered a reward of 100 thousand yuan (about $14.4 thousand) to anyone who beat Master. But the unknown player never lost: he won 60 games in a row against high-level professionals. Both Chinese and Korean AI developers were trying to replicate and surpass the success of AlphaGo, so perhaps this was their attempt?

On January 4, 2017, DeepMind head Demis Hassabis admitted that Magister, or Master, was a test version of AlphaGo that was being tried out in informal online matches. Hassabis thanked everyone who had taken part in the test. Notably, even then Ke Jie lost three games to AlphaGo.

The AlphaGo version that played over the New Year holidays ran on a single machine with only one TPU board installed. On May 23, roughly the same configuration was fielded against Ke Jie. It “thought” 50 moves ahead and managed to consider 100 thousand moves per second.

On May 23, in the first game, Ke chose the black stones, which means moving first. He opened unusually, with strategies borrowed from AlphaGo's January games. Commentators pointed out that Ke also borrows Master's strategies in ordinary games against people: those 60 games had a wide impact on the Go world. AlphaGo responded confidently and moved faster than expected. After three and a half hours, commentators began saying that Ke Jie had little chance of catching up. An hour later the human had lost: AlphaGo won by half a point.

AlphaGo continued to play against itself and learn. According to DeepMind, the version that played Lee Sedol was three stones stronger than the version that played Fan Hui (that is, a three-stone handicap would be needed for an even game), and the AlphaGo Master version is three stones stronger than AlphaGo Lee.

After the first game, Ke said that he would never again play against the machine one-on-one, and that the future belongs to AI. In a letter titled “The Last Stand,” the 19-year-old champion admitted that a soulless computer system might be smarter than him, but said he would not give up.

Did Ke Jie try to play something special against the computer? According to seven-time European champion Alexander Dinerstein (3-dan professional, 7-dan EGF), these attempts were unsuccessful:

“Like Lee Sedol in the first game of his match, Ke tried to play unconventionally in the opening, so that the computer would have to think for itself. But Go differs from chess: chess openings are worked out dozens of moves deep, while in Go you can reach a completely unique position that has never occurred in practice. In chess, these are called ‘irregular openings,’ and players try to punish the opponent for unorthodox play. And the winner is not the one with the better memory but the one who understands the game better.”

Ilya Shikshin (1-dan professional, 7-dan EGF) believes that in the first game Ke Jie was let down by a “human” style of play:

“In the first game Ke Jie tried to play like a human. As a result, it quickly became clear that Ke was behind and had no chance of catching up. In the second and third games, Ke tried to complicate the position on the board as much as possible in order to confuse the program. However, he did not manage to provoke AlphaGo into a mistake.”

Second game

Recorded broadcast in English

Recorded broadcast with commentary in Russian (Ilya Shikshin and Timur Sankin)

Game moves

Ke Jie holds first place in several ratings, for example Go Ratings. Naturally, that position makes him the benchmark for computer Go systems. To show that a machine can play stronger than humanity, it suffices to beat the strongest human. This is what AlphaGo needed to do within three games in order to go down in history. Before the Future of Go Summit, Ke had lost several unofficial games in a row, both to AlphaGo itself and to another, less well-known algorithm.

In March of this year, FineArt took first place in the 10th computer Go championship at the University of Electro-Communications in Japan (Computer Go UEC Cup). It won all 11 games against other programs, getting past such well-known algorithms as the French CrazyStone, Facebook's Darkforest, and the Japanese Deep Zen Go. Then FineArt played the 7-dan professional Ichiriki Ryo. The program played on equal terms, without a handicap, and won.

It is worth noting that AlphaGo stays away from such competitions between computer systems. Apparently DeepMind prefers lavish events with games against the most famous players and leaves evaluation against other programs to internal tests.

FineArt is developed by the AI laboratory of the Chinese telecommunications giant Tencent, known for its many web portals, QQ.com and the WeChat messenger, TenPay payment tools, and other products. Tencent began developing FineArt in March 2016, just before the landmark AlphaGo vs. Lee Sedol match. FineArt works the same way as AlphaGo: it uses the same policy and value neural networks, trained on large datasets and on self-play games, that DeepMind described in the scientific paper released alongside the first publications about AlphaGo. The first versions of Tencent's system began playing on the Chinese Fox server under various pseudonyms, until the name FineArt, or 绝艺, a phrase from an ancient Chinese poem, was chosen.

Ke Jie first played against FineArt on foxwq.com last November. At that time the human both won and lost. The updated February 2017 version of FineArt left him no chance: Ke lost 10 times in a row.

On May 25, the second game between Ke Jie and AlphaGo took place. This time the computer played black and moved first, which, according to DeepMind, gives a slight advantage to the human opponent because of the way the system works. Ke is known for his skill with the white stones. Still, he spent a considerable part of the game under great tension, twisting strands of his hair between his thumb and index finger. Later, at a press conference, he compared AlphaGo to a god of Go.

The human made wise decisions in the first half of the game. Hassabis wrote on his microblog that, according to AlphaGo's estimates, Ke played perfectly. At the press conference after the game, Ke said that he had seen a chance to win. But in the second half he began to lose ground. By the third hour, Ke had used about twice as much time as AlphaGo. By the fourth hour, AlphaGo had simplified the complex positions the human had created at the start of the game, which commentators saw as a bad sign for Ke. Fifteen minutes later, he resigned.

Two defeats for Ke Jie meant victory for AlphaGo in the three-game series; the third game could only show by how much the machine is stronger than the human. Interestingly, media coverage in the host country amounted to de facto censorship. Two days before the first game, representatives of state television vanished; during the games, no broadcast was available in China. The only video stream in Chinese was on YouTube, which is blocked in China. When local media did talk about the games against the AI, they avoided the word “Google.”

Third game

At the Future of Go Summit, the artificial intelligence played not only against Ke Jie. On the morning of May 26, AlphaGo helped two masters play against each other; in the afternoon, it played against a group of people. Apparently Google wants to hint, first, at how much machine learning will help people and, second, at how much smarter than humanity AI can be.

Gu Li during the pair Go game, Google

Recorded video broadcast in English

Pair game moves

Under the rules of pair Go, four players instead of two take turns placing stones on the board, and communication between teammates is prohibited. Here each team consisted of a human and a computer. First Gu Li (9-dan professional) played a move for black, then Lian Xiao (8-dan professional) played. After that their partners, identical versions of AlphaGo, each made a move. Then the cycle repeated. Black won this game.

The five players who fought against AlphaGo. From left to right: Shi Yue, Mi Yuting, Tang Weixing, Chen Yaoye, Zhou Ruiyang. Google

Team game moves

In team Go, five 9-dan professionals placed the black stones, and their opponent, a single copy of AlphaGo, played white. The human players were allowed to confer and discuss moves, and the team played with evident pleasure while keeping to a balanced style. AlphaGo won this game.

Recorded broadcast in English

Recorded broadcast with comments in Russian (Ilya Shikshin)

Third game moves

The last game against Ke Jie was held on May 27. AlphaGo again played with the black stones. And again some amazing combinations of stones appeared on the board. For example, AlphaGo made an unusual seventh move. Despite all his efforts, after three and a half hours Ke Jie had to admit defeat in the final game.

The future of AlphaGo

Neither a single human nor a group of five masters could beat the new version of the AI. Clearly, this is not the same program that DeepMind showed in March 2016. Alexander Dinerstein describes the changes in the system as follows:

“It has become even less predictable. The reason is that the previous version learned from human games, and the current one from games of AlphaGo against AlphaGo. It played itself millions of times, drawing conclusions and learning from its mistakes. And while chess programs have databases of human games that benefit the computer (Kramnik, for example, insisted that the computer not use them in his match), AlphaGo could not care less about everything we have ever invented in Go. It does not need our opening theory. From people it took only the rules of Go. Everything else is its own experience.”

In response to the same question, Ilya Shikshin notes that what has changed most is efficiency:

“While the version of AlphaGo that played Lee Sedol seemed beatable with error-free play, the current version of AlphaGo cannot be beaten. AlphaGo's style of play has not fundamentally changed: it takes the maximum from the position. Only now the program does it even more effectively.”

Is there anyone in the world, not necessarily someone specific, who could beat AlphaGo? According to Dinerstein, the advantage is on the side of the machine:

“AlphaGo is no longer what we saw in the match with Lee Sedol. Its creators say that it now plays 3 stones stronger. By chess standards, that may amount to knight odds. And while it was still possible to fight the previous version, now the question is closed. There is no one in the world who can defeat AlphaGo in an even game.”

Ilya Shikshin believes that there were chances, but that the AI is improving quickly:

“Perhaps the current version of AlphaGo, the one that played Ke Jie, could have been beaten by someone. But we must understand that this is not the program's ultimate level. Last year's version, which played Lee Sedol, was significantly weaker than the current one. And one can only guess how strong the programs will be a year from now.”

According to Dinerstein, Ke Jie himself did not make significant mistakes:

“Ke tried to find weaknesses in the program's play. He did not play the way he usually plays against people. He sharpened the game and fought. He did everything he could, but he had no chance in any of the 3 games. The average game lasts 250 moves. Here AlphaGo had an overwhelming advantage by move 50, and after that its task was simply to hold it. The program does that very well: it chooses moves that increase the probability of winning, whereas people often resemble the raja from the cartoon ‘The Golden Antelope’: they want to increase their lead, to win not by one point but by ten. This greed often leads to disaster.”
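The difference Dinerstein describes, choosing by probability of winning rather than by size of the lead, can be shown with a toy comparison. Everything below is hypothetical: the two candidate moves and their lists of final score margins are invented for illustration and do not come from AlphaGo or from any real game.

```python
from statistics import mean

# Hypothetical final score margins (in points) for two candidate moves,
# for example as sampled from simulated continuations. Positive means a win.
candidates = {
    "safe": [0.5, 1.5, 0.5, 2.5, 0.5, 1.5, 0.5, 0.5],
    "greedy": [10.5, 12.5, -3.5, 9.5, -5.5, 11.5, -1.5, 10.5],
}


def win_probability(margins: list[float]) -> float:
    """Share of outcomes in which the final margin is positive."""
    return sum(m > 0 for m in margins) / len(margins)


def by_win_probability(cands: dict[str, list[float]]) -> str:
    """Prefer the move that wins most often, however small the margin."""
    return max(cands, key=lambda move: win_probability(cands[move]))


def by_expected_margin(cands: dict[str, list[float]]) -> str:
    """Prefer the move with the largest average lead."""
    return max(cands, key=lambda move: mean(cands[move]))


print(by_win_probability(candidates))
print(by_expected_margin(candidates))
```

In this invented data the "safe" move always wins, but only by a point or so, while the "greedy" move wins big on average and loses three times out of eight. A player maximizing the lead picks "greedy"; a player maximizing the chance of victory picks "safe".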

At the same time, AlphaGo Master, the AI that Ke Jie faced, plays without error, says Dinerstein:

“In the match with Lee Sedol, experts found mistakes in the program's play. Here even the world's best masters say they cannot see any. I think that with AlphaGo the question is finally closed. Lee Sedol was the last person on earth to beat this program. While Ke's play could have been improved in some ways, the computer's play was, from our point of view, close to perfect.”

A similar opinion was expressed by Ilya Shikshin:

“I do not think that Ke Jie made serious mistakes. To assume that there were mistakes is the same as to assume that Ke Jie had chances. But there was no chance. AlphaGo plays a level stronger.”

Probably the strongest computer Go system is leaving: it will not be sold as a commercial product, and it will no longer try to prove its superiority. In the post “AlphaGo's next move,” DeepMind explained that the project team would be disbanded: the specialists will take up other problems that machine learning can solve, such as diagnosing diseases and finding treatments, improving energy efficiency, and inventing new materials. AlphaGo itself will no longer take part in matches.

Two official matches with significant media attention, then an abrupt end to further development. One could accuse Google of using the matches to boast about its AI. But DeepMind is visibly trying to help the Go community: the unit promises to publish a scientific paper explaining all the changes made to AlphaGo. With this data, other developers will be able to create or improve their own systems, DeepMind hints. Apparently Google is not interested in game AI as a commercial product. DeepMind is also going to release a position analysis tool with which players can see how AlphaGo thinks.

In addition to promises, the company left a gift: 50 games of AlphaGo against itself. As Ilya Shikshin notes, “50 textbooks can be written from these 50 games.” He says the level is so high that analyzing the games takes a long time. Alexander Dinerstein points out the problem with copying the AI's style: you can play the opening like AlphaGo, but what do you do next?

Future

Defeating Lee Sedol took a whole computing cluster; defeating Ke Jie took a single machine with one TPU module. The day is approaching when a mobile chip with tiny power consumption will be able to surpass the best humans at Go.

In chess, this is no surprise. These are not even isolated cases. For example, the current world chess champion Magnus Carlsen sometimes uses his personal YouTube channel to promote the mobile application that bears his name, Play Magnus. The application is based on one of the most powerful chess engines, Stockfish. It deliberately lowers its strength to emulate Magnus's chess skill at different ages.

It is not surprising that the use of electronic devices is tightly regulated at serious chess championships. Alexander Dinerstein says something similar could happen in Go:

“Yes, we will probably soon have a cheating problem too, but for now you can keep a phone in your pocket at a tournament without fear of being forfeited for it. And people play on the Internet, and online tournaments with prizes are held. Apparently the reason is that the strongest programs are not yet available for download.”

Ilya Shikshin believes that the introduction of restrictions is possible:

“So far I have not heard of any rules governing the use of gadgets at Go competitions. But they will probably appear soon.”

A single scientific publication about AlphaGo in the journal Nature in 2016 was enough for programs at the 2017 computer competitions to reach play on equal terms with professionals. Developers of existing products are copying DeepMind's approach, and the level of Go programs is rising. Alexander Dinerstein:

“Over the past year, the programs have improved dramatically. Just a couple of years ago we easily beat them while giving 3-5 handicap stones. Now it is unclear who should be giving a handicap to whom.”

If DeepMind really shares how AlphaGo Lee was improved into AlphaGo Master, computer systems are likely to surpass humans. What if games in the future have to be played with an allowance for the weaker, protein-based side? Would such a game be interesting to watch? Alexander Dinerstein:

“Yes, soon we will not be able to play programs on equal terms, but handicap games are just as interesting. I think we will see many more matches in which the handicap changes from game to game. Top masters have been asked what handicap they would take against God if their lives were at stake. The answers varied, but most agree that with 4 stones a human would never lose. Let's see if that is so. By the way, Lee Sedol claimed in a recent interview that AlphaGo will easily overcome a 2-stone handicap. I really doubt it.”

Ilya Shikshin:

“At first it will definitely be interesting. Go Seigen, one of the strongest players of the 20th century, said that he would need 5 handicap stones to defeat the God of Go (an opponent playing perfectly). I think many Go players would be interested to check how far they are from the God of Go.”

In any case, the players agree that the popularity of go has grown. Alexander Dinerstein:

“Thanks to these matches, many new people have learned about Go. Many chess players and people involved in programming are coming to the game. In the comments on media articles, people have stopped asking what kind of game this is. They do not just comment on the news and sympathize with the Go masters. They even propose their own methods of fighting computers. For example, someone suggested switching to the game of Chapaev.”

Ilya Shikshin:

« AlphaGo . . , , , . — , , . — , .»

« , — . — . — , , . — .»

AlphaGo is not some kind of Skynet prototype, nor even an impressive form of strong AI. It is a program: a human operator places the stones on the board for it. AlphaGo is a narrowly focused system that can only play Go. It cannot rank search results, cannot play old Atari games, does not recognize faces, does not detect breast cancer from mammograms, and cannot hold a conversation.

However, AlphaGo is built on the same general principles that can be used in a wide range of tasks: reinforcement learning, the Monte Carlo method, value functions. Conventional components are assembled in a new and not quite standard way. AlphaGo will not go to work in some other part of the Alphabet holding. Rather, it is a display of the quantity and quality of human talent available for solving non-game problems.

With the arrival of motor transport, neither foot races nor horse racing sank into oblivion. But auto racing was born, a sport with its own fans. It is possible that in the future teams representing tech giants will enter bots in competitions to solve some task, not necessarily just Go, under strict restrictions. Much as engine specifications are strictly limited in Formula 1, restrictions could be introduced here: for example, no more than 10 billion transistors per computer, no more than 500 watts of power consumption, and so on.

However, this is just a fantasy. In this particular case, AlphaGo has never been entered in competitions against other programs, only against people or against itself (the pair game in Wuzhen). AlphaGo's closest competitor is FineArt from the Chinese company Tencent, developed on the same principles. Is DeepMind afraid of a Chinese copy? As Alexander Dinerstein says, FineArt's style is still different from AlphaGo's:

“If anyone can beat AlphaGo, it is its computer counterparts. We have not seen AlphaGo's games against other programs. Perhaps FineArt can already fight it on equal terms. What surprises me is the difference in playing styles. It would seem to be the same technology, neural networks and Monte Carlo, but the play of Deep Zen Go or FineArt resembles that of a top pro, while AlphaGo is completely different. People have never played like that. As Shi Yue, one of the strongest Chinese players, said: ‘This is a game from the future.’ It is impossible to beat. Moreover, even understanding the moves is not easy. There are moves that people, in principle, do not even consider possible. If we assume that this is what the best strategy in Go looks like, then we can safely throw away all the books published in the 4,000-year history of Go.”

Ilya Shikshin bluntly claims that FineArt is inferior to AlphaGo:

« FineArt , , AlphaGo, Nature 2016 . , DeepMind , . AlphaGo FineArt . FineArt , , 7 8 . AlphaGo .»

The situation with FineArt and AlphaGo's games in China largely illustrates the changing landscape of AI development.

In recent days, a noticeable wave of publications (1, 2, 3) has swept through the American press saying that China is striving to overtake the US in AI. It was probably started by an article in the New York Times. The author acknowledged that China is a step behind but was struck by how fast the gap is closing. China is currently spending billions in government money to finance machine learning startups and to attract scientists from other countries. The article cites the case of a German robotics expert who went not to a prestigious university in Europe or the US but to China. He was lured by a grant six times larger, which made it possible to open a laboratory and hire an assistant and other staff.

Private Chinese companies are not lagging behind either. For example, the local search giant Baidu, together with the state, opened a laboratory headed by researchers who previously worked on Chinese combat robots. Baidu also works on self-driving vehicles and on speech and image recognition systems. Some of this work surpasses its Western counterparts. In October 2016, Microsoft announced that its system recognizes speech as well as humans do. Andrew Ng, who was still working at Baidu at the time, noted that China had passed this milestone back in 2015.

Just as Tencent's FineArt may be inferior to Google's AlphaGo, Chinese AI efforts may trail American ones. But the gap is narrowing. The exhibition matches of DeepMind's program against Asian Go masters changed the tone of government discussions on funding. Their substance has become broader and clearer, Chinese professors told the NYT.

The potential of artificial intelligence systems has been noticed, and even attempts to catch up cause alarm. Perhaps this is only a stormy summer before the next AI winter. Perhaps it is a new stage in the race for global technological superiority.

Source: https://habr.com/ru/post/373525/
