Google's AI has learned to imitate and combine the sounds of musical instruments

Google's Magenta project, a small group of artificial intelligence researchers inside the tech giant, has introduced musicians to a new music-making tool: NSynth.

Magenta is part of Google Brain, the company's central artificial intelligence lab, where researchers probe the limits of neural networks and other forms of machine learning. Neural networks, complex mathematical systems that learn tasks by analyzing large amounts of data, have come to the fore in recent years in tasks such as recognizing objects and faces in images and translating from one language to another.


Now the Magenta team is turning this idea on its head, using neural networks to teach machines to make new kinds of music and other art. NSynth starts from a large database of sounds: Jesse Engel, one of Magenta's researchers, and his team collected a wide range of notes from about a thousand different instruments, from the violin to the balafon, and then fed them to their neural network.

Unlike a traditional synthesizer, which generates sound from oscillators and wavetables, NSynth uses a deep neural network to generate sound one sample at a time. The tool gives musicians intuitive control over timbre and dynamics, as well as the ability to explore new sounds that would be difficult or impossible to produce with a conventional synthesizer.
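For contrast with NSynth, here is a minimal sketch of the conventional approach: a wavetable oscillator that stores one cycle of a waveform and reads through it at a speed set by the desired pitch. The function name and parameter values are illustrative only and are not taken from NSynth or any particular synthesizer.

```python
import numpy as np

def wavetable_oscillator(table, freq_hz, sample_rate, n_samples):
    """Classic wavetable synthesis: loop over one stored cycle at a pitch-dependent rate."""
    table = np.asarray(table, dtype=float)
    size = len(table)
    # How many table positions to advance per output sample.
    step = freq_hz * size / sample_rate
    phase = (np.arange(n_samples) * step) % size
    # Linear interpolation between neighbouring table entries.
    i0 = np.floor(phase).astype(int)
    i1 = (i0 + 1) % size
    frac = phase - i0
    return (1.0 - frac) * table[i0] + frac * table[i1]

# One cycle of a sine wave stored in a 64-entry table (illustrative size).
sine_table = np.sin(2 * np.pi * np.arange(64) / 64)
tone = wavetable_oscillator(sine_table, freq_hz=440.0, sample_rate=16000, n_samples=16000)
```

Every sound such an oscillator can make is fixed by the hand-designed table and the read-out rule; NSynth instead learns to produce each sample with a neural network.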

By analyzing the notes, the neural network learned the sonic characteristics of each instrument and created a mathematical “vector” for each one. Using these vectors, the machine can mimic the sound of an instrument such as a Hammond organ or a clavichord, and it can also combine the sounds of the two.
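Combining two instruments this way amounts to blending their vectors. The sketch below shows plain linear interpolation between two such vectors; the 4-dimensional values are made up for illustration, and in NSynth the learned codes are sequences of vectors over time, so the same arithmetic would be applied frame by frame.

```python
import numpy as np

def mix_embeddings(z_a, z_b, alpha):
    """Linear interpolation between two timbre vectors.

    alpha = 0.0 returns z_a, alpha = 1.0 returns z_b; values in between
    describe a point in the space between the two instruments.
    """
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must be in [0, 1]")
    z_a = np.asarray(z_a, dtype=float)
    z_b = np.asarray(z_b, dtype=float)
    return (1.0 - alpha) * z_a + alpha * z_b

# Hypothetical vectors standing in for learned instrument embeddings.
z_organ = np.array([0.9, -0.2, 0.4, 0.0])
z_clavichord = np.array([-0.3, 0.7, 0.1, 0.5])
z_mix = mix_embeddings(z_organ, z_clavichord, 0.25)  # 75% organ, 25% clavichord
```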

This is not the same as playing two instruments at once: the machine and its software do not layer clavichord sounds on top of the Hammond organ. Instead, they produce entirely new sounds from the mathematical characteristics of notes from both instruments. Researchers can repeat this with any other pair of instruments, using artificial intelligence to create countless new sounds from the ones we already have.
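A toy comparison makes the difference between layering and mixing concrete. `toy_decode` below is a made-up nonlinear "decoder" (not NSynth's network), and the two-number codes are hypothetical: one sets how hard the tone is driven, the other how much second harmonic it has.

```python
import numpy as np

SAMPLE_RATE = 16000
PITCH_HZ = 220.0                       # fixed pitch, so only the timbre differs
t = np.arange(1600) / SAMPLE_RATE      # 0.1 s of sample times

def toy_decode(z):
    """Hypothetical nonlinear decoder: z[0] is drive, z[1] is second-harmonic weight."""
    drive, h2 = z
    raw = np.sin(2 * np.pi * PITCH_HZ * t) + h2 * np.sin(4 * np.pi * PITCH_HZ * t)
    return np.tanh(drive * raw)

z_a = np.array([0.5, 0.0])   # soft, pure "instrument"
z_b = np.array([8.0, 0.6])   # bright, heavily driven "instrument"

# Layering: render each instrument separately, then average the waveforms.
layered = 0.5 * toy_decode(z_a) + 0.5 * toy_decode(z_b)

# Mixing codes: average the vectors first, then render one new sound.
blended = toy_decode(0.5 * z_a + 0.5 * z_b)
```

Because the decoder is nonlinear, `blended` is a different waveform from `layered`: it is a third timbre rather than two timbres heard together.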

NSynth lets researchers explicitly factor music generation into notes and other musical qualities. These qualities could be factored further, but for simplicity the team did not, and arrived at:

$$P(\text{audio}) = P(\text{audio} \mid \text{note})\, P(\text{note})$$

The goal is to model $P(\text{audio} \mid \text{note})$, i.e. timbre, on the assumption that $P(\text{note})$ comes from a higher-level “language model” of music, such as a recurrent neural network (RNN) over note sequences. The developers acknowledge that this factorization is not perfect, but it reflects how instruments work and is surprisingly effective. Indeed, much of today's music production relies on exactly this split: MIDI for the sequence of notes and software synthesizers for the timbre.
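The MIDI-plus-synthesizer split can be sketched in a few lines: a note list plays the role of the $P(\text{note})$ side, and a timbre function plays the role of $P(\text{audio} \mid \text{note})$. `simple_timbre` is a hypothetical hand-written stand-in for a learned timbre model, not anything from NSynth; the MIDI-to-frequency formula is the standard equal-temperament one.

```python
import numpy as np

SAMPLE_RATE = 16000

def midi_to_hz(pitch):
    """Equal-tempered frequency of a MIDI pitch number (69 = A4 = 440 Hz)."""
    return 440.0 * 2.0 ** ((pitch - 69) / 12.0)

def simple_timbre(pitch, velocity, n_samples):
    """Hypothetical timbre model: renders one note as three decaying harmonics."""
    t = np.arange(n_samples) / SAMPLE_RATE
    f0 = midi_to_hz(pitch)
    wave = sum(np.sin(2 * np.pi * k * f0 * t) / k for k in range(1, 4))
    return (velocity / 127.0) * np.exp(-3.0 * t) * wave

def render(notes, timbre):
    """The note list says WHAT to play; the timbre function says HOW it sounds."""
    return np.concatenate([timbre(p, v, int(d * SAMPLE_RATE)) for p, v, d in notes])

# A MIDI-like score: (pitch, velocity, duration in seconds).
score = [(60, 100, 0.25), (64, 100, 0.25), (67, 127, 0.5)]
audio = render(score, simple_timbre)
```

Swapping `simple_timbre` for a different function changes the instrument without touching the score, which is the separation the factorization relies on.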

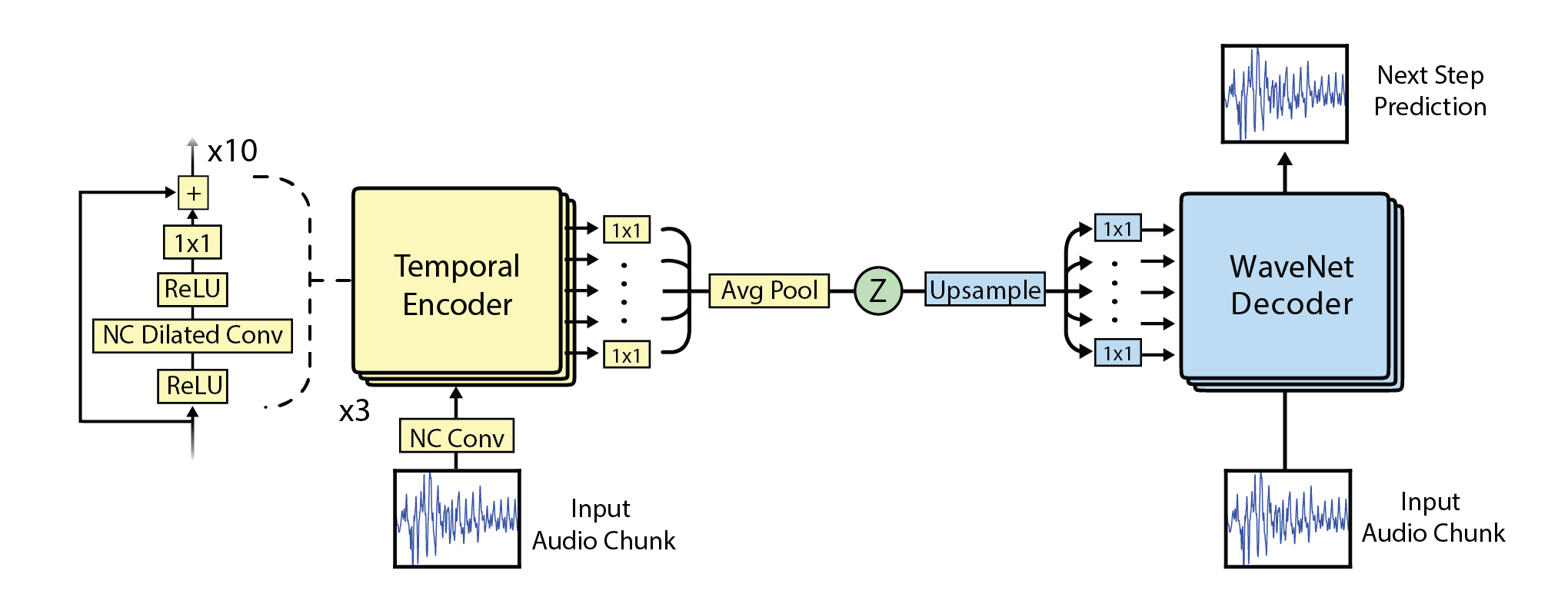

To build the tool, the Google team drew on WaveNet, developed by DeepMind, the company's autonomous AI unit. WaveNet is an autoregressive network of dilated convolutions that models sound one sample at a time, much like a nonlinear infinite impulse response (IIR) filter.
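The two ideas in that description, dilated causal convolutions and sample-by-sample generation, can be illustrated with a toy. This is a sketch under simplifying assumptions: it uses fixed weights and a plain `tanh`, whereas WaveNet learns its weights, uses gated activations, and predicts a probability distribution over the next sample value.

```python
import numpy as np

def causal_dilated_conv(x, w, dilation):
    """y[t] = sum_k w[k] * x[t - k * dilation], with samples before t = 0 treated as zero."""
    x = np.asarray(x, dtype=float)
    pad = (len(w) - 1) * dilation
    xp = np.concatenate([np.zeros(pad), x])
    y = np.zeros(len(x))
    for k, wk in enumerate(w):
        start = pad - k * dilation
        y += wk * xp[start:start + len(x)]
    return y

def receptive_field(kernel_size, dilations):
    """How many samples (including the current one) a stack of such layers can see."""
    return 1 + sum((kernel_size - 1) * d for d in dilations)

def generate(seed, w, dilation, n_new):
    """Toy autoregressive loop: each new sample is a nonlinear function of earlier outputs."""
    out = list(seed)
    for _ in range(n_new):
        # Look back 1, 1 + dilation, 1 + 2 * dilation, ... samples, skipping taps before the start.
        acc = sum(wk * out[-1 - k * dilation]
                  for k, wk in enumerate(w) if 1 + k * dilation <= len(out))
        out.append(float(np.tanh(acc)))
    return out

# Doubling dilations (1, 2, 4, ..., 512) with kernel size 2 cover 1024 samples.
rf = receptive_field(2, [2 ** i for i in range(10)])
```

Feeding each output back in as input is what makes the process resemble an IIR filter, and the receptive-field calculation shows why context grows quickly with doubling dilations yet stays finite.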

Because this filter's context is currently limited to a few thousand samples (about half a second), capturing longer-term structure requires an external conditioning signal. The Magenta team removed the need for such external signals by using a WaveNet-based autoencoder that learns its own temporal embeddings of the sound.
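The shape of that idea can be sketched as follows: an encoder summarizes each block of audio into one vector, and those vectors are stretched back to the sample rate so the decoder has a conditioning input at every step. `toy_encode` is a hypothetical random projection rather than a learned network, and the `HOP` and `EMB_DIM` values are assumptions chosen for illustration.

```python
import numpy as np

HOP = 512      # assumed: audio samples summarized by one embedding frame
EMB_DIM = 16   # assumed: size of each frame's embedding vector

def toy_encode(audio, proj):
    """Hypothetical stand-in for the learned encoder: one EMB_DIM vector per HOP samples."""
    n_frames = len(audio) // HOP
    frames = np.asarray(audio[: n_frames * HOP], dtype=float).reshape(n_frames, HOP)
    return np.tanh(frames @ proj)  # shape (n_frames, EMB_DIM)

def upsample(emb, hop=HOP):
    """Repeat each frame's vector so every audio sample gets a conditioning input."""
    return np.repeat(emb, hop, axis=0)  # shape (n_frames * hop, EMB_DIM)

rng = np.random.default_rng(0)
audio = rng.standard_normal(4 * HOP)
proj = rng.standard_normal((HOP, EMB_DIM)) / np.sqrt(HOP)
emb = toy_encode(audio, proj)
cond = upsample(emb)
```

The long-range information then comes from the model's own embedding rather than from a signal supplied from outside.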

Critic Marc Weidenbaum notes that the approach is not far from what orchestral conductors have done for centuries: mixing instruments is nothing new. But he believes Google's technology could give this centuries-old practice a second life and push it in new directions. “In art, it could produce some interesting things, and since it's Google, people will follow its lead,” says Weidenbaum.

Jesse Engel demonstrated the system by “playing” an instrument that sits somewhere between a clavichord and a Hammond organ. Then he dragged a slider on his laptop screen: where at first only 15% clavichord could be heard, it was now closer to 75%. He then dragged the slider back and forth as fast as he could, morphing between the sounds of two completely different instruments.
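Moving the slider during a note can be pictured as changing the mixing weight frame by frame. The sketch assumes each instrument is represented by a sequence of per-frame vectors and that the slider sets the clavichord share linearly; the random embeddings are placeholders, not real NSynth codes.

```python
import numpy as np

def sweep(emb_a, emb_b, alphas):
    """Blend two frame-by-frame embeddings with a per-frame mixing weight.

    emb_a, emb_b: arrays of shape (n_frames, dim); alphas: shape (n_frames,),
    where 0.0 means all instrument A and 1.0 means all instrument B.
    """
    emb_a = np.asarray(emb_a, dtype=float)
    emb_b = np.asarray(emb_b, dtype=float)
    a = np.clip(np.asarray(alphas, dtype=float), 0.0, 1.0)[:, None]
    return (1.0 - a) * emb_a + a * emb_b

n_frames, dim = 8, 16
rng = np.random.default_rng(1)
clavichord = rng.standard_normal((n_frames, dim))  # placeholder embeddings
organ = rng.standard_normal((n_frames, dim))

# Slider moved from 15% to 75% clavichord over the course of the note.
alphas = np.linspace(0.15, 0.75, n_frames)
mixed = sweep(organ, clavichord, alphas)
```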

In addition to the NSynth slider that Engel demonstrated, the team also built a two-dimensional interface that lets you explore the sound space between four different instruments at once. The researchers intend to keep developing this idea and to push the boundaries of artistic creativity. For example, a second neural network could discover new ways of imitating and combining sounds from all of these instruments, so that one AI works in tandem with another.
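One plausible way a 2-D pad could address four instruments is bilinear weighting of four embeddings placed at the corners of a square. This is an assumption for illustration; the article does not say how the actual interface maps positions to sounds, and the one-hot corner vectors below are placeholders.

```python
import numpy as np

def bilinear_mix(corners, x, y):
    """Blend four instrument embeddings placed at the corners of a unit square.

    corners: dict with keys "bottom_left", "bottom_right", "top_left", "top_right".
    (x, y) in [0, 1] x [0, 1]; (0, 0) is bottom-left and (1, 1) is top-right.
    """
    x = min(max(x, 0.0), 1.0)
    y = min(max(y, 0.0), 1.0)
    weights = {
        "bottom_left": (1 - x) * (1 - y),
        "bottom_right": x * (1 - y),
        "top_left": (1 - x) * y,
        "top_right": x * y,
    }
    # The four weights always sum to 1, so the result stays inside the blended space.
    return sum(w * np.asarray(corners[name], dtype=float) for name, w in weights.items())

# Placeholder embeddings: one-hot vectors make each instrument's share easy to read.
corners = {
    "bottom_left": [1, 0, 0, 0],
    "bottom_right": [0, 1, 0, 0],
    "top_left": [0, 0, 1, 0],
    "top_right": [0, 0, 0, 1],
}
center = bilinear_mix(corners, 0.5, 0.5)  # equal parts of all four instruments
```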

Magenta has also opened the work up to AI researchers and other computer scientists. A research paper describing the NSynth algorithms has been published, and anyone can download the team's sound dataset and use it in their own work. Douglas Eck, who oversees the Magenta team, hopes that researchers will be able to build a much wider set of tools for all kinds of artists, not just musicians.

arXiv:1704.01279 [cs.LG]

Source: https://habr.com/ru/post/373463/
