My nephew versus machine learning

Yali, my four-year-old nephew, is really into Pokemon. He has a lot of the toys and a few cards from the trading card game (TCG). Yesterday he discovered my large collection of TCG cards, and now he has more cards than he can handle.

The problem is that Yali is too young to learn the rules, so he invented his own version of the game. The goal is to sort the cards into categories: Pokemon, energy, and trainer.

He did not ask how I could tell a card's type. He just picked up a few cards and asked what type each one was. After a few answers, he managed to sort several cards by type (with a few mistakes along the way). At that point I realized that my nephew is, in effect, a machine learning algorithm, and my job as an uncle is to label the data for him. Since I am a geek uncle and a machine learning enthusiast, I started writing a program that could compete with Yali.


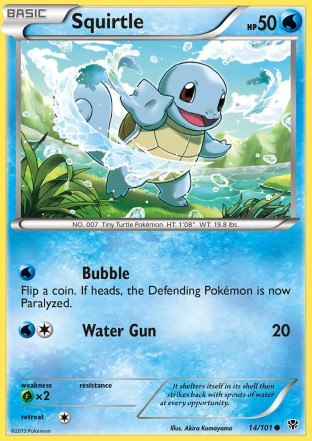

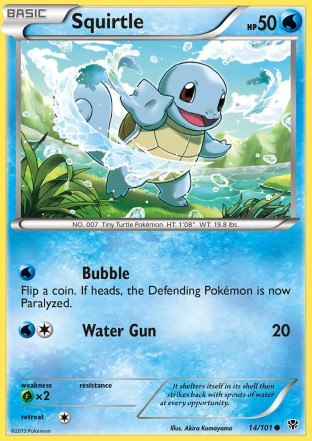

This is a typical Pokemon card:

For an adult who can read, it is easy to tell what type this card is: it is written on it. But Yali is 4 years old and cannot read. A simple OCR module would quickly solve my problem, but I did not want to make unnecessary assumptions. I simply took the card image and fed it to an MLP (multilayer perceptron) neural network. Thanks to the pkmncards website, I could download images already sorted into categories, so getting the data was not a problem.

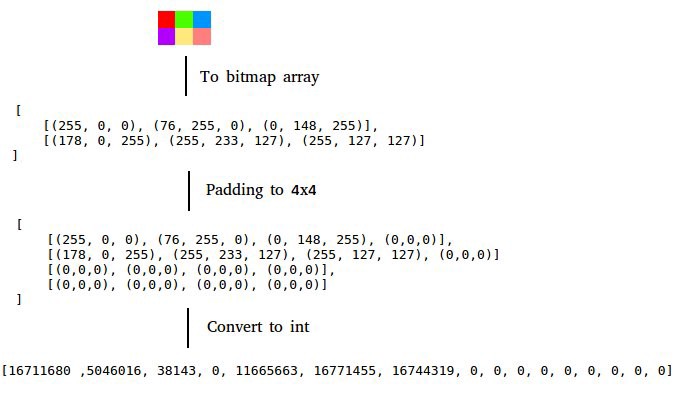

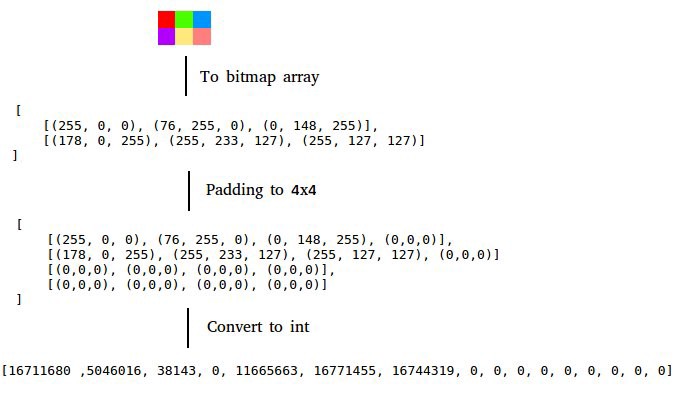

A machine learning algorithm needs features, and my features were the image pixels. I packed the three RGB channels of each pixel into a single integer. Since the images came in different sizes, they had to be normalized: I found the maximum height and width across all images and padded the smaller images with zeros.
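
To make the feature extraction concrete, here is a minimal sketch in plain Python. The bit layout of the packed integer (`0xRRGGBB`) and the choice to pad on the right and bottom are assumptions; the text only says that the three colors became one integer and that zeros were added. The function names and the tiny sample images are hypothetical.

```python
def pack_rgb(r, g, b):
    """Pack three 8-bit channels into one integer laid out as 0xRRGGBB (assumed layout)."""
    return (r << 16) | (g << 8) | b


def image_to_features(pixels, max_height, max_width):
    """Flatten an image (a list of rows of (r, g, b) tuples) into a
    fixed-length feature vector, zero-padding on the right and bottom."""
    features = []
    for y in range(max_height):
        row = pixels[y] if y < len(pixels) else []
        for x in range(max_width):
            features.append(pack_rgb(*row[x]) if x < len(row) else 0)
    return features


# Two tiny "images" of different sizes (hypothetical data).
small = [[(255, 0, 0)]]                       # 1x1, pure red
large = [[(0, 0, 0), (0, 255, 0)],
         [(0, 0, 255), (255, 255, 255)]]      # 2x2

images = [small, large]
max_h = max(len(img) for img in images)
max_w = max(len(img[0]) for img in images)
vectors = [image_to_features(img, max_h, max_w) for img in images]
```

After this step every image yields a vector of the same length (`max_h * max_w`), which is what a fixed-size input layer requires.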

A quick sanity check before the real run: I randomly picked two cards of each type and ran the prediction on them. With 100 cards from each category, the fit was very fast and the predictions were terrible. Then I took 500 cards from each category (except energy, of which there were only 130) and started the fit.
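
Drawing a capped number of cards per category could look roughly like the sketch below. The function name, the file names, and the pool sizes for the Pokemon and trainer categories are made up for illustration; only the figures 500 and 130 come from the text.

```python
import random


def sample_per_category(cards_by_category, per_category, seed=0):
    """Draw up to `per_category` cards from each category without replacement.
    Categories with fewer cards contribute everything they have."""
    rng = random.Random(seed)
    sample = {}
    for category, cards in cards_by_category.items():
        k = min(per_category, len(cards))
        sample[category] = rng.sample(cards, k)
    return sample


# Hypothetical pool: only the 130 energy cards match the text.
pool = {
    "pokemon": [f"pokemon_{i}.png" for i in range(2000)],
    "trainer": [f"trainer_{i}.png" for i in range(1500)],
    "energy": [f"energy_{i}.png" for i in range(130)],
}
training = sample_per_category(pool, per_category=500)
sizes = {category: len(cards) for category, cards in training.items()}
```

The fixed seed only makes the sketch reproducible; the resulting set is unbalanced, with far fewer energy cards than cards of the other two types.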

I ran out of memory. I could have run the fit in the cloud, but I wanted to find a way to use less memory. The largest image was 800x635, which was too much; resizing the images solved the problem.
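
A back-of-the-envelope estimate shows why the full-size images were a problem. The sketch assumes a dense feature matrix with 8 bytes per value (64-bit numbers), which the text does not state, and the resized dimensions of 80x64 are a hypothetical target, not the size actually used.

```python
def feature_matrix_bytes(n_images, height, width, bytes_per_value=8):
    """Size in bytes of a dense matrix with one row per image and one column per pixel."""
    return n_images * height * width * bytes_per_value


n_images = 500 + 500 + 130          # cards per category, from the text
full = feature_matrix_bytes(n_images, 800, 635)
# Hypothetical target: shrink each side by roughly a factor of 10.
small = feature_matrix_bytes(n_images, 80, 64)

print(f"full size: {full / 1e9:.2f} GB")    # full size: 4.59 GB
print(f"resized:   {small / 1e6:.2f} MB")   # resized:   46.28 MB
```

This counts only the input data; the network's weights and any intermediate copies made during fitting come on top of it.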

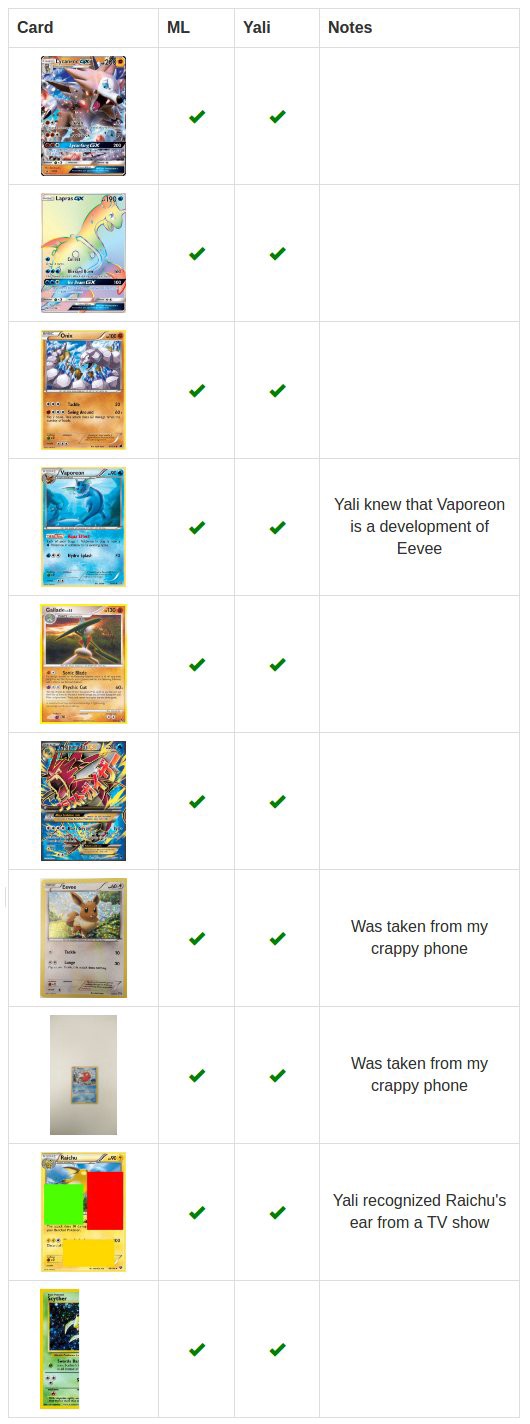

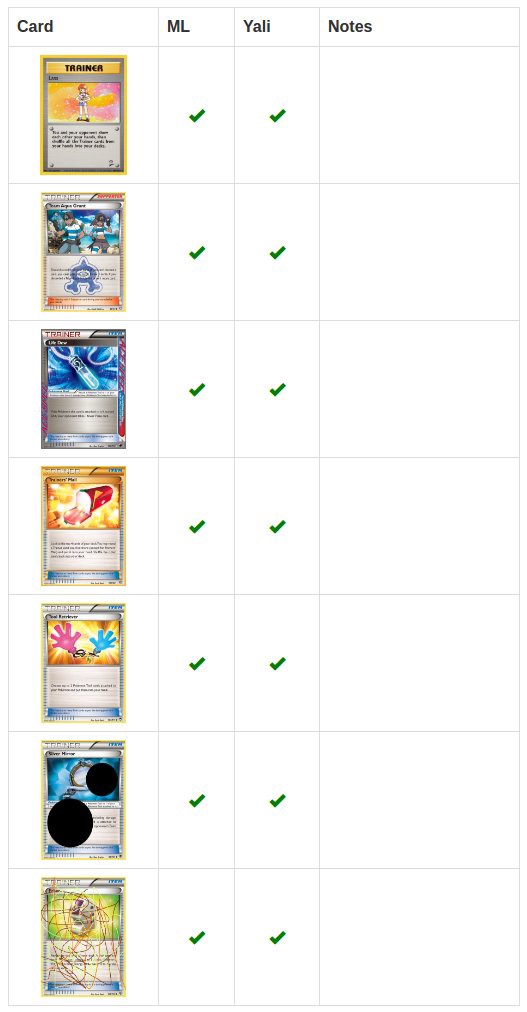

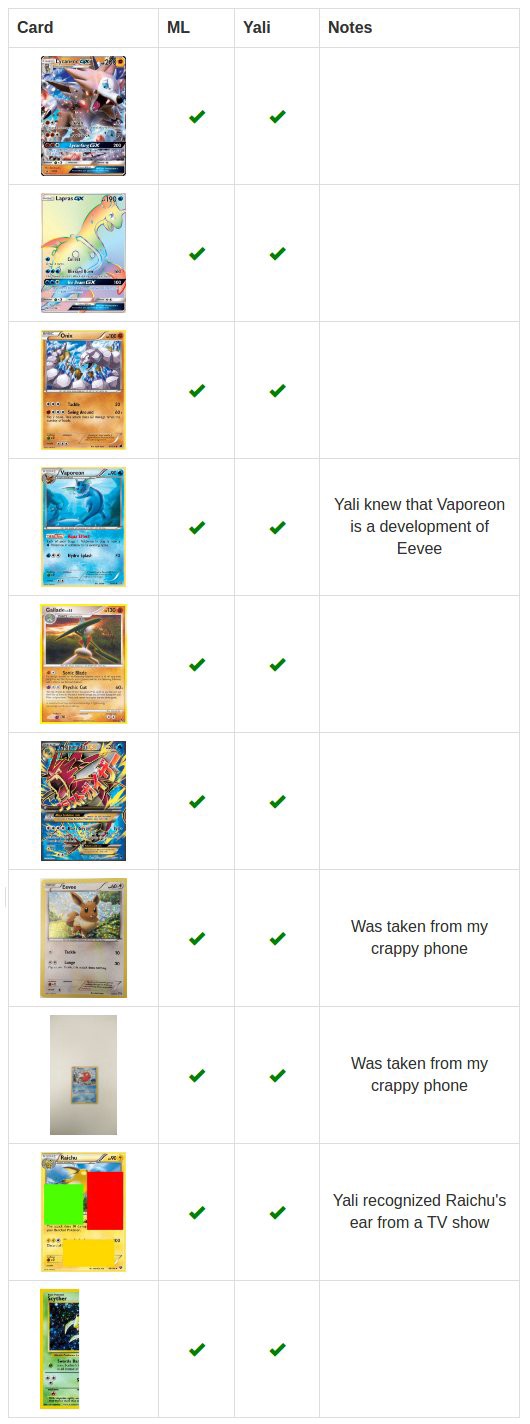

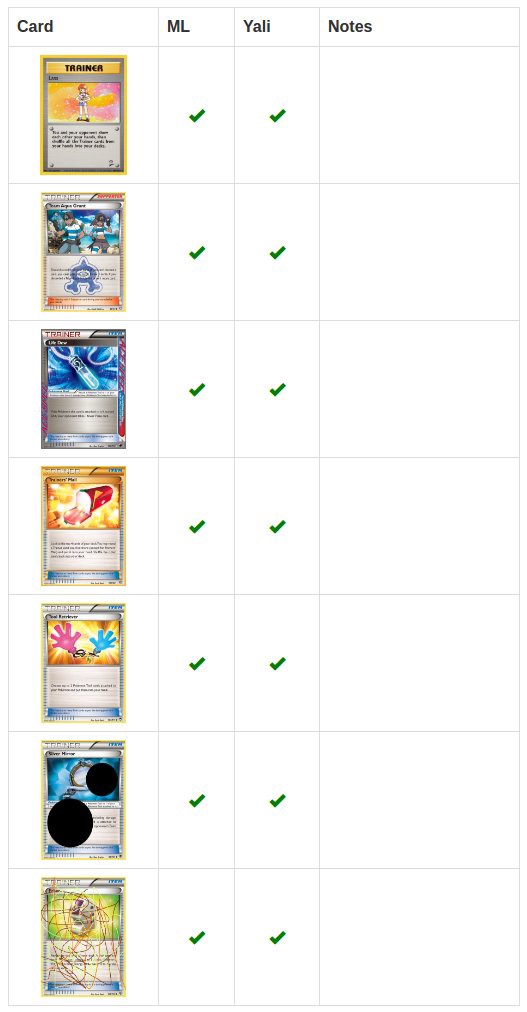

For the real test, in addition to ordinary cards, I added cards I had altered a little, cards cut in half, cards with outlines drawn on top, cards photographed with a phone (with a bad camera), and so on. None of these cards were used for training.

I tried 1533 models, varying the image size, the number of hidden layers (up to 3), the layer size (up to 100), the image colors, and the way the image was read (the whole image, the upper part only, every second pixel, and so on). After many hours of fitting, the best result was 2 errors out of 25 cards. Only 9 of the 1533 models reached that score, and each of them made its mistakes on different cards.
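
A search over model variants like this can be enumerated with `itertools.product`. Every concrete value in the sketch below is an illustrative assumption; the text names the dimensions that were varied but not the exact grid, and this grid does not reproduce the 1533 models.

```python
from itertools import product

# All values below are illustrative assumptions, not the actual grid.
image_sizes = [(40, 32), (80, 64), (160, 127)]
layer_sizes = [10, 50, 100]              # units per hidden layer, up to 100
color_modes = ["rgb_packed", "grayscale"]
read_modes = ["full", "top_part", "every_second_pixel"]


def hidden_layer_options(sizes, max_layers=3):
    """All hidden-layer shapes with 1..max_layers layers."""
    options = []
    for depth in range(1, max_layers + 1):
        options.extend(product(sizes, repeat=depth))
    return options


layers = hidden_layer_options(layer_sizes)
configs = [
    {"image_size": size, "hidden_layers": hidden, "color": color, "read": read}
    for size, hidden, color, read in product(
        image_sizes, layers, color_modes, read_modes
    )
]
print(len(configs))  # 702
```

Each entry in `configs` describes one model to fit and score against the held-out cards; the count grows multiplicatively with every added option, which is how a modest grid turns into many hours of fitting.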

Combining the models brought the result down to 1 error, provided I raised the threshold above 44%. For the test I used a threshold of 50%. I waited a month while Yali played with the cards, and then ran the test.
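
The text does not say how the models were combined. One plausible reading, sketched here as an assumption, is a majority vote in which the winning label is accepted only if its share of the votes exceeds the threshold. The function name and the sample votes are hypothetical.

```python
from collections import Counter


def ensemble_predict(votes, threshold=0.5):
    """Return the most common label if its share of the votes exceeds
    `threshold`; otherwise return None (the ensemble abstains)."""
    if not votes:
        return None
    label, count = Counter(votes).most_common(1)[0]
    return label if count / len(votes) > threshold else None


# Nine hypothetical model votes for a single card.
confident = ensemble_predict(["pokemon"] * 6 + ["trainer"] * 2 + ["energy"])
split = ensemble_predict(["pokemon"] * 4 + ["trainer"] * 4 + ["energy"])
```

Here `confident` is `"pokemon"` (6 of 9 votes) and `split` is `None`, because 4 of 9 votes is only about 44%. Under this reading, a threshold just above 44% would mean requiring at least 5 of 9 models to agree, though that interpretation of the figure is a guess.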

The errors occurred on energy cards. There were only 130 of them on pkmncards, compared with thousands of cards of the other types, so the network had fewer examples to learn from.

I asked Yali how he recognized the cards, and he said he had seen some of the Pokemon in TV shows or books. That is how he recognized Raichu's ears, or knew that Vaporeon is a water Eevee. My program had no such data, only the cards.

Having defeated Yali at the Pokemon game and received its award, our machine intelligence sets off for new adventures.

Source: https://habr.com/ru/post/373345/
